Course:CPSC522/Variational Recurrent Neural Networks

Variational Inference in Recurrent Neural Networks

The intersection of variational inference and recurrent neural networks aims to capture variability within sequential data.

Principal Author: Jeffrey Niu

Collaborators:

Main papers:

- Bayer, J., & Osendorfer, C. (2014). Learning stochastic recurrent networks. arXiv preprint arXiv:1411.7610.

- Chung, J., Kastner, K., Dinh, L., Goel, K., Courville, A. C., & Bengio, Y. (2015). A recurrent latent variable model for sequential data. Advances in neural information processing systems, 28.

Abstract

Building on breakthroughs in variational inference and recurrent neural networks, these two papers present different methods for merging the two ideas to leverage the advantages of both. Both methods add a latent variable at each timestep, which changes how generation and inference are performed and introduces additional variability into the RNN architecture. This article discusses the modifications made to the RNN architecture and how generation and inference are performed. Finally, it covers the evaluation used to compare the two methods.

Builds on

Variational recurrent neural networks build directly on work in Recurrent Neural Networks and Variational Autoencoders. The variational recurrent network itself is a form of Graphical Model. The motivation behind variational recurrent neural networks stems from previous work on learning generative models of sequential data, such as Dynamic Bayesian Networks, Hidden Markov Models, and Kalman Filters.

Related Pages

Recurrent neural networks are often tied to problems in Natural Language Processing. One example problem is processing speech data, which is sequential and contains high variability such as the speaker's vocal qualities.

Content

Background

Recurrent Neural Networks

Recurrent neural networks (RNNs) are a type of neural network designed to handle variable-length inputs and outputs. The RNN architecture contains connections that form loops, allowing the hidden state computed at one timestep to be passed to the next timestep as input. Specifically, given input sequence $x_{1:T} = (x_1, \dots, x_T)$, output sequence $y_{1:T}$, and hidden states $h_{1:T}$, the RNN recursively evaluates for each timestep $t$:

$$h_t = f_h(W_{in} x_t + W_{rec} h_{t-1} + b_h)$$

$$y_t = f_y(W_{out} h_t + b_y)$$

where $\theta = \{W_{in}, W_{rec}, W_{out}, b_h, b_y\}$ are parameters of the RNN and $f_h$, $f_y$ are non-linear activation functions.

RNNs can model the joint sequence probability as:

$$p(x_{1:T}) = \prod_{t=0}^{T-1} p(x_{t+1} \mid x_{1:t})$$

where $p(x_{t+1} \mid x_{1:t})$ is the probability distribution derived from the output $y_t$ of the RNN at timestep $t$.
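The recursion described above can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the names `matvec` and `rnn_forward`, the choice of `tanh` for the hidden activation, the identity output activation, and the all-zero initial hidden state are assumptions made for the example.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_forward(xs, W_in, W_rec, W_out, b_h, b_y):
    """Run a vanilla RNN over the sequence xs and return (hidden states, outputs).

    Assumed for illustration: tanh hidden activation, identity output
    activation, and an all-zero initial hidden state.
    """
    h = [0.0] * len(b_h)
    hs, ys = [], []
    for x in xs:
        # h_t = tanh(W_in x_t + W_rec h_{t-1} + b_h)
        pre = [a + b + c for a, b, c in zip(matvec(W_in, x), matvec(W_rec, h), b_h)]
        h = [math.tanh(p) for p in pre]
        # y_t = W_out h_t + b_y
        y = [a + b for a, b in zip(matvec(W_out, h), b_y)]
        hs.append(h)
        ys.append(y)
    return hs, ys

# Toy usage: 2-dimensional inputs, 3 hidden units, 1 output, sequence length 4.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
W_in = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.6]]
W_rec = [[0.0, 0.1, 0.0], [0.2, 0.0, -0.1], [0.0, 0.3, 0.0]]
W_out = [[1.0, -1.0, 0.5]]
hs, ys = rnn_forward(xs, W_in, W_rec, W_out, [0.0, 0.0, 0.0], [0.0])
```

The same loop handles sequences of any length, which is the property that makes RNNs suitable for variable-length data.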

Variational Autoencoders

Stochastic gradient variational Bayes and variational autoencoders (VAEs) were introduced by Kingma & Welling. They were interested in modelling a data distribution $p(x)$ with latent variables $z$. These latent variables represent the underlying variation seen in the data. The distribution can be expressed as $p(x) = \int p(x \mid z)\, p(z)\, dz$. Since this integral is intractable to compute, a variational approximation $q(z \mid x)$ of the posterior is used. This allows for a lower bound on $\log p(x)$, which is used for training:

$$\log p(x) \geq -\mathrm{KL}(q(z \mid x) \,\|\, p(z)) + \mathbb{E}_{q(z \mid x)}[\log p(x \mid z)]$$
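When the approximate posterior is a diagonal Normal and the prior is a standard Normal (the usual VAE setup, assumed here), the KL term of the lower bound has a closed form. The sketch below shows how a single-sample estimate of the bound could be assembled; the function names are hypothetical, and the reconstruction log-likelihood is passed in as a number rather than computed by a decoder.

```python
import math

def kl_to_standard_normal(mu, var):
    """KL( N(mu, diag(var)) || N(0, I) ) in closed form."""
    return 0.5 * sum(m * m + v - 1.0 - math.log(v) for m, v in zip(mu, var))

def elbo(log_px_given_z, mu, var):
    """Single-sample estimate of the lower bound: log p(x|z) - KL(q || p)."""
    return log_px_given_z - kl_to_standard_normal(mu, var)

# Example: a 2-dimensional latent whose second mean is shifted away from the prior.
bound = elbo(-3.2, [0.0, 1.0], [1.0, 1.0])
```

The KL term is zero only when the approximate posterior equals the prior, so the bound penalizes posteriors that move away from the prior unless they improve the reconstruction term.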

Motivation

The motivation for variational recurrent neural networks comes from the lack of variability in the RNN architecture. An RNN has a deterministic transition function between hidden states, so only the output distribution defines the family of joint probability distributions expressed by the RNN. This is unlike dynamic Bayesian networks, whose nodes are random variables. For highly structured data with high variability, standard RNNs may be inappropriate because they cannot model these variations. Thus, we want to add latent variables to the RNN architecture to help model the variability.

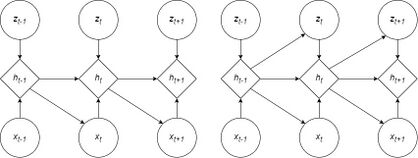

Stochastic Recurrent Networks

Stochastic Recurrent Networks (STORNs) were proposed by Bayer & Osendorfer and add a latent variable $z_t$ for each timestep. The new hidden state transition function incorporates the latent variables:

$$h_t = f_h(W_{in} x_t + W'_{in} z_t + W_{rec} h_{t-1} + b_h)$$

The same definition of $y_t$ specifies the distribution $p(x_{t+1} \mid x_{1:t}, z_{1:t})$. When the parameter $W'_{in}$ is set to 0, the model is equivalent to the standard RNN.

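With the new latent variables, the factorization of the data likelihood becomes:

$$p(x_{1:T}) = \int p(z_{1:T}) \prod_{t=0}^{T-1} \int p(x_{t+1} \mid h_t)\, p(h_t \mid x_{1:t}, z_{1:t})\, dh_t\, dz_{1:T}$$

Since the formula for $h_t$ is deterministic with respect to $x_{1:t}$ and $z_{1:t}$, $p(h_t \mid x_{1:t}, z_{1:t})$ follows a Dirac distribution with mode given by the formula. A Dirac distribution, also called the unit impulse, is zero everywhere except at its mode and integrates to one. Thus, the integral over $h_t$ can be replaced by a single point, denoted $h_t(x_{1:t}, z_{1:t})$, that is the mode from the formula above, and we can rewrite the joint probability as:

$$p(x_{1:T}) = \int p(z_{1:T}) \prod_{t=0}^{T-1} p(x_{t+1} \mid h_t(x_{1:t}, z_{1:t}))\, dz_{1:T}$$

For training STORNs, we need to derive the variational lower bound:

$$\log p(x_{1:T}) \geq -\mathrm{KL}(q(z_{1:T} \mid x_{1:T}) \,\|\, p(z_{1:T})) + \mathbb{E}_{q(z_{1:T} \mid x_{1:T})}\left[\sum_{t=0}^{T-1} \log p(x_{t+1} \mid h_t)\right]$$

This is similar to the standard variational lower bound. For the prior on the latent variables, a standard Normal is used, $z_{t,i} \sim \mathcal{N}(0, 1)$, where $z_{t,i}$ is the value of the $i$-th latent sequence at timestep $t$.

Like other variational autoencoders, the approximate distribution over the latent variables is a Normal distribution parameterized by a mean $\mu_t$ and variance $\sigma_t^2$. The mean and variance are given by the output of the encoder network at each timestep. For a latent variable of dimension $\omega$, the output is of length $2\omega$, where the first $\omega$ entries represent the mean and the second $\omega$ entries represent the variance. Specifically, given the encoder output $y^q_t$, $\mu_t = y^q_{t,1:\omega}$ and $\sigma_t^2 = (y^q_{t,\omega+1:2\omega})^2$. Here, the variance is calculated by squaring the output to ensure non-negativity. This is slightly different from other VAE methods, which output the log-variance.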

STORNs use the same reparameterization trick presented by Kingma & Welling, which samples from a standard Normal, $\epsilon_t \sim \mathcal{N}(0, I)$, in order to sample from the approximation by $z_t = \mu_t + \sigma_t \epsilon_t$. Using this trick, we sample a complete sequence $z_{1:T}$, which is passed through the decoder network to calculate $\log p(x_{1:T} \mid z_{1:T})$. This reconstruction term is optimized together with the KL-divergence term of the lower bound.
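The mean and variance split and the reparameterization step described above can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names are hypothetical, and the encoder outputs are hard-coded numbers standing in for the output of a recognition network.

```python
import random

def split_mean_variance(y):
    """Split an encoder output of length 2*omega into (mean, variance).

    The second half is squared so that the variance is non-negative.
    """
    omega = len(y) // 2
    mu = y[:omega]
    var = [v * v for v in y[omega:]]
    return mu, var

def reparameterize(mu, var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1) for each dimension."""
    return [m + (v ** 0.5) * rng.gauss(0.0, 1.0) for m, v in zip(mu, var)]

# Toy usage: T = 2 timesteps, omega = 2 latent dimensions per timestep.
rng = random.Random(0)
encoder_outputs = [[0.5, -1.0, 0.2, -0.3], [0.0, 2.0, 1.0, 0.0]]
z_seq = []
for y in encoder_outputs:
    mu, var = split_mean_variance(y)
    z_seq.append(reparameterize(mu, var, rng))
```

Because the randomness enters only through `eps`, the sample is a deterministic function of the mean and variance, which is what lets gradients flow back to the encoder in an automatic differentiation framework.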

Variational Recurrent Neural Networks

Variational recurrent neural networks (VRNNs) were proposed by Chung et al. They also integrate latent variables at each timestep, but additionally introduce temporal dependencies between the latent variables. Like STORNs, VRNNs are a sequence of variational autoencoders connected across timesteps. However, in a VRNN, each VAE is conditioned on the hidden state $h_{t-1}$ of the RNN. Moreover, the prior on the latent variables is not the standard Normal distribution. Instead, VRNNs use $h_{t-1}$ to parameterize the prior Normal distribution.

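The prior on the latent variable follows the distribution:

$$z_t \sim \mathcal{N}(\mu_{0,t}, \mathrm{diag}(\sigma_{0,t}^2)), \quad [\mu_{0,t}, \sigma_{0,t}] = \varphi^{\text{prior}}_\tau(h_{t-1})$$

where $\mu_{0,t}$ and $\sigma_{0,t}$ are the parameters of the conditional prior distribution and $\varphi^{\text{prior}}_\tau$ is a function that computes the parameters. In general, this can be any highly flexible function, such as a neural network. This defines the distribution $p(z_t \mid x_{<t}, z_{<t})$. Then, to generate $x_t$, the distribution is conditioned both on $z_t$ and $h_{t-1}$:

$$x_t \mid z_t \sim \mathcal{N}(\mu_{x,t}, \mathrm{diag}(\sigma_{x,t}^2)), \quad [\mu_{x,t}, \sigma_{x,t}] = \varphi^{\text{dec}}_\tau(\varphi^{z}_\tau(z_t), h_{t-1})$$

where $\mu_{x,t}$ and $\sigma_{x,t}$ are the parameters of the generating distribution. $\varphi^{\text{dec}}_\tau$ is the decoder and is also a highly flexible function, such as a neural network. Additionally, two other neural networks $\varphi^{x}_\tau$ and $\varphi^{z}_\tau$ are added to extract features from $x_t$ and $z_t$ respectively. These feature extractors help learn more complex sequences. Overall, this defines the distribution $p(x_t \mid z_{\leq t}, x_{<t})$.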
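A single generative step, sampling the latent variable from the conditional prior and then the observation from the decoder, might look like the sketch below. The networks are replaced by hypothetical toy functions (`prior_net`, `dec_net`, `phi_z`) chosen only so that the example runs; real models would use neural networks here.

```python
import random

def sample_diag_normal(mu, sigma, rng):
    """Sample from N(mu, diag(sigma^2)) using independent standard Normal draws."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

def vrnn_generate_step(h_prev, prior_net, dec_net, phi_z, rng):
    """Sample (z_t, x_t) given the previous hidden state h_{t-1}."""
    mu_0, sigma_0 = prior_net(h_prev)             # prior parameters depend on h_{t-1}
    z_t = sample_diag_normal(mu_0, sigma_0, rng)
    mu_x, sigma_x = dec_net(phi_z(z_t), h_prev)   # decoder sees features of z_t and h_{t-1}
    x_t = sample_diag_normal(mu_x, sigma_x, rng)
    return z_t, x_t

# Hypothetical stand-ins for the networks: 1-D hidden state, 1-D latent, 2-D observation.
prior_net = lambda h: ([0.5 * h[0]], [1.0])
dec_net = lambda fz, h: ([fz[0] + h[0], fz[0] - h[0]], [0.1, 0.1])
phi_z = lambda z: [2.0 * z[0]]

z_t, x_t = vrnn_generate_step([0.3], prior_net, dec_net, phi_z, random.Random(0))
```

Unlike a standard Normal prior, the prior mean here changes with `h_prev`, so what the model has generated so far influences the next latent sample.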

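The RNN hidden state update takes the extracted features from $x_t$ and $z_t$ and feeds them, together with $h_{t-1}$, into a deterministic, non-linear transition function $f_\theta$, such as a long short-term memory (LSTM) or gated recurrent unit (GRU):

$$h_t = f_\theta(\varphi^{x}_\tau(x_t), \varphi^{z}_\tau(z_t), h_{t-1})$$

The transition presented for VRNNs is more general than the transition function for STORNs, which describes vanilla RNN transitions.

Together, since $h_t$ is a function of $x_{\leq t}$ and $z_{\leq t}$, these combine to form the factorization:

$$p(x_{\leq T}, z_{\leq T}) = \prod_{t=1}^{T} p(x_t \mid z_{\leq t}, x_{<t})\, p(z_t \mid x_{<t}, z_{<t})$$

The method for performing inference with VRNNs is almost identical to that of STORNs. An approximate posterior $q(z_t \mid x_{\leq t}, z_{<t})$ is used, and the distribution is given by an encoder neural network $\varphi^{\text{enc}}_\tau$:

$$z_t \mid x_t \sim \mathcal{N}(\mu_{z,t}, \mathrm{diag}(\sigma_{z,t}^2)), \quad [\mu_{z,t}, \sigma_{z,t}] = \varphi^{\text{enc}}_\tau(\varphi^{x}_\tau(x_t), h_{t-1})$$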

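By conditioning on the hidden state $h_{t-1}$, this defines the factorization:

$$q(z_{\leq T} \mid x_{\leq T}) = \prod_{t=1}^{T} q(z_t \mid x_{\leq t}, z_{<t})$$

Finally, putting these two factorizations together gives the timestep-wise variational lower bound. Recalling that $\log p(x) \geq -\mathrm{KL}(q(z \mid x) \,\|\, p(z)) + \mathbb{E}_{q(z \mid x)}[\log p(x \mid z)]$, we can substitute in the appropriate distributions to derive the lower bound:

$$\mathbb{E}_{q(z_{\leq T} \mid x_{\leq T})}\left[\sum_{t=1}^{T} \left(-\mathrm{KL}(q(z_t \mid x_{\leq t}, z_{<t}) \,\|\, p(z_t \mid x_{<t}, z_{<t})) + \log p(x_t \mid z_{\leq t}, x_{<t})\right)\right]$$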
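Each term of the timestep-wise bound combines a Gaussian log-likelihood with a KL divergence between two diagonal Normals: the approximate posterior and the conditional prior. The sketch below computes one such term from already-computed distribution parameters. It assumes every distribution is given as a list of means and a list of standard deviations, and the function names are hypothetical.

```python
import math

def kl_diag_normals(mu_q, sigma_q, mu_p, sigma_p):
    """KL( N(mu_q, diag(sigma_q^2)) || N(mu_p, diag(sigma_p^2)) )."""
    total = 0.0
    for mq, sq, mp, sp in zip(mu_q, sigma_q, mu_p, sigma_p):
        total += math.log(sp / sq) + (sq * sq + (mq - mp) ** 2) / (2.0 * sp * sp) - 0.5
    return total

def log_diag_normal(x, mu, sigma):
    """Log-density of x under N(mu, diag(sigma^2))."""
    return sum(
        -0.5 * math.log(2.0 * math.pi) - math.log(s) - 0.5 * ((xi - m) / s) ** 2
        for xi, m, s in zip(x, mu, sigma)
    )

def step_bound(x_t, dec_params, enc_params, prior_params):
    """One term of the bound: log p(x_t | ...) - KL(posterior || prior).

    dec_params is assumed to come from a decoder evaluated at a z_t sampled
    from the approximate posterior; each *_params is a (mean, std) pair.
    """
    mu_x, sigma_x = dec_params
    mu_z, sigma_z = enc_params
    mu_0, sigma_0 = prior_params
    return log_diag_normal(x_t, mu_x, sigma_x) - kl_diag_normals(mu_z, sigma_z, mu_0, sigma_0)

# Toy usage: posterior equal to the prior, so the KL term vanishes.
term = step_bound([0.0], ([0.0], [1.0]), ([0.2], [0.5]), ([0.2], [0.5]))
```

Summing `step_bound` over all timesteps of a sequence gives a single-sample estimate of the full bound.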

VRNN-GMM

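In the paper, the VRNN is also adapted for a Gaussian mixture model (GMM) observation model. Instead of outputting a set of parameters used to model $x_t$ as a single Normal distribution, the model can output a set of mixture coefficients $\alpha_t$, means $\mu_{\alpha,t}$, and covariances $\Sigma_{\alpha,t}$. Then, the probability of $x_t$ under the GMM is:

$$p_{\alpha_t, \mu_{\alpha,t}, \Sigma_{\alpha,t}}(x_t \mid z_t) = \sum_{\alpha} \alpha_t\, \mathcal{N}(x_t; \mu_{\alpha,t}, \Sigma_{\alpha,t})$$

Both the single-Normal VRNN (VRNN-Normal) and the VRNN-GMM were evaluated.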
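The mixture density can be evaluated in log space for numerical stability. The following is a minimal sketch that assumes diagonal covariances (given as standard deviations) and strictly positive mixture coefficients; the function names are hypothetical.

```python
import math

def log_diag_normal(x, mu, sigma):
    """Log-density of x under N(mu, diag(sigma^2))."""
    return sum(
        -0.5 * math.log(2.0 * math.pi) - math.log(s) - 0.5 * ((xi - m) / s) ** 2
        for xi, m, s in zip(x, mu, sigma)
    )

def gmm_log_prob(x, alphas, mus, sigmas):
    """log sum_k alpha_k N(x; mu_k, diag(sigma_k^2)), computed with log-sum-exp.

    Assumes every mixture coefficient alpha_k is strictly positive.
    """
    terms = [
        math.log(a) + log_diag_normal(x, m, s)
        for a, m, s in zip(alphas, mus, sigmas)
    ]
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))

# Toy usage: a two-component mixture over a 2-dimensional observation.
log_p = gmm_log_prob(
    [0.1, -0.2],
    alphas=[0.3, 0.7],
    mus=[[0.0, 0.0], [1.0, -1.0]],
    sigmas=[[1.0, 1.0], [0.5, 0.5]],
)
```

With a single component of weight 1, the function reduces to the single-Normal log-density, which matches the relationship between VRNN-Normal and VRNN-GMM.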

Evaluation

Stochastic recurrent networks, variational recurrent neural networks (both Normal and Gaussian mixture model variants), and vanilla recurrent neural networks (also both Normal and Gaussian mixture model variants) were evaluated on five different datasets across two distinct tasks. The models were all fixed to have a single recurrent layer with 2000 LSTM units. The STORN and VRNN feature extractor, decoder, and encoder neural networks had four hidden layers with ReLU activation and around 600 hidden units per layer.

Speech modelling

Speech modelling tasks involve modelling raw audio signals, which are sequences of 200-dimensional frames, representing 200 consecutive raw acoustic samples. The four datasets used were:

- Blizzard: text-to-speech dataset containing 300 hours of English, spoken by a single female speaker.

- TIMIT: benchmarking dataset for speech recognition systems containing 6300 English sentences read by 630 different speakers.

- Onomatopoeia: set of 6738 non-linguistic human-made sounds made by 51 voice actors.

- Accent: English paragraphs read by 2046 different native and non-native English speakers.

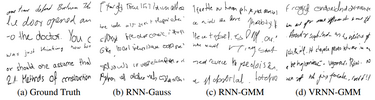

Handwriting generation

This task takes a sequence of $(x, y)$-coordinates alongside binary indicators of pen-up/pen-down to model handwriting. The dataset used was the IAM-OnDB dataset, which contains 13040 handwritten lines of text by 500 different writers.

Results

| Models | Blizzard (speech) | TIMIT (speech) | Onomatopoeia (speech) | Accent (speech) | IAM-OnDB (handwriting) |
|---|---|---|---|---|---|
| RNN-Normal | 3539 | -1900 | -984 | -1293 | 1016 |
| RNN-GMM | 7413 | 26643 | 18865 | 3453 | 1358 |
| STORN | ≥ 8933 / ≈ 9188 | ≥ 28340 / ≈ 29369 | ≥ 19053 / ≈ 19368 | ≥ 3843 / ≈ 4180 | ≥ 1332 / ≈ 1353 |
| VRNN-Normal | ≥ 9223 / ≈ 9516 | ≥ 28805 / ≈ 30235 | ≥ 20721 / ≈ 21332 | ≥ 3952 / ≈ 4223 | ≥ 1337 / ≈ 1354 |
| VRNN-GMM | ≥ 9107 / ≈ 9392 | ≥ 28982 / ≈ 29604 | ≥ 20849 / ≈ 21219 | ≥ 4140 / ≈ 4319 | ≥ 1384 / ≈ 1384 |

For the vanilla RNN models, the exact log-likelihood is reported, while for the variational models, both the variational lower bound ($\geq$) and the approximated marginal log-likelihood ($\approx$) are reported. Overall, the addition of latent variables improves performance in speech modelling. There is also an improvement in the handwriting task, though it is less pronounced. The VRNN models outperform STORNs, indicating that using the hidden state to parameterize the prior of the next timestep's latent variable helps model temporal relationships.

Generation

The models were used to generate both speech waveforms and handwriting. The speech waveforms generated by the VRNN are less noisy than those generated by vanilla RNNs. Likewise, the VRNN produces more diverse handwriting. However, the results from the VRNN models are far from perfect. Even though the average log-likelihood has increased, the generated data is still not a close approximation of real data. For example, the handwriting produced by VRNN-GMM is no more legible than that of the standard RNNs.

Extensions

The popularity of RNN-based architectures has decreased since the publication of these two papers, and other, more complex models have overtaken them in areas such as speech generation. However, the idea of sequential variational autoencoders is still present in some later works. For example, temporal difference learning, a concept from reinforcement learning, has been applied to sequential VAEs. In music generation, for which RNN models are also well suited, adding convolutions can help the VRNN model learn better features.

Outside of RNN-based architectures, generative adversarial networks (GANs) have been used in speech generation and handwriting generation, performing better than simpler RNN architectures. In handwriting generation, RNN-based architectures suffer from requiring stroke sequences, while having to learn long-range dependencies. Methods like GANs can directly operate on images of handwriting and produce handwriting based on pixels.

Annotated Bibliography

- Bayer, J., & Osendorfer, C. (2014). Learning stochastic recurrent networks. arXiv preprint arXiv:1411.7610. This paper introduces Stochastic Recurrent Networks.

- Chung, J., Kastner, K., Dinh, L., Goel, K., Courville, A. C., & Bengio, Y. (2015). A recurrent latent variable model for sequential data. Advances in neural information processing systems, 28. This paper introduces Variational Recurrent Neural Networks.

- Gregor, K., Papamakarios, G., Besse, F., Buesing, L., & Weber, T. (2018). Temporal difference variational auto-encoder. arXiv preprint arXiv:1806.03107. This paper is an example of a more recent technique that also uses the underlying premise of modelling sequential data using a series of VAEs.

- Kang, L., Riba, P., Wang, Y., Rusinol, M., Fornés, A., & Villegas, M. (2020). GANwriting: content-conditioned generation of styled handwritten word images. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXIII 16 (pp. 273-289). Springer International Publishing. This paper describes a generative adversarial network architecture for handwriting generation. Within, they explain why RNNs have flaws in this task.

- Koh, E. S., Dubnov, S., & Wright, D. (2018, August). Rethinking recurrent latent variable model for music composition. In 2018 IEEE 20th International Workshop on Multimedia Signal Processing (MMSP) (pp. 1-6). IEEE. This paper adds convolutions to the variational recurrent neural networks for music generation.

- Hsu, P. C., Wang, C. H., Liu, A. T., & Lee, H. Y. (2019). Towards robust neural vocoding for speech generation: A survey. arXiv preprint arXiv:1912.02461. This review discusses more current methods in speech generation.

