Course:CPSC522/Natural Language Processing

Natural Language Processing

Natural Language Processing (NLP) is a field of Artificial Intelligence that helps computers understand, interpret, and generate human language. The goal of NLP is to bridge the gap between human communication and computer understanding.

Principal Author: Borna Ghotbi

Collaborators: Kevin Dsouza, Vanessa Putnam

Abstract

Natural language processing (NLP) is a set of methods for translating between computer and human languages. It aims to let a computer read a line of text and make sense of it without being fed explicit clues or hand-made calculations. In other words, NLP automates the translation process between computers and humans.

Traditionally, statistical models have been the method of choice for interpreting phrases. Areas that have seen recent advances include voice recognition software, human language translation, information retrieval, and other artificial intelligence applications. Developing human language translation software is difficult because language is constantly changing. Natural language processing is also being developed to create human-readable text and to translate between one human language and another. The ultimate goal of NLP is to build software that will analyze, understand and generate human languages naturally, enabling communication with a computer as if it were a human. [1]

Builds on

Natural language processing (NLP) is a field of computer science that builds on the history of Machine Translation and the history of Artificial Intelligence.

History

Birth of NLP (1955-1965):

Initially, people thought NLP was easy and predicted that "machine translation" would be solved within 3 years. They wrote hand-coded rules and took linguistically oriented approaches.

The 3-year project continued for 10 years with no good results.

Dark Era (1965-1975):

After the initial hype, people came to believe that NLP was impossible. As a result, NLP research was mostly abandoned.

Slow Revival of NLP (1975-1985):

Some research activities resumed but the emphasis was still on linguistically oriented approaches.

Due to a lack of computational power, problems were mostly small, with weak empirical evaluation.

Statistical Era / Revolution (1985-2000):

Computational power increased substantially. Consequently, data-driven and statistical approaches with simple representations won over complex hand-coded linguistic rules.

Statistics Powered by Linguistic Insights (2000-Present):

More sophisticated statistical models, such as neural networks, exploit the available computational power and large datasets, and have become powerful tools for hard problems.

As a result, the focus is now on new, richer linguistic representations.

NLP Tasks

NLP tasks can be divided into two categories, syntactic and semantic:

Syntactic NLP Tasks

- Word Segmentation: The goal is to break a string of characters into a sequence of words. This can be difficult because in some languages, such as Chinese, words are not separated by spaces.

- Morphological Analysis: Morphology is the field of linguistics that studies the internal structure of words. A morpheme is the smallest linguistic unit that has semantic meaning, and morphological analysis is the task of segmenting a word into morphemes.

- Parts of Speech (POS) Tagging: Given a sentence, annotate each word with its part of speech. For example, "book" can be a noun ("the book on the table") or a verb ("to book a flight"); "set" can be a noun, verb or adjective.

- Phrase Chunking: Find all noun phrases (NPs) and verb phrases (VPs) in a sentence. For example, [NP I] [VP gave] [NP the phone] [PP to] [NP Susan].

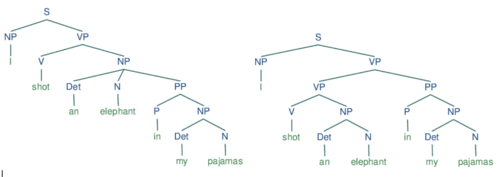

- Syntactic Parsing: The formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree that shows their syntactic relations to each other and may also contain semantic and other information. Some sentences have more than one parse tree, which results in ambiguity. Consider the sentence "I shot an elephant in my pajamas": either the speaker was wearing the pajamas or the elephant was. The two parses are depicted in Figure 1.
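The ambiguity in the elephant sentence can be checked mechanically. The sketch below counts the parse trees of a sentence with a CKY-style chart. The grammar and lexicon are a toy assumption made up for this illustration (they are not taken from the course material), and the names `BINARY_RULES`, `LEXICON` and `count_parses` are hypothetical.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (an assumption for this sketch).
# Each rule is (parent, left child, right child).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),  # the PP attaches to a noun phrase
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # the PP attaches to a verb phrase
    ("PP", "P", "NP"),
]

# Toy lexicon: the categories each word can take.
LEXICON = {
    "I": ["NP"],
    "shot": ["V"],
    "an": ["Det"],
    "my": ["Det"],
    "elephant": ["N"],
    "pajamas": ["N"],
    "in": ["P"],
}


def count_parses(words, root="S"):
    """Count the distinct parse trees rooted at `root` that cover all words."""
    n = len(words)
    # chart[i][j][X] = number of trees with root X spanning words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for category in LEXICON.get(word, []):
            chart[i][i + 1][category] += 1
    for span in range(2, n + 1):
        for start in range(n - span + 1):
            end = start + span
            for split in range(start + 1, end):
                for parent, left, right in BINARY_RULES:
                    left_count = chart[start][split].get(left, 0)
                    right_count = chart[split][end].get(right, 0)
                    chart[start][end][parent] += left_count * right_count
    return chart[0][n].get(root, 0)


print(count_parses("I shot an elephant in my pajamas".split()))  # prints 2
```

The two trees differ only in where "in my pajamas" attaches: to the verb phrase (the speaker wore the pajamas) or to the noun phrase "an elephant".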

Semantic NLP Tasks

- Word Sense Disambiguation (WSD): Words in language can have multiple meanings. For many tasks (question answering, translation), the proper sense of each ambiguous word in a sentence must be determined.

- Semantic Role Labeling (SRL): For each clause, determine the semantic role played by each noun phrase that is an argument to the verb such as that of an agent, goal, or result. For example, given a sentence like "Dana sold the laptop to Arun", the task would be to recognize the verb "to sell" as representing the predicate, "Dana" as representing the seller (agent), "the laptop" as representing the goods (theme), and "Arun" as representing the recipient.

- Textual Entailment: Determine whether one natural language sentence entails (implies) another under an ordinary interpretation. The same question can be asked across modalities, for example whether a caption is entailed by an image.
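To make the word sense disambiguation task from the list above concrete, here is a minimal sketch of a simplified Lesk-style heuristic: pick the sense whose dictionary gloss shares the most words with the sentence. This heuristic is one possible approach chosen for illustration, not a method described in this page, and the sense inventory for "bank", the stopword list, and all function names are hypothetical.

```python
# Small stopword list (an assumption for this sketch).
STOPWORDS = {"a", "an", "the", "of", "to", "in", "on", "at", "or", "and",
             "that", "is", "for", "i", "we"}

# Hypothetical mini sense inventory: sense label -> gloss.
SENSES = {
    "bank": {
        "financial_institution":
            "an institution that accepts deposits of money and makes loans",
        "river_side":
            "the sloping land beside a river or lake",
    }
}


def content_words(text):
    """Lowercase the text, strip basic punctuation, and drop stopwords."""
    return {w.strip(".,").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(word, sentence):
    """Return the sense of `word` whose gloss overlaps most with the sentence."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I went to the bank to deposit money"))
print(simplified_lesk("bank", "We sat on the bank of the river"))
```

Word overlap is a crude signal (here "deposit" does not even match "deposits"), which is one reason practical systems rely on richer representations.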

Language Models

Statistical language modeling (language modeling, or LM for short) is the development of probabilistic models that predict the next word in a sequence given the words that precede it.

Language models also try to answer the question:

How likely is it that a string of English words is good English?

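These probabilities can help with word ordering and word choice: among candidate outputs, the more fluent ordering or the more natural word should receive the higher probability.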

We can model the word prediction task as predicting the conditional probability of a word given the previous words in the sequence, and call this a language model:

$$P(w_i \mid w_1, \dots, w_{i-1})$$

N-Gram Models

Given: a string of English words $W = w_1, w_2, \dots, w_n$. The problem is to calculate $P(W) = P(w_1, w_2, \dots, w_n)$.

For example, the following pseudocode builds a bigram model:

    for each sentence
        split sentence into an array of words
        append "</s>" to the end and "<s>" to the beginning of words
        for each i in 1 to length(words)-1   # Note: starting at 1, after <s>
            counts["w[i-1] w[i]"] += 1       # Add bigram and bigram context
            context_counts["w[i-1]"] += 1
            counts["w[i]"] += 1              # Add unigram and unigram context
            context_counts[""] += 1
    for each ngram, count in counts
        split ngram into an array of words   # "w[i-1] w[i]" → {"w[i-1]", "w[i]"}
        remove the last element of words     # {"w[i-1]", "w[i]"} → {"w[i-1]"}
        join words into context              # {"w[i-1]"} → "w[i-1]"
        probability = counts[ngram]/context_counts[context]
        print ngram, probability
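The pseudocode above translates fairly directly into Python. The version below is a minimal sketch rather than a reference implementation: it keys n-grams by tuples of words instead of joined strings (so the context is just the tuple without its last element), and the function name `train_bigram` and the two training sentences are assumptions made for illustration.

```python
from collections import Counter


def train_bigram(sentences):
    """Return maximum-likelihood unigram and bigram probabilities."""
    counts = Counter()          # n-gram counts, keyed by tuples of words
    context_counts = Counter()  # counts of the context of each n-gram
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for i in range(1, len(words)):  # start at 1, after <s>
            counts[(words[i - 1], words[i])] += 1  # bigram
            context_counts[(words[i - 1],)] += 1   # bigram context
            counts[(words[i],)] += 1               # unigram
            context_counts[()] += 1                # unigram context (empty)
    # The context of an n-gram is the n-gram without its last word.
    return {ngram: count / context_counts[ngram[:-1]]
            for ngram, count in counts.items()}


probabilities = train_bigram(["I gave the phone to Susan",
                              "Dana sold the laptop to Arun"])
for ngram, probability in sorted(probabilities.items()):
    print(" ".join(ngram), probability)
```

Unigram entries are keyed by one-element tuples such as `("the",)` and bigram entries by pairs such as `("the", "phone")`.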

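We can decompose $P(w_1, \dots, w_n)$ using the chain rule:

$$P(w_1, \dots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})$$

An n-gram model conditions each word on the previous n-1 words. The model is called a bigram model for n=2 and a trigram model for n=3.

We can estimate the conditional probabilities from counts, where $c(\cdot)$ is the number of times a word sequence occurs in the training data. For a bigram model:

$$P(w_i \mid w_{i-1}) = \frac{c(w_{i-1}\, w_i)}{c(w_{i-1})}$$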

Markov Assumption:

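Because memory is limited, we keep only the last k words of the history and assume that earlier words do not matter. We call this a kth-order Markov model:

$$P(w_i \mid w_1, \dots, w_{i-1}) \approx P(w_i \mid w_{i-k}, \dots, w_{i-1})$$

For instance, for a bigram model (k = 1) we have:

$$P(w_i \mid w_1, \dots, w_{i-1}) \approx P(w_i \mid w_{i-1})$$

As an example, the probability of the sentence "I gave the phone to Susan" under a bigram model, with `<s>` and `</s>` marking the sentence boundaries, can be written as:

$$P(\text{I} \mid \text{<s>}) \cdot P(\text{gave} \mid \text{I}) \cdot P(\text{the} \mid \text{gave}) \cdot P(\text{phone} \mid \text{the}) \cdot P(\text{to} \mid \text{phone}) \cdot P(\text{Susan} \mid \text{to}) \cdot P(\text{</s>} \mid \text{Susan})$$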
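As a rough illustration of scoring a sentence with a bigram model, the sketch below estimates bigram probabilities from a tiny corpus and sums their logarithms. The two-sentence corpus and the function name `bigram_sentence_logprob` are assumptions made up for this example, and no smoothing is applied, so any unseen bigram gives the sentence probability zero.

```python
import math
from collections import Counter


def bigram_sentence_logprob(sentence, corpus):
    """Log-probability of `sentence` under a bigram model trained on `corpus`."""
    bigrams, contexts = Counter(), Counter()
    for line in corpus:
        words = ["<s>"] + line.split() + ["</s>"]
        for prev, cur in zip(words, words[1:]):
            bigrams[(prev, cur)] += 1
            contexts[prev] += 1

    words = ["<s>"] + sentence.split() + ["</s>"]
    logprob = 0.0
    for prev, cur in zip(words, words[1:]):
        if bigrams[(prev, cur)] == 0:
            return float("-inf")  # unseen bigram: probability zero without smoothing
        logprob += math.log(bigrams[(prev, cur)] / contexts[prev])
    return logprob


# Toy corpus (an assumption for this sketch).
corpus = ["I gave the phone to Susan", "I gave the book to Dana"]
print(math.exp(bigram_sentence_logprob("I gave the phone to Susan", corpus)))
```

In this corpus only P(phone | the) and P(Susan | to) are below 1 (each is 0.5), so the sentence probability is about 0.25. Working in log space avoids numerical underflow on longer sentences.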

Word2Vec

Word2vec is a group of related models that are used to produce word embeddings. These models are shallow, two-layer neural networks that are trained to reconstruct linguistic contexts of words. Word2vec takes as its input a large corpus of text and produces a vector space, typically of several hundred dimensions, with each unique word in the corpus being assigned a corresponding vector in the space. Word vectors are positioned in the vector space such that words that share common contexts in the corpus are located in close proximity to one another in the space. For more information on word2vec please see the Word2vec page on Wikipedia.
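"Close proximity" in the vector space is commonly measured with cosine similarity. The sketch below computes it for a few hand-written 3-dimensional vectors; the numbers are invented purely for illustration and are not the output of any trained word2vec model, which would typically have several hundred dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, made up for this sketch.
vectors = {
    "beautiful": [0.9, 0.8, 0.1],
    "nice": [0.8, 0.9, 0.2],
    "phone": [0.1, 0.2, 0.9],
}

print(cosine_similarity(vectors["beautiful"], vectors["nice"]))
print(cosine_similarity(vectors["beautiful"], vectors["phone"]))
```

With these made-up vectors, "beautiful" is much closer to "nice" than to "phone", which is the kind of structure one hopes a trained model produces for words that share contexts.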

Word2vec models come in two architectures:

- Continuous Bag of Words (CBOW): use the context words in a window to predict the middle word. See Figure 2.

- Skip-gram: use the middle word to predict the surrounding words in a window. See Figure 3.

More precisely, CBOW learns to predict a word from its context, that is, to maximize the probability of the target word given the context. This turns out to be a problem for rare words. For example, given the context "yesterday was really [...] day", a CBOW model will say that the missing word is most probably "beautiful" or "nice". A word like "delightful" gets much less of the model's attention, because the model is designed to predict the most probable word; the rare word is smoothed over many examples that contain more frequent words.

Skip-gram, on the other hand, is designed to predict the context. Given the word "delightful", it must assign a high probability to the context "yesterday was really [...] day" or to some other relevant context. With skip-gram, "delightful" does not compete with "beautiful"; instead, each delightful+context pair is treated as a new observation. [2]
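The difference between the two setups shows up in how training examples are built from a sentence. The sketch below only constructs the examples and does not train any network; the window size of 2 and the function names `cbow_examples` and `skipgram_examples` are assumptions for illustration.

```python
def cbow_examples(tokens, window=2):
    """(context words, target word): the context predicts the middle word."""
    examples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        examples.append((context, target))
    return examples


def skipgram_examples(tokens, window=2):
    """(center word, context word): the middle word predicts each neighbour."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


tokens = "yesterday was really delightful day".split()
print(cbow_examples(tokens)[3])
print([pair for pair in skipgram_examples(tokens) if pair[0] == "delightful"])
```

CBOW yields a single example for "delightful", with the whole context as input, whereas skip-gram yields one separate observation per (delightful, context word) pair.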

Implementations

Useful libraries for NLP include scikit-learn and NLTK.

Annotated Bibliography

Multimodal Learning with Vision, Language and Sound [3]

Natural Language Processing. Wikipedia: The Free Encyclopedia. Wikimedia Foundation[4]

Language Models [5]

Graham Neubig, Nara Institute of Science and Technology [6]
