Course:CPSC522/Deep Q-Learning

Deep Q-Learning

Deep Q-learning is an approximation technique in reinforcement learning intended to model and represent mammalian perception and behavior.

Principal Author: Fabian Ruffy

Collaborators:

This page is composed of four different works:

The first class of papers (Human-level control through deep reinforcement learning and Continuous control with deep reinforcement learning) describe the deep reinforcement learning algorithm and its extension to continuous action spaces.

The second set is a paper (Towards Generalization and Simplicity in Continuous Control) which criticises the deep learning approach as a whole and suggests other alternatives. It is augmented with general criticism on deep reinforcement learning by the research community (primarily this blog post).

Abstract

This page is dedicated to the field of Deep Q-learning, also titled deep reinforcement learning. It explains the technical background and motivation behind the Deep Q-learning algorithm and provides an explanation of the original work by DeepMind. The page is extended to analyze the follow-up work in continuous, high-dimensional action spaces. Lastly, Deep Q-learning and its effectiveness is critically examined on the basis of discussion by the scientific community.

Builds on

Deep Q-learning is a combination of Reinforcement Learning and neural networks. It is also a modification of Reinforcement Learning using Q-functions as utility mechanism. In its basic form, Deep Q-learning is a variant of Dynamic Programming and is often intended as a form of general game playing, which introduces algorithms play a diverse set of logical games. Overall, reinforcement learning is inspired by functions of the human brain, particularly behavioural and reward systems.

Related Pages

Although Deep Q-learning has become a very popular variant of reinforcement learning, full mature systems are still rare. Examples include DeepMind's AlphaGo and Audi's experimental self-driving car model. Theoretical follow-up work is plentiful. Noteworthy efforts include Asynchronous Advantage Actor Critic (A3C), Trust Region Policy Optimization (TRPO), and "Duelling" Deep Reinforcement Learning.

Content

Background

Reinforcement learning is a discipline of artificial intelligence rooted in the conditional sub-domain of behavioral psychology. It is intended to teach an agent to succeed in an uncertain environment. Agents are conditioned to adapt by iterating over a reward function. Actions, which lead to a desired outcome are rewarded positively whereas wrong behavior is penalized. Deep Q-learning relies on many aspects of reinforcement learning, including Monte Carlo methods, Q-functions, and temporal differencing. A new feature of Q-learning is its use of neural networks. More comprehensive information can be found in the following section: Reinforcement Learning.

Markov Decision Process

In its pure form, reinforcement learning is an evolution of a Markov Decision Process (MDP). A reinforcement learning MDP is comprised of the following components:

- The initial state: .

- A set of possible states the environment or agent can be in: .

- A of possible actions an agent can execute: .

- A transition model which returns the next likely state under a specific action: .

- A reward function which describes the benefit gained from reaching a certain state : .

State-Value Function

Actions are selected on the basis of a policy , which maximizes the reward for the agent for a given state.

The state-value function describes the total expected reward for the current state under a specific policy. The function makes use of the Bellman Equation, which introduces the discount factor . denotes the preference of the agent to prioritize future rewards over immediate reward, a form of delayed gratification.

The overall value function can be denoted as:

which estimates the total reward until a terminal or cut-off state is reached. This function provides the ability to find an optimal policy, which maximizes the overall reward.

Monte Carlo Methods

In order to adapt to large and unknown environments, agents often use Monte Carlo methods, in particular Monte Carlo Tree Search (MCTS). MCTS is a sampling technique which uses randomness to generate a policy as well as to estimate the expected utility for each state. An agent plays "episodes", which are sequences from the starting to terminal state, and ideally converges to an optimal model after enough exploration.

MCTS is used in model-free scenarios, in which the transition model and state-value function for a given state have to be estimated. This incomplete model is referred to as Monte Carlo prediction or passive reinforcement learning. If the general policy also has to be learned, it is referred to as Monte Carlo control, or active reinforcement learning. Active reinforcement learning continuously reevaluates the effectiveness of the policy, which is performed by iterating over the Q-function of each state:

The Q-function defines the utility of a state-action pair, which then allows the construction of a policy based on the highest returns.

Temporal Difference Learning

To update policies and values in Monte Carlo, it is necessary to wait until the completion of a full episode. This is expensive and impractical as some episodes may take a long time or never terminate. Temporal Differencing (TD) draws from classical conditioning to develop an anticipatory reward model. The agent updates the utility function of each state after is has experienced the subsequent state. Utility is estimated based on the expected return from the next action. only considers the state at whereas also factors in older states in its reward function.

The update of the utility in TD can be expressed as:

Here is the previous state, is the subsequent state, is reward the agent received, is rate at which the agent updates at and, is the default discount rate for a specific .

Q-Learning

Q-learning is an off-policy algorithm, which means that it does not depend on a pre-defined policy in order to choose a transition function. In Q-learning, the agent begins with an arbitrary, fixed policy and will generate an optimal policy on the side. It does this by selecting the predetermined Q-function value as the next action, which may be unreliable or useless. However, Q-learning continuously improves the agents second Q-function by observing the effects of the first policy. It uses the following update function:

is updated based on the newly experienced reward and the former estimated return, eventually approximating an ideal Q-function and overall policy . The original Q-function and policy do not change. If the state-space is too large to be reliably stored, function approximators are used.

Actor-Critic

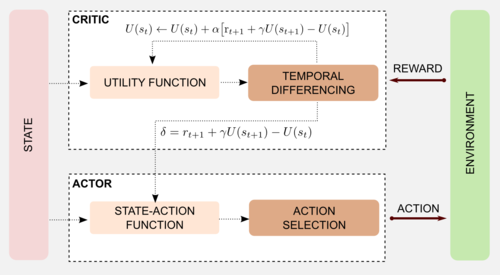

The actor-critic (AC) model is considered a decoupling technique which splits a reinforcement system into an action-selection and action-evaluation component. Actor are any type of reinforcement learning techniques, which actively improve the policy based on new action-value information. In general, actors provide an approximate state-action function, which is updated based on the values of the Q-functions. Critics are in general temporal differencing methods such as Q-learning or SARSA. They actively evaluate the current policy and update the action-value function itself.

AC systems are widely used, as they emulate a neural reward model and allow for clear separation of concerns. In AC, the actor produces an action based on its current Q-learning model. The critic observes the environmental reward, and evaluates the utility of the selected action. Depending on the reward, the critic will provide "feedback" to the actor, which will update its state-action function. Actor-critic methods are useful if the state or action space are too large and have to be approximated. Since approximators do not necessarily guarantee convergence and may also produce wildly varying policies actor-critic servers as a form of balancing algorithm[1].

Figure 1 displays a visual model of the actor-critic algorithm. The critic uses temporal differencing to calculate the state-action value function. The actor recalculates the policy on the basis of the newly updated Q-function.

The DeepMind Deep Reinforcement Learning Algorithm

Motivation

Reinforcement learning has traditionally been confined to domains in which the state space was generally well-defined, fully-observable, and low-dimensional. In order to represent the environment reinforcement agents frequently made use of prior manually generated models and heuristics, which provided a reasonable state-action baseline instead of just random initial guessing. This approach, however, conflicted with the notion of finding a general model. These reinforcement agents only operated well in the environment they had been designed for, and failed in unknown, new domains. A reinforcement learning agent which excelled at playing a game of chess, failed completely at backgammon and vice versa.

Q-learning in particular had been limited in its ability to approximate the full state-action space, partially because existing methods were unable to model neural perception appropriately. With a resurgence of popularity in machine learning, (convolutional) neural networks (CNNs) emerged as a new possible function approximator for Q-learning. Deep CNNs use a feed-forward mechanism which is said to imitate the behavior of receptive fields, boding themselves well for perception-based agents.

Unfortunately, non-linear approximators such as neural nets were known to behave erratically in reinforcement learning, thus limiting their usability.

Deep Q-Learning

To tackle this problem Mnih, Kavukcuoglu, Silver, et al., 2015 [2] proposed a new form of Q-learning, titled deep Q-learning (it is also referred to as deep Q-networks (DQN)). In addition to using a convolutional neural net to model the state-action space, they leverage experience replay[3] as a stabilization mechanism. Experience replay collects a buffer of historical transition models and randomly inserts the transition values during the Q-update function. Any historical transition may influence the future action selection, thus averaging the behavior distribution overall and mitigating the occurrence of local minima or divergence.

Secondly, in every state-action update the target value, i.e. the expected return, is trained independently of the actual update function for several update epochs. This guarantees that an update to does not immediately impact updates to . If the model is at risk of diverging or oscillation, this method establishes a potential buffer to stabilize. It can be considered a hybrid off-policy approach as the policy is only improved every N-steps.

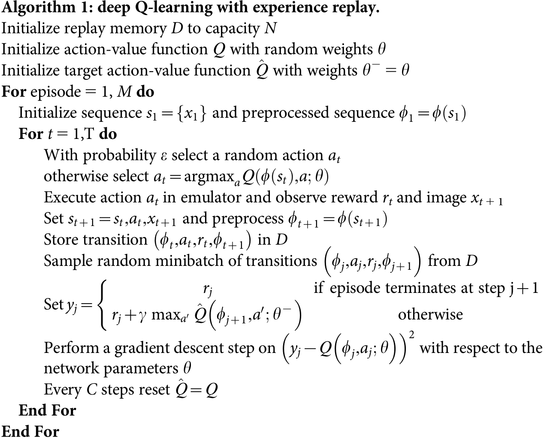

Algorithm

The algorithm below describes the typical behavior of a deep Q-learning framework. For episodes, the agent will iterate over its action-value function . To reduce CPU and memory usage, the state space is preprocessed using the function, which reduces the dimensionality of the input value. The agent uses Q-learning to update its action-value function . In each step, it selects an action -greedily. This means that with an chance it will pick an action randomly to explore, otherwise it exploits and selects the action with the highest expected value. Note that the use of argmax in the selection process implies that the expected action space is low and likely discrete.

After executing the action, it observes the reward and updates the state to the new state . The following sequence of steps is what distinguishes a Deep Q-learning agent from conventional Q-learning. After the reward has been observed, the agent stores the full transition in its memory stash .

When selecting the approximate target-value for the gradient descent update, the agent does not select the latest transition. Instead, it samples a transition from . To remain robust, the agent select the best action from the buffered instead of . The agent now performs a least-squares error gradient descent on and updates the weights of the Q-function. After steps it updates the buffer to the newly improved function.

Continuous Control

Deep Q-learning has been used successfully in a wide range of domains, yet again it was limited to a specific class of applications. Deep Q-learning allowed to model settings with high-dimensional state (or observation) spaces, but was unable to act in the face of continuous, high-dimensional action spaces. Examples include physical simulators and environments, which operate on fine-grained real values and operate on substantially more flexible movement patterns. While it is possible to discretize actions, the degrees of freedom quickly lead to a curse of dimensionality and an action-space explosion.

To handle such a class of high-dimensional action spaces, deterministic policy gradients (DPGs)[4] can be used. DPGs are capable of representing continuous action spaces as one cohesive policy and only iterate over the state space, thus reducing the amount of required computational power dramatically. The DPG traditionally relies on an actor-critic model, in which the actor computes a full policy based on a policy gradient method, and the critic uses Q-learning to guarantee an off-policy Q-value improvement.

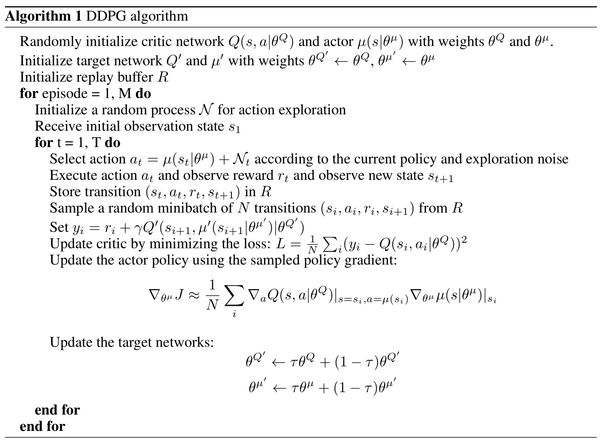

Similar to the motivation behind deep Q-learning, DPG is limited in its ability to represent complex or perception-based state spaces, which caused it to become unstable and slow in high-dimensional state spaces. In order to be able simulate movement and physical environments, Lillicrap, Hunt, et al., 2017[5] developed the Deep Deterministic Policy Gradient (DDPG) algorithm. DDPG again relies on two neural nets as function approximator and uses the lessons learned from DQN to assure convergence and stability. These lessons include an implementation of experience replay, as well as the periodical buffering of Q-functions.

Algorithm

While slightly differing in syntax, DDPG is similar to the deep Q-learning algorithm. It also uses experience replay and updates target values based on sampled transitions. Again, the algorithm buffers target values to prevent quick divergence caused by the use of neural networks. A major difference is the actor-critic model intended to handle the large state space and to guarantee convergence. Instead of selecting actions greedily, the actor decides on the basis of an approximation function . This also affects the computation of the target which is now updated on the basis of , i.e. the action chosen by the buffered target actor for state . The critic itself attempts to minimize the sum of least-squares and updates its target weights correspondingly. Afterwards, the actor modifies its policy following the critic's updated action-value function . As last step, the buffered weights for the actor and critic function are refreshed using a regularization parameter .

Example Usages

Console Games

Deep Q-learning had been primarily intended as a technique to naively learn games, without prior knowledge about goals or targets. Consequently, DQN has seen great popularity in general game playing applications and competitions[6]. Here, agents play on various types of old consoles from Nintendo or Atari and try to exceed human performance. Games from this time-period have extraordinary large state spaces, but expose only a comparably low set of actions. In addition, reward or penalty is often imminent, and does not require extensive preplanning. The size of the state space has often been seen as a limiting factor, which can be approximated using neural networks. Deep reinforcement agents have also demonstrated the ability to play FPS games, which require a much larger action space than simplistic console games.[7].

In Figure 2 on the left, a short clip of an agent mastering Breakout can be seen. After several hundreds of training episodes, the client finds a way to maximize its reward. It continues to aim for the left corner, which keeps the ball locked in, and easily collects points.

Chess and Go

Deep Q-learning achieved most of its fame as a component of the AlphaGo Zero[8] system. AlphaGo Zero is an improvement over AlphaGo Lee. It learns playing Go without any human input and is able to beat any grandmaster after 36 hours of training. AlphaGo Zero plays against itself and continuously improves its policy parameters using gradient descent. As of 2018, AlphaGo Zero is the unbeaten champion in the game of Go.

Criticism of Deep Reinforcement Learning

While the successes and advances of deep Q-learning have been substantial, concerns have been raised about a loss of perspective. The following sections cover several problematic aspects, which have since been highlighted about deep reinforcement learning. Many of the points that are raised are inspired by the publication of Rajeswaran, Lowrey, et al., 2017[9] supplemented by comments in the current community [10].

Zeroing in on Neural Networks

Work on Deep Q-learning poses the solution of using neural networks as function approximators as the only sensible way. The argument is that these complex and opaque networks model animal reasoning in a close manner. This, however, may be a red herring, as highlighted by Rajeswaran, Lowrey, et al[9]. The authors argue that the focus on deep neural nets as a solver may distract from simpler solutions, which may work just as well. Deep Learning agents operate excellently in model-free environments, but as to why exactly is not fully understood. Work demonstrating the effectiveness of these agents with continuous control has been lacking a baseline, which compares the performance to conventional approaches. The authors postulate to take a step back and closely analyze the problems being solved. While neural nets offer great flexibility, a simpler, less compute heavy solution could do the same job. Only once the drawbacks and advantages of simpler methods are well understood, neural nets should become an option.

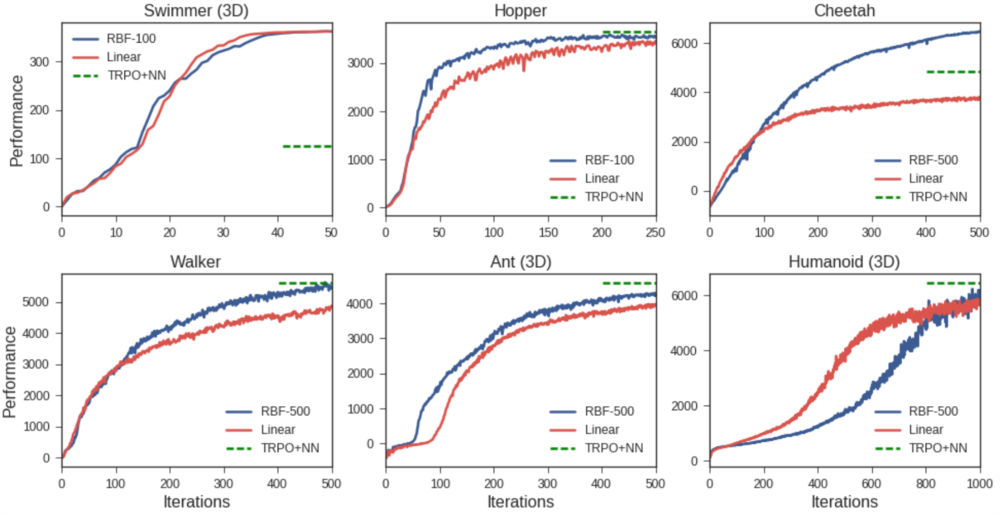

To substantiate their point, the authors developed a reinforcement learning agent which operates on either a simple linear or radial basis function to improve its policy. They compared the performance of the agent against a CNN implemented with Trust Region Policy Optimization (TRPO), which represents state-of-the-art function approximation. The benchmark is the commonly used MuJoCo[11] framework, which simulates continuous, physical motion with large action and state spaces. The performance of the agents is pictured below. The linear policy is colored red, the RBF blue, and the neural network policy is in green. As can be seen, even the linear approximation function outperforms the TRPO+NN in some cases, highlighting that neural nets may not be as generally efficient as commonly assumed.

It should be noted that performance of algorithms highly depends on tuning, which makes accurate benchmarking and comparison difficult.

Reproducing Deep Reinforcement Learning

On this note, criticism has been raised on the current reproducibility of reinforcement learning[12][13]. Slight differences in the configuration of parameters and fixed variables can make a dramatic difference in the outcome of the algorithm. Similarly, compute and storage power is often unevenly distributed in experiments, limiting the amount of generality of an algorithm. Powerful and specialized cluster computing networks (such as Google's (tensor processing units (TPUs))) can impact the overall effectiveness of the agent to react in time. Companies, which are publishing research on the improvements of their models, rarely publicize the exact configuration of their reinforcement agents.

To address these issues, guidelines which establish a fixed baseline for all deep reinforcement learning researchers have been proposed[12][13]. The hope is to entangle the dependencies of reinforcement learning, with the eventual goal of providing clarity on algorithm benefits and drawbacks.

Jack of all Trades, Master of None

Another criticism of the model-free neural network work is the focus on generality of the agent[14]. While a general agent can act adequately in many domains, it rarely masters them. If the intention is to develop a highly successful operator, it may be more sensible to develop a ground truth model which can be leveraged. Although this ground truth model limits the domain in which the agent can move, it nonetheless may help it excel in the designated task. Examples include the work of Boston Dynamics, which does not use deep reinforcement learning for its humanoid robots, or algorithms for the MuJoCo[11] benchmark which merely use a physics-based policy and succeed at all tasks[15].

Deep Learning Does Not Give You a Reward Function

Despite deep Q-learning being model-free, the reward function still has to be specified. The agent needs to be aware of states that are detrimental or beneficial. Implementing a reward function is typically simple, enforcing good behavior, however, can lead to complications. Utility functions, which reward specific states, often have curious side-effects that occur while acting out a policy. Some agents may find obscure exploits in games[16] or environments which maximize the reward, others may just get stuck exploiting a falsely rewarding strategy[17]. In some cases, when a penalty is imminent, agents may just decide to stop acting at all and pause forever.

Deep Q-learning does not solve this modeling problem and may even exacerbate it, as the opaqueness of neural net decisions can be confounding.

Deep Q-Learning is Quite Expensive

Deep Q-learning is able to successfully play games and act out policies, but at what cost? Until the agent has converged to a successful model, it may take millions of episodes and dozens of hours of computing. AlphaGo Zero[8] played 4.9 million games over three days on specialized hardware (TPUs). Although this time-scale is a tremendous improvement over AlphaGo Lee, which played for months and used 48 TPUs, it is still a substantial amount of iterations. The current most efficient deep Q-framework for Atari learning, Rainbow[18], requires approximately 18-million game frames (excluding frame skip) to perform well. These numbers may be too high to deploy reinforcement learning agents efficiently. The time needed to bootstrap such a framework, and its lack of ability to change in volatile environments, can potentially be a impeding factor on its practicability.

Efforts to reduce the amount of required iterations and samples exist, but they may be limited by the sample-inefficient nature of neural networks[19].

References

- ↑ Konda, Vijay R., and John N. Tsitsiklis. "Actor-critic algorithms." Advances in neural information processing systems. 2000.

- ↑ Mnih, Volodymyr, et al. "Human-level control through deep reinforcement learning." Nature 518.7540 (2015): 529.

- ↑ Lin, L.-H. (1992). Self-improving reactive agents based on reinforcement learning, planning and teaching. Machine learning, 8(3/4):69–97.

- ↑ Silver, David, et al. "Deterministic policy gradient algorithms." ICML. 2014.

- ↑ Lillicrap, Timothy P., et al. "Continuous control with deep reinforcement learning." arXiv preprint arXiv:1509.02971 (2015).

- ↑ Mnih, Volodymyr, et al. "Playing atari with deep reinforcement learning." arXiv preprint arXiv:1312.5602 (2013).

- ↑ Lample, Guillaume, and Devendra Singh Chaplot. "Playing FPS Games with Deep Reinforcement Learning." AAAI. 2017.

- ↑ 8.0 8.1 Silver, David, et al. "Mastering the game of go without human knowledge." Nature 550.7676 (2017): 354.

- ↑ 9.0 9.1 Rajeswaran, Aravind, et al. "Towards generalization and simplicity in continuous control." Advances in Neural Information Processing Systems. 2017.

- ↑ Pan Alexir, Deep Reinforcement Learning Doesn't Work Yet, Last-accessed: 2018-03-06

- ↑ 11.0 11.1 Todorov, Emanuel, Tom Erez, and Yuval Tassa. "Mujoco: A physics engine for model-based control." Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on. IEEE, 2012.

- ↑ 12.0 12.1 Henderson, Peter, et al. "Deep reinforcement learning that matters." arXiv preprint arXiv:1709.06560 (2017).

- ↑ 13.0 13.1 Islam, Riashat, et al. "Reproducibility of benchmarked deep reinforcement learning tasks for continuous control." arXiv preprint arXiv:1708.04133 (2017).

- ↑ https://blog.openai.com/evolution-strategies/

- ↑ Bellemare, Marc G., et al. "The Arcade Learning Environment: An evaluation platform for general agents." J. Artif. Intell. Res.(JAIR) 47 (2013): 253-279.

- ↑ Chrabaszcz, Patryk, Ilya Loshchilov, and Frank Hutter. "Back to Basics: Benchmarking Canonical Evolution Strategies for Playing Atari." arXiv preprint arXiv:1802.08842 (2018).

- ↑ Amodei, Dario, et al. "Concrete problems in AI safety." arXiv preprint arXiv:1606.06565 (2016).

- ↑ Hessel, Matteo, et al. "Rainbow: Combining Improvements in Deep Reinforcement Learning." arXiv preprint arXiv:1710.02298 (2017).

- ↑ Livni, Roi, Shai Shalev-Shwartz, and Ohad Shamir. "On the computational efficiency of training neural networks." Advances in Neural Information Processing Systems. 2014.

Major Sources

Deep Reinforcement Learning Doesn't Work Yet

Further information of Interest

Link 1 Link 2

Comprehensive introductions to reinforcement learning and deep reinforcement learning. The first link provides an overview and intuition of the domain, while the second course establishes more theoretical foundations.

Link 3

Interesting dissection of deep reinforcement learning and its problems, good recent read to temper expectations.

Link 4

This video series is not necessarily related to deep reinforcement learning since the algorithm is mimicry-based and not model-free. However, it highlights interesting aspects of general game playing, such as observing the agent's creativity in game play or the eventual successes it may achieve.

Link 5 Link 6

These links refer to papers which contain further criticism of Deep Reinforcement Learning and the zero-knowledge assumption. Due to time and space constraints they have not been included but may be of interest.

|

|