Course:CPSC522/Generative Adversarial Networks

Generative Adversarial Networks

Generative Adversarial Networks[1] (GAN) is a framework for training generative models that use deep neural networks. The approach simultaneously trains a generative model alongside an adversarial discriminative model. The discriminative model tries to determine whether a sample comes from the true data distribution or from the generative model, while the goal of the generative model is to fool the discriminative model.

Laplacian Generative Adversarial Networks[2] (LAPGAN) combines the GAN method with a specific representation of images called the Laplacian pyramid. LAPGAN uses a sequence of convolutional networks to generate images incrementally, analogous to iteratively building the Laplacian pyramid representation.

Principal Author: Tian Qi (Ricky) Chen

Abstract

The aim of this wiki page is to introduce an approach to creating generative models (explained below) from a dataset. The resulting generative model should be able to generate samples from the same distribution as the provided dataset (the target distribution). Such a model can then be used to generate additional simulated data, which is extremely useful for applications that require (but lack) large amounts of data. This wiki page describes the recently proposed approach of Generative Adversarial Networks, a method that is competitive with existing methods when only a finite number of samples from the target distribution is available. The approach was then built upon by the computer vision community to generate images using a domain-specific image representation scheme, the Laplacian pyramid. The combined method was shown to have better generative performance in a human evaluation experiment.

Background

Knowledge of neural network architectures and probability distributions is assumed.

Generative vs Discriminative Models

We describe the differences between discriminative and generative models. Suppose we have some data $x$ and some signal $y$, with joint distribution $p(x, y)$. A discriminative model is a mapping from a value of $x$ to a signal $y$. It does not care about the distribution $p(x, y)$, only that there exist some boundaries separating the $x$'s that map to a certain value of $y$ and the $x$'s that map to a different value of $y$. On the other hand, a generative model tries to directly learn the distribution $p(x, y)$. In doing so, it does not explicitly learn boundaries separating different signals, but instead learns the entire distribution, which can be used to infer about the signals $y$.

The main advantage of a generative model over a discriminative model is the ability to generate samples from the distribution (supposing that the generative model is able to model the distribution perfectly). So while discriminative models are simpler to train and typically perform better on most supervised tasks, generative models are more expressive because they approximate the true data distribution.
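As a rough illustration of this distinction, the sketch below fits both kinds of model to toy one-dimensional data. Everything in it is an assumption made for illustration: the Gaussian data, the midpoint boundary, and the names `predict` and `sample` are hypothetical and do not come from the cited papers.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: a binary signal y and 1-D data x whose mean depends on y.
y = rng.integers(0, 2, size=2000)
x = rng.normal(loc=np.where(y == 1, 2.0, -2.0), scale=1.0)

# Discriminative view: learn only a boundary that separates the two signals.
boundary = 0.5 * (x[y == 0].mean() + x[y == 1].mean())

def predict(x_new):
    """Map a value of x to a signal y using the learned boundary."""
    return (x_new > boundary).astype(int)

# Generative view: model p(x, y) = p(y) p(x | y) with one Gaussian per signal.
prior = np.array([(y == 0).mean(), (y == 1).mean()])
means = np.array([x[y == 0].mean(), x[y == 1].mean()])
stds = np.array([x[y == 0].std(), x[y == 1].std()])

def sample(n):
    """Draw n new (x, y) pairs from the fitted joint distribution."""
    y_new = rng.choice(2, size=n, p=prior)
    x_new = rng.normal(means[y_new], stds[y_new])
    return x_new, y_new

accuracy = (predict(x) == y).mean()  # the boundary alone classifies well
x_new, y_new = sample(5)             # only the generative model can sample
```

The boundary is enough to classify, but it carries no information about how the data is distributed on either side of it, so only the fitted joint distribution can produce new samples.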

Below, we discuss a framework that uses neural networks to construct a generative model. Neural networks have been shown to perform spectacularly as discriminative models, usually in a classification setting where the inputs are high dimensional. GAN is a method that takes advantage of the performance of neural networks as discriminative models to aid in the training of a generative neural network.

Generative Adversarial Networks [1]

Generative Adversarial Networks (GAN) is a method for constructing a generative model that tries to learn the true distribution of the data, $p_{\text{data}}(x)$. Let $G$ denote the generative model. In this case, $G$ is restricted to be a neural network with parameters $\theta_g$ that takes as input some noise $z$ with distribution $p_z(z)$ and outputs a sample according to $G$'s distribution, $p_g$. Define a second (discriminative) neural network $D(x; \theta_d)$ that outputs a single scalar representing the probability that $x$ came from the data rather than $p_g$.

The GAN approach simultaneously trains both $D$ and $G$. The discriminative model $D$ is trained to maximize the probability of assigning the correct label. Thus the probability output of $D$ should be high when the sample comes from $p_{\text{data}}$ and low when the sample comes from $p_g$. The proposed objective function for training $D$ is

$$\max_D \; \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{x \sim p_g(x)}[\log(1 - D(x))]$$

However, since $p_g$ is only implicitly defined by the samples of the neural network $G$, there is no explicit formula for it. Instead, since $G$'s randomness comes from the prior $p_z(z)$, the second term in the objective function can be reformulated in terms of $z$ as $\mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$.

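Additionally, the generative model $G$ is trained to fool the discriminative model into believing samples from $p_g$ are actually from $p_{\text{data}}$, thus $G$'s goal is to minimize the second term. This leads to the following minimax function:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$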

The composition $D(G(z))$ is valid because neural networks are modular and because samples from $p_{\text{data}}$ and $p_g$ span the same space.
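The objective can be estimated from samples alone, which is what makes it usable when $p_g$ has no explicit formula. The sketch below computes a Monte Carlo estimate of the two expectation terms, $\log D(x)$ on data samples and $\log(1 - D(G(z)))$ on generated samples. The affine generator, the logistic discriminator, and their parameter values are hypothetical stand-ins chosen for illustration; the paper uses neural networks for both.

```python
import numpy as np

rng = np.random.default_rng(0)

def G(z, theta_g=(1.0, 0.0)):
    """Hypothetical generator: an affine map of the noise z."""
    scale, shift = theta_g
    return scale * z + shift

def D(x, theta_d=(1.0, -2.0)):
    """Hypothetical discriminator: logistic model for P(x came from the data)."""
    w, b = theta_d
    return 1.0 / (1.0 + np.exp(-(w * x + b)))

def value(x_data, z):
    """Monte Carlo estimate of the minimax value from data and noise samples."""
    real_term = np.mean(np.log(D(x_data)))       # high when D accepts real data
    fake_term = np.mean(np.log(1.0 - D(G(z))))   # high when D rejects samples of G
    return real_term + fake_term

x_data = rng.normal(4.0, 0.5, size=10_000)  # assumed stand-in for p_data
z = rng.normal(0.0, 1.0, size=10_000)       # samples from the prior p_z
v = value(x_data, z)
```

In training, $D$'s parameters would be updated to increase this estimate and $G$'s parameters to decrease it. Both terms are logarithms of probabilities, so the estimate is never positive.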

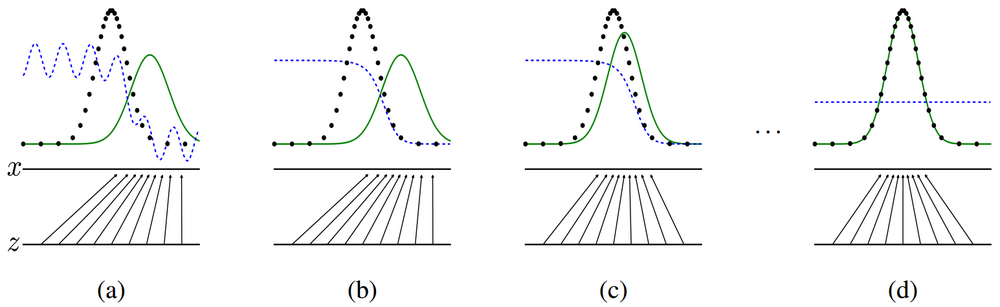

The training procedure alternates between updating $D$, whose output is visualized by the blue dashed line, and $G$, which maps the space of $z$ (lower horizontal line) to the space of $x$ (upper horizontal line); the distributions $p_{\text{data}}$ and $p_g$ are visualized by the dotted black line and solid green line, respectively.

Starting at (a), the generative model $G$ is near convergence and $D$ is a partially accurate classifier.

In (b), the discriminative model is updated, tending towards the optimal discriminator $D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}$. (This property is proven in the paper [1].)

(c) Once $D$ has been updated, the gradient of $D$ guides $G$ so that its samples flow towards regions that $D$ classifies as data.

(d) After convergence, the optimal result is for $p_g$ to perfectly mimic the data distribution, so that $D$ is unable to differentiate between the two distributions, i.e. $D(x) = \frac{1}{2}$.
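Panels (b) and (d) can be checked numerically when both densities are known in closed form. The sketch below evaluates the optimal discriminator from [1], the ratio of the data density to the sum of the data and generator densities, for a mismatched and a matched generator. The Gaussian densities and their parameters are assumptions made purely for illustration.

```python
import numpy as np

def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian, used here as a known toy distribution."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def optimal_discriminator(x, p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x))."""
    return p_data(x) / (p_data(x) + p_g(x))

def p_data(x):
    return gaussian_pdf(x, 2.0, 1.0)    # assumed data distribution

def p_g_early(x):
    return gaussian_pdf(x, 0.0, 1.0)    # generator that has not converged

x = np.linspace(-3.0, 7.0, 5)

# Mismatched generator: D* is confident wherever one density dominates.
d_early = optimal_discriminator(x, p_data, p_g_early)

# Converged generator (p_g equals p_data): D* is 1/2 everywhere.
d_final = optimal_discriminator(x, p_data, p_data)
```

With the mismatched generator, `d_early` is close to 0 on the left, where generated samples dominate, and close to 1 on the right, where data dominates. Once the two densities coincide, the best possible discriminator can do no better than chance.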

The only requirement for sampling from $p_g$ is a way to sample from $p_z$. Moreover, if iid samples of $z$ can be created, then the resulting samples from $p_g$ are also iid. This property is an advantage of the GAN approach compared with other generative techniques that use MCMC-based sampling, where the samples are not independent.

Some sampling results

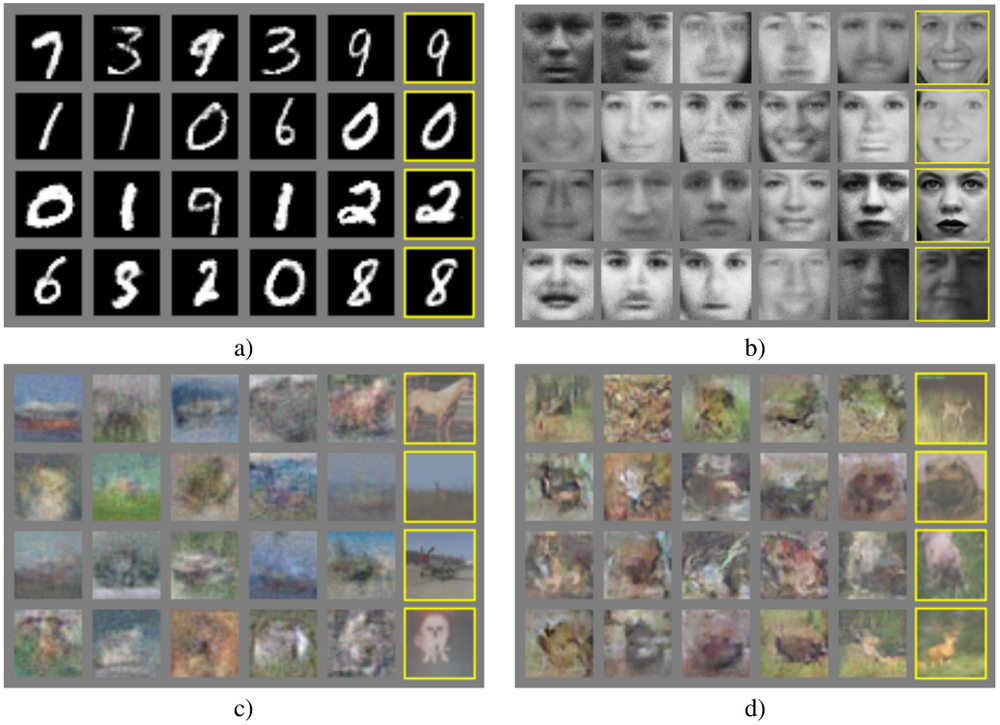

The figure shows visualized samples from generative models trained using the GAN approach. The rightmost column shows the nearest neighbour training example of the sample displayed in the second rightmost column, to demonstrate that the model did not simply memorize the training set.

(a) MNIST is a dataset of handwritten digits in black and white. The generated samples are visually convincing.

(b) TFD is a dataset of black and white faces. The generated samples definitely capture the structure of the human face, but some samples can still be identified as artificial. We see that some samples have very blurry shadows.

(c) CIFAR-10 is a dataset of colour images in 10 different classes of animals and vehicles. The generated samples look very blurred and certainly cannot fool the human eye.

(d) CIFAR-10 but with a different neural network model. This model uses convolutional layers whereas the networks for (a), (b), and (c) only use multilayer perceptrons. The results are still quite blurred and not recognizable as actual photos.

Overall, the GAN approach works for simple scenarios such as MNIST, but this is not a particularly demanding test. The approach fails as the complexity of the images increases, for example with added colour or varying objects, where the resulting samples look blurred.

Laplacian Generative Adversarial Networks [2]

Laplacian Pyramid

The Laplacian pyramid representation stores an image as a sequence of downsampled images, plus residuals for each downsampled image. This technique is typically used in image compression.

Let $d(\cdot)$ be a downsampling operator that takes a $j \times j$ image $I$ as input and produces a blurred image of half the size, $j/2 \times j/2$.

Let $u(\cdot)$ be an upsampling operator that takes a $j \times j$ image $I$ as input and produces a smoothed image of double the size, $2j \times 2j$.

For an image $I$, first build a pyramid $[I_0, I_1, \dots, I_K]$, where $I_0 = I$ and $I_k$ is constructed by $k$ repeated applications of $d(\cdot)$ to $I$. $K$ is the number of levels in the pyramid, chosen so that the final level is spatially small ($\le 8 \times 8$ pixels).

Then calculate coefficients $h_k$ for each level $k$ of the Laplacian pyramid by taking the difference between the image at that level and the upsampled image at the lower adjacent level:

$$h_k = I_k - u(I_{k+1})$$

The coefficient for the final level is simply the image itself ($h_K = I_K$).

Thus the Laplacian pyramid of image $I$ is defined by $[h_0, h_1, \dots, h_K]$. Reconstruction of the original image from the Laplacian pyramid coefficients uses the backward recurrence:

$$I_k = u(I_{k+1}) + h_k$$

The recurrence starts from $I_K = h_K$ and ends with $I_0$ being the original image. In other words, starting from the coarsest level, the upsampling operator is applied repeatedly and the difference (residual) at each level is added to reconstruct the next finer level.
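The construction and the backward recurrence can be sketched in a few lines. This is a minimal version under simplifying assumptions: `downsample` averages 2x2 blocks and `upsample` repeats pixels, which are crude stand-ins for the blurring and smoothing operators described above, and the function names and the 64x64 random test image are hypothetical.

```python
import numpy as np

def downsample(image):
    """d(.): average each 2x2 block, halving both dimensions."""
    rows, cols = image.shape
    return image.reshape(rows // 2, 2, cols // 2, 2).mean(axis=(1, 3))

def upsample(image):
    """u(.): repeat every pixel twice along each axis, doubling both dimensions."""
    return np.repeat(np.repeat(image, 2, axis=0), 2, axis=1)

def laplacian_pyramid(image, min_size=8):
    """Return the coefficients [h_0, ..., h_K], where h_K is the coarsest image."""
    levels = [image]                              # I_0 = I
    while levels[-1].shape[0] > min_size:
        levels.append(downsample(levels[-1]))     # I_{k+1} = d(I_k)
    coeffs = [levels[k] - upsample(levels[k + 1]) for k in range(len(levels) - 1)]
    coeffs.append(levels[-1])                     # h_K = I_K
    return coeffs

def reconstruct(coeffs):
    """Apply I_k = u(I_{k+1}) + h_k from the coarsest level down to level 0."""
    image = coeffs[-1]
    for h_k in reversed(coeffs[:-1]):
        image = upsample(image) + h_k
    return image

rng = np.random.default_rng(0)
I = rng.random((64, 64))          # assumed test image with power-of-two size
h = laplacian_pyramid(I)
I_rec = reconstruct(h)
```

Because each coefficient stores exactly what upsampling loses, the reconstruction recovers the original image regardless of which particular downsampling and upsampling operators are used.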

The LAPGAN Approach

Laplacian Generative Adversarial Networks (LAPGAN) does not modify the GAN approach, but instead uses it at each level of a Laplacian pyramid. The LAPGAN approach is restricted to images. Instead of generating an image directly from scratch, the LAPGAN approach generates an image incrementally by generating the Laplacian pyramid.

One generative model is constructed for each level in the Laplacian pyramid, resulting in a set of convolutional network models $\{G_0, \dots, G_K\}$. The generative model at each level is responsible for capturing the distribution of the Laplacian coefficients $h_k$ when given an upsampled image of the lower adjacent level, $u(\tilde{I}_{k+1})$, and a noise vector $z_k$. This yields the following model:

$$\tilde{I}_k = u(\tilde{I}_{k+1}) + \tilde{h}_k = u(\tilde{I}_{k+1}) + G_k(z_k, u(\tilde{I}_{k+1}))$$

Here $\tilde{I}_{K+1} = 0$. With this formulation, all generative models except the final $G_K$ are conditional generative models that take the upsampled version of $\tilde{I}_{k+1}$ as the conditioning variable. As will be explained in later sections, the LAPGAN approach has the advantage of breaking the generation of an image into successive refinements, while also yielding a training method that trains each level of the pyramid independently.

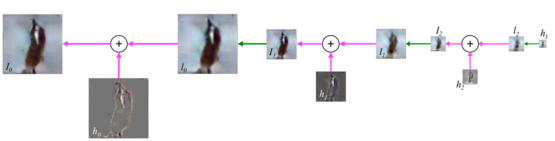

Sampling

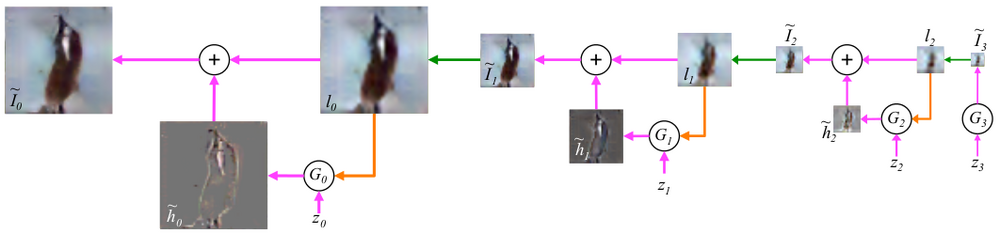

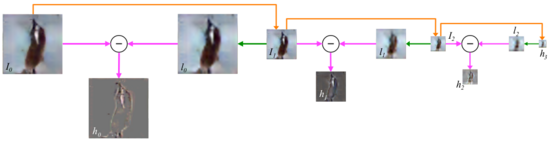

The sampling procedure of an image using the LAPGAN model is illustrated in the figure.

Starting from a noise sample $z_3$ as input, $G_3$ generates $\tilde{I}_3$. This image is then upsampled (denoted by the green arrow) and, along with some noise vector $z_2$, fed as input to $G_2$ to produce $\tilde{h}_2$, which is then added to $u(\tilde{I}_3)$ to produce $\tilde{I}_2$. This process is then repeated twice to produce $\tilde{I}_0$, which is the full resolution generated image.
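The coarse-to-fine loop can be sketched as follows. The generators here are untrained placeholders: `stub_generator` is a hypothetical function standing in for the convolutional networks, the noise is shaped like an image for simplicity rather than being a vector, and the level sizes 64, 32, 16, 8 are an assumption for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def upsample(image):
    """u(.): pixel repetition as a simple stand-in for smooth upsampling."""
    return np.repeat(np.repeat(image, 2, axis=0), 2, axis=1)

def stub_generator(z, conditioning=None):
    """Hypothetical placeholder for a trained G_k; a real model is a network."""
    if conditioning is None:
        return np.tanh(z)                     # coarsest level: noise to image
    return 0.1 * np.tanh(z + conditioning)    # other levels: noise and l_k to residual

def sample_lapgan(generators, sizes):
    """generators[k] plays the role of G_k; sizes[k] is the image side at level k."""
    K = len(generators) - 1
    # Coarsest level: G_K produces an image from noise alone.
    image = generators[K](rng.standard_normal((sizes[K], sizes[K])))
    # Finer levels: upsample, generate a residual, and add it.
    for k in range(K - 1, -1, -1):
        low = upsample(image)
        z_k = rng.standard_normal((sizes[k], sizes[k]))
        image = low + generators[k](z_k, low)
    return image

sizes = [64, 32, 16, 8]                # assumed level sizes, finest first
generators = [stub_generator] * len(sizes)
generated = sample_lapgan(generators, sizes)
```

Each level only has to add detail to an image that already has the right coarse structure, which is the successive refinement that the LAPGAN formulation relies on.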

Training

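For each image in the training set, the Laplacian pyramid is first constructed. The generative and discriminative models at each level are trained separately using a conditional variant of the GAN objective function, where both models receive an additional vector of information $l$ as input:

$$\min_G \max_D \; \mathbb{E}_{h, l \sim p_{\text{data}}(h, l)}[\log D(h, l)] + \mathbb{E}_{z \sim p_z(z),\, l \sim p_l(l)}[\log(1 - D(G(z, l), l))]$$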

The conditioning variable in this case is the upsampled version of $I_{k+1}$, as explained before.

At each level, the Laplacian coefficients are (with equal probability) either taken from the Laplacian pyramid, $h_k = I_k - u(I_{k+1})$, or generated using $G_k$, in which case $\tilde{h}_k = G_k(z_k, u(I_{k+1}))$. The discriminative model $D_k$ takes as input $h_k$ or $\tilde{h}_k$, and the conditioning variable $l_k = u(I_{k+1})$, and predicts if the image resulting from the addition of these two inputs is real or generated. At the final level, $G_K$ takes as input just a noise vector $z_K$, and $D_K$ only has $h_K$ or $\tilde{h}_K$ as input (no conditioning variable).

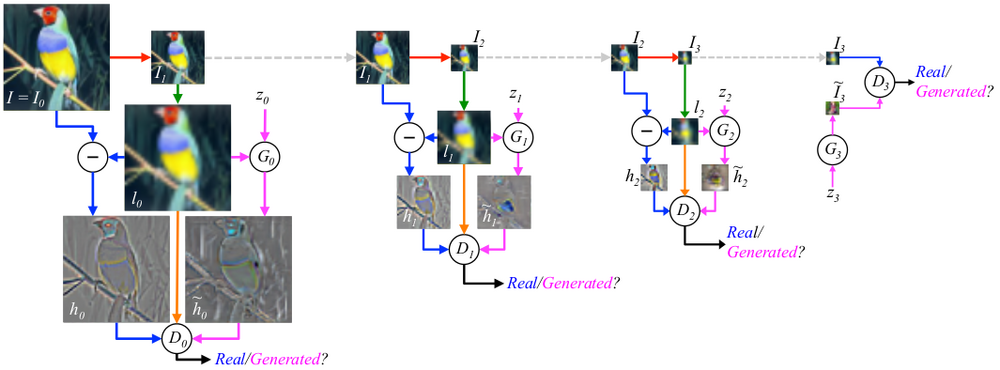

The training procedure for a $64 \times 64$ image is illustrated in the figure.

Starting with $I_0$, it is downsampled to produce $I_1$, which is upsampled to produce $l_0 = u(I_1)$. Then with equal probability, either $h_0 = I_0 - l_0$ is calculated and $D_0$ is trained to predict that the input is real, or $G_0$ generates $\tilde{h}_0$ and $D_0$ is trained to predict that the input is generated. In the latter (generated) case, $G_0$ is also trained simultaneously using the gradients backpropagated through $D_0$. The same procedure is repeated at the other levels. Note that while the models $G_k$ and $D_k$ depend on each other for training, they do not depend on the models at other levels.
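The way a single training example is assembled for the discriminator at one level can be sketched as below. Only the data preparation is shown; the gradient updates are omitted. The operators, the untrained `stub_generator`, and the function name `make_discriminator_example` are hypothetical and chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def downsample(image):
    """d(.): 2x2 block averaging as a stand-in for blur and decimate."""
    rows, cols = image.shape
    return image.reshape(rows // 2, 2, cols // 2, 2).mean(axis=(1, 3))

def upsample(image):
    """u(.): pixel repetition as a stand-in for smooth upsampling."""
    return np.repeat(np.repeat(image, 2, axis=0), 2, axis=1)

def stub_generator(z, l):
    """Hypothetical placeholder for G_k; a real model is a convolutional network."""
    return 0.1 * np.tanh(z + l)

def make_discriminator_example(I_k, G_k):
    """Return (coefficients, conditioning, label) for one update of D_k."""
    l_k = upsample(downsample(I_k))           # conditioning variable l_k = u(I_{k+1})
    if rng.random() < 0.5:
        h = I_k - l_k                         # real coefficients from the pyramid
        label = 1
    else:
        z_k = rng.standard_normal(I_k.shape)  # noise, shaped like the image here
        h = G_k(z_k, l_k)                     # generated coefficients
        label = 0
    return h, l_k, label

I_k = rng.random((16, 16))                    # assumed training image at level k
h, l_k, label = make_discriminator_example(I_k, stub_generator)
```

Nothing in this function refers to any other level of the pyramid, which reflects why each pair of models can be trained independently of the rest.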

LAPGAN Evaluations

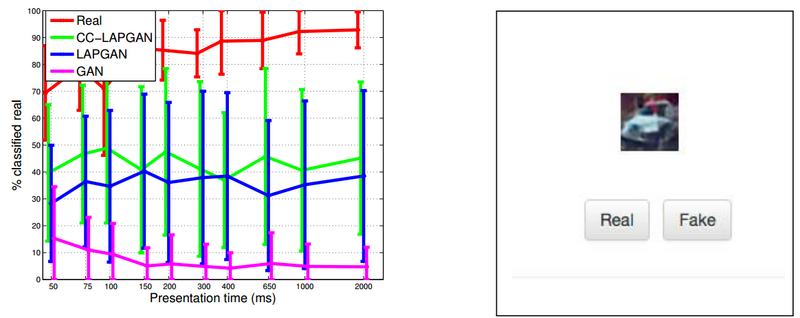

The LAPGAN paper [2] contains a human evaluation experiment. Participants are given a series of images and, for each image, asked whether they believe it is real or generated. Each image is chosen at random and could be sampled from a GAN model, from a LAPGAN model, or from the original dataset. The dataset used is CIFAR-10 (consisting of ten classes of animals and vehicles). Prior to the experiment, the participants are given real images from the CIFAR-10 dataset to look at. During the experiment, each image is presented for only one of 11 durations ranging from 50ms to 2000ms. The experiment collects ~20k samples from the participants. The figure shows a plot of the percentage of images classified as real against presentation time (left) and the user interface for the experiment (right).

The base GAN model is only able to fool the participants with 10% of the generated images. The LAPGAN and CC-LAPGAN (class-conditioned LAPGAN, where the class label is also given to the generative model) show a substantial improvement, with around 40% of generated images being realistic enough to fool humans. However, this is still much lower than the 90% rate for real images.

References

- [1] Goodfellow, Ian, et al. "Generative adversarial nets." Advances in Neural Information Processing Systems. 2014.

- [2] Denton, Emily L., Soumith Chintala, and Rob Fergus. "Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks." Advances in Neural Information Processing Systems. 2015.