Course:CPSC522/Diffusion Probabilistic Model

Title

This page focuses on Diffusion Probabilistic Models, covering their introduction in Deep Unsupervised Learning using Nonequilibrium Thermodynamics, as well as a newer advancement, Denoising Diffusion Probabilistic Models.

Principal Author: Matthew Niedoba

Abstract

Generative models are a powerful class of models which can draw novel samples that match the distribution of their training data. One such class of generative models is diffusion probabilistic models. These models consist of a forward trajectory, which iteratively adds noise to the source data distribution to shift it towards a tractable prior, and a reverse trajectory, which models the reverse of this process, slowly shifting the distribution from the tractable prior back to the target data distribution.

Builds on

The forward and reverse trajectories of diffusion probabilistic models are examples of Markov Chains. The models are optimized using the ELBO, a technique from Variational Inference.

Related Pages

Diffusion models are generative models. Other members of that class of model are Generative Adversarial Networks, Variational Auto-Encoders, and Variational Recurrent Neural Networks.

Diffusion Probabilistic Models

In Deep Unsupervised Learning using Nonequilibrium Thermodynamics[1], the authors introduce diffusion probabilistic models. This section summarizes the contributions of this paper, detailing the forward trajectory, reverse trajectory, training objective and experimental results.

Forward Trajectory

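We define the distribution of our data as $q(\mathbf{x}^{(0)})$. Generally, this distribution (for example, the distribution of CIFAR-10 images[2]) is highly complex and intractable: we can sample from the finite data set, but we cannot draw new samples from the underlying distribution directly. The forward trajectory is the process of converting this complex distribution into an analytically tractable distribution $\pi$ from which samples may be drawn, such as a multivariate Gaussian. The data distribution is transformed by iteratively applying a Markov diffusion kernel

$$q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)}) = T_\pi(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)}; \beta_t)$$

where $\beta_t$ is the diffusion rate at time $t$. Two possible choices of kernel are Gaussian and binomial, corresponding to

$$q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)}) = \mathcal{N}\left(\mathbf{x}^{(t)}; \mathbf{x}^{(t-1)}\sqrt{1-\beta_t}, \mathbf{I}\beta_t\right)$$

$$q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)}) = \mathcal{B}\left(\mathbf{x}^{(t)}; \mathbf{x}^{(t-1)}(1-\beta_t) + 0.5\,\beta_t\right)$$

Repeatedly applying the kernel for $T$ steps, we obtain the forward trajectory

$$q(\mathbf{x}^{(0 \cdots T)}) = q(\mathbf{x}^{(0)}) \prod_{t=1}^{T} q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)})$$

Reverse Trajectory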

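To generate samples from the diffusion model, a model is trained to reverse the forward trajectory. The reverse trajectory starts from the tractable distribution and is itself a Markov chain:

$$p(\mathbf{x}^{(T)}) = \pi(\mathbf{x}^{(T)}), \qquad p(\mathbf{x}^{(0 \cdots T)}) = p(\mathbf{x}^{(T)}) \prod_{t=1}^{T} p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})$$

If $\beta_t$ is small, then the reverse conditional $q(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})$ has the same functional form as the forward kernel $q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)})$. That is, Gaussian transitions in the forward process lead to Gaussian transitions in the reverse process. For Gaussian forward trajectories, the reverse trajectory is therefore estimated by learning functions $\mathbf{f}_\mu(\mathbf{x}^{(t)}, t)$ and $\mathbf{f}_\Sigma(\mathbf{x}^{(t)}, t)$, which estimate the mean and covariance of the reverse trajectory transitions:

$$p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)}) = \mathcal{N}\left(\mathbf{x}^{(t-1)}; \mathbf{f}_\mu(\mathbf{x}^{(t)}, t), \mathbf{f}_\Sigma(\mathbf{x}^{(t)}, t)\right)$$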
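As a minimal sketch (Python with NumPy; none of these function names come from [1]), the Gaussian kernel $\mathcal{N}(\mathbf{x}^{(t)}; \mathbf{x}^{(t-1)}\sqrt{1-\beta_t}, \mathbf{I}\beta_t)$, the binomial kernel $\mathcal{B}(\mathbf{x}^{(t)}; \mathbf{x}^{(t-1)}(1-\beta_t) + 0.5\beta_t)$, and ancestral sampling through the reverse trajectory might look as follows. `f_mu` and `f_sigma` are hypothetical stand-ins for the learned $\mathbf{f}_\mu$ and $\mathbf{f}_\Sigma$; the sketch assumes $\mathbf{f}_\Sigma$ returns a diagonal covariance (one variance per dimension) and that $\pi$ is a standard normal.

```python
import numpy as np

def gaussian_forward_step(x_prev, beta_t, rng):
    # q(x^(t) | x^(t-1)) = N(x^(t); x^(t-1) sqrt(1 - beta_t), I beta_t)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * rng.standard_normal(x_prev.shape)

def binomial_forward_step(x_prev, beta_t, rng):
    # q(x^(t) | x^(t-1)) = B(x^(t); x^(t-1)(1 - beta_t) + 0.5 beta_t): one Bernoulli draw per bit
    p_one = x_prev * (1.0 - beta_t) + 0.5 * beta_t
    return (rng.random(x_prev.shape) < p_one).astype(float)

def forward_trajectory(x0, betas, step, rng):
    # Apply the kernel once per diffusion rate; returns [x^(0), x^(1), ..., x^(T)]
    xs = [x0]
    for beta_t in betas:
        xs.append(step(xs[-1], beta_t, rng))
    return xs

def reverse_trajectory(shape, T, f_mu, f_sigma, rng):
    # x^(T) ~ N(0, I), then x^(t-1) ~ N(f_mu(x^(t), t), diag(f_sigma(x^(t), t))) for t = T, ..., 1
    x = rng.standard_normal(shape)
    for t in range(T, 0, -1):
        x = f_mu(x, t) + np.sqrt(f_sigma(x, t)) * rng.standard_normal(shape)
    return x

rng = np.random.default_rng(0)
betas = np.full(50, 0.05)             # hypothetical constant diffusion rate, T = 50
x0 = rng.standard_normal((1000, 2))   # placeholder 2-D data
xs = forward_trajectory(x0, betas, gaussian_forward_step, rng)

# Hypothetical untrained reverse functions, indexed so that t = 1 uses betas[0]
f_mu = lambda x, t: np.sqrt(1.0 - betas[t - 1]) * x
f_sigma = lambda x, t: np.full_like(x, betas[t - 1])
samples = reverse_trajectory((1000, 2), len(betas), f_mu, f_sigma, rng)

# The binomial kernel keeps every state in {0, 1}
bits = forward_trajectory(np.ones(100), betas, binomial_forward_step, rng)[-1]
```

The placeholder reverse functions only illustrate the interface; in the model they are neural networks fit with the training objective.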

Training Objective

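The objective in training is to maximize the log likelihood of the data under the model

$$L = \int d\mathbf{x}^{(0)}\, q(\mathbf{x}^{(0)}) \log p(\mathbf{x}^{(0)})$$

Naively, $p(\mathbf{x}^{(0)})$ is intractable, as it involves evaluating the integral

$$p(\mathbf{x}^{(0)}) = \int d\mathbf{x}^{(1 \cdots T)}\, p(\mathbf{x}^{(0 \cdots T)})$$

However, the authors transform this integral into an average over forward trajectory samples by multiplying top and bottom by $q(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)})$:

$$p(\mathbf{x}^{(0)}) = \int d\mathbf{x}^{(1 \cdots T)}\, q(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)})\, p(\mathbf{x}^{(T)}) \prod_{t=1}^{T} \frac{p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})}{q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)})}$$

Although somewhat verbose, $p(\mathbf{x}^{(0)})$ is now in the form of an expectation over samples from the forward trajectory $q(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)})$, so we can produce sample-based estimates of it. Substituting this form of $p(\mathbf{x}^{(0)})$ into the expression for $L$ produces

$$L = \int d\mathbf{x}^{(0)}\, q(\mathbf{x}^{(0)}) \log \left[ \int d\mathbf{x}^{(1 \cdots T)}\, q(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)})\, p(\mathbf{x}^{(T)}) \prod_{t=1}^{T} \frac{p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})}{q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)})} \right]$$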
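The importance-sampling form of $p(\mathbf{x}^{(0)})$ can be checked numerically in a setting where the marginal is known exactly. The sketch below assumes a hypothetical one-dimensional toy model that is not from [1]: a Gaussian forward kernel with constant rate `beta`, a linear-Gaussian reverse model $p(x^{(t-1)} \mid x^{(t)}) = \mathcal{N}(a\,x^{(t)}, s^2)$, and prior $\mathcal{N}(0, 1)$. Averaging the weights over forward-trajectory samples should recover the model's marginal likelihood.

```python
import numpy as np

def log_normal_pdf(x, mean, var):
    return -0.5 * np.log(2 * np.pi * var) - (x - mean) ** 2 / (2 * var)

def log_weights(x0, beta, a, s2, T, n_samples, rng):
    # Sample x^(1..T) ~ q(x^(1..T) | x^(0)) and return the log importance weights
    # log[ p(x^(T)) prod_t p(x^(t-1) | x^(t)) / q(x^(t) | x^(t-1)) ]
    x_prev = np.full(n_samples, float(x0))
    log_w = np.zeros(n_samples)
    for _ in range(T):
        mean_q = np.sqrt(1.0 - beta) * x_prev
        x_t = mean_q + np.sqrt(beta) * rng.standard_normal(n_samples)
        log_w += log_normal_pdf(x_prev, a * x_t, s2) - log_normal_pdf(x_t, mean_q, beta)
        x_prev = x_t
    return log_w + log_normal_pdf(x_prev, 0.0, 1.0)  # prior p(x^(T)) = N(0, 1)

def exact_p_x0(x0, a, s2, T):
    # The toy reverse chain is linear-Gaussian, so its marginal p(x^(0)) is N(0, var)
    var = 1.0
    for _ in range(T):
        var = a * a * var + s2
    return np.exp(log_normal_pdf(x0, 0.0, var))

rng = np.random.default_rng(0)
weights = np.exp(log_weights(0.5, beta=0.3, a=0.8, s2=0.35, T=2, n_samples=200_000, rng=rng))
estimate = np.mean(weights)                     # sample-based estimate of p(x^(0) = 0.5)
reference = exact_p_x0(0.5, a=0.8, s2=0.35, T=2)
print(estimate, reference)                      # the two values should agree closely
```

When `a = sqrt(1 - beta)` and `s2 = beta`, the reverse model exactly inverts a forward chain started from $\mathcal{N}(0, 1)$, so every weight equals $p(\mathbf{x}^{(0)})$ and the estimate has zero variance; mismatched reverse kernels are what make the weights noisy.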

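Jensen's inequality[3] states that for a convex function $\varphi$, $\varphi(\mathbb{E}[X]) \le \mathbb{E}[\varphi(X)]$, and the inequality is reversed for a concave function. Noting that $\log$ is concave, and that the argument of the logarithm in $L$ is in the form of an expectation, the objective can be lower bounded by moving the logarithm inside the expectation, as is typical in other variational inference methods[4]:

$$L \ge K = \int d\mathbf{x}^{(0 \cdots T)}\, q(\mathbf{x}^{(0 \cdots T)}) \log \left[ p(\mathbf{x}^{(T)}) \prod_{t=1}^{T} \frac{p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})}{q(\mathbf{x}^{(t)} \mid \mathbf{x}^{(t-1)})} \right]$$

This lower bound on the log likelihood is known as the ELBO (Evidence Lower Bound).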
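Continuing with the same hypothetical 1-D toy model (redefined here so the snippet runs on its own), the bound $K$ averages the log weights, whereas $L$ takes the log of their average. By Jensen's inequality the former can never exceed the latter, and this holds exactly even for the empirical average over a finite set of samples.

```python
import numpy as np

def log_normal_pdf(x, mean, var):
    return -0.5 * np.log(2 * np.pi * var) - (x - mean) ** 2 / (2 * var)

def log_weights(x0, beta, a, s2, T, n_samples, rng):
    # Assumed toy model: q kernel N(sqrt(1 - beta) x, beta), reverse kernel N(a x, s2), prior N(0, 1)
    x_prev = np.full(n_samples, float(x0))
    log_w = np.zeros(n_samples)
    for _ in range(T):
        mean_q = np.sqrt(1.0 - beta) * x_prev
        x_t = mean_q + np.sqrt(beta) * rng.standard_normal(n_samples)
        log_w += log_normal_pdf(x_prev, a * x_t, s2) - log_normal_pdf(x_t, mean_q, beta)
        x_prev = x_t
    return log_w + log_normal_pdf(x_prev, 0.0, 1.0)

rng = np.random.default_rng(1)
log_w = log_weights(0.5, beta=0.3, a=0.8, s2=0.35, T=2, n_samples=200_000, rng=rng)
log_likelihood = np.log(np.mean(np.exp(log_w)))  # log of the expectation: estimates log p(x^(0))
elbo = np.mean(log_w)                            # expectation of the log: estimates the bound
gap = log_likelihood - elbo                      # non-negative by Jensen's inequality
print(log_likelihood, elbo, gap)
```

In expectation, the gap equals $D_{KL}\big(q(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)}) \,\|\, p(\mathbf{x}^{(1 \cdots T)} \mid \mathbf{x}^{(0)})\big)$, so it vanishes when the reverse model exactly inverts the forward chain.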

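The bound is now in the form of an expectation over samples from the forward trajectory. However, it can be reformulated from an expectation into a sum of KL divergence and entropy terms, which is advantageous because these are available in closed form for Gaussian distributions. The full simplification is described in the Appendix of [1], but a condensed version, found in the appendix of [5], is shown here for clarity. It is not important to know the exact steps used to arrive at the simplified loss, but this form of the loss function is the jumping-off point for the advances made in Denoising Diffusion Probabilistic Models. Written as a loss to be minimized (the negative of the bound $K$), it is

$$\mathcal{L} = \mathbb{E}_q\Big[ \underbrace{D_{KL}\big(q(\mathbf{x}^{(T)} \mid \mathbf{x}^{(0)}) \,\|\, p(\mathbf{x}^{(T)})\big)}_{L_T} + \sum_{t=2}^{T} \underbrace{D_{KL}\big(q(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)}, \mathbf{x}^{(0)}) \,\|\, p(\mathbf{x}^{(t-1)} \mid \mathbf{x}^{(t)})\big)}_{L_{t-1}} \underbrace{- \log p(\mathbf{x}^{(0)} \mid \mathbf{x}^{(1)})}_{L_0} \Big]$$

This loss is made up of two KL divergence terms and a cross entropy. Note that in the original derivation, the authors write KL divergences as a cross entropy minus an entropy, which is equivalent since $D_{KL}(q \,\|\, p) = H(q, p) - H(q)$.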
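For Gaussian trajectories each KL term is a divergence between Gaussians, so it can be evaluated in closed form. The sketch below (NumPy, hypothetical helper names) computes the KL divergence between diagonal Gaussians and confirms that it equals the cross entropy minus the entropy. As an illustrative $L_T$-style term, it uses the fact that a Gaussian forward trajectory gives $q(\mathbf{x}^{(T)} \mid \mathbf{x}^{(0)}) = \mathcal{N}(\sqrt{\bar\alpha_T}\,\mathbf{x}^{(0)}, (1 - \bar\alpha_T)\mathbf{I})$ with $\bar\alpha_T = \prod_t (1 - \beta_t)$; the values of `alpha_bar_T` and `x0` are made up.

```python
import numpy as np

def gaussian_kl(mu_q, var_q, mu_p, var_p):
    # D_KL(N(mu_q, diag var_q) || N(mu_p, diag var_p)), summed over dimensions
    return 0.5 * np.sum(np.log(var_p / var_q) + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)

def gaussian_entropy(var):
    # H(N(mu, diag var)) = 0.5 * sum(log(2 pi e var))
    return 0.5 * np.sum(np.log(2 * np.pi * np.e * var))

def gaussian_cross_entropy(mu_q, var_q, mu_p, var_p):
    # H(q, p) = -E_q[log p] for diagonal Gaussians
    return 0.5 * np.sum(np.log(2 * np.pi * var_p) + (var_q + (mu_q - mu_p) ** 2) / var_p)

alpha_bar_T = 0.05                      # hypothetical fraction of signal left after T steps
x0 = np.array([0.8, -0.3, 0.1])         # hypothetical data point
mu_q = np.sqrt(alpha_bar_T) * x0        # mean of q(x^(T) | x^(0))
var_q = np.full_like(x0, 1.0 - alpha_bar_T)
mu_p, var_p = np.zeros_like(x0), np.ones_like(x0)  # prior p(x^(T)) = N(0, I)

kl = gaussian_kl(mu_q, var_q, mu_p, var_p)
ce_minus_h = gaussian_cross_entropy(mu_q, var_q, mu_p, var_p) - gaussian_entropy(var_q)
print(kl, ce_minus_h)                   # identical up to floating-point rounding
```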

Learning Beta

One key difference between this first paper and later diffusion probabilistic models is that the authors learn the variance schedule $\beta_{1 \cdots T}$ of the forward trajectory. The authors fix $\beta_1$ to a small value, but otherwise optimize $\beta_{2 \cdots T}$ through gradient ascent on the learning objective $K$.

Experimental Results

The authors demonstrate the effectiveness of their method by fitting a diffusion probabilistic model to a toy 2D distribution, a binary heartbeat distribution, and a variety of image datasets.

Toy Example Results

The authors use a proof-of-concept "Swiss Roll" distribution to validate that diffusion models are capable of learning complex 2D distributions. For this problem, Gaussian forward and reverse trajectories were used. Figure 1 shows that the reverse trajectory closely matches the target distribution for this simple problem.
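To see why a Gaussian forward trajectory suits this problem, the sketch below diffuses a Swiss-roll-like point cloud until it is practically indistinguishable from $\mathcal{N}(\mathbf{0}, \mathbf{I})$. The generator, its scaling, and the 40-step schedule are assumptions chosen for illustration, not the settings used in [1].

```python
import numpy as np

def swiss_roll(n, rng):
    # Hypothetical 2-D Swiss roll: points on a spiral, scaled to roughly unit size, with small jitter
    t = 1.5 * np.pi * (1 + 2 * rng.random(n))
    x = np.stack([t * np.cos(t), t * np.sin(t)], axis=1) / 10.0
    return x + 0.02 * rng.standard_normal(x.shape)

def diffuse(x0, betas, rng):
    # Apply the Gaussian kernel N(x sqrt(1 - beta_t), I beta_t) once per diffusion rate
    x = x0
    for beta_t in betas:
        x = np.sqrt(1.0 - beta_t) * x + np.sqrt(beta_t) * rng.standard_normal(x.shape)
    return x

rng = np.random.default_rng(0)
x0 = swiss_roll(20_000, rng)
betas = np.linspace(1e-2, 0.3, 40)   # assumed schedule; prod(1 - beta_t) is far below 1%
xT = diffuse(x0, betas, rng)
print(xT.mean(axis=0), np.cov(xT, rowvar=False))  # close to zero mean and identity covariance
```

The spiral structure survives only through the factor $\sqrt{\prod_t (1-\beta_t)}$, which is why the final marginal is essentially the tractable prior.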

Binary Heartbeat Distribution

To illustrate that diffusion probabilistic models can be used to capture binary distributions, the authors fit a model to a synthetic heartbeat dataset. The heartbeat dataset consists of sequences of 20 binary variables, with ones every 5th step and zeros elsewhere. The diffusion probabilistic model was able to almost perfectly match the synthetic distribution.
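A sketch of the heartbeat data and its binomial forward trajectory, under stated assumptions: each sequence gets a random phase, and the diffusion rate follows $\beta_t = (T - t + 1)^{-1}$, so the final step resamples every bit from a fair coin. Both choices, and the function names, are hypothetical here.

```python
import numpy as np

def heartbeat_batch(n, rng, length=20, period=5):
    # Binary sequences of `length` bits with a 1 every `period` steps and 0 elsewhere.
    # Drawing a random phase per sequence is an assumption of this sketch.
    offsets = rng.integers(0, period, size=n)
    idx = np.arange(length)
    return ((idx[None, :] - offsets[:, None]) % period == 0).astype(float)

def binomial_diffuse(x0, T, rng):
    # Binomial kernel B(x^(t); x^(t-1)(1 - beta_t) + 0.5 beta_t) with the assumed schedule
    # beta_t = 1 / (T - t + 1); beta_T = 1, so the last step replaces every bit with a fair coin flip.
    x = x0
    for t in range(1, T + 1):
        beta_t = 1.0 / (T - t + 1)
        p_one = x * (1.0 - beta_t) + 0.5 * beta_t
        x = (rng.random(x.shape) < p_one).astype(float)
    return x

rng = np.random.default_rng(0)
x0 = heartbeat_batch(5000, rng)
xT = binomial_diffuse(x0, T=20, rng=rng)
print(x0[0], xT.mean())  # one clean heartbeat; the diffused bits average close to 0.5
```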

Image Datasets

The authors trained their method on a variety of image datasets, including MNIST digits[6], CIFAR-10[2], and Dead Leaf Images[7][8]. They parameterize their reverse trajectory functions $\mathbf{f}_\mu$ and $\mathbf{f}_\Sigma$ using a multi-scale convolutional neural network. On MNIST, they report performance superior to most of the compared generative models, as measured by a sample-based estimate of the log likelihood; among the models compared, only generative adversarial networks[9] perform better.

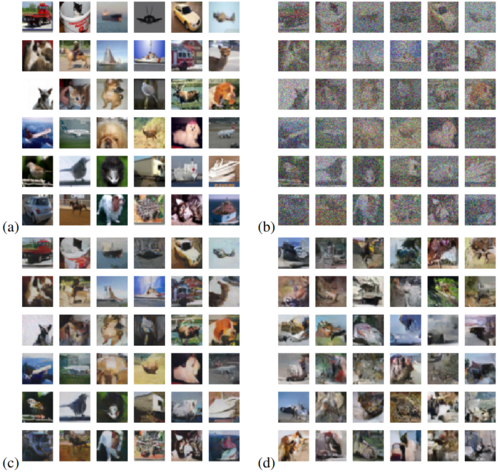

On CIFAR-10, they show qualitative samples demonstrating that the model is able to produce reasonable samples from the data distribution. These samples are shown in Figure 2. Examining the samples in Figure 2c, we can see that they somewhat match the original dataset images, but contain artifacts such as blurring and brightness shifts. The samples in Figure 2d do not seem to correspond to any of the 10 classes and are not easily recognizable.

Conclusion

In this first paper, the authors introduce a new class of generative models and demonstrate its effectiveness through several experiments. Although the model performs well on the toy Swiss Roll problem and MNIST, the CIFAR-10 samples show clear room for improvement.

Denoising Diffusion Probabilistic Models

In the first paper, the authors introduced diffusion probabilistic models and showed that they can generate image samples from various datasets, including CIFAR-10. However, the resulting sample quality is somewhat poor, especially for unconditional samples. In Denoising Diffusion Probabilistic Models[5], the authors seek to improve the quality of these samples. The authors select a specific parameterization of the forward and reverse processes, guided by a connection between diffusion probabilistic models and denoising score matching[10]. This section covers the modifications made to the previously introduced diffusion probabilistic model, and the experimental results reported in the work.

We follow the structure of the paper, covering the modifications in the context of the terms of the training loss introduced previously. Following [5], we write $\mathbf{x}_t$ for $\mathbf{x}^{(t)}$ and $p_\theta$ for the learned reverse trajectory.

Forward Trajectory and $L_T$

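Unlike the previous paper, the authors fix the forward trajectory by setting a linear schedule for $\beta_t$, increasing from $\beta_1 = 10^{-4}$ to $\beta_T = 0.02$ with $T = 1000$. With the diffusion rate fixed, the loss term $L_T$ is a constant: the forward trajectory $q$ has no learnable parameters and the prior $p(\mathbf{x}_T) = \mathcal{N}(\mathbf{x}_T; \mathbf{0}, \mathbf{I})$ is fixed, so $L_T$ can be ignored during training.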
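The linear schedule and the resulting size of $L_T$ can be checked in a few lines. This sketch assumes the closed-form marginal of the Gaussian forward trajectory, $q(\mathbf{x}_T \mid \mathbf{x}_0) = \mathcal{N}(\sqrt{\bar\alpha_T}\,\mathbf{x}_0, (1 - \bar\alpha_T)\mathbf{I})$ with $\bar\alpha_T = \prod_{t=1}^{T}(1 - \beta_t)$, and uses a random array as a hypothetical stand-in for an image scaled to $[-1, 1]$. Nothing in the computation depends on model parameters.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)    # linear schedule, beta_1 = 1e-4 to beta_T = 0.02
alpha_bars = np.cumprod(1.0 - betas)  # alpha_bar_t = prod_{s <= t} (1 - beta_s)

def l_T(x0):
    # D_KL(q(x_T | x_0) || N(0, I)) with q(x_T | x_0) = N(sqrt(alpha_bar_T) x_0, (1 - alpha_bar_T) I).
    # Only the fixed schedule and x_0 enter, so the term carries no gradient for the model.
    mean = np.sqrt(alpha_bars[-1]) * x0
    var = 1.0 - alpha_bars[-1]
    return 0.5 * np.sum(var + mean ** 2 - 1.0 - np.log(var))

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 32, 32))  # stand-in for a 32x32 RGB image scaled to [-1, 1]
print(alpha_bars[-1], l_T(x0))                  # both values are tiny
```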

Reverse Trajectory and $L_{1:T-1}$

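Next, the authors select the parameterization $p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t) = \mathcal{N}\left(\mathbf{x}_{t-1}; \boldsymbol{\mu}_\theta(\mathbf{x}_t, t), \boldsymbol{\Sigma}_\theta(\mathbf{x}_t, t)\right)$ where, as in [1], $\boldsymbol{\mu}_\theta$ is a neural network which outputs the mean of the distribution. The key difference between this parameterization and the one used in [1] is the covariance. Unlike [1], the authors here use a diagonal covariance matrix instead of a full covariance matrix. Further, they do not estimate the covariance at each timestep. Instead, they set $\boldsymbol{\Sigma}_\theta(\mathbf{x}_t, t) = \sigma_t^2 \mathbf{I}$ with the fixed value $\sigma_t^2 = \beta_t$, so that the variance matches the diffusion rate at every timestep.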

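The authors then use this parameterization to simplify $L_{t-1}$. Given $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) = \mathcal{N}\left(\mathbf{x}_{t-1}; \tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0), \tilde{\beta}_t \mathbf{I}\right)$, where $\tilde{\boldsymbol{\mu}}_t$ is the posterior mean of the forward trajectory, the KL divergence can be rewritten as

$$L_{t-1} = \mathbb{E}_q\left[ \frac{1}{2\sigma_t^2} \left\| \tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0) - \boldsymbol{\mu}_\theta(\mathbf{x}_t, t) \right\|^2 \right] + C$$

where $C$ is a constant with respect to the parameters of the reverse trajectory. From this formulation it is clear that to minimize $L_{t-1}$, the mean of the reverse trajectory must match the posterior mean of the forward trajectory. Since this posterior mean can be computed in closed form given $\mathbf{x}_0$ and $\mathbf{x}_t$,

$$\tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0) = \frac{\sqrt{\bar{\alpha}_{t-1}}\,\beta_t}{1 - \bar{\alpha}_t}\,\mathbf{x}_0 + \frac{\sqrt{\alpha_t}\,(1 - \bar{\alpha}_{t-1})}{1 - \bar{\alpha}_t}\,\mathbf{x}_t, \qquad \tilde{\beta}_t = \frac{1 - \bar{\alpha}_{t-1}}{1 - \bar{\alpha}_t}\,\beta_t$$

with $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$, $L_{t-1}$ can be optimized using this L2 error over random samples $\mathbf{x}_0 \sim q(\mathbf{x}_0)$ and $\mathbf{x}_t \sim q(\mathbf{x}_t \mid \mathbf{x}_0)$.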
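A minimal NumPy sketch of the forward posterior and the resulting $L_{t-1}$ term, assuming the linear schedule described above and $\sigma_t^2 = \beta_t$. The function names are hypothetical. As a sanity check, it recomputes the posterior in one dimension by directly conditioning the Gaussians $q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}(\sqrt{\alpha_t}\,\mathbf{x}_{t-1}, \beta_t\mathbf{I})$ and $q(\mathbf{x}_{t-1} \mid \mathbf{x}_0) = \mathcal{N}(\sqrt{\bar\alpha_{t-1}}\,\mathbf{x}_0, (1 - \bar\alpha_{t-1})\mathbf{I})$.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def posterior_mean_var(x0, xt, t):
    # q(x_{t-1} | x_t, x_0) = N(mu_tilde, beta_tilde I); t is 1-indexed and must satisfy t >= 2
    beta_t, alpha_t = betas[t - 1], alphas[t - 1]
    ab_t, ab_prev = alpha_bars[t - 1], alpha_bars[t - 2]
    mu_tilde = (np.sqrt(ab_prev) * beta_t / (1 - ab_t)) * x0 + (np.sqrt(alpha_t) * (1 - ab_prev) / (1 - ab_t)) * xt
    beta_tilde = (1 - ab_prev) / (1 - ab_t) * beta_t
    return mu_tilde, beta_tilde

def l_t_minus_1(mu_model, x0, xt, t):
    # L_{t-1} up to the constant C, using sigma_t^2 = beta_t
    mu_tilde, _ = posterior_mean_var(x0, xt, t)
    return np.sum((mu_tilde - mu_model) ** 2) / (2 * betas[t - 1])

t, x0, xt = 500, 0.3, -0.7
mu_tilde, beta_tilde = posterior_mean_var(x0, xt, t)

# Direct conditioning: q(x_{t-1} | x_t, x_0) is proportional to q(x_t | x_{t-1}) q(x_{t-1} | x_0)
precision = alphas[t - 1] / betas[t - 1] + 1.0 / (1.0 - alpha_bars[t - 2])
mu_direct = (np.sqrt(alphas[t - 1]) * xt / betas[t - 1]
             + np.sqrt(alpha_bars[t - 2]) * x0 / (1.0 - alpha_bars[t - 2])) / precision
```

The posterior variance $\tilde\beta_t$ is always slightly smaller than $\beta_t$, which is why [5] treats both as reasonable fixed choices for $\sigma_t^2$.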

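Inspired by [10], the authors provide an alternative objective which relates the parameterization of the reverse trajectory to a score matching objective over varying noise scales. Since the forward trajectory is a Markov chain with Gaussian transitions, $\mathbf{x}_t$ can be sampled directly from $\mathbf{x}_0$ in closed form:

$$q(\mathbf{x}_t \mid \mathbf{x}_0) = \mathcal{N}\left(\mathbf{x}_t; \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0, (1 - \bar{\alpha}_t)\mathbf{I}\right), \qquad \alpha_t = 1 - \beta_t, \quad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$$

Using the reparameterization trick, this gives $\mathbf{x}_t(\mathbf{x}_0, \boldsymbol{\epsilon}) = \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1 - \bar{\alpha}_t}\,\boldsymbol{\epsilon}$ with $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. By substituting this function of $\mathbf{x}_0$ and $\boldsymbol{\epsilon}$ into the $\tilde{\boldsymbol{\mu}}_t$ of the $L_{t-1}$ equation, expanding, and rearranging for $\boldsymbol{\mu}_\theta$, the authors find that a suitable choice of parameterization for $\boldsymbol{\mu}_\theta$ is

$$\boldsymbol{\mu}_\theta(\mathbf{x}_t, t) = \frac{1}{\sqrt{\alpha_t}}\left( \mathbf{x}_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\,\boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t) \right)$$

where $\boldsymbol{\epsilon}_\theta$ is a function approximator which estimates the noise $\boldsymbol{\epsilon}$ used to generate $\mathbf{x}_t$. Substituting this parameterization of $\boldsymbol{\mu}_\theta$, $L_{t-1}$ simplifies to

$$L_{t-1} - C = \mathbb{E}_{\mathbf{x}_0, \boldsymbol{\epsilon}}\left[ \frac{\beta_t^2}{2\sigma_t^2\, \alpha_t\, (1 - \bar{\alpha}_t)} \left\| \boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta\left(\sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1 - \bar{\alpha}_t}\,\boldsymbol{\epsilon}, t\right) \right\|^2 \right]$$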
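The link between the noise-prediction parameterization and the posterior mean can be verified numerically: if $\boldsymbol{\epsilon}_\theta$ returned the true noise $\boldsymbol{\epsilon}$, then $\boldsymbol{\mu}_\theta(\mathbf{x}_t, t)$ would coincide with $\tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0)$. The sketch below (hypothetical helper names, linear schedule assumed) checks this identity for one random draw.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def q_sample(x0, t, eps):
    # x_t(x_0, eps) = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps   (t is 1-indexed)
    return np.sqrt(alpha_bars[t - 1]) * x0 + np.sqrt(1 - alpha_bars[t - 1]) * eps

def mu_from_eps(xt, t, eps_pred):
    # mu_theta(x_t, t) = (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps_theta) / sqrt(alpha_t)
    return (xt - betas[t - 1] / np.sqrt(1 - alpha_bars[t - 1]) * eps_pred) / np.sqrt(alphas[t - 1])

def posterior_mean(x0, xt, t):
    # mu_tilde_t(x_t, x_0) of q(x_{t-1} | x_t, x_0), valid for t >= 2
    ab_t, ab_prev = alpha_bars[t - 1], alpha_bars[t - 2]
    return ((np.sqrt(ab_prev) * betas[t - 1] / (1 - ab_t)) * x0
            + (np.sqrt(alphas[t - 1]) * (1 - ab_prev) / (1 - ab_t)) * xt)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=16)
eps = rng.standard_normal(16)
t = 250
xt = q_sample(x0, t, eps)

# With a perfect noise predictor (eps_theta = eps), the two means agree
mu_eps = mu_from_eps(xt, t, eps)
mu_post = posterior_mean(x0, xt, t)
print(np.max(np.abs(mu_eps - mu_post)))
```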

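The authors empirically find that the weighting coefficient $\frac{\beta_t^2}{2\sigma_t^2 \alpha_t (1 - \bar{\alpha}_t)}$ impedes sample quality, so they train their model using a simplified objective that drops it and samples $t$ uniformly from $\{1, \ldots, T\}$:

$$L_{\text{simple}}(\theta) = \mathbb{E}_{t, \mathbf{x}_0, \boldsymbol{\epsilon}}\left[ \left\| \boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta\left(\sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1 - \bar{\alpha}_t}\,\boldsymbol{\epsilon}, t\right) \right\|^2 \right]$$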
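A sketch of one Monte Carlo estimate of $L_{\text{simple}}$, together with the per-timestep weight it discards. `eps_model` is a hypothetical placeholder for the network $\boldsymbol{\epsilon}_\theta$; here it always predicts zero, so the loss reduces to the average of $\boldsymbol{\epsilon}^2$, about 1. The squared norm is averaged over elements rather than summed, which only rescales the objective.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def l_simple(eps_model, x0_batch, rng):
    # One Monte Carlo estimate: t ~ Uniform{1, ..., T}, eps ~ N(0, I), x_t from the closed-form marginal
    n = x0_batch.shape[0]
    t = rng.integers(1, T + 1, size=n)
    ab = alpha_bars[t - 1].reshape(-1, *([1] * (x0_batch.ndim - 1)))  # broadcast over data dims
    eps = rng.standard_normal(x0_batch.shape)
    xt = np.sqrt(ab) * x0_batch + np.sqrt(1 - ab) * eps
    return np.mean((eps - eps_model(xt, t)) ** 2)

# Weight dropped by L_simple, with sigma_t^2 = beta_t: beta_t^2 / (2 sigma_t^2 alpha_t (1 - alpha_bar_t))
lambda_t = betas ** 2 / (2 * betas * alphas * (1 - alpha_bars))

rng = np.random.default_rng(0)
x0_batch = rng.uniform(-1.0, 1.0, size=(4096, 8))  # placeholder data
zero_model = lambda xt, t: np.zeros_like(xt)       # hypothetical untrained model that predicts 0
loss = l_simple(zero_model, x0_batch, rng)
print(loss, lambda_t[0], lambda_t[-1])
```

With $\sigma_t^2 = \beta_t$, the discarded weight is largest at small $t$ (about 0.5 at $t = 1$ versus about 0.01 at $t = T$ for this schedule), so dropping it shifts relative emphasis toward the noisier timesteps.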

Experimental Results

The authors evaluate their changes to the training objective and the reverse trajectory parameterization on a variety of image datasets. They also perform ablations with different parameterizations to highlight the benefits of the denoising parameterization. In all experiments, the authors use a U-Net similar to PixelCNN++[11] for the function approximator $\boldsymbol{\epsilon}_\theta$.

Image Datasets

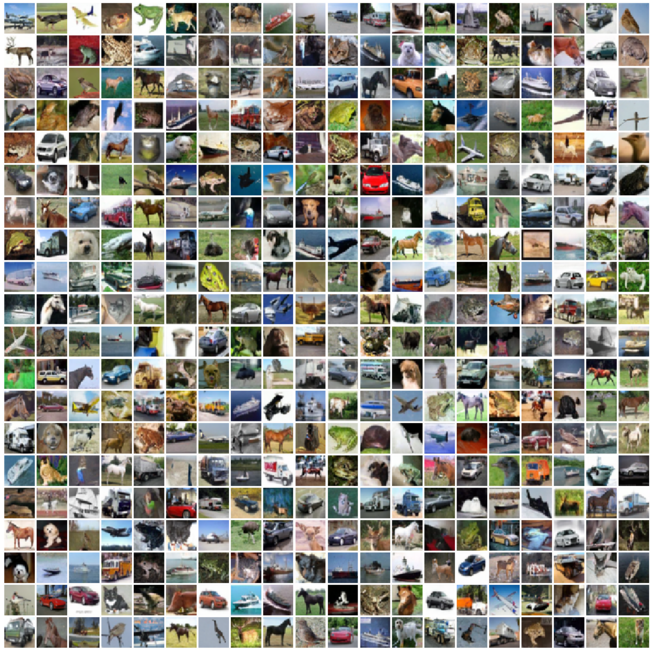

The authors present samples from CIFAR-10[2], CelebA-HQ[12], and LSUN[13]. CIFAR-10 samples are highlighted in Figure 3. Compared to the previous results in Figure 2d, these samples are of noticeably higher quality: they are much crisper, and most of them clearly correspond to CIFAR-10 classes. The authors back up these observations by comparing the negative log likelihood on CIFAR-10 to the value previously reported in the original diffusion probabilistic models paper. The negative log likelihoods, as well as FID and Inception scores, are shown in Table 1.

Ablation of Training Objectives

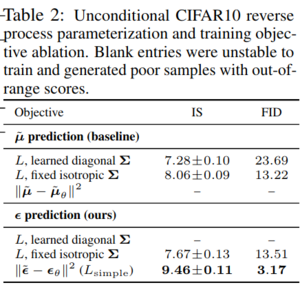

In addition to comparing the performance of their method with prior work, the authors also investigate their design choices through an ablation experiment. In the experiment, they compare models trained with fixed and learned reverse-process variances, along with models trained to predict the noise $\boldsymbol{\epsilon}$ (the score-matching parameterization) versus those which predict the posterior mean. The results of the ablation are in Table 2.

Conclusions

In the first paper, the authors introduce Diffusion Probabilistic Models and show experimental results on image generation. However, the method did not gain much traction, perhaps due to the density of the notation in the original paper or the somewhat uninspiring unconditional samples on the CIFAR-10 dataset. In Denoising Diffusion Probabilistic Models, Ho et al. build on the work introduced by Sohl-Dickstein et al. and show that, with some modifications, diffusion probabilistic models can produce exceptional image samples. Following these two works, there has been an explosion of generative modelling methods, many of which leverage diffusion probabilistic models and denoising diffusion probabilistic models.

Annotated Bibliography

- [1] Sohl-Dickstein, J., Weiss, E., Maheswaranathan, N., & Ganguli, S. (2015). Deep Unsupervised Learning using Nonequilibrium Thermodynamics. Proceedings of the 32nd International Conference on Machine Learning, in Proceedings of Machine Learning Research 37:2256-2265. Available from https://proceedings.mlr.press/v37/sohl-dickstein15.html.

- [2] Krizhevsky, A., & Hinton, G. (2009). Learning multiple layers of features from tiny images.

- [3] "Jensen's Inequality". Wikipedia. Retrieved Feb 13, 2023.

- [4] Blei, D. M., Kucukelbir, A., & McAuliffe, J. D. (2017). Variational inference: A review for statisticians. Journal of the American Statistical Association, 112(518), 859-877.

- [5] Ho, J., Jain, A., & Abbeel, P. (2020). Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, 6840-6851.

- [6] LeCun, Y. (1998). The MNIST database of handwritten digits. http://yann.lecun.com/exdb/mnist/.

- [7] Jeulin, D. (1997). Dead leaves models: from space tesselation to random functions. Proc. of the Symposium on the Advances in the Theory and Applications of Random Sets.

- [8] Lee, A., Mumford, D., & Huang, J. (2001). Occlusion models for natural images: A statistical study of a scale-invariant dead leaves model. International Journal of Computer Vision.

- [9] Goodfellow, I. J., Shlens, J., & Szegedy, C. (2014). Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572.

- [10] Song, Y., & Ermon, S. (2019). Generative modeling by estimating gradients of the data distribution. Advances in Neural Information Processing Systems, 32.

- [11] Salimans, T., Karpathy, A., Chen, X., & Kingma, D. P. (2017). PixelCNN++: Improving the PixelCNN with discretized logistic mixture likelihood and other modifications. In International Conference on Learning Representations.

- [12] Karras, T., Aila, T., Laine, S., & Lehtinen, J. (2017). Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196.

- [13] Yu, F., Zhang, Y., Song, S., Seff, A., & Xiao, J. (2015). LSUN: Construction of a large-scale image dataset using deep learning with humans in the loop. arXiv preprint arXiv:1506.03365.
