Course:CPSC522/Deep Q Network

Title

Prioritized Experience Replay

Principal Author: Obada Alhumsi

Papers discussed: 1) V. Mnih et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, Feb. 2015, doi: 10.1038/nature14236.

2) T. Schaul, J. Quan, I. Antonoglou, and D. Silver, “Prioritized Experience Replay,” ArXiv151105952 Cs, Feb. 2016.

Abstract

This paper explores the utilization of experience replay in deep reinforcement learning. It will focus on prioritized experience replay, an improved method of sampling experiences.

Builds on

Experience Replay is a sampling method used in Deep Q-Learning, a common learning algorithm in Deep Reinforcement Learning. Deep Q networks mix Q-learning and Deep Neural Networks to extend Q-learning to continous action states.

Related Pages

Experience Replay in general is closely related to hippocampal replay.

Content

Introduction

In the past few years, there has been many advancements in the field of Deep reinforcement learning. One of the first breakthroughs in the field was the introduction of Deep Q Networks (DQNs). A DQN represents an agent that learns to achieve specific goals from observations, interactions and rewards from the environment. DQNs utilize convolutional neural networks to apply Q learning in continuous state space environments, where the CNN approximates the optimal action-value function [1].

The Q learning method is augmented with experience replay, where the agent learns from past experiences randomly sampled from a uniform distribution [1]. Therefore, eliminating temporal correlations and stabilizing the training of the action-value function [2]. The advantages offered by experience replay has motivated the development of improved methods that use more advanced sampling methods. This paper will focus on one of these methods, Prioritized Experience Replay which enhanced the performance of original DQNs in single-agent environments.

Prioritized Experience replay

Background

Research into animal behaviour and neuro-structure has motivated many of the developments in reinforcement learning. Uniform experience replay and prioritized experience replay are both motivated by studies indicating the presence of experience replay in the brains of some of animals [1][2].

While Uniform experience replay samples from memories uniformly, prioritized experience replay prioritizes the sampling of some memories over others using methods inspired by evidence that suggests that memory sequences in rodents are repeated based upon their reward and TD-error [2]. In order to explain these methods, its important to briefly explain single-agent Deep Q Networks and the use of TD-error in these networks.

Single-Agent Deep Q Networks

Notation

Please note that the following notations are similar to the notations used in the paper by Foerster and his colleagues.

- defines the state of the environment at time t,

- defines the action taken by the agent at time t

- defines the reward received by the agent at time t

- defines the expected discounted reward which is equal to where is the discount factor that ranges from 0-1.

- The action is chosen based upon the policy which chooses what action to take given the state of the environment (Markov property).

- The action-value function Q of the policy is

- Finally, the Bellman optimality equation where defines the transition function.

In single-agent deep Q networks, the Q function is approximated by a convolutional neural network with parameters . The action taken in the environment is the action that maximizes the action-value function Q; this forms a problem as the agent will not be able to explore the environment and will always take actions that maximizes its rewards based upon previous experiences. Therefore -greedy policy is used to choose between taking a random action to explore the environment space or the greedy action outputted by the DQN. The action , observation , reward and the next observation are then stored in memory for training. The parameters of the neural network , are learned via sampling from the memory and minimizing the squared TD-error.

Authors Contribution

In this paper, the authors aim to improve the performance of DQNs by improving the experience replay module. Firstly the authors propose to prioritize the replay of experience based upon TD-error, where experiences that yield a higher TD-error are sampled more often. TD-error is a great variable to prioritize experiences by, as it is a indirect measure of the importance of each transition [2]. TD-error measures the difference between the estimated reward and reward received at a given time-step. In DQNs, , or the network output, estimates the reward, while represents the maximum reward achievable given the current state and action. An Increase in error represents a discrepancy between the expected reward and the achievable reward. Thus replaying these experiences is vital in order to learn actions that receive a reward close to the maximum expected reward. Moreover, a state with low TD-error can be thought of as a stimulus to the agent, as it is highly certain of future rewards from this action. In this paper, the authors save the TD-error with each transition and replay experiences with the highest absolute TD-error, preforming a Q-learning update proportional to the TD-error. The use of this method in simple environments decreased learning time as it decreased the amount of experiences replayed from memory. The authors term this method as ‘greedy TD-error prioritization’ as experiences with low TD-error are barley visited.

Nonetheless, there are many issues with using TD-error by itself. Firstly, a transition with low TD error might take extensive time before getting replayed. Secondly, in environments where the rewards are noisy, the prioritization of experiences might not be accurate, reducing the overall performance of the algorithm. Lastly, as only part of the experiences are replayed, this makes this method prone to overfitting. To solve these issues, the authors mix greedy TD-error prioritization with uniform sampling in order to preform stochastic sampling. In stocahstic sampling, each transition has a probability of being sampled shown below.

is the priority of transition and is the amount of prioritazation used. will always be more than 0, and when a=0 the equation represents the original uniform sampling method where the probability of sampling a transition will equal to one over the total number of transitions. Increasing will increase the rate at which higher priority transitions will be sampled. can be calculated using two methods that both ensure non-zero probability and a monotonic TD-error distribution, with the latter method being more robust to outliers.

Firstly, can be calculated using 'propotional prioritization' where and is a small postive constant that ensures never reaches zero. Secondly, we can calculate using a rank based prioritization where and is the rank of transition when transitions are ordered from highest TD-error to lowest TD-error. Based on scores from Attari games, Rank-based prioritization performs four times better than uniform experience replay while propotional prioritization performs five times better. Nonetheless, rank based prioritization is resistant to outliers as previously mentioned.

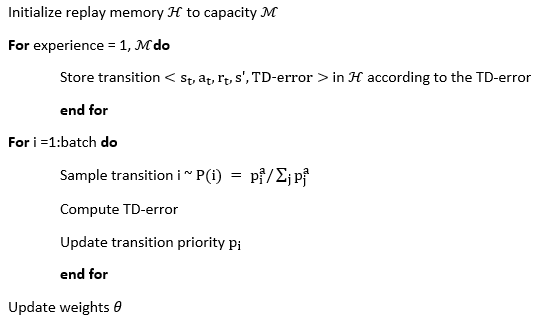

The full algorithm can be seen in [2], however its not posted on this page due to the lack of explicit consent from the authors. Nonetheless, the prioritized experience replay can be seen below.

-

Simple algorithm explaining the application of prioritized experience replay in DQNs. Note that the code is simplified for a broad audience.

Discussion

Deep Q Networks are known to be computationally expensive; they need to randomely sample experiences from a memory inorder to eliminate temporal correlations and stabilize the training process. In order to decrease training time and increase the overall performance of deep Q networks, the authors of [2] introduced prioritized experience replay. Prioritized experience replay samples experiences based upon their TD-error by either using proportional prioritization or rank based prioritization. In simple environments, using propotional prioritization yields better results than rank-based prioritization, however, in complex environments, rank-based prioritization performs better due to its resistance to outliers. In general, DQNs using prioritized experience replay have become the new state of the art in DQN architecture, while uniform experience replay has become redundant in literature.

Annotated Bibliography

[1] V. Mnih et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, Feb. 2015, doi: 10.1038/nature14236.

[2] T. Schaul, J. Quan, I. Antonoglou, and D. Silver, “Prioritized Experience Replay,” ArXiv151105952 Cs, Feb. 2016.

[3] J. Foerster et al., “Stabilising Experience Replay for Deep Multi-Agent Reinforcement Learning,” ArXiv170208887 Cs, May 2018.

To Add

Put links and content here to be added. This does not need to be organized, and will not be graded as part of the page. If you find something that might be useful for a page, feel free to put it here.

|

|