Course:CPSC522/Decision Networks

Decision networks

Decision networks (also called influence diagrams) are a graphical representation of decision problems by extending bayesian networks with decision making and utility.

Principal Author: Yan Zhao

Collaborators: Ricky Chen, Yu Yan

Abstract

This page introduces decision networks, and it covers motivations, basics, applications and possible extensions of decision networks.

Agents usually need to make decisions under uncertainties. We use decision networks to model and solve this kind of problems. Decision networks generalize bayesian network by adding decision nodes and a utility node. Simple "one-off" decision problems can be modeled by single-stage decision networks and general sequential decision problems can be modeled by (general) decision networks. Both can be solved efficiently with variable elimination. Decision networks are a very useful model , and there are various applications and extensions.

Builds on

Decision networks extend bayesian networks by adding decision nodes and a utility node. They can be efficiently solved using variable elimination.

This page is mostly built with materials from Artificial Intelligence: Foundations of Computational Agents unless specified.

Content

Motivation

Consider a cleaning robot in an environment with randomness (it may fall down, people may littering). It needs to make decisions such as where to go or whether to clean or not. So how does the robot make decisions to better achieve its task?

In general, there are many problems involving decision making under randomness. And the "final result" usually depends on both decisions and randomness. To model and solve this kind of problems, we can borrow the idea from bayesian networks. However, there is no decision making in bayesian networks. Also also the goal now is to compute "final result" rather than make probabilistic inference. To fill this gap, based on bayesian network we introduce decision nodes to model decision making and a utility node to model "final result". And the resulting model is called "decision network".

Some times decision making is "one-off", which means we only need to make decision before anything happens. But more general, the decision making can be "sequential", which mean we make decisions sequentially based on outcome of previous decisions. The "one-off" case seems to be simpler then sequential ones. Therefore, we will introduce single-stage decision networks to model and solve this kind of decision problems. And base on that, we will then introduce (general) decision networks to handle sequential cases.

Single-Stage Decision Networks

Single-stage decision networks model "one-off" decision problems and are a simplified version of (general) decision networks. The definition of (general) decision networks is built on single-stage decision networks.

Definition

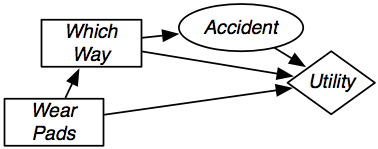

A single-stage decision network is a directed acyclic graph that has three kinds of nodes:

- Chance nodes (drawn as ovals) represent random variables. They are the same as the nodes in bayesian networks. Each chance node has a associated domain and a conditional probability distribution (given its parents). Both chance nodes and decision nodes can be parents of a chance node.

- Decision nodes (drawn as rectangles) represent decision variables. Each decision node has a associated domain and the agent has to choose a value for it. Only decision nodes can be parents of a decision node.

- A utility node (drawn as diamond) represents the utility. There is no domain associated with the utility node, but a utility function of the utility node's parents instead. Both chance nodes and decision nodes can be parents of the utility node.

For each node, we need to determine its parents (e.g. nodes that directly determine it). Then we should draw an directed edge from each parent to its child. Note that we should not have any cycles here. The resulting network (with domains of nodes, conditional probability tables, the utility function) is called singe-stage decision network.

Example

In this example, a delivery robot may run into accident during its delivery task. The robot can choose to wear a pad to mitigate the effects of the accident (but not to reduce the chance of accident). However, the robot becomes slower due to the extra weight of the pad. The robot can also choose to go a long way around to reduce the chance of accident. However, the robot becomes slower due to the extra path. The utility depends on the decisions (wear pad or not, long way or short way) of the robot and whether it runs into accident.

Solution Method

A policy is an assignment of a value to each decision variables. The expected value of the utility conditioned on a policy is the expected utility of a policy. An optimal policy is a policy with maximal expected utility. And the goal of the single-stage decision network is to find the optimal policy.

A standard method to find an optimal policy in single-stage decision networks is variable elimination, as shown in the algorithm below (from Artificial Intelligence: Foundations of Computational Agents).

1: Procedure OptimizeSSDN(DN): 2: Inputs 3: DN: a single stage decision network 4: Output 5: An optimal policy and its expected utility 6: Prune all nodes that are not ancestors of the utility node 7: Sum out all chance nodes 8: // at this stage there is a single factor F that was derived from utility 9: Let v be the maximum value in F 10: Let d be an assignment that gives the maximum value 11: return d, v

Decision Networks

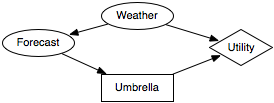

Single-stage decision network assumes that the agent makes decisions first and carries it out blindly. However, the agent's actions usually depend on its observations. This can be modeled as a sequential decision problem. And (general) decision network can be used to handle sequential decision problems.

Definition

Decision networks generalize single-stage decision networks by allowing both chance nodes and decision nodes to be parents of decision nodes.

Example

In this example, a agent needs to decide whether to take umbrella or not when it is possible to rain. The agent can't observe weather directly but can observe forecast instead. And the forecast probabilistically depends on weather. The agent's utility depends on the actual weather and whether it takes an umbrella.

No-Forgetting Property

A no-forgetting agent makes decisions in some order, and it remembers its previous decisions and any information related to its previous decisions. A no-forgetting decision network is a decision network where decisions nodes are in some order and, if decision node is before , then is a parent of and any parent of is also a parent of . Assume no-forgetting property holds, we have an efficient algorithm that can solve decision networks.

Solution Method

Again the goal of decision network is to find optimal policy. And with no-forgetting property, we can again use variable elimination to efficiently solve decision networks as follows (from Artificial Intelligence: Foundations of Computational Agents):

1: Procedure VE_DN(DN): 2: Inputs 3: DN: a decision network 4: Output 5: An optimal policy and its expected utility 6: Local 7: DFs: a set of decision functions, initially empty 8: Fs: a set of factors 9: Remove all variables that are not ancestors of the utility node 10: Create a factor in Fs for each conditional probability 11: Create a factor in Fs for the utility 12: while (there are decision nodes remaining) 13: Sum out each random variable that is not a parent of a decision node 14: // at this stage there is one decision node D that is in a factor F with a subset of its parents 15: Add argmaxDF to Fs. 16: Add argmaxDF to DFs. 17: Sum out all remaining random variables 18: Return DFs and the product of remaining factors

Decision Network in Action

Let's revisit the umbrella example in last section and see how to use decision networks in practice.

Build Decision Networks

Although decision network in the umbrella example is already given, it is still worth mentioning how to build decision networks in the first place. In general, the recipe for building decision networks is:

- Determine all nodes and their types.

- Find parents of all nodes, then connect from parents to children.

- Determine domain for all chance nodes and decision nodes.

- Determine conditional probability table for chance nodes.

- Determine utility function.

Now we follow the above recipe and build the decision network.

First we find all nodes and their types:

- Weather (chance node)

- Forecast (chance node)

- Umbrella (decision node)

- Utility (utility node)

Then we try to find parents of each node and connect all nodes:

- Weather: nothing can determine weather, so there is no parents of weather.

- Forecast: only weather can determine forecast, so weather is parent of forecast.

- Umbrella: the agent decide to take umbrella or not based on forecast, so forecast is parent of umbrella.

- Utility: the final utility depends on whether it rains and whether umbrella is taken, so both weather and umbrella are parents of utility.

Now we can connect all nodes and it should give the same network as shown in last section.

The next step is to determine domain for chance nodes and decision nodes:

- Weather: {rain, no rain}

- Forecast: {sunny, cloudy, rainy}

- Umbrella: {take it, leave it}

Then we find conditional probability table for chance nodes. In general, this should be based on historical data or learning algorithm. But we just show results here for simplicity.

P(Weather = rain) = 0.3

P(Forecast | Weather) is given by:

| Weather | Forecast | Probability |

|---|---|---|

| no rain | sunny | 0.7 |

| no rain | cloudy | 0.2 |

| no rain | rainy | 0.1 |

| rain | sunny | 0.15 |

| rain | cloudy | 0.25 |

| rain | rainy | 0.6 |

The last step is to find utility function. Again this should be based on historical data or learning algorithm. and we just show results here for simplicity.

Utility(Weather, Umbrella) is given by:

| Weather | Umbrella | Utility |

|---|---|---|

| no rain | take it | 20 |

| no rain | leave it | 100 |

| rain | take it | 70 |

| rain | leave it | 0 |

Now we have successfully build the decision network, the next step is to solve it using variable elimination.

Solve Decision Networks

We follow the algorithm VE_DN in last section to solve the decision network.

The initial factors are P(Weather), P(Forecast | Weather) and Utility(Weather, Umbrella).

We first eliminate Weather by summing out, resulting in a factor on Forecast and Umbrella:

| Forecast | Umbrella | Value |

|---|---|---|

| sunny | take it | 12.95 |

| sunny | leave it | 49 |

| cloudy | take it | 8.05 |

| cloudy | leave it | 14 |

| rainy | take it | 14 |

| rainy | leave it | 7 |

The we maximize over Umbrella for each value of Forecast, giving a optimal policy:

| Forecast | Umbrella |

|---|---|

| sunny | leave it |

| cloudy | leave it |

| rainy | take it |

So this means the agent should take umbrella when forecast says it is raining and leave it otherwise. It looks like a pretty reasonable decision!

Applications

There are various applications of decision networks, including but not limited to:

- Machine learning

- Robotics

- Intelligent systems

Extensions

Markov Decision Process

The decision networks are for finite-stage decision problems. However, agent usually does not know exactly how many decisions it needs to make. In fact, the decision problems can be infinite-stage (the decision process is forever) or indefinite-stage (the decision process will stop but no one knows when it will stop). The model this set of decision problems, we can combine decision network and Markov chain. The resulting model is Markov decision process (MDP).

Partially Observable Markov Decision Process

The decision network (and Markov decision process) also assumes that the outcome of each decision is fully observable. This is usually not the case due to noise or incomplete observation. To model this kind of decision problems, we can combine MDP and hidden Markov model (HMM). The resulting model is partially observable Markov decision process (POMDP).

Annotated Bibliography

Artificial Intelligence: Foundations of Computational Agents

|

|