Course:CPSC522/Concatenating Hyperspherical Distributions in Hyperspherical VAE

Concatenating Hyperspherical Distributions in Hyperspherical VAE

On this page, we examine the effect of extending the Hyperspherical VAE of Davidson et al. with a concatenation of multiple independent hyperspherical distributions, evaluated on single-cell RNA sequencing data. [1]

Principal Authors: Sarah Chen, Yilin Yang

Collaborators:

Abstract

As high-dimensional data becomes more prevalent, handling high dimensionality has become an increasingly popular research topic. While VAEs traditionally use a Gaussian distribution, Davidson et al. [2] considered using a von Mises-Fisher (vMF) distribution instead. Although they empirically showcase the power of such a distribution, they also note its limited performance in higher-dimensional spaces. On this page, we look at the paper "Increasing Expressivity of a Hyperspherical VAE" by Davidson et al. [1], which claims to alleviate the Hyperspherical VAE's problem with high-dimensional data by considering a product-space assumption. We first explain the improvement proposed by Davidson et al. [1] and then empirically evaluate its performance on two high-dimensional single-cell RNA-sequencing datasets in a high-dimensional latent space. In addition, we evaluate its performance in a low-dimensional latent space, which the original authors did not do.

Builds on

The wiki page made by Sarah Chen: Hyperspherical Variational Auto-Encoders for the background information.

The paper we will be focusing on: Increasing Expressivity of a Hyperspherical VAE [1]

Related Pages

Hyperspherical Variational Auto-Encoders by Davidson et al.[2]

Introduction

Motivation

Single-cell RNA-sequencing (scRNA-seq) data is a valuable resource for inferring new biological insights such as understanding cell relationships, as well as identifying and categorizing cell types. [3] Dimensionality reduction is a critical step for these downstream analyses. The hyperspherical variational auto-encoder, denoted as $\mathcal{S}$-VAE, has been demonstrated to be a powerful way of doing so. However, the experiments only went up to a latent dimension of 20, and it was noted that for a 20-sphere, the $\mathcal{S}$-VAE became more uncertain about the embeddings. This problem could worsen as the dimension increases, but a higher-dimensional latent space may be more desirable in some cases. We examine the concatenated $\mathcal{S}$-VAE as a potential solution to this problem.

Problem: Why Do Hyperspherical VAEs Suffer in Higher-Dimensional Space?

As Sarah Chen's Hyperspherical Variational Auto-Encoders provides a comprehensive background on Hyperspherical VAEs, we directly introduce the problem setting.

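Vanishing Surface Problem: For a hypersphere $\mathcal{S}^{m-1} \subset \mathbb{R}^{m}$, the surface area is described as:

$$S(m-1) = r^{m-1} \frac{2\pi^{m/2}}{\Gamma(m/2)}$$

where $m$ is the dimension and $r$ is the radius. We observe that $S(m-1) \to 0$ as $m \to \infty$ for fixed $r$; even for $m$ as small as 20, the surface area is already very small, which leads to limited expressiveness of Hyperspherical VAEs in higher dimensions.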
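To make the vanishing surface area concrete, the following is a minimal sketch that evaluates the standard surface-area formula for a hypersphere in log space. The function names and the convention that $\mathcal{S}^{m-1}$ sits in an ambient space $\mathbb{R}^m$ are assumptions made for this illustration, not notation taken from the paper.

```python
import math


def log_sphere_area(ambient_dim: int, radius: float = 1.0) -> float:
    """Log surface area of the hypersphere S^(m-1) embedded in R^m.

    Uses area = 2 * pi^(m/2) / Gamma(m/2) * r^(m-1), evaluated in log space
    so that large m does not overflow or underflow.
    """
    m = ambient_dim
    return (
        math.log(2.0)
        + 0.5 * m * math.log(math.pi)
        - math.lgamma(0.5 * m)
        + (m - 1) * math.log(radius)
    )


def sphere_area(ambient_dim: int, radius: float = 1.0) -> float:
    """Surface area of S^(m-1); may underflow to 0.0 for very large m."""
    return math.exp(log_sphere_area(ambient_dim, radius))


if __name__ == "__main__":
    # The unit-sphere area peaks at a small ambient dimension and then decays.
    for m in (2, 3, 7, 20, 50, 100):
        print(f"m = {m:3d}: area of unit S^{m - 1} = {sphere_area(m):.3e}")
```

For the unit sphere, the area grows for the first few dimensions and then shrinks rapidly, which is the behaviour the vanishing surface argument relies on.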

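Taking a look at the vMF distribution for $z, \mu \in \mathcal{S}^{m-1}$ and $\kappa \geq 0$, we have:

$$q(z \mid \mu, \kappa) = \mathcal{C}_m(\kappa) \exp\left(\kappa \mu^{\top} z\right)$$

where

$$\mathcal{C}_m(\kappa) = \frac{\kappa^{m/2-1}}{(2\pi)^{m/2}\, \mathcal{I}_{m/2-1}(\kappa)}$$

Here $\mathcal{C}_m(\kappa)$ is a normalization constant. It is hard to compute and has no closed form. $\mathcal{I}_v$ is the modified Bessel function of the first kind, where $v$ indicates the order. Davidson et al. [1] note that the scalar $\kappa$, also called the concentration parameter, is shared across all dimensions; the authors hypothesize that this is why the Hyperspherical VAE has limited expressiveness as the dimension increases.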
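As an illustration of why the normalization constant is awkward to evaluate, here is a small sketch that computes the log of the standard vMF normalizer using a truncated power series for the modified Bessel function. The helper names, the truncation at 200 terms, and the restriction to moderate $\kappa > 0$ are assumptions of this sketch; a production implementation would more likely rely on a numerical library.

```python
import math


def log_bessel_i(order: float, x: float, terms: int = 200) -> float:
    """log I_v(x) from the power series, summed in log space.

    I_v(x) = sum_k (x/2)^(2k+v) / (k! * Gamma(k+v+1)).
    Assumes x > 0 and moderate x so that `terms` terms are enough.
    """
    log_half_x = math.log(0.5 * x)
    log_terms = [
        (2 * k + order) * log_half_x
        - math.lgamma(k + 1)
        - math.lgamma(k + order + 1)
        for k in range(terms)
    ]
    peak = max(log_terms)
    return peak + math.log(sum(math.exp(t - peak) for t in log_terms))


def vmf_log_normalizer(ambient_dim: int, kappa: float) -> float:
    """log C_m(kappa) for a vMF distribution on S^(m-1) in R^m."""
    m = ambient_dim
    order = 0.5 * m - 1.0
    return (
        order * math.log(kappa)
        - 0.5 * m * math.log(2.0 * math.pi)
        - log_bessel_i(order, kappa)
    )


def vmf_log_density(z, mu, kappa: float) -> float:
    """log q(z | mu, kappa); z and mu are unit vectors of equal length."""
    dot = sum(a * b for a, b in zip(mu, z))
    return vmf_log_normalizer(len(mu), kappa) + kappa * dot
```

For $m = 3$ the normalizer has the closed form $\kappa / (4\pi \sinh \kappa)$, which gives a convenient sanity check for the series; in general dimensions no such shortcut is available.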

Proposed Method

Instead of using a single latent hyperspherical assumption, Davidson et al. [1] propose to use a concatenation of multiple independent hyperspherical distributions. This composition of hyperspherical distributions effectively bypasses the concentration parameter constraint by using a separate $\kappa_i$ for each hypersphere. Therefore we can rewrite the pdf as:

$$p(z^{1}, \dots, z^{k}) = \prod_{i=1}^{k} p(z^{i})$$

Here each hyperspherical random variable $z^{i}$ is sampled from a uniform distribution naturally defined on a hypersphere $\mathcal{S}^{m_i}$, and we assume that the $z^{i}$ are independent of one another. We also assume independence in the vMF posterior: $q(z^{1}, \dots, z^{k} \mid x) = \prod_{i=1}^{k} q(z^{i} \mid \mu_i, \kappa_i)$. Based on the derivations in Appendix A [1], we can simplify the KL divergence to:

$$KL\left(q(z^{1}, \dots, z^{k} \mid x) \,\|\, p(z^{1}, \dots, z^{k})\right) = \sum_{i=1}^{k} KL\left(q(z^{i} \mid \mu_i, \kappa_i) \,\|\, p(z^{i})\right)$$
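The additive structure of the KL term under the independence assumptions can be sketched as follows. This is an illustrative implementation rather than the authors' code: it uses the standard closed form for the KL divergence between a vMF distribution and the uniform distribution on the same hypersphere, and the function names and the series-based Bessel helper are assumptions of this sketch.

```python
import math


def log_bessel_i(order: float, x: float, terms: int = 200) -> float:
    """log I_v(x) via a truncated power series (assumes moderate x > 0)."""
    log_half_x = math.log(0.5 * x)
    log_terms = [
        (2 * k + order) * log_half_x
        - math.lgamma(k + 1)
        - math.lgamma(k + order + 1)
        for k in range(terms)
    ]
    peak = max(log_terms)
    return peak + math.log(sum(math.exp(t - peak) for t in log_terms))


def kl_vmf_uniform(ambient_dim: int, kappa: float) -> float:
    """KL( vMF(mu, kappa) || Uniform(S^(m-1)) ); independent of mu."""
    m = ambient_dim
    order = 0.5 * m - 1.0
    log_i_low = log_bessel_i(order, kappa)
    log_i_high = log_bessel_i(order + 1.0, kappa)
    # E[mu^T z] under the vMF is the Bessel ratio I_{m/2}(k) / I_{m/2-1}(k).
    mean_resultant = math.exp(log_i_high - log_i_low)
    log_normalizer = (
        order * math.log(kappa) - 0.5 * m * math.log(2.0 * math.pi) - log_i_low
    )
    # log of the surface area of the unit sphere, i.e. -log of the uniform pdf.
    log_area = math.log(2.0) + 0.5 * m * math.log(math.pi) - math.lgamma(0.5 * m)
    return kappa * mean_resultant + log_normalizer + log_area


def kl_product_space(ambient_dims, kappas) -> float:
    """Sum of per-sphere KL terms, one kappa per hyperspherical factor."""
    if len(ambient_dims) != len(kappas):
        raise ValueError("need exactly one kappa per hypersphere")
    return sum(kl_vmf_uniform(m, k) for m, k in zip(ambient_dims, kappas))
```

Because each factor contributes its own term, the encoder can assign a high concentration to one sub-sphere and a low concentration to another, which a single shared $\kappa$ cannot express.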

Degree of Freedom Trade-Off

The authors of the paper note that there is an inherent trade-off between the degrees of freedom (dof) and the concentration parameter $\kappa$. When we only have a single hypersphere, say $\mathcal{S}^{m}$, we can support up to $m$ independent feature dimensions, but each dimension has restricted flexibility because they all share a single concentration parameter $\kappa$. With the proposed method, we can instead use a Cartesian product of hyperspheres in the same ambient space, such as $\mathcal{S}^{m_1} \times \mathcal{S}^{m_2}$ with $m_1 + m_2 = m - 1$; we lose an independent dimension (or dof) in exchange for increased flexibility, as each hypersphere has its own $\kappa$. As each concentration parameter describes fewer dimensions, the result is greater flexibility.

This is in contrast to the Gaussian distribution used by standard VAEs, which already has a concentration parameter for each dimension in the form of the covariance matrix and therefore does not benefit from such a formulation. Because the vMF distribution has only a single concentration parameter for all dimensions, the proposed method should theoretically yield a more flexible model that is better suited to high-dimensional data, which is what we aim to explore.
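The bookkeeping behind this trade-off can be sketched in a few lines. The ambient dimension of 40 and the particular splits below are hypothetical values chosen only for illustration; they are not the configurations used in the experiments.

```python
from typing import Sequence, Tuple


def product_space_summary(ambient_dims: Sequence[int]) -> Tuple[int, int, int]:
    """Return (total ambient dimension, degrees of freedom, number of kappas).

    Each factor with ambient dimension d is a hypersphere with d - 1 degrees
    of freedom and one concentration parameter of its own.
    """
    if any(d < 2 for d in ambient_dims):
        raise ValueError("each factor needs an ambient dimension of at least 2")
    total_ambient = sum(ambient_dims)
    degrees_of_freedom = sum(d - 1 for d in ambient_dims)
    return total_ambient, degrees_of_freedom, len(ambient_dims)


if __name__ == "__main__":
    # Hypothetical splits of a 40-dimensional ambient space.
    for split in ([40], [20, 20], [10, 10, 10, 10], [30, 10]):
        ambient, dof, n_kappa = product_space_summary(split)
        print(f"{split}: ambient = {ambient}, dof = {dof}, kappas = {n_kappa}")
```

With the ambient dimension held fixed, every additional break costs one degree of freedom and buys one extra concentration parameter.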

Hypothesis

In the following section, we will empirically evaluate the claims made by the authors by comparing the performance of the $\mathcal{S}$-VAE and the concatenated $\mathcal{S}$-VAE on two scRNA-seq datasets, which we will introduce before moving to the experiment setup. We hypothesize that the concatenated $\mathcal{S}$-VAE will achieve better performance than the $\mathcal{S}$-VAE in high dimensions and similar performance in low dimensions.

Datasets

For our two scRNA-seq datasets, we use the data provided by the Cross-tissue Immune Cell Atlas, which also links the Science article that details the datasets. We only introduce the datasets briefly here, as most of the biological background is beyond the scope of this course.

cellxgene Matrix

A cellxgene matrix is a 2D matrix where each row represents one cell and each column represents a gene. The raw matrix contains counts: for a given cellxgene matrix $X$, the entry $X_{ij}$ contains the count of gene $j$ for cell $i$.

Myeloid Cells

The myeloid cells dataset contains a cellxgene matrix in which 8 different cell types were detected by CellTypist, a tool for automated cell type annotation by the same authors as the Cross-tissue Immune Cell Atlas. After manual curation, three additional cell types were detected, for a total of eleven types. Most of the individual cell types can be placed into one of three groups: macrophages, monocytes, and dendritic cells.

B-cells

The B-cell dataset contains a cellxgene matrix in which 6 different cell types were detected by CellTypist. After manual curation, 5 additional cell types were detected, for a total of eleven types. B-cells include the progenitors in the bone marrow, developmental states in lymphoid tissues, and terminally differentiated memory and plasma cell states in lymphoid and nonlymphoid tissues. Memory B-cells include ABCs, which are a specific type of memory B-cell. Germinal center (GC) B-cells, both I and II, form another group, as do plasmablasts and plasma cells.

Preprocessing

Prior to running the experiments, we applied standard preprocessing steps for scRNA-seq datasets. The datasets were first normalized by the total count, scaled by 10,000, and then log-transformed. The data was further scaled to have zero mean and unit variance. As the dimensionality of the datasets was very high, highly variable genes were selected, which reduced the dimensionality of each dataset to about 2000. Lastly, we accounted for batch effects, which are variation arising from technological noise. In this case, we regressed out batch effects from chemistry to remove variation between cells arising from the sequencing method used to obtain the scRNA-seq data.
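A rough NumPy sketch of these preprocessing steps is given below. It is not the pipeline used for the experiments: ranking genes by the variance of their log-normalized values is a simple stand-in for a proper highly-variable-gene method, gene selection is done before standardization because every gene has unit variance afterwards, and the function and parameter names are hypothetical.

```python
import numpy as np


def preprocess_counts(counts, target_sum=1e4, n_top_genes=2000):
    """Normalize, log-transform, select variable genes, and standardize.

    counts: (cells x genes) array of raw counts.
    Returns the processed matrix and the indices of the genes that were kept.
    """
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=1, keepdims=True)
    totals[totals == 0] = 1.0  # avoid dividing by zero for empty cells
    logged = np.log1p(counts / totals * target_sum)

    # Assumption: keep the genes with the largest variance after log-transform.
    n_keep = min(n_top_genes, logged.shape[1])
    keep = np.sort(np.argsort(logged.var(axis=0))[::-1][:n_keep])
    selected = logged[:, keep]

    mean = selected.mean(axis=0)
    std = selected.std(axis=0)
    std[std == 0] = 1.0  # leave constant genes at zero instead of NaN
    return (selected - mean) / std, keep


def regress_out_batch(values, batch_labels):
    """Remove the per-batch mean of each gene with a least-squares fit."""
    labels = np.asarray(batch_labels)
    batches = np.unique(labels)
    # One-hot design matrix: one column per batch (e.g. sequencing chemistry).
    design = (labels[:, None] == batches[None, :]).astype(float)
    coef, *_ = np.linalg.lstsq(design, values, rcond=None)
    return values - design @ coef
```

With a one-hot design matrix the regression reduces to subtracting each batch's mean expression per gene, which is the simplest form of regressing out a categorical covariate.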

Experiments

In their paper, the authors did not provide code for the proposed concatenated $\mathcal{S}$-VAE. For our experiments, we therefore contacted the original authors, modified their encoder structure for our purposes, and assumed a Student's t-distribution in the reconstruction term. [4] We used three layers with 128, 64, and 32 units for the encoder and two layers with 32 and 128 units for the decoder. For the $\mathcal{S}$-VAE, we used an Adam optimizer with a learning rate of . For the concatenated $\mathcal{S}$-VAE, we also used an Adam optimizer but with a learning rate of .

We tried out dimensions of , , and for the $\mathcal{S}$-VAE and the concatenated $\mathcal{S}$-VAE. For the concatenated $\mathcal{S}$-VAE, we tried increasing the degrees of freedom for and . For , we tried keeping the ambient space fixed and increasing the degrees of freedom. Both balanced and unbalanced breaks were tested. The specific breakdown of subspaces for the concatenated $\mathcal{S}$-VAE is outlined in the table below.

After the $\mathcal{S}$-VAE and concatenated $\mathcal{S}$-VAE are trained, the datasets are fed to the models and the encoder outputs the means of the posterior distribution. These means are used as low-dimensional cell embeddings for downstream analyses such as clustering.

We use k-means and k-NN to cluster and classify the cell types, and Adjusted Rand Index (ARI), Normalized Mutual Information (NMI) and Adjusted Mutual Information (AMI) for evaluation.

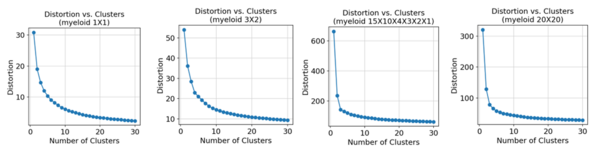

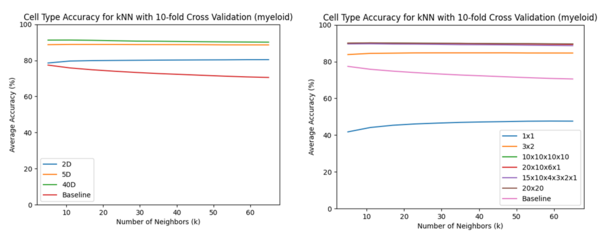

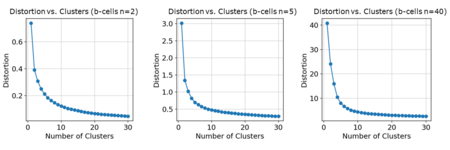

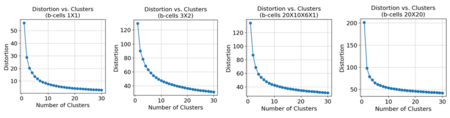

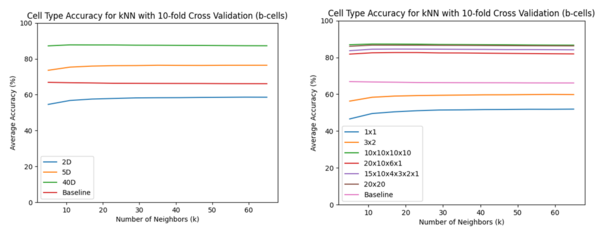

Elbow plots for k-means were used to choose the number of clusters. The x-axis is the number of clusters, and the y-axis is the distortion, which is the sum of squared distances of each data point to the centroid of the cluster it is assigned to. The $k$ that is chosen is the one that causes a "kink" in the elbow. For k-NN, we split the datasets into ten folds. Nine folds were used for training and one for testing. Various $k$ were used, which are shown on the x-axis, and the y-axis is the average accuracy over the test folds. The baseline used all of the highly variable genes kept after preprocessing of the datasets. The cell types were used as the class labels.
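The two evaluation procedures can be sketched with scikit-learn as follows. The synthetic blobs stand in for the learned cell embeddings, and the stratified, shuffled folds and fixed seeds are assumptions of this sketch rather than details confirmed by the experiments.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier


def elbow_distortions(embeddings, candidate_ks, seed=0):
    """Distortion (sum of squared distances to assigned centroids) per k."""
    return [
        KMeans(n_clusters=k, n_init=10, random_state=seed).fit(embeddings).inertia_
        for k in candidate_ks
    ]


def knn_cv_accuracy(embeddings, labels, n_neighbors, n_folds=10, seed=0):
    """Mean test accuracy of k-NN over n_folds cross-validation folds."""
    folds = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    model = KNeighborsClassifier(n_neighbors=n_neighbors)
    return float(cross_val_score(model, embeddings, labels, cv=folds).mean())


if __name__ == "__main__":
    # Synthetic stand-in for embeddings: four well-separated groups in 2D.
    centers = np.array([[0.0, 0.0], [6.0, 6.0], [0.0, 6.0], [6.0, 0.0]])
    embeddings, labels = make_blobs(
        n_samples=300, centers=centers, cluster_std=0.5, random_state=0
    )
    for k, distortion in zip(range(1, 9), elbow_distortions(embeddings, range(1, 9))):
        print(f"k = {k}: distortion = {distortion:.1f}")
    for k in (1, 5, 15):
        print(f"{k}-NN accuracy: {knn_cv_accuracy(embeddings, labels, k):.3f}")
```

On data like this the distortion drops sharply until the true number of groups is reached and flattens afterwards, which is the "kink" read off the elbow plot.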

ARI is the Rand Index, which measures the similarity between two cluster assignments, adjusted for chance. NMI is the normalized version of the Mutual Information between two clusterings, with 0 indicating no mutual information and 1 indicating perfect correlation. AMI is the Mutual Information score adjusted for chance. It should be noted that all three evaluation metrics are invariant to the actual labels, and any permutation of class labels will not change the score.

Further details can be found in the documentation of sklearn.metrics.
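The label-permutation invariance of the three metrics can be checked directly with `sklearn.metrics`. The toy label vectors below are made up for illustration, and the helper name is hypothetical.

```python
from sklearn.metrics import (
    adjusted_mutual_info_score,
    adjusted_rand_score,
    normalized_mutual_info_score,
)


def clustering_scores(true_labels, predicted_labels):
    """ARI, NMI, and AMI between a reference labelling and a clustering."""
    return {
        "ARI": adjusted_rand_score(true_labels, predicted_labels),
        "NMI": normalized_mutual_info_score(true_labels, predicted_labels),
        "AMI": adjusted_mutual_info_score(true_labels, predicted_labels),
    }


if __name__ == "__main__":
    true_labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]
    # Same partition as true_labels, but with the cluster names permuted.
    permuted = [1, 1, 1, 2, 2, 2, 0, 0, 0]
    # One cell assigned to the wrong cluster.
    imperfect = [0, 0, 1, 1, 1, 1, 2, 2, 2]
    print("permuted :", clustering_scores(true_labels, permuted))
    print("imperfect:", clustering_scores(true_labels, imperfect))
```

Because only the partition matters, cluster indices returned by k-means never need to be matched to cell-type names before scoring.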

Results

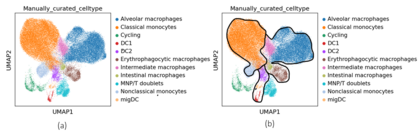

In the figures, the product space is denoted as $[\mathcal{S}_{d}]_{\times n}$, where $d$ is the dimension of the subspace and there are $n$ subspaces. For example, $[\mathcal{S}_{10}]_{\times 2}$ would be $\mathcal{S}_{10} \times \mathcal{S}_{10}$. Clusterings are shown for the product space with the best performance and the product space with the worst performance. Performance was measured by the clustering metrics, with better-performing product spaces having scores closer to 1.

Myeloid Cells

Inferring the number of cell types

Regardless of the dimension, when using the $\mathcal{S}$-VAE, choosing the number of cell types based on where a "kink" appeared in the elbow would underestimate it. The "kink" typically appeared around to while the true number of types was 11.

For the concatenated $\mathcal{S}$-VAE, the number of cell types was also underestimated. For , the underestimation got worse as the "kink" consistently appeared around to although the "kink" was smoother for .

k-means Clustering

$\mathcal{S}$-VAE

When comparing the clusterings with $k$ chosen from the elbow plot, the results were similar. The monocytes and macrophages tended to get grouped together whereas the dendritic cells and doublets were more mixed with one another. With though, there was a noticeable improvement when using clustering with as some of the nonclassical monocytes began to form a separate cluster from the classical monocytes and the doublets formed their own cluster. It was a similar case for with .

Concatenated $\mathcal{S}$-VAE

While the $\mathcal{S}$-VAE with had a decent performance, the concatenated one performed very poorly as the cell types were all mixed together in the clusters. For , the monocytes were split more distinctly between classical and nonclassical, but the split was not entirely correct as some classical monocytes were included with the nonclassical ones. For , performance was similar to using the non-concatenated $\mathcal{S}$-VAE. Shown in the figure below are the clusterings for the worst () and best () product space with . There was not much of a difference except with the intermediate macrophages. When , the ARI, AMI, and NMI scores were 0.69, 0.61, and 0.61 for the worst-performing product space and 0.75, 0.66, and 0.66 for the best-performing product space, for the clusterings where $k$ was chosen from the elbow plot. In all cases, the concatenated $\mathcal{S}$-VAE failed to distinguish between the dendritic cells.

k-NN Classification

The $\mathcal{S}$-VAE worked better for the lower dimensions, particularly for as the accuracy was much higher. The performance of the $\mathcal{S}$-VAE and the concatenated $\mathcal{S}$-VAE, regardless of the product space used, was similar for . These results agree with the performance of the clusterings.

B-cells

Inferring the Number of Cell Types

For the $\mathcal{S}$-VAE, using an elbow plot to choose the number of clusters for the B-cells would also cause the number of cell types to be underestimated. The true number was 11 but the "kink" appeared around to .

Using the concatenated $\mathcal{S}$-VAE, the results were similar to using the $\mathcal{S}$-VAE. As with the myeloid cells, neither the number of breaks nor whether the breaks were balanced or unbalanced affected the elbow plot for .

k-Means Clustering

$\mathcal{S}$-VAE

Unlike the myeloid cells, the clusterings across all dimensions were poor. It was especially bad for since the cells were all essentially mixed no matter the type. Performance improved slightly for and with seeming better since the plasma cells formed their own cluster. However, no matter the dimension, clusters tended to be split into two large groups without much granularity since types with few cells, such as doublets and ABCs, would get clustered with other cells.

Concatenated $\mathcal{S}$-VAE

The concatenated $\mathcal{S}$-VAE failed to properly cluster the cell types for and whether was set using the elbow plot or set to . The one exception was the plasmablasts for and . The $\mathcal{S}$-VAE had better performance overall for though. When , the ARI, AMI, and NMI scores were 0.15, 0.27, and 0.27 for the worst-performing product space and 0.30, 0.39, and 0.39 for the best-performing product space, for the clusterings where $k$ was chosen from the elbow plot. For and , using helped separate the three rightmost cell types: T/B doublets, plasmablasts, and plasma cells. In contrast, for and , using improved the separation of the naive B-cells and memory B-cells. This seemed like a slight improvement over just the $\mathcal{S}$-VAE, but the clusterings still had some other cells mixed in and smaller clusters were not detected.

k-NN Classification Accuracy

Notably, the concatenated $\mathcal{S}$-VAE performed worse for the low dimensions. For , the product space did affect the accuracies. Uneven breaks had lower accuracies while even breaks achieved similar results to the un-concatenated $\mathcal{S}$-VAE. There also seemed to be a small preference for a higher number of breaks as (purple) is above (red) and (green) is very slightly above (brown).

Discussion

Performance in High Dimensions

The concatenated $\mathcal{S}$-VAE achieved only a similar performance to the $\mathcal{S}$-VAE. Noting that results of the $\mathcal{S}$-VAE at were not much different than at , it may be the case that the concatenated $\mathcal{S}$-VAE will show its benefits most when the dimension of the latent space starts to become so high that it negatively impacts the performance of the $\mathcal{S}$-VAE.

Running the concatenated $\mathcal{S}$-VAE for more epochs may also eventually result in better performance than the $\mathcal{S}$-VAE, since the concatenated $\mathcal{S}$-VAE was only run for 10 epochs and the $\mathcal{S}$-VAE for about 3 epochs. In contrast, the models in scPhere were run for 500 epochs for a dataset of this size. We used a low number of epochs because we encountered NaN values beyond that point, even after decreasing the learning rate; lowering it helped partially, as the issue was worse at higher learning rates. Additionally, more hyperparameter tuning may be beneficial. For example, we used the same default architecture as scPhere, so it may be worth trying deeper networks and adjusting the number of units per layer.

Regarding how the space should be broken up and the number of subspaces, we found a slight preference for balanced breaks. The authors mention that this is because, if the breaks are uneven, the model could end up ignoring some subspace channels, which is problematic if it ignores higher-dimensional subspaces in favour of lower ones. No preference could be seen for the number of breaks, possibly because the minimum and maximum numbers were 2 and 6, so the difference was not large enough to have much of an impact.

Performance in Low Dimensions

For the lower-dimensional setting, we followed the analysis conducted by Davidson et al. [1] and tried both models on MNIST with . The concatenated $\mathcal{S}$-VAE performed slightly worse by all metrics, which might indicate that it is not suitable for lower dimensions. As for the intuition behind this result, we think it may come from tuning or the dof trade-off. Although we may have chosen a poor set of hyperparameters, we think it is more likely that the flexibility trade-off is adverse to the concatenated $\mathcal{S}$-VAE, as we likely do not need more concentration parameters for such a low-dimensional task.

Performance on the Myeloid Dataset vs. the B-cells Dataset

Using the embeddings from both models resulted in clusterings of the major groups with $k$ chosen from the elbow plot. The granularity of the cell types was more variable. Specifically, performance was consistently better for the myeloid dataset than the B-cells dataset. This may be because cell types within a subcategory of the myeloid dataset are more distinguishable from one another, as illustrated by the intermediate macrophages tending to be separated from the alveolar macrophages. The B-cell types may be more similar to one another, such as the memory B-cells and ABCs or the plasma cells and plasmablasts. There was a similar problem with the monocytes in the myeloid dataset. The cell types of the B-cells dataset are also determined by the maturity level of the B-cell until it eventually differentiates into a memory B-cell or plasma cell. Plasma cells may be more clearly distinguishable, whereas the rest are more similar since they are essentially the same cell at different points in time. As the B-cells dataset is more complicated, the number of epochs may have been too low for it, as mentioned in Performance in High Dimensions. The good performance of the $\mathcal{S}$-VAE on the myeloid dataset, though, further suggests the appropriateness of the $\mathcal{S}$-VAE for scRNA-seq data.

Conclusion

Overall, the experiments do not support our hypothesis. The $\mathcal{S}$-VAE performed better than the concatenated $\mathcal{S}$-VAE in low dimensions and the concatenated $\mathcal{S}$-VAE did not demonstrate an improvement in performance for . The steps discussed above might resolve this, but with a high latent dimension it is difficult to say, without further experimentation, whether the issue lies with the concatenated $\mathcal{S}$-VAE itself or with hyperparameter tuning. In the meantime, it seems more appropriate to use the $\mathcal{S}$-VAE for scRNA-seq data.

Annotated Bibliography

- [1] Davidson, Tim; Tomczak, Jakub; Gavves, Efstratios (2019). "Increasing Expressivity of a Hyperspherical VAE" (PDF). 4th workshop on Bayesian Deep Learning (NeurIPS 2019) – via arXiv.

- [2] Davidson, Tim; Falorsi, Luca; De Cao, Nicola; Kipf, Thomas; Tomczak, Jakub. "Hyperspherical Variational Auto-Encoders" (PDF). 34th Conference on Uncertainty in Artificial Intelligence – via arXiv.

- [3] Ding, Jiarui; Regev, Aviv (2021). "Deep generative model embeddings of single-cell RNA-seq profiles on hyperspheres and hyperbolic spaces". Nat Commun.

- [4] Ding, Jiarui; Condon, Anne; Shah, Sohrab. "Interpretable dimensionality reduction of single cell transcriptome data with deep generative models". Nat Commun.
