Course:CPSC522/Bounded Rationality

Bounded Rationality

Bounded rationality is the idea that the rationality of a realistic agent is limited by resources such as time, access to information, capacity to store information, and processing power, and so cannot be absolute in the way rationality is often defined.

Principal Author: Julin Song

Collaborators: Ainaz Hajimoradlou, Bronson Bouchard, Gudbrand Tandberg

Abstract

Bounded rationality is the idea that a realistic agent can only be rational to a certain extent. Deciding how far that extent should go, and then how to reach that point, poses interesting problems. This page introduces bounded rationality as distinguished from unbounded rationality, with a little history and a brief discussion of the concept's motivation in cognitive psychology, then gives overviews of some of bounded rationality's manifestations in computing.

Builds on

Agents modeled with unbounded rationality act to maximize utility, while agents modeled with bounded rationality can only aim for some satisfactory amount of utility. This topic also builds on deviation from strict logic.

Related Pages

Bounded rationality has applications in reinforcement learning and multi-agent systems.

Game theory typically assumes unbounded rationality.

Another related concept is rational ignorance.

Content

Compare to unbounded rationality

Before bounded rationality there was unbounded rationality. It was referred to simply as "rationality" since it was the only kind; it was an absolute, and its opposite was irrationality. The preferences of a rational agent are expected to adhere to the axiom of transitivity[3]. Neoclassical economics, following the expected utility theory of von Neumann and Morgenstern, assumes that groups of agents such as the whole market have approximately unbounded rationality, so that a generic agent is able to calculate the sum of his[note 1] expected future utility for each action. And because they are able to, those agents are assumed to always try to maximize utility. This relatively simple model of the world is still taught today and often used on real problems.
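As a rough illustration of the unbounded model described above, the following Python sketch computes expected utility for a few actions, picks the maximizer, and checks transitivity of the induced preferences. The action names, probabilities and utilities are invented for the example, and the helper functions are hypothetical, not taken from any cited work.

```python
from itertools import permutations

def expected_utility(outcomes):
    """Probability-weighted sum of utilities for one action."""
    return sum(p * u for p, u in outcomes)

def best_action(actions):
    """Return the name of the action with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))

def is_transitive(prefers, items):
    """True if, whenever a > b and b > c, it also holds that a > c."""
    return all(
        prefers(a, c)
        for a, b, c in permutations(items, 3)
        if prefers(a, b) and prefers(b, c)
    )

# Hypothetical actions: each maps to a list of (probability, utility) pairs.
actions = {
    "safe": [(1.0, 5.0)],
    "risky": [(0.5, 12.0), (0.5, 0.0)],
    "bad": [(0.9, 1.0), (0.1, 20.0)],
}

choice = best_action(actions)

def prefers(a, b):
    """Strict preference induced by expected utility."""
    return expected_utility(actions[a]) > expected_utility(actions[b])

transitive = is_transitive(prefers, list(actions))

# A cyclic preference (a > b, b > c, c > a) violates the axiom.
cycle = {("a", "b"), ("b", "c"), ("c", "a")}
cyclic_ok = is_transitive(lambda x, y: (x, y) in cycle, "abc")
```

Preferences derived from a single numeric utility are always transitive, which is why the cyclic relation at the end has to be written out by hand. Note that the agent here evaluates every outcome of every action, which is exactly the cost that bounded rationality calls into question.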

In the 1960s, bounded rationality appeared as a reaction to those sometimes-oversimplified models. It may be too hard to process expected utility to the end of time; there may be more variables than the agent could store even in a limited timeframe; the search space of possible actions may be too large.

"No one wants to do all that!" the boundedly rational agent complains.

- Note 1: Before political activists claimed personal pronouns, a person of unknown gender was referred to as "he".

Bounded rationality

Herbert Simon first used the words "bounded rationality" in Models of Man: Social and Rational (1957). He suggests that a solution to the problem of limited rationality in agents, that is, a solution to the impossibility of actually maximizing utility, is to simply go for a satisfactory result - he calls this satisficing, a portmanteau of "satisfy" and "suffice". With this assumption, sometimes a boundedly rational model is more tractable than an unboundedly rational model[4].
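The contrast between maximizing and satisficing can be sketched in a few lines of Python. This is a minimal illustration under assumed inputs: the list of offers, the identity utility function and the aspiration level of 8 are all made up for the example, and `maximize` and `satisfice` are hypothetical helper names.

```python
def maximize(options, utility):
    """Unbounded search: evaluate every option and return the best one."""
    best = None
    evaluated = 0
    for option in options:
        evaluated += 1
        if best is None or utility(option) > utility(best):
            best = option
    return best, evaluated

def satisfice(options, utility, aspiration):
    """Stop at the first option whose utility meets the aspiration level.

    If no option is good enough, fall back to the best one seen.
    """
    best = None
    evaluated = 0
    for option in options:
        evaluated += 1
        if best is None or utility(option) > utility(best):
            best = option
        if utility(option) >= aspiration:
            return option, evaluated
    return best, evaluated

# Hypothetical sequence of offers, examined in order.
offers = [3, 7, 4, 9, 8, 10, 2]

def utility(x):
    """Assumed utility: the offer's face value."""
    return x

best, n_all = maximize(offers, utility)
good_enough, n_some = satisfice(offers, utility, aspiration=8)
```

Here the satisficer settles for a slightly worse option than the maximizer but inspects fewer offers. How to set the aspiration level is itself a design decision that this sketch leaves open.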

Motivation in psychology

Humans are often thought of as rational agents. One does not often mention the limitations of that statement and the boundaries beyond which it would not be true. But if humans were always and wholly rational, self-sabotage would not exist. The mind plays games - some sensory, like in Figure 1; others on a higher level, like loss aversion, context dependence of perception, and the framing effect. A human can only assimilate a fraction of the potential stimuli in the environment at each point in time; for perception, this is what makes retina displays and oil painting work. Humans are physically wired to notice change more than static stimuli[5] and to extract the signal amid noise[6].

The preceding paragraph discussed the inevitable boundedness of human rationality. Often, even decisions that could be made logically do not go through a logical thinking process; examples include muscle memory and taking a lethal impact for a loved one. This kind of bounded rationality may be caused by a competition between the unconscious and conscious systems in the brain[7]. The existence of two systems in the brain is known as Dual Process Theory and has been supported by psychological experiments, fMRI and spectroscopy.

Theories of inattention and selective attention[8][9][10] suggest that bounding the number of variables in a decision, and thus the problem size, to what is necessary has evolutionary benefits. Since information acquisition is costly, variables in the "gap" - not important enough to track but still relevant - are often modeled as unknown but fixed values.

Computing

Sparsity

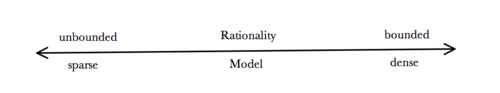

The bounded rationality assumption goes naturally with sparse models. Imagine an agent that knows that a very large number of dimensions have real effects on a problem. Aiming for sparsity, the agent operates on a model that gives most dimensions a weight of 0, keeping the model realistic yet tractable. In finding an appropriate model, the agent also needs to avoid Herbert Simon's problem of infinite regress[11], i.e., spending more resources on finding the optimal simplification of the problem than on solving the original problem. Figure 3 shows one algorithm for finding a sparse model, which (1) looks for a computing model that minimizes the difference between its value and that of the true model under a given action, with a penalty for each additional non-zero dimension, while (2) looking for the action that maximizes the value function under a given model.
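The two-step idea can be illustrated with a deliberately simplified Python sketch. It is not the algorithm from the figure or from the cited paper: it assumes a quadratic value function, independent dimensions, and an L1-style penalty, for which the penalized fit in step 1 reduces to soft-thresholding each weight. The weights, inputs, penalty and candidate actions are invented for the example.

```python
def soft_threshold(w, penalty):
    """Minimizer of 0.5*(m - w)**2 + penalty*abs(m): shrink w toward zero."""
    if w > penalty:
        return w - penalty
    if w < -penalty:
        return w + penalty
    return 0.0  # small weights are dropped from the model entirely

def sparse_model(true_weights, penalty):
    """Step 1: a per-dimension penalized fit to the true weights."""
    return [soft_threshold(w, penalty) for w in true_weights]

def value(action, weights, x):
    """Assumed quadratic value: best when action equals the weighted sum of x."""
    target = sum(w * xi for w, xi in zip(weights, x))
    return -0.5 * (action - target) ** 2

def best_action(weights, x, candidates):
    """Step 2: choose the candidate action with the highest value under a model."""
    return max(candidates, key=lambda a: value(a, weights, x))

# Hypothetical problem: two dimensions matter a lot, three barely matter.
true_weights = [2.0, -1.5, 0.05, 0.02, -0.03]
x = [1.0, 1.0, 1.0, 1.0, 1.0]
candidates = [i / 10 for i in range(-20, 21)]

m = sparse_model(true_weights, penalty=0.1)
nonzero = sum(1 for w in m if w != 0.0)
a_sparse = best_action(m, x, candidates)
a_true = best_action(true_weights, x, candidates)
```

In this toy setting the sparse model keeps only two of the five dimensions and still selects the same action from the candidate grid as the full model. With a larger penalty or a finer grid the two choices would start to diverge, which is the trade-off the penalty controls.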

In reality, for both human and computational agents, the retrieval of information is very costly, not least because there is limited space within the agent and within the environment for information storage. Consequently, the sparse model's variables are often those that carry local or recent information. For pyramidal problems (that is, problems that branch into smaller problems, though not necessarily recursively), there is a tendency to resolve uncertainties early lest the problem size explode.[12]

Emotion

Attention needs to be conserved. Are there ways attention is biologically constrained, other than by vicinity of memory? Emotion is a likely candidate[13]. A case in point is fear: there are obvious evolutionary benefits in the way this emotion in particular focuses attention. Although the intricacies of how emotion affects the decision making of human agents are not clear, many studies in psychology and economics incorporate their own versions of emotion. The main flavors of emotion modeling are categorical, with various lists, and real-valued, with some remix of valence, arousal and dominance. There are different attempts to model emotion in computational agents, but emotional Belief-Desire-Intention agents may be the closest to using emotion as a constraint on actions as discussed.[14]

Reinforcement Learning

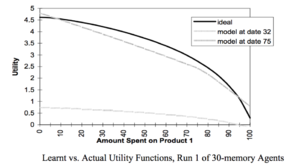

Computational models of bounded rationality are often some flavour of reinforcement learning, and the two are both more or less descended from the concept of animals learning by trial and error. A common assumption in computational work on bounded rationality is that a hidden utility function exists even though agents are not explicitly aware of it. Edmonds (1999)[15] is an early work using evolutionary programming to show that reinforcement learning can closely approximate a hidden utility function within assumptions of bounded rationality.
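The notion of approximating a hidden utility function by trial and error can be sketched with a simple epsilon-greedy learner in Python. This is a generic bandit-style illustration, not the evolutionary programming approach of the cited work. The `hidden_utility` table, the Gaussian noise, and the parameter values are all assumptions made for the example.

```python
import random

def hidden_utility(action):
    """Hypothetical utility the agent cannot inspect, only sample."""
    return {"left": 1.0, "stay": 2.5, "right": 4.0}[action]

def learn(actions, steps, epsilon=0.1, noise=0.5, seed=0):
    """Epsilon-greedy trial and error; returns the estimated utility per action."""
    rng = random.Random(seed)
    estimates = {a: 0.0 for a in actions}
    counts = {a: 0 for a in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]  # try every action once first
        elif rng.random() < epsilon:
            action = rng.choice(actions)  # explore at random
        else:
            action = max(actions, key=estimates.get)  # exploit current belief
        # The agent only observes a noisy reward, never the function itself.
        reward = hidden_utility(action) + rng.gauss(0.0, noise)
        counts[action] += 1
        # Incremental mean, so past rewards need not be stored.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates

actions = ["left", "stay", "right"]
estimates = learn(actions, steps=500)
learned_best = max(actions, key=estimates.get)
```

The agent stores only one running estimate and one counter per action, a small memory footprint in the spirit of bounded rationality. With noisy rewards the estimates are approximations, and how close they get depends on the noise level, the exploration rate and the number of steps.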

Annotated Bibliography

1. Simon HA. Human nature in politics: The dialogue of psychology with political science. American Political Science Review. 1985 Jun;79(2):293-304.

2. Moss S, Sent EM. Boundedly versus procedurally rational expectations. In Analyses in Macroeconomic Modelling 1999 (pp. 115-145). Springer, Boston, MA.

3. Arrow KJ. Utilities, attitudes, choices: A review note. Econometrica: Journal of the Econometric Society. 1958 Jan 1:1-23.

4. Ellison G. Bounded rationality in industrial organization. Econometric Society Monographs. 2006 Jan;42:142.

5. Yu AJ, Dayan P. Uncertainty, neuromodulation, and attention. Neuron. 2005 May 19;46(4):681-92.

6. Rabinowitz NC, Willmore BD, King AJ, Schnupp JW. Constructing noise-invariant representations of sound in the auditory pathway. PLoS Biology. 2013 Nov 12;11(11):e1001710.

7. Kahneman D. Maps of bounded rationality: Psychology for behavioral economics. American Economic Review. 2003 Dec;93(5):1449-75.

8. Schwartzstein J. Selective attention and learning. Journal of the European Economic Association. 2014 Dec 1;12(6):1423-52.

9. Caplin A, Dean M. Revealed preference, rational inattention, and costly information acquisition. American Economic Review. 2015 Jul;105(7):2183-203.

10. Sims CA. Implications of rational inattention. Journal of Monetary Economics. 2003 Apr 1;50(3):665-90.

11. Gabaix X. A sparsity-based model of bounded rationality. The Quarterly Journal of Economics. 2014 Sep 17;129(4):1661-710.

12. Gennaioli N, Shleifer A. What comes to mind. The Quarterly Journal of Economics. 2010 Nov 1;125(4):1399-433.

13. Hanoch Y. "Neither an angel nor an ant": Emotion as an aid to bounded rationality. Journal of Economic Psychology. 2002 Feb 1;23(1):1-25.

14. Pereira D, Oliveira E, Moreira N. Modelling emotional BDI agents. In Workshop on Formal Approaches to Multi-Agent Systems (FAMAS 2006), Riva Del Garda, Italy (August 2006) 2006.

15. Edmonds B. Modeling bounded rationality in agent-based simulations using the evolution of mental models. In Computational techniques for modeling learning in economics 1999 (pp. 305-332). Springer, Boston, MA.

Further Reading

Prospect theory is related to loss aversion.

Control Theory may be relevant for understanding costly acquisition of information.

To Add

Discuss how Markov Networks and Bayesian Networks are related to BR.

Spiegler R. Bayesian networks and boundedly rational expectations. The Quarterly Journal of Economics. 2016 Mar 7;131(3):1243-90.
