Transformers

Transformer Models in NLP: Revolutionizing Language Processing with Self-Attention Mechanisms

Transformers have revolutionized Natural Language Processing (NLP) since their introduction in 2017. They are a type of neural network architecture designed to process sequential data, which makes them highly effective for language-based tasks such as machine translation, text summarization, sentiment analysis, text classification, and more.

Principal Author: Mehar Bhatia


Abstract

This page covers the fundamentals of the transformer architecture and walks through how transformer models are used in the field of Natural Language Processing.

Builds on

Before the introduction of transformers, the most common approach in NLP was to use Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models. These models were effective at processing sequential data, but they had limitations: they cannot process the tokens of a sequence in parallel, they are slow to train, and they suffer from vanishing gradients, which make long-term dependencies difficult to learn. Additionally, these architectures have no explicit way to model hierarchy. There have been efforts to address these difficulties, for example convolutional sequence models built on Convolutional Neural Networks (CNNs), which produce representations for variable-length sequences. Although each convolutional layer is trivial to parallelize, capturing long-distance dependencies requires stacking many layers, and the number of layers needed grows with the distance between the positions being related, up to the length of the sequence.

What exactly are transformers, and how do they work?

The transformer architecture aims to address the above limitations and has become a popular go-to model for various NLP tasks, such as machine translation, language modelling, and text classification [1]. It was introduced in the paper "Attention Is All You Need" by Vaswani et al. (2017) [2].

It relies entirely on a self-attention mechanism, which allows the model to compute representations by focusing on different parts of the input sequence while predicting the output sequence. Unlike RNNs, which process the input one token at a time, transformers process the entire sequence in parallel. This makes them much faster to train, gives every pair of positions a direct connection (so long-range dependencies are easier to learn), and, by lowering training cost, can reduce the carbon footprint of training.[2]

Self-Attention Mechanism

"Self-attention, sometimes called intra-attention is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence" [2]. In simpler terms, self-attention lets the model relate each word in a sentence to the other words in the same sentence, and it is the fundamental building block of the overall architecture.

Attention weights, in turn, measure the importance of different parts of the input sequence for generating the output at a given time step. For example, when translating a sentence from English to French, attention weights can be used to determine which words in the English sentence are most relevant for generating the corresponding word in the French sentence.[3]
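As a concrete illustration of attention weights, here is a minimal Python sketch of one attention step for a single query: the query is scored against each key, the scores are normalized with a softmax into weights, and the output is the weighted sum of the values. It assumes a plain dot product as the scoring (compatibility) function; the helper names `dot`, `softmax` and `attend` are illustrative and not taken from any library.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot-product compatibility function (an assumption; see lead-in)."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> Vector:
    """Normalize raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector],
           compat: Callable[[Vector, Vector], float] = dot) -> Vector:
    """Map one query and a set of key-value pairs to an output vector."""
    # 1. Compatibility of the query with every key.
    scores = [compat(query, k) for k in keys]
    # 2. Turn the scores into attention weights.
    weights = softmax(scores)
    # 3. The output is the weighted sum of the values.
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


# The query is far more compatible with the second key, so the output
# lands close to the second value vector.
out = attend([0.0, 4.0],
             keys=[[1.0, 0.0], [0.0, 1.0]],
             values=[[10.0, 0.0], [0.0, 10.0]])
print(out)
```

Swapping `compat` for a different scoring function (for example, a small feed-forward network, as in additive attention) leaves the rest of the computation unchanged.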

"An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key." [2]

Calculating self-attention

In self-attention, each input vector is projected into three vectors: a query, a key, and a value. These are calculated by multiplying the input vector (with a dimension of, say, 512, 768 or even 1024) by weight matrices that are learned jointly during model training.

- Query vector, i.e., the current word, represented as $q = x W^Q$

- Key vector, used to index the value vector, represented as $k = x W^K$

- Value vector, represented as $v = x W^V$
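The three projections above can be sketched in a few lines of Python. This is a minimal illustration, assuming toy 4-dimensional embeddings and hypothetical, hand-picked weight matrices `W_Q`, `W_K` and `W_V` of shape 4 x 2; in a real model these matrices are learned and much larger.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


# Toy input: 3 tokens, each a 4-dimensional embedding (one row per token).
X = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]

# Hypothetical weights of shape (d_model=4, d_k=2); fixed here for illustration.
W_Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
W_K = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
W_V = [[1.0, 1.0], [0.0, 0.0], [1.0, 1.0], [0.0, 0.0]]

# Every token gets its own query, key and value row: q = x W^Q, and so on.
Q = matmul(X, W_Q)
K = matmul(X, W_K)
V = matmul(X, W_V)
print(Q)  # [[2.0, 0.0], [0.0, 4.0], [2.0, 2.0]]
```

Stacking the tokens as rows of `X` lets one matrix multiplication produce the queries (or keys, or values) for the whole sequence at once.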

As summarized by Ria Kulshrestha in the article [4], the next steps in calculating self-attention for an input word are as follows. First, take the dot product of the query with each key; the more similar a query and a key are, the higher their dot product. Next, divide these scores by the square root of the dimensionality of the key vectors, $\sqrt{d_k}$, for better gradient flow: without this scaling, large dot products push the softmax into regions where its gradients are extremely small. A softmax then normalizes the scaled scores into weights that sum to 1, and these weights are multiplied with the value vectors $v$. Value vectors multiplied by weights close to 1 receive more attention, while those multiplied by weights close to 0 receive less. The query and key vectors have dimension $d_k$ and the value vectors dimension $d_v$ (both 64 in the base model of Vaswani et al., whose overall hidden size $d_{\text{model}}$ is 512). Mathematically, the self-attention is calculated as follows,

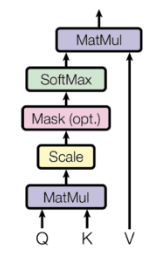

This is called scaled dot-product attention, shown in Figure 1. In practice, the attention function is computed on a whole set of queries simultaneously, with the queries, keys and values packed into matrices $Q$, $K$ and $V$. Vaswani et al. [2] explain that scaled dot-product attention is identical to the multiplicative attention of Luong et al. (2015) [5], except for the scaling factor of $1/\sqrt{d_k}$. They further explain that multiplicative attention was chosen over the additive attention of Bahdanau et al. (2014) [6] for computational efficiency: although the two are similar in theoretical complexity, dot-product attention is much faster and more space-efficient in practice, because it can be implemented using highly optimized matrix multiplication code.
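The matrix form, softmax(QK^T / sqrt(d_k)) V, can be sketched in plain Python as below. This is a minimal, unoptimized illustration using nested lists (the helper names are illustrative); real implementations rely on optimized matrix libraries, which is exactly the efficiency argument made above.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x m) @ (m x p) -> (n x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, so each query's weights sum to 1."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(Q: Matrix, K: Matrix, V: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (softmax(Q K^T / sqrt(d_k)) V, attention weights)."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))  # (n_q x n_k) query-key dot products
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)
    return matmul(weights, V), weights


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
output, weights = scaled_dot_product_attention(Q, K, V)
print(weights)  # each query puts more weight on its matching key
```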

Multi-Head Attention

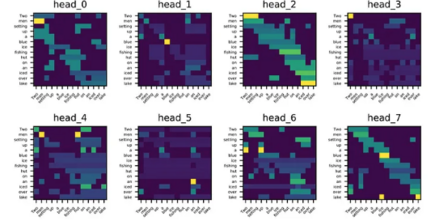

Vaswani et al. also propose a multi-head attention mechanism. Its key advantage is that it allows the model to jointly attend to information from different representation subspaces at different positions, that is, to look at the input sequence at different levels of granularity. For example, one head might focus on high-level semantic features of the input sequence, while another might focus on low-level syntactic features. This helps the model capture complex relationships between different elements of the sequence. An example of encoder self-attention through different heads is shown in Figure 2.

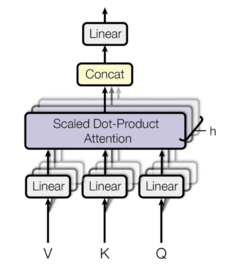

The multi-head attention mechanism linearly projects the queries, keys, and values $h$ times, using a different learned projection each time. In the base model, $d_{\text{model}} = 512$ and $h = 8$, so each head works with 64-dimensional queries, keys and values ($512 / 8 = 64$). The single attention mechanism is then applied to each of these projections in parallel to produce $h$ outputs, which are concatenated and projected again to produce the final result [7]. The overall process is visualized in Figure 3.
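The dimension bookkeeping of the 512 = 8 x 64 split can be checked with a short Python sketch. It assumes the common implementation trick of applying one d_model x d_model projection and then cutting the result into h contiguous chunks, which is equivalent to h separate d_model x 64 projections; `split_heads` and `concat_heads` are illustrative names.

```python
from typing import List

d_model, num_heads = 512, 8
d_head = d_model // num_heads  # 512 / 8 = 64 dimensions per head


def split_heads(vec: List[float], h: int) -> List[List[float]]:
    """Cut one projected d_model-dimensional vector into h equal chunks."""
    assert len(vec) % h == 0, "d_model must be divisible by the number of heads"
    size = len(vec) // h
    return [vec[i * size:(i + 1) * size] for i in range(h)]


def concat_heads(chunks: List[List[float]]) -> List[float]:
    """Inverse of split_heads: join the per-head outputs back together."""
    return [x for chunk in chunks for x in chunk]


x = [float(i) for i in range(d_model)]  # stand-in for one projected token vector
heads = split_heads(x, num_heads)
print(len(heads), len(heads[0]))  # 8 64
```

Concatenating the eight 64-dimensional head outputs restores a 512-dimensional vector, which is what the final output projection expects.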

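The multi-head attention function can be represented as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$$

$$\mathrm{head}_i = \mathrm{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$$

Here, each $\mathrm{head}_i$ implements a single attention function characterized by its own learned projection matrices $W_i^Q$, $W_i^K$ and $W_i^V$.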

In a nutshell, to compute multi-head attention, first apply the single attention function for each head, then concatenate the outputs and apply a linear projection through multiplication with the weight matrix $W^O$ to generate the final result. Because each head works in a reduced dimension ($d_k = d_v = d_{\text{model}}/h$), the total computational cost is similar to that of single-head attention with full dimensionality.
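Putting the pieces together, here is a minimal pure-Python sketch of multi-head self-attention: one scaled dot-product attention per head on that head's own projections, concatenation, then the output projection W^O. It assumes toy sizes (d_model = 8, h = 2 instead of 512 and 8) and random weights standing in for learned ones; all function names are illustrative.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Single scaled dot-product attention function."""
    d_k = len(K[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    return matmul(softmax_rows(scores), V)


def multi_head_attention(X: Matrix, W_Q: List[Matrix], W_K: List[Matrix],
                         W_V: List[Matrix], W_O: Matrix) -> Matrix:
    """Self-attention over X with one (W_Q[i], W_K[i], W_V[i]) triple per head."""
    # head_i = Attention(X W_i^Q, X W_i^K, X W_i^V)
    heads = [attention(matmul(X, wq), matmul(X, wk), matmul(X, wv))
             for wq, wk, wv in zip(W_Q, W_K, W_V)]
    # Concat(head_1, ..., head_h): join the head outputs token by token.
    concat = [[x for head in heads for x in head[r]] for r in range(len(X))]
    # Final linear projection with W^O.
    return matmul(concat, W_O)


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_tokens, d_model, h = 3, 8, 2
d_head = d_model // h  # reduced per-head dimension
X = random_matrix(n_tokens, d_model, rng)
W_Q = [random_matrix(d_model, d_head, rng) for _ in range(h)]
W_K = [random_matrix(d_model, d_head, rng) for _ in range(h)]
W_V = [random_matrix(d_model, d_head, rng) for _ in range(h)]
W_O = random_matrix(h * d_head, d_model, rng)
out = multi_head_attention(X, W_Q, W_K, W_V, W_O)
print(len(out), len(out[0]))  # 3 8
```

The output has the same shape as the input, one d_model-dimensional vector per token, which is what allows these layers to be stacked.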

High-level Architecture

The Transformer architecture does not rely on recurrence or convolutions to generate an output. As seen in Figure 4, the overall architecture is composed of two main components: an encoder and a decoder. The encoder processes the input sequence, while the decoder generates the output sequence. The input and output sequences are both divided into tokens (i.e., individual words or sub-words) and represented as vectors. Let us break down each component for a better understanding.

The Encoder

The encoder consists of a stack of N = 6 identical layers, where each layer is composed of two sublayers.

Sublayer 1 - Multi-Head Self-Attention

This sublayer processes the input vectors X using multi-head self-attention (described in Section 5) with 8 heads.

Sublayer 2 - Feed Forward Network

A simple, position-wise, fully connected feed-forward network consisting of two linear transformations with a Rectified Linear Unit (ReLU) activation in between.

All six layers of the Transformer encoder apply the same linear transformations to every word in the input sequence, but each layer uses its own weight and bias parameters to do so.
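The feed-forward sublayer computes FFN(x) = max(0, x W1 + b1) W2 + b2 for each token vector x independently (in the base model of [2], d_model = 512 and the inner dimension d_ff = 2048). The sketch below assumes tiny hypothetical weights (d_model = 2, d_ff = 3) so the arithmetic can be checked by hand; it also shows that identical tokens get identical outputs, because the same weights are applied at every position.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, W: Matrix, b: Vector) -> Vector:
    """Compute x W + b for one token vector x."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def position_wise_ffn(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Two linear transformations with a ReLU in between."""
    return linear(relu(linear(x, W1, b1)), W2, b2)


def apply_to_sequence(X: Matrix, params: Tuple[Matrix, Vector, Matrix, Vector]) -> Matrix:
    """The same weights are applied to every position independently."""
    return [position_wise_ffn(x, *params) for x in X]


# Hypothetical weights: d_model = 2, d_ff = 3.
W1 = [[1.0, -1.0, 0.0], [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, -1.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

out = apply_to_sequence([[1.0, 2.0], [2.0, 0.0], [1.0, 2.0]], (W1, b1, W2, b2))
print(out)  # [[2.0, 2.0], [2.0, 0.0], [2.0, 2.0]]
```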

Furthermore, each of these two sublayers has a residual connection around it.

Each sublayer is also followed by a normalization layer, $\mathrm{LayerNorm}(\cdot)$, which normalizes the sum of the sublayer input, $x$, and the output generated by the sublayer itself, $\mathrm{Sublayer}(x)$:

$$\mathrm{LayerNorm}(x + \mathrm{Sublayer}(x))$$
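The residual connection followed by normalization, LayerNorm(x + Sublayer(x)), can be sketched for a single token vector as below. This is a simplification under stated assumptions: the learned gain and bias of a real layer normalization are omitted, and `toy_sublayer` is a hypothetical stand-in for self-attention or the feed-forward network (self-attention actually mixes information across the whole sequence).

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Normalize one token vector to zero mean and unit variance.
    (The learned gain and bias of a real LayerNorm are omitted here.)"""
    mean = sum(x) / len(x)
    var = sum((a - mean) ** 2 for a in x) / len(x)
    return [(a - mean) / math.sqrt(var + eps) for a in x]


def add_and_norm(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """LayerNorm(x + Sublayer(x)): residual connection, then normalization."""
    residual = [a + b for a, b in zip(x, sublayer(x))]
    return layer_norm(residual)


def toy_sublayer(v: Vector) -> Vector:
    """Hypothetical stand-in for a real sublayer."""
    return [0.5 * a for a in v]


y = add_and_norm([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print(y)
```

The residual path lets the sublayer input pass through unchanged, so each sublayer only has to learn a correction to it; the normalization keeps the scale of the activations stable across the stack.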

An important consideration to keep in mind is that the Transformer architecture cannot inherently capture any information about the relative positions of the words in the sequence since it does not make use of recurrence. This information has to be injected by introducing positional encodings to the input embeddings.

Positional Encodings

In recurrent networks such as LSTMs and GRUs, the network processes the input sequentially, token after token, so it can infer the relative position of each token from the order in which information accumulates. A Transformer, however, has no inherent notion of word order, so information about each word's position must be provided explicitly [8].

Hence the need for positional encodings.

The positional encoding vectors have the same dimension as the input embeddings and are generated with a fixed, periodic (cyclic) scheme based on sine and cosine functions of different frequencies. Each positional encoding is then summed with the corresponding input embedding to inject the positional information.[7]
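The sinusoidal scheme of [2] sets PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A minimal Python sketch, with illustrative function names and a toy 4-dimensional embedding, is shown below.

```python
import math
from typing import List

Matrix = List[List[float]]


def positional_encoding(seq_len: int, d_model: int) -> Matrix:
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positional_encoding(embeddings: Matrix) -> Matrix:
    """Sum each token embedding with the encoding for its position."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]


# Three identical "word" embeddings become distinguishable once positions are added.
x = add_positional_encoding([[0.1, 0.2, 0.3, 0.4] for _ in range(3)])
print(x[0] != x[1], x[1] != x[2])  # True True
```

Because the encoding is a fixed function of the position, it needs no training and can be computed for any sequence length.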

The Decoder

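The decoder shares several similarities with the encoder. However, it is autoregressive: it generates the output sequence one token at a time, feeding each generated token back in as input for the next step.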

The decoder also consists of a stack of N = 6 identical layers that are each composed of three sublayers.

Sublayer 1 - Masked Multi-Head Attention

The first sublayer receives the previous output of the decoder stack, augments it with positional information, and applies multi-head self-attention over it (as described in Section 5). While the encoder attends to all words in the input sequence regardless of their position, the decoder is modified to attend only to the preceding words. Hence, the prediction for the word at position $i$ can depend only on the known outputs for the words that come before it in the sequence. This is achieved by applying a mask to the scores produced by the scaled multiplication of the query and key matrices $Q$ and $K$: the entries that would correspond to illegal connections (to later positions) are set to $-\infty$ before the softmax, so they receive zero attention weight [7]. Note that this masking makes the decoder unidirectional (unlike the bidirectional encoder).
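The masking step can be sketched in Python as follows: a causal mask holds 0 where position i may attend to position j (j <= i) and negative infinity elsewhere, and it is added to the scaled scores before the row-wise softmax. The function names are illustrative, and the raw scores are made up so that the mask alone determines what each position can see.

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_mask(n: int) -> Matrix:
    """0 where position i may attend to position j (j <= i), -inf otherwise."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]


def masked_softmax_rows(scores: Matrix) -> Matrix:
    """Add the causal mask to the scaled Q K^T scores, then softmax each row."""
    weights = []
    for row, mask_row in zip(scores, causal_mask(len(scores))):
        masked = [s + m for s, m in zip(row, mask_row)]
        mx = max(masked)  # finite: position i can always attend to itself
        exps = [math.exp(s - mx) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Same raw scores for every query; the mask alone decides what is visible.
w = masked_softmax_rows([[1.0, 2.0, 3.0] for _ in range(3)])
for row in w:
    print([round(v, 3) for v in row])
```

The first position can only attend to itself, the second to the first two positions, and so on, so no position ever uses information from words that have not been generated yet.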

Sublayer 2 - Multi-Head Attention

The second sublayer is similar to the one implemented in the first sublayer of the encoder (reference Section 6.1.1). On the decoder side, however, this multi-head mechanism receives the queries from the previous decoder sublayer and the keys and values from the output of the encoder. This allows every position in the decoder to attend to all the words in the input sequence.
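The difference from self-attention is only where the inputs come from, which the following Python sketch makes explicit: queries from the decoder, keys and values from the encoder output, and no causal mask. As a simplifying assumption, the learned projections are taken to be identities and a single head is used; the names are illustrative.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def cross_attention(decoder_states: Matrix, encoder_states: Matrix) -> Tuple[Matrix, Matrix]:
    """Queries come from the decoder; keys and values from the encoder output.
    Simplification: the learned projections are taken to be identities."""
    Q = decoder_states
    K = V = encoder_states
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        mx = max(scores)
        exps = [math.exp(s - mx) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])  # one weight per source token
    out = [[sum(w * v[j] for w, v in zip(w_row, V)) for j in range(len(V[0]))]
           for w_row in weights]
    return out, weights


encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]  # 4 source tokens
decoder_in = [[1.0, 0.0], [0.0, 2.0]]                           # 2 target tokens so far
out, w = cross_attention(decoder_in, encoder_out)
print(len(out), len(w[0]))  # 2 4
```

The output has one row per target position, while each row of attention weights spans all source positions.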

Sublayer 3 - Feed Forward Network

This layer is similar to the one implemented in the second sublayer of the encoder (reference Section 6.1.2).

Furthermore, the three sublayers on the decoder side also have residual connections around them and are succeeded by a normalization layer.

Positional encodings (reference Section 6.2) are also added to the input embeddings of the decoder in the same manner as previously explained for the encoder.

Finally, a linear layer is applied to the final output of the decoder to produce a logit for every word in the vocabulary, and a softmax converts these logits into the probability of each word being the next output.

The original model was trained with the Adam optimizer and a custom learning rate that varied over the course of training. Categorical cross-entropy is used as the loss function.
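The varying learning rate reported in [2] increases linearly for the first warmup_steps training steps and then decays with the inverse square root of the step number: lrate = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5), with warmup_steps = 4000 (used together with Adam, beta1 = 0.9, beta2 = 0.98, epsilon = 1e-9). The Python sketch below implements that schedule plus per-prediction categorical cross-entropy; the function names are illustrative, and the label smoothing used in the original training is not included.

```python
import math


def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """lrate = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5)."""
    step = max(step, 1)  # step 0 would divide by zero in step ** -0.5
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


def cross_entropy(probs: list, target_index: int) -> float:
    """Categorical cross-entropy for one prediction: -log p(correct word)."""
    return -math.log(probs[target_index])


for s in (1000, 4000, 16000):
    print(s, transformer_lr(s))
```

The peak rate is reached exactly at step = warmup_steps, where both terms inside `min` are equal.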

At inference time, decoding begins with a special start-of-sequence token; conditioned on the encoder output, the decoder generates a possible first word. In the next iteration, it takes the previous output(s) as input(s), again together with the encoder output, to generate the next word. This decoding loop continues until the model generates an end-of-sentence token (<eos>) as output. One point is still missing, however. Since the model produces the outputs one at a time, there are two main ways to select each word from the probability distribution. The first is to pick the word with the highest probability at each step (greedy decoding). The second is to hold on to, say, the top two words (for example, 'I' and 'a'); in the next step, run the model twice, once assuming the first output position was the word 'I' and once assuming it was the word 'a', and keep whichever version produced less error considering both positions #1 and #2. This is repeated for positions #2 and #3, and so on, until decoding finishes. This method is called beam search. [9]
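The two selection strategies can be compared with a small Python sketch. It assumes a hypothetical `toy_model` lookup table in place of the decoder plus softmax, and it scores beam hypotheses by cumulative log-probability (the usual way of measuring "less error"), without the length normalization many practical decoders add. The table is chosen so that greedy decoding commits to 'I' and ends with probability 0.24, while a beam of width 2 finds 'a cat' with probability 0.36.

```python
import math
from typing import Dict, List, Tuple

EOS = "<eos>"


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Hypothetical next-word distribution standing in for the decoder + softmax."""
    table = {
        (): {"I": 0.6, "a": 0.4},
        ("I",): {"x": 0.4, "y": 0.3, "z": 0.3},
        ("a",): {"cat": 0.9, EOS: 0.1},
    }
    return table.get(prefix, {EOS: 1.0})


def greedy_decode(model, max_len: int = 10) -> List[str]:
    """Pick the single most probable word at every step."""
    seq: Tuple[str, ...] = ()
    while len(seq) < max_len:
        probs = model(seq)
        word = max(probs, key=probs.get)
        seq += (word,)
        if word == EOS:
            break
    return list(seq)


def beam_search(model, beam_width: int = 2, max_len: int = 10) -> List[str]:
    """Keep the beam_width best partial sequences by cumulative log-probability."""
    beams = [((), 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = [(seq + (word,), score + math.log(p))
                      for seq, score in beams
                      for word, p in model(seq).items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == EOS:
                finished.append((seq, score))  # hypothesis is complete
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # hypotheses that hit max_len without <eos>
    best_seq, _ = max(finished, key=lambda c: c[1])
    return list(best_seq)


print(greedy_decode(toy_model))              # ['I', 'x', '<eos>']
print(beam_search(toy_model, beam_width=2))  # ['a', 'cat', '<eos>']
```

With `beam_width=1`, beam search reduces to greedy decoding.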

The pioneers in transformer-based self-supervised language models

Beast #1 - BERT

Bidirectional Encoder Representations from Transformers (BERT) by Devlin et al. (2018) [10] is one of the first Transformer-based self-supervised language models. It is an encoder-only, bidirectional Transformer; its larger variant, BERT-Large, has 340M parameters (BERT-Base has 110M).

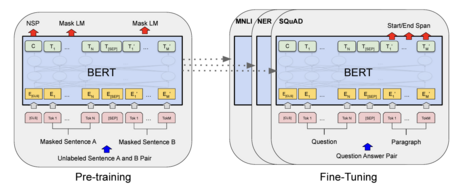

As shown in Figure 5, BERT is pre-trained with unlabeled language sequences from the BooksCorpus (800 million tokens) and English Wikipedia (2,500 million tokens). To construct pre-training objectives, 15% of all tokens in each sequence are masked at random and the model is trained to predict the masked words rather than reconstructing the entire input. This is called Masked Language Modelling (MLM). In addition to MLM, BERT also uses a Next Sentence Prediction (NSP) task to jointly pre-train the model.[11]
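The masking procedure can be sketched in Python as below. Of the positions selected for prediction, the BERT paper [10] replaces 80% with a [MASK] token, 10% with a random token and leaves 10% unchanged. This sketch simplifies by selecting each position independently with probability 0.15 (rather than a fixed 15% of each sequence) and works on whole words instead of WordPiece tokens; `mask_tokens` is an illustrative name.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(tokens: List[str], vocab: List[str], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt a token sequence for masked language modelling.

    Returns (inputs, labels); labels[i] is the original token at positions the
    model must predict and None elsewhere (no loss is computed there).
    """
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue              # roughly 85% of positions are left alone
        labels[i] = tok           # predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK      # 80%: replace with the [MASK] token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


rng = random.Random(0)
sentence = "my dog is hairy and my cat is lazy".split()
inputs, labels = mask_tokens(sentence, vocab=sentence, rng=rng)
print(inputs)
print(labels)
```

Only the positions with a label contribute to the loss, which is why the model learns to predict the masked words rather than reconstruct the entire input.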

As the name implies, BERT uses only the encoder part of the transformer. For downstream adaptation, users add new trainable layers on top and fine-tune the model for a domain-specific task.

BERT performs well on tasks such as sentiment analysis and question answering, and it also excels at named entity recognition (NER) and next sentence prediction. BERT has been immensely helpful for:

- Voice assistants and chatbots aiming to enhance customer experience

- Customer review analysis (one of the most common applications of sentiment analysis and classification)

- Enhanced search results

- Named Entity Recognition

- Content moderation on various platforms

- and many more! [12]

Beast #2 - GPT

GPT, or Generative Pre-trained Transformer, is a family of natural language processing (NLP) models that has garnered a lot of attention in recent years for its impressive performance on a wide range of language tasks.

The first GPT model, GPT-1, was introduced by OpenAI in 2018. Its primary objective was to pre-train a large neural network on a vast corpus of text by maximizing the likelihood of the next word in a sequence, given the previous words. The model was pre-trained on the BooksCorpus dataset and then fine-tuned on individual downstream tasks, achieving state-of-the-art performance on a range of language tasks.[13]

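Given an unsupervised corpus of tokens $\mathcal{U} = \{u_1, \ldots, u_n\}$, GPT-1 uses a standard language modelling objective to maximize the following likelihood:

$$L_1(\mathcal{U}) = \sum_i \log P(u_i \mid u_{i-k}, \ldots, u_{i-1}; \Theta)$$

where $k$ is the size of the context window, and the conditional probability $P$ is modelled using a neural network with parameters $\Theta$. These parameters are trained using stochastic gradient descent. A multi-layer Transformer decoder is used, which applies a multi-headed self-attention operation over the input context tokens followed by position-wise feedforward layers to produce an output distribution over target tokens:

$$h_0 = U W_e + W_p$$

$$h_l = \mathrm{transformer\_block}(h_{l-1}) \quad \forall\, l \in [1, n]$$

$$P(u) = \mathrm{softmax}(h_n W_e^T)$$

where $U = (u_{-k}, \ldots, u_{-1})$ is the context vector of tokens, $n$ is the number of layers, $W_e$ is the token embedding matrix, and $W_p$ is the position embedding matrix.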
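The language modelling objective, the sum over positions of log P(u_i | the previous k tokens), can be sketched in Python as follows. It assumes a hypothetical `uniform_model` standing in for the Transformer decoder, so the result is easy to verify; recording the contexts it receives shows that no more than k preceding tokens are ever used.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Context = Tuple[str, ...]


def lm_log_likelihood(tokens: Sequence[str],
                      next_token_probs: Callable[[Context], Dict[str, float]],
                      k: int) -> float:
    """Sum of log P(u_i | u_{i-k}, ..., u_{i-1}) with a window of k tokens."""
    total = 0.0
    for i, token in enumerate(tokens):
        context = tuple(tokens[max(0, i - k):i])  # at most k preceding tokens
        total += math.log(next_token_probs(context)[token])
    return total


seen_contexts: List[Context] = []


def uniform_model(context: Context) -> Dict[str, float]:
    """Hypothetical stand-in for the Transformer decoder: uniform over 4 words."""
    seen_contexts.append(context)
    return {w: 0.25 for w in "abcd"}


ll = lm_log_likelihood(list("abcab"), uniform_model, k=2)
print(ll)  # approximately 5 * log(0.25) = -6.93
print(seen_contexts)
```

Maximizing this quantity is equivalent to minimizing the cross-entropy between the model's next-token distribution and the actual next token.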

Building on the success of GPT-1, OpenAI released GPT-2 in 2019. GPT-2 was trained on a much larger corpus, WebText, roughly 40 GB of text collected from web pages linked from Reddit; the authors chose not to use Common Crawl directly because of its data-quality issues. The largest GPT-2 model had 1.5 billion parameters and achieved even better performance than GPT-1 on a range of language tasks. GPT-2 also showed that a task can be specified through a prompt, a short sequence of text that provides context for the language generation task: the model generates a continuation of the prompt that is coherent and follows the context provided, which lets it perform some tasks zero-shot, without task-specific fine-tuning.[14]

In 2020, OpenAI released GPT-3, which marked a significant advance in the state of the art for language modelling. GPT-3 was trained on an even larger corpus than GPT-2 and has 175 billion parameters. It achieved impressive results on a range of language tasks, including natural language generation, translation, and question answering. One of the key advantages of GPT-3 is in-context learning: given only a task description and, optionally, a few examples in the prompt (zero-, one- or few-shot learning), it can perform tasks it was never explicitly trained on, without any fine-tuning or gradient updates.[15]

Conclusion

In conclusion, transformers have revolutionized the field of natural language processing by enabling more efficient and accurate language modelling. The architecture introduced in the original transformer paper has been adapted and refined in a variety of ways, leading to significant improvements in state-of-the-art language models. While transformers have shown great promise in many areas of NLP, much work remains to improve their performance and to address ethical and social concerns around their use. In the current NLP landscape, it will be exciting to see how the field evolves, how transformers are developed further, and how they are applied to innovative use cases.

References

- [1] https://ai.googleblog.com/2017/08/transformer-novel-neural-network.html

- [2] Vaswani, Ashish, et al. "Attention is all you need." Advances in Neural Information Processing Systems 30 (2017).

- [3] https://towardsdatascience.com/attaining-attention-in-deep-learning-a712f93bdb1e

- [4] https://towardsdatascience.com/transformers-89034557de14

- [5] Luong, Minh-Thang, Hieu Pham, and Christopher D. Manning. "Effective approaches to attention-based neural machine translation." arXiv preprint arXiv:1508.04025 (2015).

- [6] Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation by jointly learning to align and translate." arXiv preprint arXiv:1409.0473 (2014).

- [7] https://machinelearningmastery.com/the-transformer-attention-mechanism

- [8] https://tamoghnasaha-22.medium.com/transformers-illustrated-5c9205a6c70f

- [9] http://jalammar.github.io/illustrated-transformer/

- [10] Devlin, Jacob, et al. "BERT: Pre-training of deep bidirectional transformers for language understanding." arXiv preprint arXiv:1810.04805 (2018).

- [11] https://towardsdatascience.com/self-supervised-transformer-models-bert-gpt3-mum-and-paml-2b5e29ea0c26

- [12] https://symbl.ai/blog/gpt-3-versus-bert-a-high-level-comparison/

- [13] Radford, Alec, et al. "Improving language understanding by generative pre-training." (2018).

- [14] Radford, Alec, et al. "Language models are unsupervised multitask learners." OpenAI blog 1.8 (2019): 9.

- [15] Brown, Tom, et al. "Language models are few-shot learners." Advances in Neural Information Processing Systems 33 (2020): 1877-1901.