Documentation:Moot Court

Introduction

First-year moot, which usually takes place in the first or second semester of law school, can be intimidating. To reduce the stress and anxiety around this “psychologically terrifying” portion of the curriculum, the Emerging Media Lab (EML) at UBC has collaborated with the Peter A. Allard School of Law to create a practice tool for law students. To help students become familiar with mooting, EML has built an open source moot simulator as a web application so that it is easy to access. The simulator is a mock trial that allows students to practice and prepare in a simulated environment.

Background

What is moot court? It is one of the most crucial parts of the curriculum for first-year law students, who must become accustomed to it in order to gain confidence in their speech and argument in court. A moot consists of two teams of law students competing against each other in front of a panel of judges. It takes place in a simulated courtroom, where the judges ask questions while the students present their prepared arguments and practice delivering them to the court. This project can also be viewed as a proxy for several larger goals, such as a front-end tool for rapidly prototyping legal arguments or a virtual classroom.

Objective

- Creating the best possible VR/AR/XR simulated environment to expose students to what an actual moot environment will feel like.

- Allowing students to practice their mooting skills in a virtual environment (see “Ovation” as a commercial example of a similar speaking tool)

- Developing and evolving an A.I. natural language tool (related to the Socratic tool already being refined through EML) that further prepares students for the moot experience and improves their learning and performance.

Consideration

Considerations in building the web application include users who are visually impaired and users with hearing loss. For visually impaired users, the colours in the colour palette are paired to enhance the legibility of text content. For users with hearing loss, closed captions are included in the simulator.

Format

A React-Three-Fiber web application hosted through AWS CloudFront.

Development

This section was last updated on April 30, 2023.

GitHub Branches

To understand what each branch in the GitHub repository contains, please clone the repository. For any inquiries, please contact the Emerging Media Lab.

Active Branches

Please refer to the table below for a description of all the branches in the repository.

| Branch Name | Description |
|---|---|
| master | The default branch that has the most up-to-date stable code. |
| codebase-refactor | The branch where the dev team tried to remove some redundancies of code and introduce performance monitors. |
| question-editor | The development team was considering a UI page on the web for PIs to edit questions, upload and download JSON question files that could be directly inputted to the codebase. |

Speech Synthesis

The speech features of this application use the React Speech Recognition hook. This React hook wraps the Web Speech API so that developers can use the API from within React components. In the application, it is used with the judge avatars for pause detection and keyword recognition, so that questions are asked at appropriate times and users' responses can be checked. This aspect of development is not fully implemented and is still a work in progress. For more information about React Speech Recognition, please visit this website. For more information on the roadblocks encountered with the speech component within the app itself, please see the Future Plans section below.

Animations

The animation of the judge avatar uses a combination of three resources: DeepMotion, Mixamo and Plask.ai. DeepMotion is used to source a suitable judge model for the scene. The model obtained from DeepMotion is then imported into Mixamo, where it is rigged so that it can be animated. Once the model is rigged in Mixamo, it is brought into Plask.ai to record custom animations. Developers could take different steps to obtain the same result. The one point to keep in mind is that the final model file should be a .glb file, while importing into Mixamo requires a .fbx file. To implement avatar models, please refer to the 3D Models in the Scene section immediately below.

Suitable motions were initially obtained from online videos of people performing the actions, which produced usable clips in a short amount of time. Later, however, the team filmed a person performing the actions, which gave smoother and more compatible animations. Key guidelines for ideal clips include monochromatic, tight-fitting clothing, a full-body shot, and a plain, empty background with no nearby objects as a distraction. Here are additional tips that are useful for Plask.ai filming.

3D Models in the Scene

The current application uses a courtroom scene model built in SketchUp. However, developers can use 3D models built in any software, as long as the modelling software supports .glb file exports. Implementing a model is simple: upload the model file to the repository and replace the model URL with the file path to the new model. Currently, all the models in the scene are stored in the public folder under "models."
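
As a minimal sketch of the replacement step, assuming the models are served from the "models" folder of a Create React App style public directory, a helper like the following could build the model URL and reject files that are not .glb. The function name `modelUrl` and the file name `courtroom.glb` are hypothetical and are not taken from the repository.

```javascript
// Hypothetical helper: builds the URL for a model stored under public/models.
// Assumption: files in the public folder are served from the site root,
// so public/models/courtroom.glb is requested as /models/courtroom.glb.
function modelUrl(fileName) {
  if (!fileName.toLowerCase().endsWith(".glb")) {
    throw new Error(`Expected a .glb file, got "${fileName}"`);
  }
  return `/models/${fileName}`;
}

console.log(modelUrl("courtroom.glb")); // "/models/courtroom.glb"
```

In a React Three Fiber component, the returned string would typically be passed to a glTF loader such as `useGLTF` from @react-three/drei. Whether the repository uses that particular loader is an assumption.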

Deployment and Site Hosting

Developers can deploy this application like any other React application. Simply run npm run build or yarn build. The contents of the build folder should then be uploaded to the server that hosts the application. The current application is hosted on AWS CloudFront. To access the S3 bucket for this project, please contact the Emerging Media Lab for permissions.

User Manual

To access the live version of Moot Court, go to React App (mootpractice.ca).

Setup Page

When the user first enters the Moot Court website, the setup page is displayed with a UI that lets the user customize the application.

Scene

The user is presented with the judge, who asks questions according to the customization settings chosen by the user. The user can go back to the setup page by clicking a button.

Moot Practice

Once the setup is complete, the moot practice begins according to the customizations the user specified on the setup page. When the practice starts, the user stands at a podium before the judge in a room similar to the ones used during first-year moots. The most suitable voice for the judge should be chosen automatically according to the OS and browser. The timer is available at the bottom right of the screen. After the judge greets the user by stating "Counsel, you may begin your presentation," the user can start presenting. As the user practices, the judge asks questions from the question set at intervals specified by the user.
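
The interval-based questioning described above can be sketched as follows. This is an illustration only: the function name `questionTimes` and its parameters are hypothetical, and the real application may schedule questions differently.

```javascript
// Hypothetical helper: returns the times (in seconds from the start) at which
// the judge would ask a question, given the session length, the interval
// chosen on the setup page, and the number of questions in the question set.
function questionTimes(totalSeconds, intervalSeconds, questionCount) {
  if (intervalSeconds <= 0) {
    throw new Error("intervalSeconds must be positive");
  }
  const times = [];
  // Start at one full interval so the judge does not interrupt the opening.
  for (
    let t = intervalSeconds;
    t < totalSeconds && times.length < questionCount;
    t += intervalSeconds
  ) {
    times.push(t);
  }
  return times;
}

console.log(questionTimes(600, 180, 5)); // [180, 360, 540]
```

With a 10-minute session and a 3-minute interval, only three questions fit, even though five are available in this example.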

Resources

First-year moot resources provided by course instructors are also compiled and listed on the website, giving students a centralized location for everything they need to have a successful moot!

Features

Customizable Moot Court Practice

Using the setup page, users can customize their practice moot based on the specifications of their assigned moot as well as their personal needs for their practice session.

Simulated Moot Court Scene

In order to create a more realistic and therefore more effective practice tool for the user, a simulated moot court scene has been created to emulate the environment that first-year law students will be conducting their mandatory moot in.

Timer

During the practice moot, a timer is available on the bottom right of the screen with colour-coded time indications that transition from the colour green, to yellow, then red as the timer runs out, similar to the timer warnings that are available during in-person moots. The timer can also be paused to allow more control and flexibility for the user as they practice their moot.
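
The colour transition can be sketched as a function of the time remaining. The thresholds below (yellow at 50% remaining, red at 20%) and the name `timerColour` are assumptions chosen for illustration; the application may switch colours at different points.

```javascript
// Hypothetical thresholds: yellow starts at 50% of the time remaining
// and red at 20%. The real application may use different values.
function timerColour(remainingSeconds, totalSeconds) {
  const fraction = totalSeconds > 0 ? remainingSeconds / totalSeconds : 0;
  if (fraction > 0.5) return "green";
  if (fraction > 0.2) return "yellow";
  return "red";
}

console.log(timerColour(500, 600)); // "green"
console.log(timerColour(200, 600)); // "yellow"
console.log(timerColour(60, 600)); // "red"
```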

Mooting Resources

First-year moot resources provided by course instructors are included on the website.

Challenges

Adapting models for optimal usage within React Three Fiber

DeepMotion models are limited to a single animation per model. This was inconvenient when the judge model needed to perform several distinct actions, each only once. Importing multiple copies of the same model, each performing a different action at a specific time, is an option, but it degrades the overall performance and loading times of the application. An alternative is to have a single model contain multiple animations, which avoids the additional loading. Plask.ai animates models in a similar style to DeepMotion but, unlike DeepMotion, lacks a variety of suitable models for a human judge. In addition, the rigging differs between the two online tools. Pre-processing the DeepMotion model by importing it into Mixamo for rigging bridges the gap between the two platforms.

React-Speech-Recognition

In the 2021W2 term, the use of react-speech-recognition was explored to detect pauses in student speech, and to potentially detect certain keywords the user speaks. The component that detects the speech is Dictaphone.jsx, which can be found on the EML Moot Court repository. If the browser supports speech recognition, Dictaphone sets the speech recognition object to be accessible by subsequent useEffect hooks and adds a listener.

Every time the transcript is updated, it is printed with console.log, and the printed transcripts were highly accurate. If the team wishes to build upon student speech in the future, extracting the transcript from the speechRecognitionObject would be useful. The useEffect hook is not necessary to ensure the transcript is continuously updated.
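
As a rough sketch of what building on the transcript could look like, the helper below checks which expected keywords appear in a transcript, assuming the transcript is available as a plain string. The function name `findKeywords` and the sample keywords are hypothetical, and only single-word keywords are handled.

```javascript
// Hypothetical helper: reports which of the expected single-word keywords
// appear in a transcript string, ignoring case and punctuation.
function findKeywords(transcript, keywords) {
  const words = new Set(
    transcript
      .toLowerCase()
      .replace(/[^a-z0-9\s]/g, " ")
      .split(/\s+/)
      .filter(Boolean)
  );
  return keywords.filter((keyword) => words.has(keyword.toLowerCase()));
}

console.log(
  findKeywords("My Lord, the appellant submits that...", ["appellant", "respondent"])
); // ["appellant"]
```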

Keeping speech detection continuously on was a challenging task. When no speech is detected, speech detection terminates and the user has to turn the mic back on manually. The 2021W2 development team wanted the system to detect pauses and the start of speech continuously, but was unable to resolve the issue after a considerable number of development hours. The task was then judged not to be worth further developer time for the moment.
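
One possible approach to pause detection, independent of how the microphone is kept open, is to watch how long the transcript has gone unchanged. The sketch below is not the team's implementation: the name `createPauseDetector` and the 2000 ms threshold are assumptions.

```javascript
// Hypothetical pause detector: call update() whenever the transcript changes
// and isPaused() on a timer. The 2000 ms default threshold is an assumption.
function createPauseDetector(thresholdMs = 2000) {
  let lastTranscript = "";
  let lastChangeAt = null; // null until the user has said something

  return {
    update(transcript, nowMs) {
      if (transcript !== lastTranscript) {
        lastTranscript = transcript;
        lastChangeAt = nowMs;
      }
    },
    isPaused(nowMs) {
      return lastChangeAt !== null && nowMs - lastChangeAt >= thresholdMs;
    },
  };
}

const detector = createPauseDetector(2000);
detector.update("My Lord", 0);
detector.update("My Lord, the appellant", 1500);
console.log(detector.isPaused(2500)); // false (1000 ms since the last change)
console.log(detector.isPaused(3500)); // true (2000 ms since the last change)
```

The react-speech-recognition library also documents a `continuous` option for `SpeechRecognition.startListening`. Whether that option resolves the microphone time-out described above has not been verified here.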

The redundant use of triggerEffect and addListener can be removed, as it was only part of the developers' exploration.

Future Plans

This section was last updated on April 30, 2023. For a more detailed picture of where the team left off in development and design, as well as further future plans, please contact EML for access to this Trello Board. The Trello Board gives access to the ideation and brainstorming done during the development of this project.

Optimization of speech synthesis

The development team should look for a way to make the application's use of the default browser speech synthesis API more robust. The default voice must be tested to be reasonably effective in as many browsers as possible. Refer to this article for guidance.
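
As one illustration of a more predictable voice choice, the sketch below picks a voice from a list shaped like the array returned by `window.speechSynthesis.getVoices()`. The function name `pickVoice`, the preference order, and the sample voices are assumptions, not the application's current behaviour.

```javascript
// Hypothetical voice picker. `voices` is expected to look like the array
// returned by window.speechSynthesis.getVoices(): objects with `name`,
// `lang`, `default`, and `localService` fields.
function pickVoice(voices, preferredLang = "en") {
  const matching = voices.filter((voice) =>
    voice.lang.toLowerCase().startsWith(preferredLang.toLowerCase())
  );
  if (matching.length === 0) {
    // No voice in the preferred language: fall back to the browser default.
    return voices.find((voice) => voice.default) || voices[0] || null;
  }
  // Prefer the browser default, then a voice that works offline.
  return (
    matching.find((voice) => voice.default) ||
    matching.find((voice) => voice.localService) ||
    matching[0]
  );
}

const sampleVoices = [
  { name: "Voice A", lang: "fr-FR", default: true, localService: true },
  { name: "Voice B", lang: "en-GB", default: false, localService: false },
  { name: "Voice C", lang: "en-US", default: false, localService: true },
];
console.log(pickVoice(sampleVoices).name); // "Voice C"
```

In some browsers `getVoices()` returns an empty list until the `voiceschanged` event has fired, so a picker like this would need to be re-run when that event occurs.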

Connecting the customization interface to the presentation components

If the customization interface includes features that do not exist in the presentation components, the features should be added and be connected to the interface.

Multiuser Server

Currently, Moot Court only supports moot practice for an individual user, specifically a single student, which requires practice questions to be preset or added into the application in advance through the setup page. This single user configuration also means that questions will be asked at time intervals specified by the user. The combination of these two requirements may result in awkward interruptions throughout the moot practice as well as questions that are ambiguous and unrelated to what the student is discussing. Therefore, we would like to implement a room system to allow two users in a moot practice at a time. This would allow a student to practice their moot while having an additional user that can input questions in real time for a more effective practice session where questions are asked at a more appropriate time and are also more relevant and specific to the student's oral presentation.

Speech Recognition for pause detection and keyword recognition

Another goal is the implementation of speech recognition so that the judge can accurately time their questions to the student.

Full Scale User Testing

For the current iteration of Moot Court, we were able to test the general usability of the application with other EML Work Learn students. Surveys were also available on the website for first-year Allard students to complete after testing the application during their mooting preparations. However, because few responses were received, we would like to organize user testing sessions with these students in the future in order to fully examine the usefulness of this application. This will allow us to evaluate whether the features we have provided (e.g., the simulated 3D scene, judges, and mooting customizations) have helped to enhance students' mooting practices, and to identify necessary changes and additions to the application to further facilitate their mooting preparations.

Engineering Notes / Lessons Learned

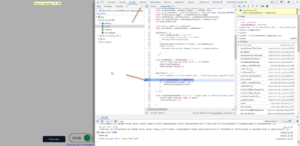

Console output in the inspector might be enough for small projects, but for larger scale systems / prototypes, it helps to learn how to use the debugger to fix issues quickly, efficiently, and to gather more information about the state of the program at a given time. During debugging, you can move your mouse over the objects/variables to examine the contents.

Debugging JS / React Apps

Debugging files directly (see picture on right):

- Open the inspector (Chrome in this example).

- Click on Sources tab, and then the kebab menu (3 dot menu, found near the top left of the inspector), then Open file.

- Type in the name of the file (in my example, JudgeAvatar.js).

- Click on a line of code you want to debug to insert a breakpoint (note: click on the line number itself, and it should show a blue marker).

- Refresh the page, or trigger the relevant code, to examine the state of the program at the breakpoint.

Debugging event listeners:

With the inspector, we can also set breakpoints on events such as Mouse events, Window events, Keyboard events and much more. Let's say, for example, that we want to debug a useEffect that uses a keyDown event:

- Open the inspector (Chrome in this example).

- Click on Sources tab, and then expand the Event Listener Breakpoints on the right.

- Under Keyboard, click on keydown as an event you want to monitor.

- While the program is in the court scene, the debugger will pause at a breakpoint whenever you press a key, such as the Spacebar.

Team Members

Principal Investigators

Jon Festinger, Q.C. (He, Him, His)

Adjunct Professor

Peter A. Allard School of Law

The University of British Columbia

Nikos Harris, Q.C.

Associate Professor of Teaching

Peter A. Allard School of Law

The University of British Columbia

Barbara Wang BA, JD

(Pronouns: She/Her/Hers)

Manager, Student Experience

Peter A. Allard School of Law

The University of British Columbia

Current Team

Team Lead and Developer

Seungyeon Baek

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Arts in Computer Science

University of British Columbia

Software Developer

Michelle Huynh

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Science in Computer Science

University of British Columbia

Juno Yoon

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Science in Computer Science

University of British Columbia

Jena Arianto

Volunteer and Work Learn at the Emerging Media Lab at UBC

University of British Columbia

Past contributors

Project Leads

Dante Cerron

Community Engaged Documentation and Research Space (CEDaR) Lab Supervisor

University of British Columbia

UX/UI Designer

Vita Chan

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Science in Computer Science

University of British Columbia

Veronica Chen

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Arts in Cognitive Systems

University of British Columbia

Model Artist

Olivia Chen

Work Learn at the Emerging Media Lab at UBC

Undergraduate in Bachelor of Fine Arts in Theatre Design and Production

University of British Columbia