Documentation: 23-3003 Vestibular Assessment

Introduction

Background

The vestibular system is located in the inner ear and includes three fluid-filled semicircular canals. The movement of fluid within these canals helps detect the position and movement of the head and maintain balance. With age, small calcium crystals in the inner ear can become dislodged and fall into the canals, causing benign paroxysmal positional vertigo (BPPV), which is characterized by dizziness and loss of balance. A series of head movements, called the Epley maneuver, is performed to reposition the crystals and thereby relieve the vertigo symptoms.

Students in the Department of Physical Therapy are trained in the Epley maneuver and practice it on each other. However, this approach to teaching and learning is insufficient: students are prone to errors in orienting the head because they struggle to understand how head positioning affects crystal movement. Although training equipment exists to help visualize the process, it is a limited resource because it is costly and available only in the classroom.

Objective

The objective of this project is to develop a virtual reality (VR) teaching tool that enhances instructor demonstrations of the techniques used to assess and treat BPPV. By creating an interactive 3D model with the vestibular system superimposed onto a virtual patient's head, the instructor can demonstrate the Epley maneuver and broadcast it live to students' personal devices. This would allow students to better observe and understand how the crystals move through the semicircular canals as the Epley maneuver is performed.

Format

The project was built with Unreal Engine 5.1.1 for the Meta Quest 2 headset. We chose Unreal Engine over Unity because of its high-fidelity MetaHumans, which allow us to create realistic animations.

Primary Features

MetaHuman and Vestibular System

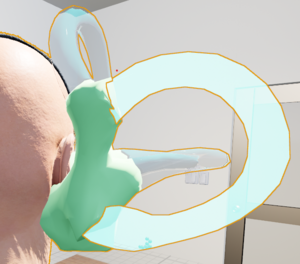

The project uses an older-looking MetaHuman as the virtual patient because it represents the demographic usually treated with the Epley maneuver. The vestibular system is superimposed onto the MetaHuman. As a proof-of-concept, the project focuses on the Epley maneuver for the right ear, which is why the model is only shown on the right side of the MetaHuman. The posterior canal is highlighted in a lighter blue for contrast and labelling, and it contains the crystals. The other canals contain no crystals, to avoid distracting from the posterior canal. As the MetaHuman moves, the vestibular model and the crystals move with it, simulating how the crystals move in real life.

Epley Maneuver

Using the application, users can interact with the MetaHuman to perform the Epley maneuver as if treating a virtual patient with BPPV. As the maneuver is conducted, the crystals within the vestibular system move accordingly, demonstrating how the maneuver treats BPPV by relocating the crystals.

More information on how to conduct the Epley maneuver in the application can be found in the User Manual section.

Live Stream/Broadcast

Since the project was created as a teaching tool, it is intended to let instructors broadcast their VR session to students' personal devices while they perform the Epley maneuver, giving students a better view of the crystal movement. On their own devices, students would be able to view the vestibular system from angles different from the instructor's and record the demonstration for their personal notes.

Please refer to Future Plans for more information regarding this feature.

Process

Ideation

Our principal investigator (PI) wanted an augmented reality (AR) application to help students learn and study the Epley maneuver, which involves moving a patient's head to various angles (e.g., turning the head 45°) and orientations (e.g., head facing down), as well as changing the patient's body position (e.g., sitting up, lying down). One of the PI's main requirements was that the application be accessible, meaning students should be able to use it without additional equipment. Therefore, our initial idea was to create a handheld AR application that would overlay a 3D model of the vestibular system on a student's head, with the crystals moving accordingly as the Epley maneuver is performed on that student. The technological approaches we explored for this idea were:

Face tracking

We explored existing face tracking technologies such as Lens Studio and Unreal Engine's (UE) Live Link Face. However, given the different positions patients are placed in throughout the Epley maneuver, some movements make the face impossible to track (e.g., when the side of the face or the back of the head is facing the camera), so the vestibular system and crystals would not move accordingly. Live Link Face has the additional problem that it cannot provide real-time face capture data to a built UE project.

Image tracking

To address the face tracking difficulties, we considered multi-image tracking (inspired by ZapBox controllers). Although this would require additional equipment, we thought having students print the image markers would not be too much of a hassle. However, after testing different marker images and marker sizes, we kept encountering tracking issues once the camera moved farther than approximately 1 foot (about 30 cm) from the marker. This is problematic because holding the phone that close to the face would make it difficult for students to conduct the maneuver while also viewing the vestibular system on their devices.

HoloLens 2

As we continued exploring different technological approaches, we realized that the characteristics of AR most relevant to this project are the ability to recognize the 3D environment and correctly place the vestibular system onto a person, and image/object tracking to keep the vestibular system correctly visualized. Handheld AR could not support these features accurately or keep the virtual elements stable, which is why we decided the HoloLens 2 would be the best solution for this project. The HoloLens 2 can track objects and hand movements fairly accurately, so we would be able to create an application that lets students interact directly with a virtual patient. Another motivation was that students would likely be less inclined to use the application for their studies if it were unappealing and buggy.

Because switching to the HoloLens 2 would make the project less accessible to students (the headset is costly for either the department or students to purchase), we decided to make the project a teaching tool for instructors demonstrating the Epley maneuver in class. We planned an additional feature to broadcast the AR session to students' personal devices while instructors use the HoloLens 2 to conduct the maneuver on a virtual patient, giving students a better view of the vestibular system and the crystal movement. While viewing the session on their own devices, students could also change the perspective to view the system and crystals from different angles. The broadcasting feature would also let students record the demonstration for future reference.

HoloLens 2 vs. Meta Quest 2

During development, we noticed flaws in the HoloLens 2's user experience that affected our project, such as the discomfort and learning curve of viewing and interacting with holograms. More importantly, however, the HoloLens 2 natively supported only a limited number of hand gestures for hand tracking, which made implementing interactions with the virtual patient challenging, since we needed different gestures for turning the patient's head and for changing the patient's body position. As a result, we began experimenting with, and ultimately switched to, the Meta Quest 2: virtual reality (VR) creates a more seamless and therefore more comfortable experience for demonstrating the Epley maneuver and visualizing the vestibular system, and the Quest 2 supports a significantly larger set of hand gestures. Additional reasons for using the Meta Quest 2 are that it is more future-proof, as Meta Quest headsets are still being produced and upgraded, and that it is significantly more affordable than the HoloLens 2 if either the department or students decide to purchase one.

Challenges

MetaHuman Interaction

Implementing the MetaHuman interaction to simulate the Epley maneuver was challenging, given the complexity of the maneuver itself and the limited documentation and resources available on how Unreal Engine MetaHumans can be interacted with.

Head Rotation

To adjust the MetaHuman's head rotation, the team currently uses Unreal Engine's Look At function, which makes the MetaHuman's head follow a specific target. In the initial implementation, the MetaHuman followed an object, so the instructor had to grab that object to change the virtual patient's head rotation. This interaction was improved by having the MetaHuman follow the instructor's hand once a particular hand gesture is made.
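The Look At function does the orientation math internally. As a rough illustration of that geometry (not Unreal's implementation), the minimal sketch below computes the yaw and pitch that point the head at the tracked hand, assuming Unreal-style axes (X forward, Y right, Z up). The clamp limits MAX_YAW_DEG and MAX_PITCH_DEG are hypothetical values chosen to keep the neck within a plausible range; they are not taken from the project.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float


# Hypothetical neck limits (degrees); not values taken from the project.
MAX_YAW_DEG = 80.0
MAX_PITCH_DEG = 60.0


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed range [low, high]."""
    return max(low, min(high, value))


def look_at_angles(head: Vec3, target: Vec3) -> tuple[float, float]:
    """Return (yaw, pitch) in degrees that point the head at the target.

    Assumes Unreal-style axes: X forward, Y right, Z up. Yaw turns the head
    left/right in the horizontal plane; pitch tilts it up/down.
    """
    dx = target.x - head.x
    dy = target.y - head.y
    dz = target.z - head.z
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    # Clamping stops the head from twisting past the (hypothetical) limits.
    return (clamp(yaw, -MAX_YAW_DEG, MAX_YAW_DEG),
            clamp(pitch, -MAX_PITCH_DEG, MAX_PITCH_DEG))
```

For example, a hand held level with the head and 45° to one side gives a yaw of 45° and a pitch of 0°, while a hand directly behind the head is clamped to the 80° yaw limit instead of turning the neck fully around.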

Body Position

The team also briefly explored a framework for Mixamo animation retargeting, which involves live capturing another person going through the motions of the Epley maneuver and retargeting that movement onto MetaHuman animations in Unreal Engine (see the relevant files in the Additional Files section). Although this implementation produced realistic body movements, it interfered with the crystal simulation. As a result, the team created manual keyframe animations instead.

Although the combination of the head rotation interaction and the body position animations in the August 2023 prototype can visually represent the Epley maneuver for demonstration purposes, it is insufficient for simulating the actual movements conducted throughout the treatment. Future iterations should therefore consider direct bone modification of the MetaHuman or motion capture technology to replicate the Epley maneuver accurately. For more information on these considerations, please refer to the Future Plans section.

Crystals

A requirement for the project was to superimpose the vestibular system onto the virtual patient so the crystal movement could be observed while the Epley maneuver is performed. However, while simulating the crystal movement, we found that the crystals fell out of the posterior canal during sudden or high-speed movements of the model. This happened because the crystals were too small and the walls of the posterior canal too thin for the physics engine to reliably register collisions at those velocities, so the physics was not simulated correctly. To resolve this, we created a custom collider for the semicircular canals that is much larger and thicker than the walls of the visible model.

Although this solution helped keep the crystals in place, even during quicker movements, the problem persists when the user changes the virtual patient's body position and rotates the virtual patient's head at the same time. For more information about this bug, please refer to the Issues/Bugs section.
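Why thin walls and fast movement cause crystals to escape can be reasoned about with a simplified one-dimensional model of discrete, per-step collision detection: a collision is only registered if the crystal overlaps the wall at the end of some physics step, so a crystal that travels farther in one step than the wall thickness plus its own diameter can pass through without any overlap being seen. The minimal sketch below encodes that inequality. The speed, step time, and dimensions are hypothetical numbers for illustration, not measurements from the project, and the real engine's collision handling is more involved.

```python
def may_tunnel(speed_cm_s: float, step_s: float,
               wall_cm: float, crystal_diameter_cm: float) -> bool:
    """Simplified 1-D check for discrete (per-step) collision detection.

    Returns True if the crystal can move from one side of the wall to the
    other within a single physics step, so no step ever shows an overlap.
    """
    travel_per_step_cm = speed_cm_s * step_s
    return travel_per_step_cm > wall_cm + crystal_diameter_cm


def max_safe_speed(wall_cm: float, crystal_diameter_cm: float,
                   step_s: float) -> float:
    """Highest speed at which the simplified model still catches the collision."""
    return (wall_cm + crystal_diameter_cm) / step_s


# Hypothetical numbers: a 60 Hz physics step and a crystal moving at 50 cm/s.
STEP_S = 1 / 60
print(may_tunnel(50, STEP_S, wall_cm=0.05, crystal_diameter_cm=0.1))  # True (thin wall)
print(may_tunnel(50, STEP_S, wall_cm=1.0, crystal_diameter_cm=0.1))   # False (thick collider)
```

In this model, the thicker custom collider raises the right-hand side of the inequality, while the increased damping listed under Issues/Bugs lowers the crystal speed on the left. Unreal Engine also offers a per-body continuous collision detection (CCD) option aimed at the same failure mode.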

Future Plans

Live Stream/Broadcast

Pixel Streaming is a built-in Unreal Engine feature that lets the primary user stream their application session to others in real time. In the context of this project, the instructor would be able to demonstrate the session live to the classroom. The August 2023 prototype does not have Pixel Streaming available for the Meta Quest 2. More details on setup and research are in the Teams channel under the Subject Reference folder.

For further information on the team's progress so far, please request access to the 23-3003 Vestibular Assessment channel on Teams and see Subject Reference/Pixel Streaming in Unreal Engine.docx.

Improving MetaHuman Interaction

The current MetaHuman interaction uses a series of hand gestures for changing the head rotation and body position of the MetaHuman. However, the approach of using preset animations does not appropriately simulate the Epley maneuver, which is why for future iterations the team should consider alternative methods such as bone modification for direct manipulation of the MetaHuman, or using motion capture to retarget a person's movement onto the MetaHuman.

Note: Using motion capture would change the user experience of this project. Instead of the instructor performing the Epley maneuver on the MetaHuman, they would be doing it on another person whose motions throughout the treatment would be captured either through computer vision or body sensors and retargeted to the MetaHuman virtual patient.

Eye Movement

Part of learning the Epley maneuver is understanding the abnormal eye movements (nystagmus) that are triggered by changes in the patient's body position. As an additional feature, we would like to create animations of these eye movements and have them triggered by the movement and positioning of the crystals in the posterior canal to help students further understand the treatment.

Epley Maneuver on the Left Side

As a proof-of-concept, the project so far has focused on representing the Epley maneuver on the right side. In the future, we would like to include the option to perform the Epley maneuver on the left side, so instructors can choose which side to demonstrate. This would involve adding a vestibular model for the left ear and modifying the existing body animations to turn the MetaHuman to the left instead of the right.
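Much of the left-side work amounts to mirroring right-side content across the patient's midline (sagittal) plane. The minimal sketch below shows only the math of that reflection for a single keyframe, assuming Unreal-style axes (X forward, Y right, Z up) and rotations stored as pitch/yaw/roll in degrees. The Keyframe type is hypothetical; the project's animations are Control Rig sequences, and this does not describe how they are stored. Reflecting across the X-Z plane negates the Y location, keeps pitch (rotation about Y, the plane's normal), and negates yaw and roll (rotations about axes lying in the plane).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyframe:
    """Hypothetical keyframe: location in cm, rotation in degrees."""
    location: tuple[float, float, float]  # (x forward, y right, z up)
    pitch: float  # rotation about Y
    yaw: float    # rotation about Z
    roll: float   # rotation about X


def mirror_left_right(key: Keyframe) -> Keyframe:
    """Reflect a keyframe across the X-Z (sagittal) plane.

    Flipping Y reverses rotations about axes lying in the plane (X and Z),
    so yaw and roll change sign, while pitch about the plane's normal (Y)
    is unchanged.
    """
    x, y, z = key.location
    return Keyframe(location=(x, -y, z), pitch=key.pitch,
                    yaw=-key.yaw, roll=-key.roll)
```

Mirroring twice returns the original keyframe, and a 45° head turn toward one side becomes a 45° turn toward the other.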

User Interface for Instructions

First-time users should be instructed on which gestures to use, since the gestures are specific to each hand. Refer to the Figma prototype for how this should be implemented.

Development

GitHub Repo

GitHub link: https://github.com/ubcemergingmedialab/23-3003-Vestibular_Assessment

Active Branches

| Branch Name | Purpose | Last Modified Date | Modified By | Build/Demo Ready? |
|---|---|---|---|---|
| main | Default branch (branch also contains work done for HoloLens 2) | July 6, 2023 | Michelle Huynh | No |
| vesti_complete03 | Completed demo for August 2023 for Meta Quest 2. Built in Unreal Engine 5.1.1. Contains primary features: Epley maneuver for posterior canal and reset function. | August 30, 2023 | Jerry Wang | Yes |
| VR_nogit | Testing various implementation methods that allow users to directly manipulate the MetaHuman (bone modification and motion capture with Pose Cam) | August 8, 2023 | Jerry Wang | No |
| branch88 | Project using original MetaHuman interactions (hand gestures to control head movement and body positioning) | July 21, 2023 | Jerry Wang | No |
| branch89 | Project using original MetaHuman interactions (hand gestures to control head movement and body positioning) | August 10, 2023 | Michelle Huynh | No |

Unused Branches

The following branches in the repository are no longer being used:

| Branch Name | Purpose | Last Modified Date | Modified By |
|---|---|---|---|
| CrystalTests | Testing crystal movement simulation | July 6, 2023 | Frederik Svendsen |
| Detection | Prototyping handheld AR, specifically the image tracking idea | May 31, 2023 | Jerry Wang |
| VR_nogit2 | Continuing VR development after the UE Git plugin was removed | July 19, 2023 | Michelle Huynh |
| Vr_0 | Branch for VR development using the Meta Quest 2 headset | July 14, 2023 | Jerry Wang |
| Vr_1 | Branch for VR development using the Meta Quest 2 headset | July 18, 2023 | Michelle Huynh |
| Vr_2 | Test branch for removing the UE Git plugin and reverting to Git Bash (the Git plugin prevented the project from building and did not handle checked in/out files properly) | July 17, 2023 | Jerry Wang |
| hololol | Originally created for HoloLens 2 development (work ended up in the main branch once the UE Git/EML Perforce plugin was incorporated) | June 6, 2023 | Michelle Huynh |
| metaHOLOL | Originally created for HoloLens 2 development (work ended up in the main branch once the UE Git/EML Perforce plugin was incorporated) | June 6, 2023 | Michelle Huynh |
| testbranchdelete | Test branch for removing the UE Git plugin and reverting to Git Bash (the Git plugin prevented the project from building and did not handle checked in/out files properly) | July 18, 2023 | Michelle Huynh |

Key Project Files

Below are files that are relevant to the functionality and core features of the project.

| File or Folder Name | Purpose | File Path within Project | Important Values / Specific Features / Reference Location |
|---|---|---|---|
| VRTemplateMap | The main level/map for the application; it should load by default. This is where you design the environment and arrange elements such as the camera, lighting, and props. | Content/VrTemplate/Maps | Level Blueprint -> Contains the base code for key press events, the left-hand gesture logic used in the Epley maneuver animation, and event tick checks for whether the right hand is in a grab state. |
| BP_Sook-ja | The MetaHuman model used in the project. The 3D ear model is attached to it, and blueprints (the level blueprint) reference it directly. | Content/MetaHumans/Sook-ja | Viewport (Components) -> The vestibular system model's alignment can be edited here using the hierarchy. |
| BP_PosteriorCanal | The location where the crystals spawn. Contains the blueprint code that starts and stops crystal movement, as well as the mesh/model. | Content/EML/OOP/Actors/Objects | Viewport -> Position, material, and collision can be adjusted here.<br>EventGraph -> Scripts for crystal spawning and physics conditions are here. Inserted into the BP_Sook-ja viewport. |
| Tubes | The mesh/model of the other two semicircular canals of the vestibular system. | Content/EML/Art/Meshes | Position on the MetaHuman can be adjusted in BP_Sook-ja. Material and collision can be adjusted here. |
| Utricle | The mesh/model that connects all the semicircular canals of the vestibular system. | Content/EML/Art/Meshes | Position on the MetaHuman can be adjusted in BP_Sook-ja. Material and collision can be adjusted here. |
| BP_Ball | The basic crystal model. Can be used to adjust appearance, colliders, shape, and movement speed. BP_PosteriorCanal runs code that automatically spawns multiple BP_Ball actors. | Content/EML/OOP/Actors/Objects | EventGraph -> ToggleSimulatePhysics (function to freeze/unfreeze crystal movement) |
| BodyAnimation | Specifies the order in which animations are played and the conditions under which they play (reversing animations, play speed, etc.). | Content/EML/Animations | AnimGraph -> AnimStateMachine, where all the animation states are. |
| EnumAction | An ordered list of the key positions of the Epley maneuver, used in BodyAnimation and the VRTemplateMap level blueprint. | Content/EML/Misc | Level Blueprint -> Values are referenced for animation states with key press and left-hand pinch events.<br>BodyAnimation -> Referenced by the transitions in AnimStateMachine. |
| Face_AnimBP | MetaHuman blueprint adjusted for head animation/position and for the right-hand pinch conditions that make the head follow. | Content/Metahumans/Common/Face | AnimGraph -> Specifies how the head moves when following vs. not following (references the EventGraph for this condition).<br>EventGraph -> Keeps track of the right hand, triggers the conditions for the AnimGraph to move the head, and references VRPawn. |
| WidgetMenu | Basic work-in-progress implementation of the UI buttons/layout. Not yet implemented in the level. | Content/VRTemplate/Blueprint | Currently contains labelled buttons with no functionality attached. |
| VRPawn | Contains code for the right-hand function (grabbing) and for setting the position of LookAtTarget, which is referenced in Face_AnimBP. | Content/VRTemplate/Blueprint | EventGraph -> Contains code for the right-hand function (grabbing) and for setting the position of LookAtTarget, which is referenced in Face_AnimBP. |
| BP_LookAtTarget | Referenced by other files | Content | Referenced by other blueprints as a locator for the MetaHuman's head to follow the right hand. |
| BP_ResetButton | A grabbable object in the level that reloads the level. | Content/EML/OOP/Actors/Objects | EventGraph -> Contains the delay before switching levels and the level loaded on trigger.<br>Viewport -> Contains the mesh and custom texture for the object, as well as the GrabComponent needed for the blueprint function. |
| BP_StreamButton | An object in the level that has no functionality yet. | Content/EML/OOP/Actors/Objects | EventGraph -> The current code only demonstrates the color changing at different timings and does nothing on load. The goal is for the color change to signal whether the stream is active. |

Additional Files

| File/Folder | Purpose | File Path in Project |
|---|---|---|
| IK_metahuman | Used for retargeting animations to different models | Content/MetaHumans/Common/Common/IK_metahuman.uasset |
| RTG_metahuman | Used for retargeting animations to different models | Content/MetaHumans/Common/Common/RTG_metahuman.uasset |

Assets

Vestibular Model

The inner ear model that was used to help the team create the vestibular model for this project can be found here. The original inner ear model, created by the University of Dundee School of Medicine, was modified by excluding all parts of the inner ear except for the semicircular canals and the utricle.

The final vestibular model is separated into four components (2 halves of the posterior canal, the other two semicircular canals, and the utricle). The canals were hollowed out to create space for the crystals to move through and the materials for the canals were changed so the crystals could be easily observed. Finally, the posterior canal was changed to a different shade of blue from the other canals for differentiation and labelling purposes.

| Asset | Description | Location | Material |
|---|---|---|---|
| Posterior Canal | Posterior Semicircular Canal and where the crystals will spawn | 23-3003-Vestibular_Assessment/Content/EML/Art/Meshes/PosteriorCanal.uasset | M_TransparentBlue |
| Tubes | Superior Semicircular Canal and Lateral Semicircular Canal | 23-3003-Vestibular_Assessment/Content/EML/Art/Meshes/Tubes.uasset | M_GlassBlue |
| Utricle | Connecting point for the 3 vestibular canals | 23-3003-Vestibular_Assessment/Content/EML/Art/Meshes/Utricle.uasset | MI_Green |

Note: The original Blender file for this model can be accessed in the 23-3003 Vestibular Assessment channel on Teams under the Project Assets folder.

Clinic Room

The following assets/packages were also used to create the VR clinic room environment. Note: the Unreal Engine Marketplace packages were acquired while they were offered free for the month.

| Asset | Description | Location |
|---|---|---|
| Books | Stack of books | https://sketchfab.com/3d-models/variety-of-books-9ecd80af3b7e4cd59efb4c141511a55b |
| Desktop Computer | Desktop computer (monitor, keyboard, mouse) | https://sketchfab.com/3d-models/desktop-computer-561abc2fc95941609fc7bc6f232895c2 |
| Physiotherapy Bed | Physiotherapy bed for MetaHuman | Original Blender file in 23-3003 Vestibular Assessment channel on Teams under the Project Assets folder |
| Chairs & Tables Vol. 4 | Asset package of various chairs and tables (used for the patient chairs, and computer desk) | https://www.unrealengine.com/marketplace/en-US/product/chairs-tables-vol |
| Brutalist Architecture Office | Asset package of various furniture, props, lights, etc. (used for the ceiling light, office chair, and bookshelf) | https://www.unrealengine.com/marketplace/en-US/product/brutalist-architecture-office |
| Museum Environment Kit | Asset package of museum-related props (used for the paintings on the wall) | https://www.unrealengine.com/marketplace/en-US/product/museum-environment-kit |
| MEDA Furniture Pack | Asset package of various furniture (used for the vases on the bookshelf) | https://www.unrealengine.com/marketplace/en-US/product/meda-furniture-pack |

Preset Animations

These animations were produced using the Control Rig for MetaHumans in Sequencer. All animations are stored in Content/EML/Animations, with folders separating forward and reverse animations as well as other iterations. Some animations that are not used in the final prototype are numbered to represent the stage in which they would otherwise run. The preset animations are used in BodyAnimation and can also be migrated to other projects; if a folder is not present in the target project, it is generated automatically to match the file path in the original project (this also applies to other assets, such as meshes).
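Because EnumAction is an ordered list and the animations exist in forward and reverse versions, choosing which clip to play can be reduced to comparing two step indices. The minimal sketch below illustrates that idea under stated assumptions: the step names follow the order in the User Manual, while the clip names (To_<STEP>) and the rule of playing each intermediate clip in turn are hypothetical and not taken from BodyAnimation. It does not model the pinky gesture's return to the default position, which may use its own animation.

```python
from enum import IntEnum


class EpleyStep(IntEnum):
    """Hypothetical step names, in the order given in the User Manual."""
    SITTING = 0
    LYING_ON_BACK = 1
    TURNED_ON_SIDE = 2
    SITTING_ON_EDGE = 3


def clips_between(current: EpleyStep, target: EpleyStep) -> list[tuple[str, bool]]:
    """Return (clip_name, play_reversed) pairs that move current -> target.

    Forward: play the clip leading into each later step, in order.
    Backward: play the clip that led into each step being left, reversed.
    Clip names are hypothetical placeholders.
    """
    clips: list[tuple[str, bool]] = []
    if target > current:
        for step in range(current + 1, target + 1):
            clips.append((f"To_{EpleyStep(step).name}", False))
    else:
        for step in range(current, target, -1):
            clips.append((f"To_{EpleyStep(step).name}", True))
    return clips
```

For example, going back from the side position to lying on the back yields a single entry, the clip that led into the side position, played in reverse.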

Plugins

| Plugin Name | Why we need it | Where to get it | Required/Optional |
|---|---|---|---|
| EML Plugin (Unreal) | Generates the EML project structure | https://github.com/ubcemergingmedialab/23-300A-EML_Plugins | Required |
| Meta XR Plugin | Customize Unreal Engine for Meta Quest development | https://developer.oculus.com/downloads/package/unreal-engine-5-integration/ | Required |
| MetaHuman Plugin | Turns custom meshes into MetaHumans and allows for high-fidelity facial-capture animation | UE Marketplace | Required |
| Quixel Bridge | Downloading MetaHumans | UE Marketplace | Required |

Issues/Bugs

Known issues affecting the functionality or stability of the program:

- Project instability

- Project only works on certain Meta Quest headsets

- Project only opens on certain computers

- Crystals can still fall out when the MetaHuman's body position changes at the same time as the head rotates. The rotational freeze applied during movement accounts for only one moving force, not two, so the head cannot be rotated while a body animation is playing without the crystals falling out.

- Workaround for being unable to account for two physics forces simultaneously: head rotation is restricted while animations are playing (implemented by tracking the MetaHuman's hand position to detect whether it has changed).

- Animation blending bug: the first time the user pinches the right thumb and index finger to make the MetaHuman watch the hand, the animation transitions smoothly, but on subsequent pinches it snaps.

- Bone transform modification bug: when a grab point that transforms the pose of the MetaHuman's head is attached to the neck bone, a rotation constraint bug causes the grab point to detach from the neck and behave abnormally.

- Crystal simulation bug: crystals tend to fall out of the posterior canal. Partially fixed by extruding an invisible outer layer around the posterior canal, increasing the linear and angular damping of the crystal balls, and requiring the MetaHuman to watch the hand while the body movement animation plays.

- Occasional animation jitter, possibly caused by playback speed.

- Gesture detection is unreliable: finger pinches are sometimes not registered.

- Hands sometimes disappear if the headset is taken off and put back on while the program is running.
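The workaround for the two-force issue (restricting head rotation while an animation plays, detected by tracking whether the MetaHuman's hand position has changed) can be sketched as a small per-tick gate. This is a minimal illustration, not the project's blueprint logic: it assumes positions arrive once per tick as (x, y, z) tuples, and the class name and tolerance value are hypothetical.

```python
import math
from typing import Optional

Vec = tuple[float, float, float]


class HeadRotationGate:
    """Hypothetical per-tick gate that blocks head rotation during body animation.

    The body is treated as animating whenever the tracked MetaHuman hand
    moved more than `tolerance` (hypothetical value and units) since the
    previous tick.
    """

    def __init__(self, tolerance: float = 0.1) -> None:
        self.tolerance = tolerance
        self._last_hand: Optional[Vec] = None

    def allow_head_rotation(self, metahuman_hand: Vec) -> bool:
        """Record this tick's hand position; return False if it moved."""
        moved = (
            self._last_hand is not None
            and math.dist(self._last_hand, metahuman_hand) > self.tolerance
        )
        self._last_hand = metahuman_hand
        return not moved
```

Calling allow_head_rotation once per tick and skipping the look-at update whenever it returns False keeps the two forces from acting on the crystals at the same time in this model.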

First Time Setup

NOTE: Because the project uses the MetaHuman Plugin, development is only supported on the Windows operating system.

Downloading Unreal Engine

- Download and install the Epic Games Launcher, if you don't have it already

- Sign up for an Epic Games account, if you don't have one already

- Sign in to the Epic Games Launcher, if not signed in already

- Install Unreal Engine 5.1.1.

More detailed instructions can be found on Unreal Engine's documentation for installing Unreal Engine.

Installing Unreal Engine Marketplace Plugins

The following Unreal Engine Marketplace plugin(s) are required for this project:

- MetaHuman Plugin

Follow the steps below to install each plugin:

- In the Epic Games Launcher, navigate to the Unreal Engine tab, and then go to Marketplace

- Search for the plugin

- On the plugin's page, click Add to Cart

- Click the Cart button and check out

- Install the plugin to Unreal Engine 5.1.1

More detailed instructions can be found on Unreal Engine's documentation for working with plugins (refer to "Installing Plugins from the Unreal Engine Marketplace" section).

Downloading and Setting Up Visual Studio (Building Tools)

- Download Visual Studio 2022 (a version compatible with Unreal Engine 5.1.1)

- Install the following workloads (necessary for compiling projects):
  - .NET desktop development
  - Desktop development with C++
  - Universal Windows Platform development
  - Game development with C++, including these components:
    - C++ profiling tools
    - C++ AddressSanitizer
    - Windows 10 SDK (10.0.18362 or newer)
    - Unreal Engine installer

More detailed instructions can be found on Unreal Engine's documentation for setting up Visual Studio.

Setting Up Android SDK and NDK

Please refer to the more detailed instructions found on Unreal Engine's documentation for setting up Android SDK and NDK.

- Install Android Studio 4.0 (May 28, 2020)

- Set up Android Studio

- Set up command-line tools for Android

- Restart Windows computer

- Set up Android NDK

Setting Up Meta Quest for Unreal Engine

There are several methods for customizing Unreal Engine to support Meta Quest development as described in Meta Quest's documentation.

The summer 2023 team followed Option 1:

- Download and install Unreal Engine 5.1.1, if you haven't done so already

- Download the MetaXR plugin version 54.0 (for Unreal Engine 5.1.1) .zip file here

- Extract the .zip file contents to the location <ue5InstallationFolder>\Engine\Plugins\Marketplace\MetaXR on your computer (e.g., C:\Program Files\Epic Games\UE_5.1\Engine\Plugins\Marketplace\MetaXR)
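If you set up several machines, the extraction step can be scripted. The sketch below is an illustrative helper, not part of the project or of Meta's tooling: it extracts the downloaded archive into <ue5InstallationFolder>\Engine\Plugins\Marketplace\MetaXR and then lists any .uplugin descriptor files it finds, so you can check where the descriptor landed and whether the archive created an extra nested folder. The function name and the paths in the usage example are hypothetical.

```python
import zipfile
from pathlib import Path


def install_metaxr(zip_path: str, ue_install_dir: str) -> tuple[Path, list[Path]]:
    """Extract the MetaXR plugin archive into the engine's Marketplace folder.

    Hypothetical helper. Returns the destination folder and every .uplugin
    descriptor found under it after extraction.
    """
    dest = Path(ue_install_dir) / "Engine" / "Plugins" / "Marketplace" / "MetaXR"
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    # Listing descriptors makes an unexpected nested folder easy to spot.
    descriptors = sorted(dest.rglob("*.uplugin"))
    return dest, descriptors
```

Example usage: install_metaxr(r"C:\Downloads\MetaXR.zip", r"C:\Program Files\Epic Games\UE_5.1"). Writing under C:\Program Files usually requires running the script from an administrator prompt.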

More detailed instructions can be found on Meta Quest's documentation for creating a VR application in Unreal Engine.

Opening the Project

GitHub link: https://github.com/ubcemergingmedialab/23-3003-Vestibular_Assessment

- Clone the GitHub repo

- For the most updated version of the project, switch to [BRANCH]

- Open the Unreal Engine project by opening [Vesti_Complete.uproject] file

- When the project is opened, there will be popups indicating missing project settings and/or missing plugins. Click the Enable Missing... button for each popup

- Once all missing project settings and/or missing plugins are enabled, you will be prompted to restart the project.

Manually Enabling Plugins

Any missing plugins can be enabled manually by navigating to Settings, then Plugins.

Testing Functionality With VR Headset

- Turn on the Meta Quest 2 headset

- Log into the headset

- Connect the headset to the computer via the Oculus App using a USB 3 cable

- If you do not have the Oculus App for the Meta Quest 2, you can download it here

- In Unreal Engine, select VR Preview under the Play Options dropdown

Previewing Without VR Headset

Although the project was made for VR, it can still be viewed without a headset by simulating it in various play modes.

In Selected Viewport mode, hand gestures cannot be used, but pressing the 1, 2, 3, and 4 keys (in that order) triggers the body animations and allows crystal movement to be tested. Errors may appear in the message log when running the project; this is expected, as they relate to the Meta Quest 2 headset not being connected and the application being unable to detect the user's hands.

In Simulate mode, you can select objects in the environment and move the camera around.

User Manual

Demonstrating the Epley Maneuver

Turning the Head

- Using the right hand, pinch thumb and index finger to initiate head rotation for the MetaHuman

- You may need to hold your hand still for a moment as the MetaHuman turns its head to your hand

- The MetaHuman virtual patient will continue following your hand

- Using your right hand again, pinch your thumb and index finger to stop the MetaHuman from following your hand

Changing Body Positions

The MetaHuman starts by sitting fully on the physiotherapy bed.

The MetaHuman can only change its body position while its head is following your right hand.

Change the MetaHuman's body position by using the left hand:

- Pinch thumb and index -> lie down on its back on the physiotherapy bed

- Pinch thumb and middle finger -> turn body to the side

- Pinch thumb and ring finger -> get up to sit on the edge of the physiotherapy bed

- Pinch thumb and pinky -> return to default position (fully sitting on the physiotherapy bed)

You can also go back to the previous step by pinching the previous finger

- E.g., if the MetaHuman is lying on its side and you want it to lie on its back again, pinch your thumb and index finger together
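The gesture rules above can be summarized as a small state model. The minimal sketch below is hypothetical (the class, enum, and finger names are illustrative, not the project's blueprint names): a right-hand thumb-and-index pinch toggles head following, and a left-hand pinch changes the body position only while the head is following. It does not enforce the order of the steps or play any animations; it only captures which gesture requests which position.

```python
from enum import Enum


class Position(Enum):
    """Body positions from the User Manual (names are hypothetical)."""
    SITTING = "fully sitting on the bed"
    LYING_ON_BACK = "lying on its back"
    TURNED_ON_SIDE = "turned to the side"
    SITTING_ON_EDGE = "sitting on the edge of the bed"


# Left-hand thumb + finger pinch -> requested body position.
LEFT_PINCH_TO_POSITION = {
    "index": Position.LYING_ON_BACK,
    "middle": Position.TURNED_ON_SIDE,
    "ring": Position.SITTING_ON_EDGE,
    "pinky": Position.SITTING,
}


class PatientController:
    """Hypothetical model of the gesture rules; plays no animations."""

    def __init__(self) -> None:
        self.position = Position.SITTING  # the MetaHuman starts fully sitting
        self.head_following = False

    def right_pinch(self) -> None:
        """Right thumb + index toggles whether the head follows the hand."""
        self.head_following = not self.head_following

    def left_pinch(self, finger: str) -> bool:
        """Change body position; return False if the request is ignored."""
        if not self.head_following or finger not in LEFT_PINCH_TO_POSITION:
            return False
        self.position = LEFT_PINCH_TO_POSITION[finger]
        return True
```

Going back a step is simply another left pinch: from the side position, pinching the index finger again requests the back position.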

Restarting the Level

To start over, grab the cube labelled "Press here to reset Vestibular Simulation" and move it to restart the level. The level restarts after a delay of about 2 seconds.

Poster

Team Members

Principal Investigator

Roland Fletcher

Assistant Professor of Teaching

Department of Physical Therapy

University of British Columbia

Student Team (Summer 2023)

Team Lead and Designer

Vita Chan

Work Learn at the Emerging Media Lab at UBC

Master of Library and Information Studies

University of British Columbia

Developers

Jerry Wang

Work Learn at the Emerging Media Lab at UBC

Bachelor of Cognitive Systems

University of British Columbia

Michelle Huynh

Work Learn at the Emerging Media Lab at UBC

Bachelor of Science

University of British Columbia

FAQ


License

MIT License

Copyright (c) 2023 University of British Columbia

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Last edit: May 18, 2023 by Daniel Lindenberger
