Course:CPSC522/TextSummarizationUsingMachineLearning

Title

This page is about automatic text summarization and two of the many techniques that can be used to generate it. The two papers which will be discussed on this page are:

- Extracting Important Sentences with Support Vector Machines, Tsutomu Hirao and Hideki Isozaki and Eisaku Maeda.[2]

Principal Author: Ekta Aggarwal

Abstract

This page briefly describes the problem of automatic text summarization and its need. Then, two research papers that use machine learning techniques - Decision Trees and Support Vector Machines respectively - to generate summaries are discussed, followed by the comparison between them.

Builds on

This page builds upon the following topics:

- Machine learning domain has garnered importance and appreciation from researchers in different domains. Machine learning has a wide base of cases which can be solved through the use of different techniques available under Machine learning.

- Decision trees are tree-like structures which can be used to make decisions based on historical data. Being a type of machine learning algorithm, the intuition behind decision trees is to first build a decision tree using past data and then draw conclusions on it for the futuristic data.

- Support vector machines have emerged as one of the important methodology used in Machine learning to make decisions. They are a binary classifier which has a significant amount of applications in the real world. For example, detecting if an email is spam or not, is a trivial but vital scenario where Support vector machines can be used.

Related Pages

Topics related to this page are:

- Hidden markov models are Markov models of the system which is itself modeled as the Markov process with a non-fully observable environment or hidden states. Reinforcement learning, handwriting recognition, speech recognition are some of its applications.

- Naive Bayes Classifier as the name suggests is a classifier based on Bayes theorem. It is also called simple Bayes and independence Bayes.

- Neural networks are one of the emerging algorithms which have been put to use for problems of different origins, difficulties, and the end result. Neural networks come in many flavors, some of them being - recurrent neural network, convolution neural network, Long short-term memory.

Content

Introduction

The ever-growing availability of abundant information sources such as the news articles, the internet, blogs etc., has led to an intensive research in the field of text summarization. Mainly due to the need to reduce the text into a short, focused summary which captures the details of the original text. Radev et al.[3] describe summary as” a text that is produced from one or more texts, that conveys important information in the original text(s), and that is no longer than half of the original text(s) and usually significantly less than that “. Some key takeaways from this definition are:

- Summaries should preserve the important information of the text.

- Summaries can be derived out of single or multiple documents.

- Summaries should be short.

Essentially, text summarization is the problem of extracting short, accurate and fluent words from a given longer text. Summarization tools provide us with certain advantages:

- Summaries reduce the reading time.

- Automatic summarization tools produced unbiased summaries as compared to human counterparts.

- Summaries also improves the effectiveness of indexing.

As it is seen, the flow of information is not same throughout a document, which means some sections are more important than others. Thus, the challenge is to correctly distinguish between these sections to generate a summary. But, as the data grows rapidly, it is impossible to manually summarize the text. Thus, need for automation tools has arisen which has to lead the development of the solution to this problem from various domains.

Various machine learning methods have been used to automatically generate summaries:

- Naive Bayes Classifier

- Hidden Markov models

- Log-Linear Models

- Neural Networks

- Decision Tree

- Support Vector Machines

There are two main approaches which can be used while summarizing text:

- Extractive Methods – These methods pick the sentences or phrases from the given text to construct a summary. Ranking the relevance of phrases is essentially deployed to find the words closest to the theme of the text.

- Abstractive Methods – Human tend to use this method, i.e. they generate new phrases/sentences to capture the meaning of the source text. These techniques are more challenging than the extractive ones.

Example

An example of summarizing text using an algorithm is described below.

The text:

"Artificial intelligence (AI, also machine intelligence, MI) is intelligence demonstrated by machines, in contrast to the natural intelligence (NI) displayed by humans and other animals. In computer science AI research is defined as the study of "intelligent agents": any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals.[1] Colloquially, the term "artificial intelligence" is applied when a machine mimics "cognitive" functions that humans associate with other human minds, such as "learning" and "problem solving".[2] See glossary of artificial intelligence.

The scope of AI is disputed: as machines become increasingly capable, tasks considered as requiring "intelligence" are often removed from the definition, a phenomenon known as the AI effect, leading to the quip "AI is whatever hasn't been done yet."[3] For instance, optical character recognition is frequently excluded from "artificial intelligence", having become a routine technology.[4] Capabilities generally classified as AI as of 2017 include successfully understanding human speech,[5] competing at the highest level in strategic game systems (such as chess and Go[6]), autonomous cars, intelligent routing in content delivery networks, military simulations, and interpreting complex data, including images and videos''."

is taken from Wikipedia: Artificial Intelligence for demonstration purposes. Upon this text, we can run various summarization tools which are available online, free of cost. Using one such summarization tool, the above text was summarized as follows:

"The scope of AI is disputed: as machines become increasingly capable, tasks considered as requiring "intelligence" are often removed from the definition, a phenomenon known as the AI effect, leading to the quip "AI is whatever hasn't been done yet."[3] For instance, optical character recognition is frequently excluded from "artificial intelligence", having become a routine technology.[4] Capabilities generally classified as AI as of 2017 include successfully understanding human speech,[5] competing at the highest level in strategic game systems (such as chess and Go[6]), autonomous cars, intelligent routing in content delivery networks, military simulations, and interpreting complex data, including images and videos."

Thus, as observed here, the summarization tool eases our task and creates a short and precise summary. In this page, we will see 2 different ways to summarize text automatically with the help of 2 research papers. Below, both papers are described one by one, followed by their comparison. Each paper contains background, author's contribution, and experiments sections.

Paper 1: Training a Selection Function for Extraction

Background

Chin-Yew Lin, emphasize the growing amount of text on the world wide web, which had led to the inevitable need of the use of text summarization technology around 1999, as elicited in [4], [5] and [6]. However, generating a high-quality summary was an arduous task and required the use of natural languages processing techniques such as semantic parsing, world knowledge inference, and discourse analysis. Therefore, the techniques which came around 1999 - the year in which this paper was published - focused mainly on producing extracts instead of abstracts. An extract contains important sentences of the text which reproduce verbatim. Sentences can be assigned scores/importance based on position importance, signature words, word/phrase frequency, lexical cohesion and presence of certain types of words.

In this paper, authors compared the performance of many heuristics used in generating summaries for newspaper articles. They also described the design, implementation, and performance of SUMMARIST, a multilingual – English, Arabic, Bahasa Indonesia, Japanese, Korean and Spanish – text summarization system. SUMMARIST, was based on the following equation:

Summary = Topic identification + Topic interpretation + Summary generation

Here, topic identification stage identified the most important sentences from the given text using positional importance, term frequency etc. Followed by topic interpretation, which was essentially word aggregation and could be generated using concept counting[7] and topic signatures[8]. The last stage of summarization, summary generation, could generate summaries in the form of keywords, extracts, template-based summaries and refined summaries. But, when this paper was published, SUMMARIST was able to generate only keywords and extracts from the given input.

Author's contribution

Author's initially used a simple linear combination function for SUMMARIST, in which coefficients were specified manually on the basis of experimental results. But this technique did not work well with a large collection of texts. After which various heuristics models were used to provide a better understanding of the system. Learning the contribution of each parameter to the end result helped to approximate the glass-box study.

Authors performed a study evaluating SUMMARIST, where a series of measurements were made. The training data was taken from Q&A summary data provided by TIPSTER-SUMMAC. SUMMARIST worked by assigning scores to each sentence using several independent modules. These scores were then combined to decide which sentences would be chosen for text summarization. A sentence was rated good if it also occurred in the human-made summary. Authors chose 4 topics – overcrowded prisons, cigarette consumption, computer security and solar power - for their study as shown in Table 1. One of the topics contained 48 texts while the rest had 30 each.

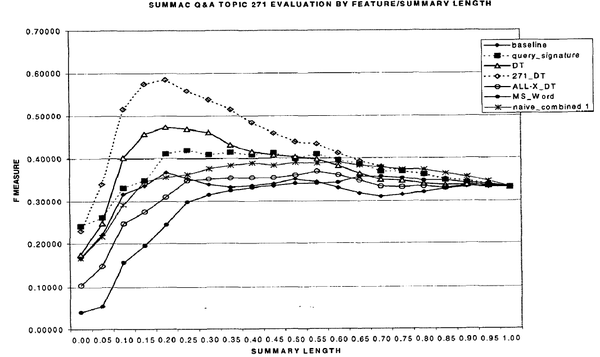

Parameters, features and combination functions chosen by authors for experiment leading to Figure 1 are:

- Baseline - A method in which a sentence is given a score based on its position in the text. Here, the first sentence will be awarded highest score while the last sentence gets the lowest.

- Title - Words in the sentence that are also in the title are awarded more score. For every term in the title, a positive score of 1 is awarded, while for the others 0 is given.

- and scores - Terms with higher term frequency() and values are more important and thus, receive more weight-age. and terms can be found in [9] and in information retrieval research [10].

- Position score - Assuming, that sentence position correlates with importance in genres, sentences with a fixed position in the text are awarded position score. SUMMARIST assigns higher position scores for terms which come in first 4 paragraphs.

- Query signature - Depending on the number of query words the sentence contains, the algorithm awards normalized score to the current sentence.

- IR signature - IR short for information retrieval, assigns a score based on the number and scope of the IR signature words included. Here, in the search, the terms that occur more often in top retrieved documents are considered more important to the user.

- Sentence length - Sentence length is calculated by taking the length of a sentence and normalizing it by the length of longest sentence.

- Average lexical connectivity - It is the number of terms which coincide with other sentences divided by the total number of sentences in the given text. Thus, this parameter assumes that sentences which have more terms in common are more important.

- Numerical data - Sentences which contained numerical data were assigned the Boolean value of 1.

- Proper name - Sentences which contained proper noun were assigned the Boolean value of 1.

- Pronoun and adjective - Sentences which contained pronoun and adjective were assigned the Boolean value of 1.

- Weekday and month - Sentences which included weekdays and months were assigned the Boolean value of 1.

- Quotation - Sentences which contained quotes were assigned the Boolean value of 1.

- First sentences - The first sentence parameter considers the first sentence of each paragraph and normalizes it from 0 to 1.

- Decision tree combination function - The scores from all the above features and parameters are combined and used by the learning process of the decision trees.

- Simple combination function - The scores from parameters - title, query signature, OPP, , IR signature, proper noun, numerical data, adjective, pronoun, weekday and month - were combined by adding their normalized scores.

Experiments

Some results that can be inferred from the experiments are as follows. The best performance as expected came from the automatic learning of the decision tree combination function which included all the above parameters and features while its derivation. It was also observed that query signature, achieved the second-best score for Solar Power topic, mainly due to sufficient information reflected from the text. On the other hand, it did not perform well on other topics. However, several methods tied up for the third position, such as IR signature, baseline and simple combination function. They also found that selecting the first sentence from the given text, for the summary is not a good idea. Another point they highlighted was that summaries usually do not contain quoted sentences. From Figure 1, we can see that the shape of curves indicate most useful summaries are not greater than 35% of the given text.

Authors also conducted experiments to check the effect of various combination functions and to estimate how these functions related to each other. The results can be seen in Figure 2. Two new combination functions were added:

- Variation 1 – The decision tree learning process was trained on test data itself and the result was plotted.

- Variation 2 – To evaluate a normal general case, the decision tree learning process was trained on the rest of the three topics, excluding the topic used in variation 1.

As expected, decision tree from variation 1 performed best, but its F-score was still below 0.6.

Paper 2: Extracting Important Sentences with Support Vector Machines

Background

In this paper, authors proposed a method of sentence extraction using Support Vector Machines(SVM). They also compared their method to three others for Text Summarization Challenge and results show that their method offered the highest accuracy. Authors highlighted that conventional methods focus on extracting sentence features and define scores based on that. Keywords, sentence position and some linguistic clues form the features of a sentence. However, when the number of features grows large, it’s nearly impossible to tune them by hand. In such cases, machine learning comes in handy because of its capability of tuning large quantities of features. Related work in this paper, encompasses of several papers which deploy various machine learning methodology to automatically summarize the text. Some examples are Bayesian classifiers, Decision trees, and Support vector machines.

Support Vector Machines

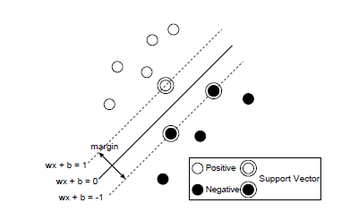

SVM is a supervised learning algorithm used in machine learning problems to classify a set of data. Given a set of training data, SVM learns how to categorize it into 2 classes based on the output of the data. When SVM has finished training, now it can take in some new data, that is the data that it has not seen before, and classify it based on the model it has learned. An example could be identifying if an email is a spam or not. Here, based on the historical data, it learns to differentiate between the two and then uses this model to gather knowledge about the emails given to it in the future. SVM aims to construct a hyperplane or many hyperplanes between the 2 data sets that it has learned. It tries to maximize the distance between them by choosing the hyperplane such that its margin is the maximum as shown below.

A feature vector for SVM can take the following form:

Here, for the j-th sample, is the feature vector while is the label which can be positive or negative.

SVM defines a hyper-plane separating the data set by the follow defined equation:

w · x + b = 0, w ∈ , b ∈ R

For more information about SVM, please refer to SVM UBC Wiki page.[11]

Author's contribution

In order to generate text summary, selection of sentences which are most related to the topic of the given text is required. Thus, all the sentences can be classified into these two categories: important or unimportant. Also, there could be a case where important sentences in training data could be different from the ones in testing data. To accomplish the task, authors employed a simple solution to find the rank of all sentences. They calculated the value of g(x), the distance of the sentence from the hyperplane. Authors used 410 Boolean variables for each sentence in their dataset which consisted of Japanese documents. Where

X = (x[1], . . .,x[410])

Some features used were:

- Position of sentences - Authors defined three feature functions for measuring the position of a sentence . Using these features, the first sentence was awarded the highest score, on the other hand, the last sentence got the lowest.

Posd() = 1−BD( )/|D()| Posp() = 1−BP()/|P()|

Here, |D()| represents the number of characters in document D() that contains .

BD() is the number of characters before in D().

|P()| represents number of characters of paragraph P() that contain .

BP() is number of characters before in a paragraph.

Posd denotes 's position in a document.

While Posp is 's position in a paragraph.

- Length of sentences - Length of a sentence was calculated from the equation given below.

Len() = ||/ max_Sz∈D()||

Here, || is the number of characters of sentence , and maxSz∈D || is the maximum number of characters in a sentence that belongs to D().

- Weight of sentences - This feature used the frequency of the words to assign scores to sentences in given text.

- Density of key words - As the name suggests, this function used the density of keywords in a sentence by using Hanning Window function.

- Named entities - x[r]=1 (1≤r≤8) is in indication of a certain Named entity class which can be observed in sentence .

- Conjunctions - This feature uses x[r]=1 (9≤r≤61) to determine if a conjunction is used in sentence.

- Functional words - This feature assigned scores to only those functional words such as and in a sentence.

- Part of speech - The expression x[r]=1 (235≤r≤300) refers to a certain part of the speech such as "Noun-" and "Verb-" is used.

- Semantical depth of nouns - x[r]=1 (301≤r≤311) denotes the depth of nouns if and only if sentence contains noun at a particular depth of the given text.

- Document Genre - This feature calculated x[r]=1 (312≤r≤315) and detected if the document belongs to a particular genre. There could be 4 possible genres: general, national, editorial and commentary.

- Symbols - It took into account x[r]=1 (r=316) the symbols in the sentence.

- Conversation - x[r]=1 (r=317) decided if a conversation style expression is included in the sentence .

- Assertive expressions - x[r]=1 (r=318) feature is activated if and only if an assertive expression is included in the sentence.

Experiments

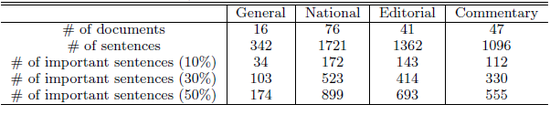

Authors used TSC summarization data for evaluation of their model. It consisted 180 Japanese documents from the years 1994, 1995 and 1998. In each of the documents, important sentences were manually extracted with summarization rates of 10%, 30%, and 50%. Table 2 shows an overview of the dataset used.

They compared decision tree learning, boosting, lead and SVM to generate text summaries.

- For decision tree learning method, authors used C 4.5 (Quinlan, 1993[12]) with default settings enabled. The above-mentioned features were used and sentences were ranked using C 4.5.

- The Boosting method, including the use of C 5.0, which applies boosting to decision tree learning. Here, sentences were ranked according to factors specified by C 5.0.

- In the Lead-based method, a simple methodology was applied, the first sentences were chosen. Parameter was chosen based on summarization rates.

- While in SVM method, authors used the second-order polynomial kernel and used the methodology described above.

The experiments included measures for evaluation, particularly accuracy. Accuracy was defined as follows: Accuracy = * 100

Where is specified number of important sentences, is number of true important sentences that were present in output.

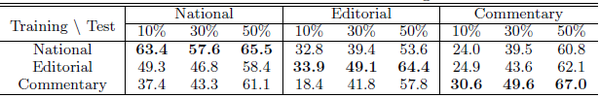

As can be followed from the Table 3, SVM achieved the highest accuracy, while lead-based method came last for all summarization rates and all genres. Table 3 also puts forward a theory that editorial and commentary are difficult as compared to other genres. Authors suspect that there could be two reasons for it:

- Non-standard features were used in these genres.

- There was no feature useful for discrimination for these genres.

Further experiments were conducted to verify the genre dependency. Authors:

- Extracted 36 documents at random from some genre which was used for training.

- Similarly they extracted 4 random documents from some genre for testing.

- And they repeated the above steps for 10 times for all combinations of ().

Their results are shown in Table 4, demonstrating that in Commentary and Editorial documents non-standard features play a vital role. Some observations that resulted were that features that were responsible for genre discrimination differed with the genre.

Comparison & Conclusion

Though both the papers described above, solve the same problem i.e text summarization, they do so by using entirely different techniques. Using machine learning as the basis for their implementation, they choose Decision trees and Support Vector Machines respectively to find the sentences which are most suited to be included in the summary. Some differences that can be highlighted in their approach were as follows:

- Authors of both the papers used different parameters or features except for 1 or 2, on which they built their solution. This activity indicates the importance of different features used to build a text summarizer. As their features were different, the summary generated by them was different from the another. However, as the authors of the second paper, Tsutomu Hirao and Hideki Isozaki and Eisaku Maeda, didn't compare the results from their approach and the one used by Chin-Yew Lin, it is difficult to say which one performed better.

- The second paper, which chose SVM as their methodology, decided it based on the increasing number of features required to assign ranks to the sentences. By using SVM, fine-tuning of the features could be easily done mainly because of the capacity of SVM to works with huge chunks of data. On the other hand, authors of the first paper were not using SVM or any other technique which could fine tune the features/parameters. So, they were using their parameters directly without tuning, which I assume could be the reason that SVM approach will give better results than Decision trees when compared.

However, results generated in second paper were compared with C4.5 and C5.0 decision trees and not in particular with the tree used in the first paper. From the results, it could be seen that use of SVM gives a significant upper hand as compared to the use of decision trees.

Annotated Bibliography

- ↑ Lin, Chin-Yew. "Training a selection function for extraction." Proceedings of the eighth international conference on Information and knowledge management. ACM, 1999.

- ↑ Hirao, Tsutomu, et al. "Extracting important sentences with support vector machines." Proceedings of the 19th international conference on Computational linguistics-Volume 1. Association for Computational Linguistics, 2002.

- ↑ Radev, Dragomir R., Eduard Hovy, and Kathleen McKeown. "Introduction to the special issue on summarization." Computational linguistics 28.4 (2002): 399-408.

- ↑ Baldwin, B., and T. Morton. 1998. Coreference-Based Summarization. In T. Firmin Hand and B. Sundheim (eds) TIPSTER SUMMAC Summarization Evaluation. Proceedings of the TIPSTER Text Phase III Workshop. Washington, DC.

- ↑ Buckley, C. and C. Cardie. 1997. SMART Summarization System. In T. Firmin Hand and B. Sundheim (eds) TIPSTER-SUMMAC Summarization Evaluation. Proceedings of the TIPSTER Text Phase III Workshop. Washington, DC.

- ↑ Cowie, J., E. Ludovik, and H. MoIina-Salgado. 1998. MINDS-Multi-lingual Interactive Document Summarization. Proceedings of the TIPSIER Text Phase Ill Workshop. Washington, DC.

- ↑ Lin, C-Y. 1995. Topic Identification by Concept Generalization, Proceedings of the Thirty-third Conference of the Association of Computational Linguistics (ACL-95), Boston, MA, pp. 308-3 10.

- ↑ Lin, C-Y. 1997. Robust Automated Topic Identification. Ph.D. dissertation, University of Southern California.

- ↑ Luhn, H.P. 1959. The Automatic Creation of Literature Abstracts. IBM Journal of Research and Development: 159-165.

- ↑ Salton, G. 1988. Automatic Text Processing. Reading,MA: Addison-Wesley.

- ↑ http://wiki.ubc.ca/Course:CPSC522/Support_Vector_Machines

- ↑ Quinlan, J. Ross. C4. 5: programs for machine learning. Elsevier, 2014.

|

|