Course:CPSC522/StackedGAN

Stacked Generative Adversarial Networks

Generative adversarial networks are popular for generative tasks, yet it can be hard to target the desired generations in the vast space of possible generations; stacking GANs is a good way of turning a relatively vague generation problem into smaller, more specific, and thus easier ones.

Principal Author: Julin Song

Collaborators:

Abstract

Generative adversarial networks (GANs) are a deep learning approach that simultaneously trains a generative network and a discriminative network that compete with each other. While this is one of the most successful models for generative tasks, one major problem is that it can be hard to coax the model into generating what the programmer desires within the vast space of possible generations. Stacking GANs is a way of breaking the problem down into known subtasks. This page is based on Wang et al.'s Generative image modeling using style and structure adversarial networks[1] and Zhang et al.'s StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks[2][note 1]. Wang et al.'s paper uses two cooperating GANs, one generating the geometric structure of an image and the other generating the texture and style for the geometric blocks generated by the first; their paper addresses the problem of generating realistic indoor scenes. Zhang et al.'s paper also uses two cooperating GANs, the first generating a low-resolution image and the second essentially performing a super-resolution task on the results of the first.

This page gives a brief overview of GANs, then covers the problem context (image synthesis), and finally discusses the two papers mentioned above.

Builds on

This page builds on generative adversarial networks and lies in the problem domain of image synthesis.

Related Pages

Related approaches to the problem include image generation from attributes[3], image synthesis using autoencoders[4], visualizing the pre-image using deep CNNs[5], and Laplacian pyramids of adversarial networks[6].

- [note 1] The authors listed as Wang X. in the two papers are different people.

Content

Background

Generative adversarial networks

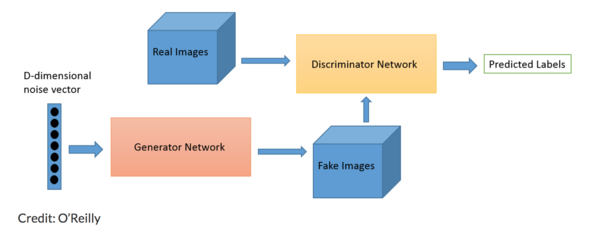

The figure above is reproduced from this page, which also gives a good explanation of how GANs work.

Shown above is a typical GAN structure. The goal for the discriminator in this example might be to distinguish real cat pictures from fake cat pictures. The goal for the generator might be to generate fake cat pictures that are indistinguishable from real ones; it is optimized to reproduce the distribution of real cat pictures, which it learns only indirectly, since only the discriminator sees the real samples. Within a GAN, the generator and the discriminator compete against each other. Ideally, the generator becomes better at generating fake data, which drives the discriminator to become better at spotting more minute differences between real and fake data, which again drives the generator to become even better at generating fake data, and so on.

This is not the same as actor-critic methods. In a GAN, the discriminator is an adversary whose objective directly opposes the generator's, so the target the generator chases keeps moving as both networks improve; in actor-critic methods the critic also learns, but it learns to evaluate the actor against a fixed reward signal rather than to defeat it. These moving goal posts are a large part of why GANs have been so successful at generative tasks. As one can also imagine, many things other than the desired upward spiral can happen during training, making GANs much less predictable to train than a basic CNN with a fixed objective.
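To make the end point of the upward spiral concrete: a standard result from the original GAN formulation is that, for a fixed generator, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). The minimal sketch below assumes hypothetical 1-D Gaussian stand-ins for the real and generated distributions and shows that, as the generator's distribution moves toward the real one, even the best discriminator is pushed toward a coin flip of 0.5.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a 1-D normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def optimal_discriminator(x: float, data: tuple, gen: tuple) -> float:
    """Best possible D(x) for a *fixed* generator: p_data / (p_data + p_g).

    `data` and `gen` are (mean, std) pairs describing hypothetical 1-D
    Gaussian stand-ins for the real and generated distributions.
    """
    p_data = gaussian_pdf(x, *data)
    p_gen = gaussian_pdf(x, *gen)
    return p_data / (p_data + p_gen)


real = (4.0, 1.0)  # hypothetical "real cat picture" distribution
# The generator's mean drifts toward the real mean as training progresses.
for gen_mean in (0.0, 2.0, 3.5, 4.0):
    d = optimal_discriminator(4.0, real, (gen_mean, 1.0))
    print(f"generator mean {gen_mean}: D*(4.0) = {d:.3f}")
```

In practice neither density is known; the discriminator network only approximates D* from samples, which is one source of the training instability described above.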

A related early high-profile result was the generation of fake images, often looking like nothing at all, that fool image classification networks into labeling them as a penguin or a bus[7]; these images were produced with evolutionary algorithms and gradient ascent rather than with GANs. A more recent high-profile result is Rutgers University's abstract art generation[8] using GANs, which is arguably the first instance of deep-learning-generated images that go beyond conglomerates of pixels.

There are variations of GANs with multiple generators or multiple adversaries[9], often with better results than their vanilla counterparts; this is somewhat related to multi-agent systems and systems science.

Image synthesis

Image synthesis is concerned with generating an image, often from a description. This description can be an object category (Labrador; chair) or something more complex (Labrador running on grass with children; chair in an empty room with a blue ball). Procedural and combinatorial generation are comparatively well-explored methods, but they do not offer the level of freedom people ultimately expect from this task. It is much more difficult for deep learning networks to generate convincingly realistic images than to identify images, because humans are great at noticing inconsistencies: a warped outline, an overly fuzzy patch, a disfigured finger. An image that is easily and confidently identified as the intended object is even more easily identified as "not real".

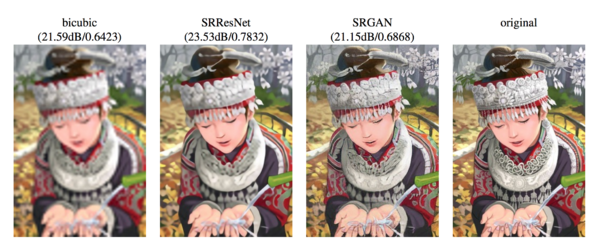

Super-resolution

Super-resolution can be considered a kind of image synthesis: a low-resolution image is used to generate a high-resolution version of itself. Below is a comparison of several super-resolution algorithms taken from the 2016 paper Photo-realistic single image super-resolution using a generative adversarial network[10].
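Super-resolution models are usually trained on pairs made by shrinking high-resolution photos, and comparisons such as the one in [10] report peak signal-to-noise ratio (PSNR) against the original among their metrics. The minimal sketch below assumes grayscale images stored as nested lists, uses average pooling as a stand-in for the bicubic downsampling such papers commonly use, and uses pixel replication as a deliberately naive upscaler; none of this is the method of [10].

```python
import math


def downsample(image, factor):
    """Average-pool a 2-D grayscale image (list of rows) by an integer factor."""
    h, w = len(image), len(image[0])
    assert h % factor == 0 and w % factor == 0, "size must be divisible by factor"
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            block = [image[i + di][j + dj] for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def replicate(image, factor):
    """Naive upscaling baseline: repeat every pixel factor x factor times."""
    return [[v for v in row for _ in range(factor)] for row in image for _ in range(factor)]


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    h, w = len(reference), len(reference[0])
    mse = sum((reference[i][j] - estimate[i][j]) ** 2
              for i in range(h) for j in range(w)) / (h * w)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


hr = [[0, 10, 20, 30],
      [10, 20, 30, 40],
      [20, 30, 40, 50],
      [30, 40, 50, 60]]
lr = downsample(hr, 2)    # 2x2 low-resolution training input
naive = replicate(lr, 2)  # 4x4 blocky reconstruction
print(lr)
print(f"PSNR of pixel replication: {psnr(hr, naive):.2f} dB")
```

A learned super-resolution model replaces `replicate` and is judged by how far it raises PSNR (and perceptual quality) above such baselines.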

Generative image modeling using style and structure adversarial networks

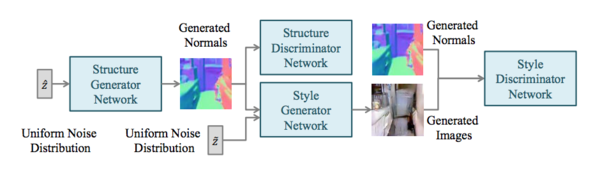

For the problem of generating realistic indoor scenes, similar to those found in real estate photos, Wang and Gupta[1] proposed S2GAN, which stacks a structure GAN that generates the geometry of an indoor scene and a style GAN that generates the colors and textures filling in that geometry. Of the two, the structure GAN is simpler: its generator and discriminator are plain deep convolutional networks, and it generates a surface normal map of an indoor scene. The style GAN is more complex: its generator takes a surface normal map as input, and it has two discriminators. One is a regular GAN discriminator that takes the normal map and the final scene image as input and outputs a realistic-or-not classification. The other is a convolutional network that reconstructs a normal map from the scene image and computes a loss against the originally supplied normal map, similar to an auto-encoder.
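The second discriminator acts as a consistency check between the generated image and the normal map it was supposed to follow. Its exact loss is not given here, so the minimal sketch below assumes a hypothetical per-pixel angular penalty, one minus the cosine similarity between predicted and supplied unit normals, averaged over the map. Normal maps are assumed to be nested lists of (x, y, z) vectors, and the normal-predicting network itself is left out.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length (surface normals are unit vectors)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def normal_consistency_loss(predicted, supplied):
    """Mean (1 - cosine similarity) between two same-sized normal maps.

    `predicted` stands for the normals recovered from the generated image by
    the (hypothetical) convolutional network; `supplied` is the normal map the
    style generator was given. 0 means perfect agreement, 2 means every
    predicted normal points the opposite way.
    """
    total, count = 0.0, 0
    for pred_row, sup_row in zip(predicted, supplied):
        for p, s in zip(pred_row, sup_row):
            p, s = normalize(p), normalize(s)
            total += 1.0 - sum(a * b for a, b in zip(p, s))
            count += 1
    return total / count


supplied = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]   # 1x2 map, both pixels face the camera
predicted = [[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]]  # second pixel is off by 90 degrees
print(normal_consistency_loss(predicted, supplied))
```

Minimizing such a term pushes the style generator to respect the geometry it was given, rather than merely producing any realistic-looking room.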

In this paper's experiments, the two GANs are first trained separately on ground-truth data. They are then combined, so that the output of the structure network becomes the input of the style network (with the convolutional normal-map discriminator removed), and trained jointly end to end, now propagating the loss of the style network back into the structure network.

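Specifically, this paper implements S2GAN using gradient descent and the following loss functions for the structure GAN:

$$\mathcal{L}^{D}_{\text{struct}} = L\big(D_1(C), 1\big) + L\big(D_1(G_1(\hat{z})), 0\big), \qquad \mathcal{L}^{G}_{\text{struct}} = L\big(D_1(G_1(\hat{z})), 1\big)$$

and the following loss functions for the style GAN:

$$\mathcal{L}^{D}_{\text{style}} = L\big(D_2(C, X), 1\big) + L\big(D_2(C, G_2(C, \tilde{z})), 0\big), \qquad \mathcal{L}^{G}_{\text{style}} = L\big(D_2(C, G_2(C, \tilde{z})), 1\big)$$

where $X$ is an input image, $C$ is the corresponding surface normal map, $D_1, G_1$ and $D_2, G_2$ are the discriminator and generator functions for each GAN, $\hat{z}$ is sampled from a uniform distribution, $\tilde{z}$ is sampled from an unspecified noise distribution (possibly Gaussian), and $L$ is the binary cross-entropy loss.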
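A minimal sketch of the structure and style GAN objectives, assuming the standard binary cross-entropy GAN form (real samples labeled 1, generated samples labeled 0, and each generator trained to have its samples labeled 1). The generators, discriminators, and float-valued "maps" are hypothetical toy stand-ins rather than the paper's convolutional networks, the normal-map consistency term is omitted, and `joint_generate` (also hypothetical) shows the end-to-end mode in which the structure GAN's output replaces the ground-truth normal map.

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy L(p, y) for one predicted probability p."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def structure_gan_losses(D1, G1, C, z_hat):
    """(discriminator loss, generator loss) for the structure GAN.

    D1 scores a normal map; G1 turns uniform noise z_hat into a normal map.
    """
    fake_normals = G1(z_hat)
    d_loss = bce(D1(C), 1) + bce(D1(fake_normals), 0)
    g_loss = bce(D1(fake_normals), 1)
    return d_loss, g_loss


def style_gan_losses(D2, G2, C, X, z_tilde):
    """(discriminator loss, generator loss) for the style GAN.

    D2 scores a (normal map, image) pair; G2 renders an image from C and noise.
    """
    fake_image = G2(C, z_tilde)
    d_loss = bce(D2(C, X), 1) + bce(D2(C, fake_image), 0)
    g_loss = bce(D2(C, fake_image), 1)
    return d_loss, g_loss


def joint_generate(G1, G2, z_hat, z_tilde):
    """End-to-end mode: the generated normal map feeds the style generator."""
    return G2(G1(z_hat), z_tilde)


# Hypothetical toy stand-ins: "maps" and "images" are single floats, and the
# discriminators return fixed probabilities for real vs. generated inputs.
C_real, X_real = 1.0, 2.0
G1 = lambda z: 0.5 * z
G2 = lambda c, z: c + z
D1 = lambda c: 0.9 if c == C_real else 0.2
D2 = lambda c, x: 0.8 if x == X_real else 0.4

z_hat, z_tilde = 0.3, 0.1  # fixed draws in place of the two noise distributions
print(structure_gan_losses(D1, G1, C_real, z_hat))
print(style_gan_losses(D2, G2, C_real, X_real, z_tilde))
print(joint_generate(G1, G2, z_hat, z_tilde))
```

Both GANs share one loss shape; the style GAN differs only in conditioning its discriminator on the normal map, which is what ties the generated texture to the generated geometry.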

The results, though still low-resolution at 128x128, were visibly more structured than those of other contemporary methods. The innovation in this paper was in the high-level network composition, while the individual neural networks were fairly standard.

StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks

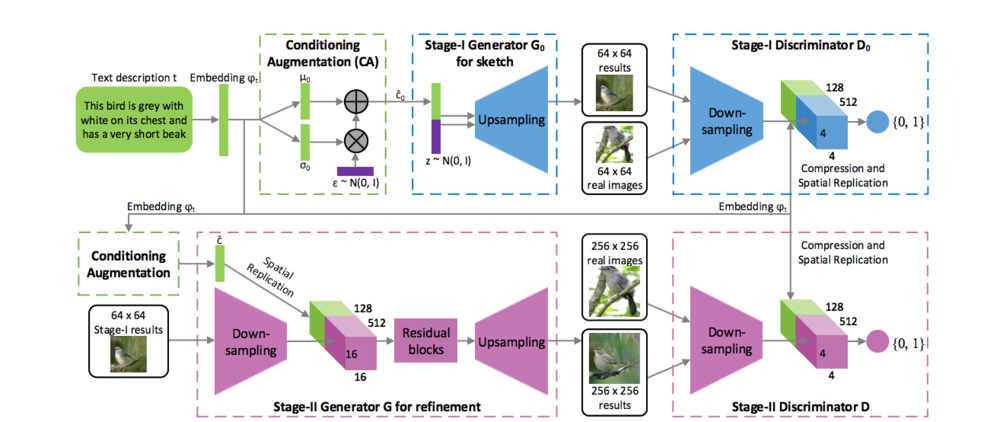

Conditioning Augmentation

This paper uses a text description as the seed for image generation. The text is first encoded into a high-dimensional text embedding. Rather than feeding this embedding to the generator directly, the paper samples a conditioning variable from a Gaussian distribution whose mean and diagonal covariance are computed from the embedding. This fills the latent space around each embedding with random variations, making the conditioning manifold smoother and therefore more conducive to training. An intuitive interpretation of why this added randomness works is that a single text description corresponds to many images, with different poses, camera angles, lighting, and other variables not explicitly mentioned in the text.

Additionally, the authors add the Kullback-Leibler divergence between this conditioning Gaussian distribution and the standard Gaussian distribution as a regularization term to the generator's training loss, again to keep the manifold smooth and conducive to training.
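Conditioning augmentation and its regularizer can be sketched in a few lines. This minimal version assumes the mean and log-variance for one description have already been computed (in StackGAN they come from a learned layer applied to the text embedding, which is omitted here), parameterizes the variance by its logarithm (a common choice, assumed here), and samples with the reparameterization trick so the draw stays differentiable with respect to the mean and variance. The closed-form KL divergence between a diagonal Gaussian and the standard Gaussian is the regularization term.

```python
import math
import random


def conditioning_augmentation(mu, log_var, rng=random):
    """Draw c_hat ~ N(mu, diag(exp(log_var))) via the reparameterization trick:
    c_hat = mu + sigma * eps with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) for a diagonal Gaussian."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


# Hypothetical statistics for one text description (two dimensions for readability).
mu, log_var = [0.5, -0.2], [0.0, -1.0]
rng = random.Random(0)
# The same description yields a different conditioning vector on every draw,
# mirroring "one text, many plausible images".
print(conditioning_augmentation(mu, log_var, rng))
print(conditioning_augmentation(mu, log_var, rng))
print(kl_to_standard_normal(mu, log_var))
```

The KL term is zero only when the conditioning distribution is exactly the standard Gaussian, so it discourages the learned means from drifting far apart and the variances from collapsing to zero.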

Two levels of GAN

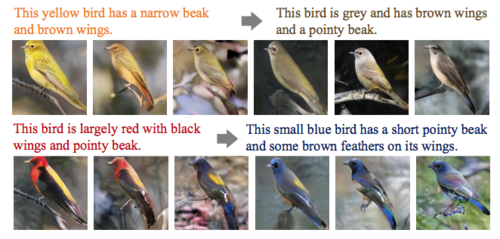

The StackGAN is composed of two smaller GANs that are, for the most part, the same. Unlike the previous paper's method, StackGAN is more generalizable and more easily stacked: the intermediate result that the second level fills in is a blurrier, lower-resolution version of the intended image rather than a surface normal map, which also makes training data easier to come by. Each discriminator takes real and generated images, together with the text embedding, as input and tries to distinguish between the two types of images. The generated images come from the generators, which take the preprocessed text embedding as input. The only differences between the two levels are that the level I generator also takes a noise vector while its output is fed into the level II generator alongside the text embedding, and that the generated images are 64x64 in level I and 256x256 in level II. Below are some results.

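Formally, the level I GAN uses the following loss functions, where the discriminator $D_0$ maximizes $\mathcal{L}_{D_0}$ and the generator $G_0$ minimizes $\mathcal{L}_{G_0}$:

$$\mathcal{L}_{D_0} = \mathbb{E}_{(I_0, t) \sim p_{\text{data}}}\big[\log D_0(I_0, \varphi_t)\big] + \mathbb{E}_{z \sim p_z,\, t \sim p_{\text{data}}}\big[\log\big(1 - D_0(G_0(z, \hat{c}_0), \varphi_t)\big)\big]$$

$$\mathcal{L}_{G_0} = \mathbb{E}_{z \sim p_z,\, t \sim p_{\text{data}}}\big[\log\big(1 - D_0(G_0(z, \hat{c}_0), \varphi_t)\big)\big] + \lambda\, D_{KL}\big(\mathcal{N}(\mu_0(\varphi_t), \Sigma_0(\varphi_t)) \,\|\, \mathcal{N}(\mathbf{0}, \mathbf{I})\big)$$

and the level II GAN uses the following loss functions, with $D$ maximizing $\mathcal{L}_D$ and $G$ minimizing $\mathcal{L}_G$:

$$\mathcal{L}_{D} = \mathbb{E}_{(I, t) \sim p_{\text{data}}}\big[\log D(I, \varphi_t)\big] + \mathbb{E}_{s_0 \sim p_{G_0},\, t \sim p_{\text{data}}}\big[\log\big(1 - D(G(s_0, \hat{c}), \varphi_t)\big)\big]$$

$$\mathcal{L}_{G} = \mathbb{E}_{s_0 \sim p_{G_0},\, t \sim p_{\text{data}}}\big[\log\big(1 - D(G(s_0, \hat{c}), \varphi_t)\big)\big] + \lambda\, D_{KL}\big(\mathcal{N}(\mu(\varphi_t), \Sigma(\varphi_t)) \,\|\, \mathcal{N}(\mathbf{0}, \mathbf{I})\big)$$

where $\varphi_t$ is the text embedding of the given description $t$, $I_0$ and $I$ are real images at the level I and level II resolutions, $z$ is noise sampled from a Gaussian distribution $p_z$, $\hat{c}_0$ and $\hat{c}$ are conditioning variables sampled from the Gaussian distributions $\mathcal{N}(\mu_0(\varphi_t), \Sigma_0(\varphi_t))$ and $\mathcal{N}(\mu(\varphi_t), \Sigma(\varphi_t))$ (whose means and covariances are learned along with the rest of the network), $s_0 = G_0(z, \hat{c}_0)$ is the level I output, $\mathcal{N}(\mathbf{0}, \mathbf{I})$ is the standard Gaussian, and $\lambda$ is 1.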
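StackGAN's objectives have the same shape at both levels: an adversarial term, plus λ times the conditioning-augmentation KL term for the generator. The minimal sketch below replaces each expectation with a single hypothetical discriminator output and takes the KL value as an input; in practice these quantities are averaged over a minibatch and optimized with a framework's automatic differentiation. Many GAN implementations also swap the generator's log(1 − D) for the non-saturating −log D, which gives stronger gradients early in training; the sketch keeps the log(1 − D) form.

```python
import math


def discriminator_objective(d_real, d_fake):
    """Quantity each StackGAN discriminator maximizes.

    d_real = D(real image, text embedding); d_fake = D(generated image, text embedding).
    """
    return math.log(d_real) + math.log(1.0 - d_fake)


def generator_objective(d_fake, kl, lam=1.0):
    """Quantity each StackGAN generator minimizes: the adversarial term plus
    lam times the conditioning-augmentation KL term (lam = 1 in the paper)."""
    return math.log(1.0 - d_fake) + lam * kl


# Hypothetical values: the same two formulas serve level I (generator inputs
# z and c_hat_0) and level II (generator inputs s_0 and c_hat).
d_real, d_fake, kl = 0.9, 0.3, 0.05
print(discriminator_objective(d_real, d_fake))
print(generator_objective(d_fake, kl))
```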

As with the previous paper, this paper's innovation lies in the high-level network structure, while its internal components are fairly standard: nearest-neighbor upsampling, convolution layers, and (leaky) ReLU activations. Unlike the previous paper, its results are visually much more impressive: higher resolution and, at a cursory glance, flawless, although the problem domain is images with one main object, which is ostensibly less difficult than generating an entire scene. Comparing the two methods, StackGAN has a less rigid structure and can be expected to work across more problems without much modification. One could easily imagine adding a couple of levels to StackGAN, although more than that probably would not help; see below for examples from a 2018 incremental paper that does exactly that, Photographic Text-to-Image Synthesis with a Hierarchically-nested Adversarial Network[11], which conveniently also illustrates the previously mentioned continuous input manifold.
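The standard components listed above are typically combined into an upsampling block. The sketch below is a hypothetical single-channel version of such a block: nearest-neighbor upsampling by 2, a 3x3 convolution with zero padding, then a leaky ReLU (slope 0.2 assumed). Real blocks operate on many channels and include normalization layers, which are omitted here; each block doubles the spatial size, so a few blocks in sequence take a small feature map up to the output resolution.

```python
def upsample_nearest(image, factor=2):
    """Nearest-neighbor upsampling: each pixel becomes a factor x factor block."""
    return [[v for v in row for _ in range(factor)] for row in image for _ in range(factor)]


def conv3x3(image, kernel):
    """Single-channel 3x3 convolution (cross-correlation, as in deep-learning
    libraries) with zero padding, so the output keeps the input size."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # pixels outside count as zero
                        acc += kernel[di + 1][dj + 1] * image[y][x]
            out[i][j] = acc
    return out


def leaky_relu(image, slope=0.2):
    """Pass positive values through; scale negative values by `slope`."""
    return [[v if v > 0 else slope * v for v in row] for row in image]


def upsampling_block(image, kernel):
    """Hypothetical single-channel upsample -> convolve -> activate block."""
    return leaky_relu(conv3x3(upsample_nearest(image), kernel))


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # kernel that copies its input
feature_map = [[1.0, -1.0], [0.0, 2.0]]
for row in upsampling_block(feature_map, identity):
    print(row)
```

With a learned kernel instead of the identity, the convolution smooths and refines the blocky upsampled map, which is how such generators add detail as resolution grows.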

StackGAN is not the same as the similarly named Stacked Generative Adversarial Networks of Huang et al.[12], which is more of a multi-generator, multi-adversary network.

Annotated Bibliography

- [1] Wang X, Gupta A. Generative image modeling using style and structure adversarial networks. In European Conference on Computer Vision 2016 Oct 8 (pp. 318-335). Springer, Cham.

- [2] Zhang H, Xu T, Li H, Zhang S, Huang X, Wang X, Metaxas D. StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks. In IEEE International Conference on Computer Vision (ICCV) 2017 Oct 1 (pp. 5907-5915).

- [3] Yan X, Yang J, Sohn K, Lee H. Attribute2Image: Conditional image generation from visual attributes. In European Conference on Computer Vision 2016 Oct 8 (pp. 776-791). Springer, Cham.

- [4] Nguyen A, Yosinski J, Bengio Y, Dosovitskiy A, Clune J. Plug & play generative networks: Conditional iterative generation of images in latent space. arXiv preprint arXiv:1612.00005. 2016 Nov 30.

- [5] Mahendran A, Vedaldi A. Visualizing deep convolutional neural networks using natural pre-images. International Journal of Computer Vision. 2016 Dec 1;120(3):233-55.

- [6] Denton EL, Chintala S, Fergus R. Deep generative image models using a Laplacian pyramid of adversarial networks. In Advances in Neural Information Processing Systems 2015 (pp. 1486-1494).

- [7] Nguyen A, Yosinski J, Clune J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015 (pp. 427-436).

- [8] Elgammal A, Liu B, Elhoseiny M, Mazzone M. CAN: Creative adversarial networks, generating "art" by learning about styles and deviating from style norms. arXiv preprint arXiv:1706.07068. 2017 Jun 21.

- [9] Durugkar I, Gemp I, Mahadevan S. Generative multi-adversarial networks. arXiv preprint arXiv:1611.01673. 2016 Nov 5.

- [10] Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z, Shi W. Photo-realistic single image super-resolution using a generative adversarial network. arXiv preprint. 2016 Sep 15.

- [11] Zhang Z, Xie Y, Yang L. Photographic text-to-image synthesis with a hierarchically-nested adversarial network. arXiv preprint arXiv:1802.09178. 2018 Feb 26.

- [12] Huang X, Li Y, Poursaeed O, Hopcroft J, Belongie S. Stacked generative adversarial networks. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017 Jul 1 (Vol. 2, p. 4).
