Course:CPSC522/Character Level Language Models using LSTM

Character Level Language Models using LSTM

This page primarily follows [1] and [2].

Principal Author: Kevin Dsouza

Collaborators:

Abstract

This page covers character-level language models implemented using Long Short-Term Memory (LSTM) networks. The first half introduces a character-aware neural language model, and the second half builds on this idea with a hierarchical structure for character-level models.

Builds on

This page builds on Recurrent Neural Networks and Natural Language Processing.

Related Pages

Character level language models depart from Word level language models and come under the general category of Language models.

Paper 1: Character-Aware Neural Language Models

Introduction

Traditional methods in language modeling involve making an n-th order Markov assumption and estimating n-gram probabilities via counting. The count-based models are simple to train, but due to data sparsity, the probabilities of rare n-grams can be poorly estimated. Neural Language Models (NLMs) address the issue of n-gram data sparsity by utilizing word embeddings [3]. Word embeddings derived from NLMs have the property that semantically close words are close in the induced vector space. Even though NLMs outperform count-based n-gram language models [4], they are oblivious to subword information (e.g. morphemes). Embeddings of rare words can thus be poorly estimated, leading to high perplexity (a measure of how well a probability distribution predicts a sample; lower is better), which is especially problematic in morphologically rich languages.

In this work, the authors propose a language model that leverages subword information through a character-level convolutional neural network (CNN), whose output is used as an input to a recurrent neural network language model (RNN-LM). Unlike previous works that utilize subword information via morphemes [5], this model does not require morphological tagging as a pre-processing step.

Long-Short-Term-Memory

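Long short-term memory (LSTM) [6] addresses the problem of learning long-range dependencies in recurrent neural networks by adding a memory cell vector at each time step. One step of an LSTM takes as input $x_t$ (input vector at time $t$), $h_{t-1}$ (hidden state vector at time $t-1$), $c_{t-1}$ (memory cell vector at time $t-1$) and produces $h_t$ (hidden state vector at time $t$), $c_t$ (memory cell vector at time $t$) via the following intermediate calculations:

$$
\begin{aligned}
i_t &= \sigma(W^i x_t + U^i h_{t-1} + b^i) \\
f_t &= \sigma(W^f x_t + U^f h_{t-1} + b^f) \\
o_t &= \sigma(W^o x_t + U^o h_{t-1} + b^o) \\
g_t &= \tanh(W^g x_t + U^g h_{t-1} + b^g) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Here $\sigma(\cdot)$ and $\tanh(\cdot)$ are the element-wise sigmoid and hyperbolic tangent functions, $\odot$ is the element-wise multiplication operator, and $i_t$, $f_t$, $o_t$ are referred to as input, forget, and output gates. At $t = 1$, $h_0$ and $c_0$ are initialized to zero vectors. Parameters of the LSTM are $W^j$, $U^j$, $b^j$ for $j \in \{i, f, o, g\}$.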

Memory cells in the LSTM are additive with respect to time, alleviating the vanishing gradient problem. This is the most important distinction of LSTM in the sense that it allows for an uninterrupted gradient flow through the memory cell. Gradient exploding is still an issue, though in practice simple gradient clipping works well. LSTMs have outperformed vanilla RNNs on many tasks, including on language modeling [7].
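One LSTM step can be sketched in plain Python. This is a minimal illustration, assuming the standard formulation with input, forget, and output gates plus a tanh candidate vector; the names `lstm_step`, `affine`, and `params` are hypothetical and not taken from [1].

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Return W x + U h + b, with W and U given as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps each of 'i', 'f', 'o', 'g' to a (W, U, b) triple."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate values
    # The memory cell update is additive: old content is scaled and new content is added.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

With all parameters set to zero, every gate equals 0.5 and the candidate is 0, so the new memory cell is simply half of the previous one. This makes the additive path through the cell easy to see.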

Recurrent Neural Network Language model (RNNLM)

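Let $\mathcal{V}$ be the fixed size vocabulary of words. A language model specifies a distribution over $w_{t+1}$ (whose support is $\mathcal{V}$) given the historical sequence $w_{1:t} = [w_1, \ldots, w_t]$. A recurrent neural network language model (RNN-LM) applies an affine transformation to the hidden layer followed by a softmax:

$$
\Pr(w_{t+1} = j \mid w_{1:t}) = \frac{\exp(h_t \cdot p^j + q^j)}{\sum_{j' \in \mathcal{V}} \exp(h_t \cdot p^{j'} + q^{j'})}
$$

where $p^j$ is the $j$th column of $P$ (output embedding), and $q^j$ is a bias term. If $w_{1:T} = [w_1, \ldots, w_T]$ is the sequence of words in the training corpus, training involves minimizing the negative log-likelihood (NLL) of the sequence, which is done by truncated backpropagation through time.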
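The output layer and training objective described above can be sketched as follows. This is an illustrative example only: the output embedding is stored as one vector per word (that is, the columns of the output embedding matrix), and the function names are hypothetical.

```python
import math

def next_word_distribution(h, P, q):
    """Softmax over the vocabulary: P[j] is the output embedding of word j, q[j] its bias."""
    scores = [sum(hk * pk for hk, pk in zip(h, p_j)) + q_j for p_j, q_j in zip(P, q)]
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def negative_log_likelihood(distributions, targets):
    """Sum of -log Pr(w_t | history), given one predicted distribution per position."""
    return -sum(math.log(dist[w]) for dist, w in zip(distributions, targets))
```

For a zero hidden state and zero biases every word gets the same score, so the distribution is uniform and the NLL of a single target word is the log of the vocabulary size.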

Character-level Convolutional Neural Network

Let $\mathcal{C}$ be the vocabulary of characters, $d$ be the dimensionality of character embeddings, and $Q \in \mathbb{R}^{d \times |\mathcal{C}|}$ be the matrix of character embeddings. Suppose that word $k \in \mathcal{V}$ is made up of a sequence of characters $[c_1, \ldots, c_l]$, where $l$ is the length of word $k$. Then the character-level representation of $k$ is given by the matrix $C^k \in \mathbb{R}^{d \times l}$, where the $j$th column corresponds to the character embedding for $c_j$.

A convolution between $C^k$ and a filter (or kernel) $H \in \mathbb{R}^{d \times w}$ of width $w$ is applied, after which a bias is added followed by a nonlinearity to obtain a feature map $f^k \in \mathbb{R}^{l - w + 1}$. The $i$th element of $f^k$ is:

$$
f^k[i] = \tanh\left(\langle C^k[*, i : i + w - 1], H \rangle + b\right)
$$

where $C^k[*, i : i + w - 1]$ is the $i$-to-$(i + w - 1)$-th column of $C^k$ and $\langle A, B \rangle = \mathrm{Tr}(A B^T)$ is the Frobenius inner product. Finally, take the max-over-time:

$$
y^k = \max_i f^k[i]
$$

as the feature corresponding to the filter $H$ (when applied to word $k$). The idea, the authors say, is to capture the most important feature for a given filter. "A filter is essentially picking out a character n-gram, where the size of the n-gram corresponds to the filter width". Thus the framework uses multiple filters of varying widths to obtain the feature vector for $k$. So if a total of $h$ filters $H_1, \ldots, H_h$ are used, then $y^k = [y^k_1, \ldots, y^k_h]$ is the input representation of $k$.
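The convolution followed by max-over-time pooling can be sketched in plain Python. This is a minimal illustration that assumes the word is at least as long as the filter width (no padding is applied here); the function names are hypothetical.

```python
import math

def char_cnn_feature(C, H, b):
    """Max-over-time feature of a single filter.

    C: character embedding matrix of one word, as a list of d rows of length l.
    H: filter, as a list of d rows of length w.  b: scalar bias.
    """
    l, w = len(C[0]), len(H[0])
    feature_map = []
    for i in range(l - w + 1):
        # Frobenius inner product between the window of w columns starting at i and H
        s = sum(C[r][i + j] * H[r][j] for r in range(len(H)) for j in range(w))
        feature_map.append(math.tanh(s + b))
    return max(feature_map)

def word_representation(C, filters):
    """Concatenate the max-over-time features of several (H, b) filters."""
    return [char_cnn_feature(C, H, b) for H, b in filters]
```

Because only the maximum over positions is kept, each filter contributes one number regardless of word length, which is what gives a fixed-size representation for words of different lengths.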

Highway Network

A highway network layer, proposed in [8], computes the following function of its input $y$:

$$
z = t \odot g(W_H y + b_H) + (1 - t) \odot y
$$

where $g$ is a nonlinearity, $t = \sigma(W_T y + b_T)$ is called the transform gate, and $(1 - t)$ is called the carry gate. Similar to the memory cells in LSTM networks, highway layers allow for training of deep networks by carrying some dimensions of the input directly to the output.
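A single highway layer can be sketched as below. This is an illustrative example: the nonlinearity is assumed to be a ReLU, and the names `highway` and `matvec` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, y):
    """Matrix-vector product with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, y)) for row in W]

def highway(y, W_H, b_H, W_T, b_T):
    """Mix a transformed input with the untouched input, dimension by dimension."""
    t = [sigmoid(a + b) for a, b in zip(matvec(W_T, y), b_T)]   # transform gate
    g = [max(0.0, a + b) for a, b in zip(matvec(W_H, y), b_H)]  # ReLU (assumed nonlinearity)
    # (1 - t) acts as the carry gate: it passes the input straight through.
    return [tk * gk + (1.0 - tk) * yk for tk, gk, yk in zip(t, g, y)]
```

A strongly negative transform-gate bias drives the gate toward 0, so the layer starts out close to the identity and passes its input through almost unchanged.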

The overall working of the model can be observed in Figure 1. Essentially the character level CNN applies convolutions on the character embeddings with multiple filters and max pools from these to get a fixed dimensional representation. This is then fed to the highway layer which helps in encoding semantic features which are not dependent on edit distance alone. The output of the highway layer is then fed into an LSTM that predicts the next word.

Evaluation

Perplexity (PPL) is used to evaluate the performance of the models. The perplexity of a model over a sequence $[w_1, \ldots, w_T]$ is given by:

$$
PPL = \exp\left(\frac{NLL}{T}\right)
$$

where $NLL$ is calculated over the test set.
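As a small worked example, perplexity can be computed from the probabilities a model assigned to each token of a test sequence. The function name and the input probabilities below are hypothetical.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-likelihood over the sequence."""
    nll = -sum(math.log(p) for p in token_probs)
    return math.exp(nll / len(token_probs))

# A model that assigns probability 1/4 to every token is as uncertain as a
# uniform choice among 4 options, so its perplexity is 4 (up to rounding).
uniform_ppl = perplexity([0.25] * 8)
```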

The hyperparameters are tuned on the Penn Treebank (PTB), and the model is then applied to various morphologically rich languages: Czech, German, French, Spanish, Russian, and Arabic.

Penn Treebank is a large annotated corpus consisting of over 4.5 million words of American English [9]. Two versions of the model are trained by the authors to assess the trade-off between performance and size. As another baseline, two comparable LSTM models that use word embeddings (LSTM-Word-Small, LSTM-Word-Large) are also trained. The large model presented in the paper is on par with the existing state-of-the-art (Zaremba et al. 2014), despite having approximately 60% fewer parameters. The small model significantly outperforms other NLMs of similar size, as can be observed in Figure 3.

English is relatively simple from a morphological standpoint, and thus the next set of results, which the authors claim as the main contribution of the paper, focuses on languages with richer morphology. Results are compared against the morphological log-bilinear (MLBL) model from [5], which takes into account subword information through morpheme embeddings.

On DATA-S it is clear from Figure 4 that the character-level models outperform their word-level counterparts despite being smaller. The character models also outperform their morphological counterparts (both MLBL and LSTM architectures).

Discussion

Learned Word Representations

In Figure 5, before the highway layers, the nearest neighbors of you are your, young, four, youth, which are close to you in terms of edit distance. The highway layers, however, seem to enable encoding of semantic features that are not derivable from edit distance alone. After highway layers, the nearest neighbor of you is we, which is orthographically distinct from you. The model also makes some clear mistakes (e.g. his and hhs), which is a drawback of this approach. The authors hypothesize that highway networks are especially well-suited to work with CNNs, adaptively combining local features detected by the individual filters.

Learned Character N-gram Representations

Each filter of the CharCNN is essentially learning to detect particular character n-grams. The initial expectation would be that each filter would learn to activate on different morphemes and then build up semantic representations of words from the identified morphemes. However, upon reviewing the character n-grams picked up by the filters, the authors found that they did not (in general) correspond to valid morphemes. The learned representations of all character n-grams are plotted via principal components analysis. Each character n-gram is fed into the CharCNN and the CharCNN’s output is used as the fixed dimensional representation for the corresponding character n-gram. From Figure 6, the model learns to differentiate between prefixes (red), suffixes (blue), and others (grey). They also find that the representations are particularly sensitive to character n-grams containing hyphens (orange).

Conclusion

- The work introduces a neural language model that utilizes only character-level inputs. Predictions are still made at the word-level. Despite having fewer parameters, the model outperforms baseline models that utilize word/morpheme embeddings in the input layer. The work questions the necessity of word embeddings as inputs for neural language modeling.

- Analysis of word representations obtained from the character composition part of the model further indicates that the model is able to encode, from characters only, rich semantic and orthographic features.

- The model requires additional convolution operations over characters and is thus slower than a comparable word-level model, which can perform a simple lookup at the input layer. The extra cost is manageable with optimized GPU implementations.

Paper 2: Character-Level Language Modelling With Hierarchical Recurrent Neural Networks

Introduction

The previous approach considered character-level inputs and word-level outputs. Such models give state-of-the-art performance, but they still output a probability distribution over words and do not completely handle out-of-vocabulary (OOV) instances. Also, word-level embeddings need to be stored in memory to compute the cross-entropy loss for this model. The motivation behind this work is to use characters as both the inputs and the outputs, in order to handle rich morphology better. The difficulty is that Character Level Models (CLMs) have to consider a longer sequence of history tokens to predict the next token than Word Level Models (WLMs), because their tokens are smaller units.

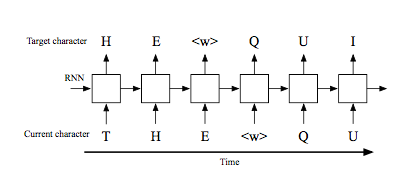

Character-aware word-level language modeling

As shown in Figure 7, the RNN is trained to predict the next character by minimizing the cross-entropy loss of the softmax output, which represents the probability distribution of the next character. One of the most successful approaches to handling character-level inputs is to encode the arbitrary character sequence into a word embedding and feed this vector to a word-level RNN LM. The previously discussed work uses a CNN to generate word embeddings and achieves state-of-the-art results on the English Penn Treebank corpus. Some works also use bidirectional LSTMs [10] instead of CNNs to generate these embeddings. However, in all of these approaches, the LMs still generate the output probabilities at the word level.

The approach in this work is different from the above ones in many ways.

- First, the base model is a character-level RNN LM, instead of a WLM, and is extended to consider long-term contexts. Therefore, the output probabilities are generated with character-level clocks. The authors claim this property is extremely useful for character-level beam search in end-to-end speech recognition.

- In this work, the authors propose hierarchical RNN based LMs that combine the advantageous characteristics of both character-level and word-level LMs. The proposed network consists of a low-level and a high-level RNN. The low-level RNN employs the character-level input and output and provides the short-term embedding to the high-level RNN, which operates as the word-level RNN.

- This hierarchical LM can be extended for processing a longer period of information, such as sentences, topics, or other contexts.

LSTMs With External Clock and Reset Signals

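The LSTM equations previously introduced can be generalized and extended to support clock and reset signals. Writing a generic RNN as $s_t = f(x_t, s_{t-1})$, $y_t = g(s_t)$, with state $s_t$ and output $y_t$, the LSTM is recovered by setting $s_t = \{c_t, h_t\}$ (memory cell and hidden state) and $y_t = h_t$. Any generalized RNN can be converted to one that incorporates an external clock signal, $\kappa_t$ (written $c_t$ in [2]; a different symbol is used here to avoid a clash with the memory cell), as:

$$
s_t = (1 - \kappa_t)\, s_{t-1} + \kappa_t\, f(x_t, s_{t-1}), \qquad y_t = g(s_t)
$$

where $\kappa_t$ is 0 or 1. The RNN updates its state and output only when $\kappa_t$ = 1. Otherwise, when $\kappa_t$ = 0, the state and output values remain the same as those of the previous step. The reset of RNNs is performed by setting $s_{t-1}$ to 0. Specifically the above equation becomes:

$$
s_t = (1 - \kappa_t)(1 - r_t)\, s_{t-1} + \kappa_t\, f(x_t, (1 - r_t)\, s_{t-1})
$$

where the reset signal $r_t$ = 0 or 1. When $r_t$ = 1, the RNN forgets the previous contexts. If the original RNN equations are differentiable, the extended equations with clock and reset signals are also differentiable.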
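The clock and reset behaviour can be sketched as a wrapper around any state-update function. This is a minimal illustration under the description above: the state is a flat list of numbers, and the names `clocked_step`, `clock`, and `reset` are hypothetical.

```python
def clocked_step(f, x, s_prev, clock, reset=0):
    """Wrap a state update f(x, s) with binary clock and reset signals.

    clock == 0: the state is held (after any reset) and f has no effect.
    clock == 1: the state is replaced by f(x, s).
    reset == 1: the previous state is zeroed before anything else happens.
    """
    s_in = [(1 - reset) * v for v in s_prev]  # forget previous context on reset
    s_new = f(x, s_in)
    return [(1 - clock) * old + clock * new for old, new in zip(s_in, s_new)]

# Toy update rule standing in for an RNN cell: add the input to every state entry.
toy_update = lambda x, s: [v + x for v in s]

held = clocked_step(toy_update, 1.0, [2.0], clock=0)               # state unchanged
updated = clocked_step(toy_update, 1.0, [2.0], clock=1)            # normal update
restarted = clocked_step(toy_update, 1.0, [2.0], clock=1, reset=1)  # update from zero
```

Because the gating is plain multiplication by 0 or 1, the wrapper leaves the update rule itself untouched, which is why differentiability of the original equations carries over.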

Hierarchical RNN

The hierarchical RNN (HRNN) architectures proposed in this paper have several RNN modules with different clock rates as depicted in Figure 8.

For character-level language modeling, a two-level ($L = 2$) HRNN is used in the paper by letting $l = 1$ be a character-level module and $l = 2$ be a word-level module. The word-level module is clocked at the word boundary input, `<w>`. The input and softmax output layer is connected to the character-level module, and the current word boundary token (`<w>` or `<eos>`) information is given to the word-level module.

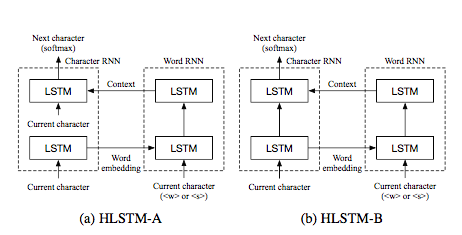

Two types of two-level HRNN CLM architectures are proposed. As shown in Figure 9, both models have two LSTM layers per submodule. In the HLSTM-A architecture, both LSTM layers in the character-level module receive one-hot encoded character input. Hence, the second layer of the character-level module is conditioned by the context vector. In contrast, in HLSTM-B, the second LSTM layer of the character-level module does not receive the character inputs but a word embedding from the first LSTM layer. The authors' experimental results show that HLSTM-B is more efficient for CLM applications. The model is trained to generate a context vector that contains useful information about the probability distribution of the next word.

Evaluation

The models are compared with other WLMs in the literature in terms of word-level perplexity (PPL). The word-level PPL of the models is directly converted from bits-per-character (BPC), which is the standard performance measure for CLMs, as follows:

$$
PPL = 2^{\,BPC \times \frac{N_c}{N_w}}
$$

where $N_c$ and $N_w$ are the number of characters and words in a test set, respectively.
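The conversion from bits-per-character to word-level perplexity is a one-line computation. In the sketch below the function name and the example numbers are hypothetical and are not results from [2].

```python
def word_level_perplexity(bpc, num_chars, num_words):
    """Convert bits-per-character into word-level perplexity.

    The total number of bits spent on the test set is bpc * num_chars;
    dividing by num_words gives bits per word, and 2 to that power is the PPL.
    """
    return 2.0 ** (bpc * num_chars / num_words)

# Example: 1.5 bits per character with an average of 2 characters per word
# (boundaries included) costs 3 bits per word, i.e. a perplexity of 8.
example_ppl = word_level_perplexity(1.5, num_chars=4, num_words=2)
```

The ratio of characters to words matters as much as the BPC itself, so word-level perplexities obtained this way are only comparable across models evaluated on the same test set.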

Wall Street Journal (WSJ) Corpus

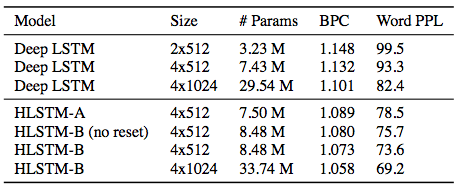

The Wall Street Journal (WSJ) corpus is designed for training and benchmarking automatic speech recognition systems. Figure 10 shows the perplexities of traditional mono-clock deep LSTM and HLSTM based CLMs. The size $N \times M$ means that the network consists of $N$ LSTM layers, where each layer contains $M$ memory cells. The HLSTM models show better perplexities even when the number of LSTM cells or parameters is much smaller than that of the deep LSTM networks. "It is important to reset the character-level modules at the word-level clocks for helping the character-level modules to better concentrate on the short-term information". As observed in Figure 10 and pointed out by the authors, removing the reset functionality of the character-level module of the HLSTM-B model results in degraded performance.

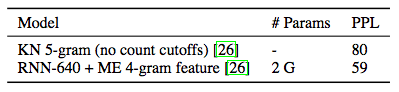

The perplexities of WLMs in the literature are presented in Figure 11. The Kneser-Ney (KN) smoothed 5-gram model (KN-5) is a strong non-neural WLM baseline and all HLSTM models in Figure 11 show better perplexities than KN-5 does.

End-to-end automatic speech recognition (ASR)

The proposed CLMs are applied to the end-to-end automatic speech recognition (ASR). The CLMs are trained with WSJ training data. Unlike WLMs, the proposed CLMs have a very small number of parameters, so they can be employed for real-time character-level beam search.

The results are summarized in Figure 12. The authors observe a strong correlation between the perplexity of the LM and the word error rate (WER). As shown in the table, a better WER can be achieved by replacing the traditional deep LSTM (4x1024) CLM with the proposed HLSTM-B (4x512) CLM, while reducing the number of LM parameters to 30% of the original count.

Conclusion

- In this paper, hierarchical RNN (HRNN) based CLMs are proposed. The HRNN consists of several submodules with different clock rates. Therefore, it is capable of learning long-term dependencies as well as short-term details.

- As shown in the WSJ speech recognition example, the proposed model can be employed for the real-time speech recognition with less than 10 million parameters.

- Also, CLMs can handle OOV words by nature, which is a great advantage for the end-to-end speech recognition and many NLP tasks.

Although the character level language models explored here do a good job of handling rich morphology in languages, diversity in representation is missing. Frequent n-grams in the training set will result in the model overfitting and will produce rigid results. Recently variational frameworks for language modeling have been investigated to explore this issue. Also, the models discussed in this page don't take into account the global sentence context and only operate on the local information. Thus, the variational frameworks coupled with smart attention mechanisms can result in a model that produces diverse representations with global sentence context. In the final page, we will explore such a framework that can generate diverse sentences from global sentence representations. A hierarchical form of the Variational Autoencoder will be studied in an attempt to analyze the effect of hierarchy in the posterior and the prior on representation.

All the pictures on this page are borrowed from [1] and [2].

Annotated Bibliography

- [1] Kim, Y.; Jernite, Y.; Sontag, D.; and Rush, A. M. 2016, "Character-Aware Neural Language Models.", In AAAI, pp. 2741-2749.

- [2] Hwang, K., and Sung, W. 2017, "Character-level language modeling with hierarchical recurrent neural networks.", In Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on (pp. 5720-5724). IEEE.

- [3] Mikolov, T.; Karafiat, M.; Burget, L.; Cernocky, J.; and Khudanpur, S. 2010, "Recurrent Neural Network Based Language Model.", In Proceedings of INTERSPEECH.

- [4] Mikolov, T.; Deoras, A.; Kombrink, S.; Burget, L.; and Cernocky, J. 2011, "Empirical Evaluation and Combination of Advanced Language Modeling Techniques.", In Proceedings of INTERSPEECH.

- [5] Botha, J., and Blunsom, P. 2014, "Compositional Morphology for Word Representations and Language Modelling.", In Proceedings of ICML.

- [6] Hochreiter, S., and Schmidhuber, J. 1997, "Long Short-Term Memory.", Neural Computation 9:1735–1780.

- [7] Sundermeyer, M.; Schluter, R.; and Ney, H. 2012, "LSTM Neural Networks for Language Modeling."

- [8] Srivastava, R. K.; Greff, K.; and Schmidhuber, J. 2015, "Training Very Deep Networks."

- [9] Marcus, M. P.; Marcinkiewicz, M. A.; and Santorini, B. 1993, "Building a large annotated corpus of English: The Penn Treebank.", Computational Linguistics 19(2):313-330.

- [10] Miyamoto, Y., and Cho, K. 2016, "Gated word-character recurrent language model.", Conference on Empirical Methods in Natural Language Processing, pp. 1992–1997.

Further Reading

To add
