Course:CPSC522/Adaptive Network Routing using ACO

Adaptive Network Routing using ACO

In this page we discuss the application and analysis of Ant Colony Optimization (ACO) in building an efficient network protocol.

Principal Author: Kumseok Jung

Collaborators:

Abstract

Ant Colony Optimization (ACO) is a metaheuristic for finding solutions to combinatorial optimization problems. Inspired by the behaviour of ants, it uses deliberative mobile agents to assess different parts of the problem space, and applies the concept of stigmergy - an indirect and asynchronous way of exchanging information through the environment - to propagate feedback signals that iteratively correct the current solution until it converges towards the optimal solution. Finding the optimal path in a communication network is a non-trivial multi-objective optimization problem in a dynamic environment. AntNet applies ACO to implement a routing protocol for datagram networks with irregular topology. Experiments indicate that it outperforms established protocols such as OSPF (Open Shortest Path First), which is currently used in the Internet, and that the paths it finds are comparable to those computed by Dijkstra's shortest path algorithm, which serves as the theoretical optimum.

Papers discussed

- AntNet: Distributed stigmergetic control for communications networks[1] discusses the implementation of AntNet, published by Di Caro and Dorigo in 1998.

- Performance analysis of the AntNet algorithm[2], published in 2007 by Dhillon and Van Mieghem, provides a comprehensive analysis of the AntNet algorithm.

Builds on

- Routing Protocol specifies how routers communicate with each other, distributing information that enables them to select routes between any two nodes on a computer network.

- Dijkstra's Shortest Path Algorithm is an algorithm for finding the shortest paths between nodes in a graph.

- Metaheuristic is a higher-level procedure or heuristic designed to find, generate, or select a heuristic (partial search algorithm) that may provide a sufficiently good solution to an optimization problem, especially with incomplete or imperfect information or limited computation capacity.[3]

- Swarm Intelligence is a form of collective intelligence that emerges as a result of a group of natural or artificial agents interacting with each other and the environment.

- Ant Colony Optimization is a population-based metaheuristic that can be used to find approximate solutions to difficult optimization problems.[4]

- Combinatorial Optimization is a topic that consists of finding an optimal object from a finite set of objects.[5]

- Multi-agent Systems is an area of artificial intelligence concerned with the study of multiple autonomous agents acting in an environment.

- Monte Carlo methods are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results.

- Temporal Difference Learning is an approach to learning how to predict a quantity that depends on future values of a given signal.

- Reinforcement Learning is learning by interacting with an environment.

Content

Motivation

In a computer network, not all devices have direct physical links to each other, so communication between any two devices is established by a series of routers forwarding data packets from the source node to the destination node. Routing involves each device maintaining a table of neighbouring nodes and the relevant statistics, so that an incoming packet can be strategically forwarded to an outgoing link selected from the table. A routing protocol describes the structure of the routing table, the rules for updating the table, and a policy for selecting a node for a given packet. The goal of a good routing protocol is to maximize the overall network performance and to minimize the cost involved; in other words, it must maximize the throughput and minimize the latency.

"the effect of good routing is to increase throughput for the same value of average delay per packet under high offered load conditions and to decrease average delay per packet under low and moderate offered load conditions." -Bertsekas & Gallager, 1992

The problem of routing is difficult because it is intrinsically distributed. A change in the system state, say a node failure, cannot be globally observed immediately, and thus it is impossible to have an up-to-date and complete model of the distributed (global) state. Additionally, although there is certainly a global optimal policy, there is no global decision system. Each node in the network has to make routing decisions based solely on its local policy, using delayed and incomplete information about the global state. It can only be hoped that the policies employed locally by individual nodes add up to the global optimal policy. Another aspect that makes the problem difficult is that the problem itself is stochastic and non-stationary. The theoretical optimal policy varies with time and depends on the traffic status; even if a complete model were available, techniques like dynamic programming could not be used. Furthermore, the routing problem involves optimizing multiple conflicting and intertwined aspects of the system - an example being the previously mentioned throughput and latency - while considering multiple constraints imposed by the underlying network infrastructure and the demands of the service users. Besides the algorithmic complexity, at the implementation level the solution needs to be fault-tolerant and reliable, remaining robust against random node failures.

Approach

Taxonomy of Routing Algorithms

At a higher level, routing algorithms can be characterized by the following properties:

- Centralized vs Distributed

- Static vs Adaptive

In most cases, centralized algorithms are not fault-tolerant and are impractical due to the delays in gathering information about the network and communicating the routing decisions across the network. Static algorithms compute routes based only on the source and destination, and do not take into account the current network state, such as the number of packets queued on the outgoing links. For real-world applications, it makes more practical sense to use distributed adaptive routing algorithms, which can adapt to varying spatial and temporal traffic conditions. The rest of this page focuses on adaptive and distributed solutions.

One of the issues that arise from using an adaptive algorithm is the problem of oscillating paths, where a constantly changing forwarding policy causes a packet to travel in a cycle.

From an optimization perspective, the routing paradigms can be divided into the following:

- Minimal routing vs Non-minimal routing

- Optimal routing vs Shortest path routing

Minimal routing algorithms only select paths with minimal cost, while non-minimal routers choose from all the available paths using a heuristic. Optimal routing is an approach focused on optimizing a certain function over all the individual links of the network and requires knowledge of the traffic patterns across the whole system. For instance, minimizing the sum of all the link costs is an optimal routing approach. In contrast, shortest path routing is concerned with minimizing the communication overhead between two nodes based on the link states, without considering a global cost function and without a priori knowledge of the traffic status. This makes shortest path routing more flexible to system changes, and it is therefore the most widely used approach, for example in the Internet.

ARPANET, the ancestor of the Internet, first used a shortest path routing algorithm called Bellman-Ford in 1969, which belongs to the class of distance-vector algorithms based on the principles of dynamic programming. In Bellman-Ford, each router keeps a routing table of 3-tuples of the form (Destination, Estimated Distance, Next Hop), defined for all the nodes in the network. Asynchronously and iteratively, each node exchanges its current estimated distances to the other nodes in the network with its neighbours. Each node updates the entries in its routing table corresponding to the node that sent the new values. The process converges in finite time, but it introduces circular paths and was therefore replaced in 1980 by the Shortest Path First (SPF) algorithm, which is classified as a link-state routing algorithm. The current version in use, Open Shortest Path First (OSPF), improves upon its predecessor and was introduced in 1994. Link-state algorithms keep routing tables much larger than those in distance-vector algorithms, each table essentially representing a dynamic map of the complete network, and involve flooding the network with the local state of each node to keep the information synchronized. OSPF requires static management of the topology and delegates congestion control to an external system; it is merely a "best compromise" among efficiency, stability, and robustness[7]. This brief history of routing algorithms emphasizes the difficulty of implementing a flexible and robust system, and it is worth considering other approaches to tackling this problem.

AntNet

Di Caro and Dorigo introduced a novel routing protocol named AntNet[1], based on the principles of Ant Colony Optimization (ACO). It attempts to solve the routing problem by taking a multi-agent systems approach, in which multiple mobile agents concurrently and in parallel perform an on-line Monte Carlo (MC) simulation. However, there are some key differences that distinguish the AntNet algorithm from classical Monte Carlo:

- The simulation is "on-line", where the model is updated during the execution. This makes it also similar to Temporal Difference learning.

- The updated model is shared asynchronously with other random MC instances (ants) operating in the vicinity, but not with all the instances.

- A single MC instance solves only its local optimal solution and cannot solve the global optimal solution. The global optimal of the network emerges as a result of individual MC instances concurrently and stochastically discovering partial solutions.

Some parallels with Reinforcement Learning (RL) can also be drawn, since the routing problem can be interpreted as a stochastic time-varying RL problem[1]. In AntNet, each router receives reinforcement signals from the incoming ants to iteratively update its internal model of the world and improve its routing policy towards a local optimum. However, unlike classical RL problems, the routing problem is non-stationary and non-Markovian, making the usual RL approaches unsuitable.

The following is a higher level description of the algorithm:

- Each node in the network concurrently sends out mobile agents towards a randomly selected destination.

- A single mobile agent, called an ant, behaves differently depending on its direction of travel. When going forward, it is called a forward ant, and it travels in the same traffic as the data packets. In contrast, backward ants travel with priority over the regular traffic.

- The mobile agents act independently and communicate with each other indirectly by reading and writing information in the network nodes.

- Each agent seeks to travel along the shortest path between the source and the destination node.

- During the trip going forward, each ant collects information about time taken to reach an intermediate node and the congestion status.

- At each node, an ant chooses the next hop based on information locally available in the node and its own private information.

- After arriving at the destination, the forward ant transfers its accumulated knowledge to the backward ant, and the backward ant begins its trip along the same route going in the opposite direction towards the source node.

- During the backward travel, the backward ant updates the routing table in each node based on the information collected during the forward trip.

- After the backward ant reaches the source node, it is destroyed.

The technical details of the algorithm are further discussed in the following sections.

Model of a network node

In a network consisting of nodes, each node maintains a routing table . The routing table stores probabilities describing the current routing policy at node . Each entry in the table indicates the likelihood of selecting neighbour as the next node given the destination node , where:

The raw value is not used as-is for path selection, as there are some heuristic factors to be considered that are described in the following sections. Data packets use the same routing table for selecting the next link, but use a power function that amplifies the high probability values and suppresses the low probability values, because data packets only need to exploit the information and should not explore.
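The effect of the power function on data packet forwarding can be illustrated with a minimal Python sketch. The function name `data_packet_probabilities`, the `power` parameter, and the example row are hypothetical; the sketch only assumes that one row of the routing table is raised element-wise to a power and renormalized, which amplifies high probabilities and suppresses low ones.

```python
def data_packet_probabilities(row, power):
    """Sharpen one routing-table row (neighbour -> probability) for data packets.

    Assumption: each entry is raised to `power` and the row is renormalized.
    A power of 1 leaves the row unchanged; larger powers favour the best links.
    """
    powered = {neighbour: p ** power for neighbour, p in row.items()}
    total = sum(powered.values())
    return {neighbour: value / total for neighbour, value in powered.items()}


# Hypothetical row of the routing table for one destination.
row = {"B": 0.6, "C": 0.3, "D": 0.1}
sharp = data_packet_probabilities(row, power=2.0)
```

With a power greater than 1, the entry for the strongest neighbour grows and the weakest shrinks, while the row still sums to one.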

Each node also maintains another table of statistics about the current locally-observed traffic. More precisely, stores the mean travel time from current node to the destination node , the variance , and an array storing the travel times for the last trips, used to compute the best travel time observed during the moving observation window. The mean travel time provides an estimate of the expected time to reach the destination, and the variance indicates the reliability of this estimate. Whenever there is new data available - i.e. travel time experienced by a newly arriving backward ant - the array is updated with the following (the index will be omitted as all updates are local to node ):

- where is the newly observed trip time of the agent going from node to destination .

The term is analogous to the constant in the Temporal Difference formula for computing the running average over samples. The moving observation window is updated by shifting it and pushing the new trip time and the best trip time is recomputed with . The moving observation window serves as a short-term memory providing a recent empirical lower bound of the estimated travel time to the destination.
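A minimal Python sketch of such a per-destination statistics entry is given below. The class name `TrafficStats` and the parameter names `eta` and `window_size` are hypothetical, and the update rule is assumed to be an exponential running estimate of the mean and variance; it is an illustration under those assumptions rather than the exact formula used in AntNet.

```python
from collections import deque


class TrafficStats:
    """Running statistics of trip times towards one destination (illustrative)."""

    def __init__(self, eta, window_size):
        self.eta = eta                           # weight given to each new sample
        self.mean = 0.0
        self.var = 0.0
        self.window = deque(maxlen=window_size)  # moving observation window

    def update(self, trip_time):
        if not self.window:
            # Assumption: the first sample initializes the mean directly.
            self.mean = trip_time
        else:
            err = trip_time - self.mean
            self.mean += self.eta * err
            self.var += self.eta * (err * err - self.var)
        # A full deque drops its oldest entry automatically.
        self.window.append(trip_time)

    @property
    def best(self):
        """Best (smallest) trip time inside the current window."""
        return min(self.window)


stats = TrafficStats(eta=0.5, window_size=3)
for t in [10.0, 20.0, 30.0, 5.0]:
    stats.update(t)
```

After the four updates the window holds only the three most recent trip times, so the best value reflects recent conditions rather than the whole history.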

The table provides an estimate of the travel time to different nodes, and table describes the "goodness" of each link for a given destination.

Model of a mobile agent

Given the model of the nodes, each node generates a forward ant at regular intervals , travelling from the current (source) node to destination node . In order for forward ants to experience the real traffic load, they are pushed into the same queue as the outgoing data packets. Since the queue is FIFO (first in, first out), if there are data packets waiting to be sent, the forward ant's departure is delayed in proportion to the size of the pending queue; this allows information about the congestion to be carried implicitly by the mobile agents. The destination node is selected probabilistically according to the locally observed traffic. The probability of selecting destination is proportional to the traffic towards node , such that:

- where is some measure (e.g. size of the queue) of the data from node to .
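Traffic-proportional destination selection can be sketched in Python as follows. The function names and the `traffic` mapping (destination to some traffic measure, such as queued bits) are hypothetical, and the uniform fallback for a node with no observed traffic is an assumption added for the sketch.

```python
import random


def destination_probabilities(traffic):
    """Map each destination to a probability proportional to its traffic measure."""
    total = sum(traffic.values())
    if total == 0:
        # Assumption: with no observed traffic, all destinations are equally likely.
        return {dest: 1.0 / len(traffic) for dest in traffic}
    return {dest: amount / total for dest, amount in traffic.items()}


def pick_destination(traffic, rng=random):
    """Draw one destination for a new forward ant."""
    probs = destination_probabilities(traffic)
    dests = list(probs)
    return rng.choices(dests, weights=[probs[d] for d in dests], k=1)[0]


# Hypothetical traffic observed at a source node, per destination.
traffic = {"B": 30, "C": 10}
probs = destination_probabilities(traffic)
chosen = pick_destination(traffic, rng=random.Random(0))
```

Destinations that receive more data from the node are probed by more ants, so the routing information is refreshed fastest where it matters most.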

During its trip, an ant maintains a record of the traversed path, storing the identifier of a visited node and the time taken to reach that node since its departure from node . This information is stored in a stack and is part of the network packet representing the ant. Therefore the size of the ant increases linearly with the number of hops, but this increase has minimal effect on the performance of the algorithm.

For each node a forward ant visits along its route, it selects the next node among the neighbours it did not already visit. It picks a node based on a corrected probability computed as a function of the raw probability stored in the routing table and the state of the queue at the outgoing link to node . The new probability takes into account a heuristic correction factor that reflects the relative size of the queue of the outgoing link to node :

The heuristic correction factor indicates how congested the outgoing link is, and since is inversely proportional to the size of the queue, the corrected probability becomes smaller than the raw probability if the corresponding outgoing link is relatively congested. This allows packets to take alternative routes in the case the theoretical best path has too much traffic. The constant determines the importance of the correction factor , and experiments show that setting the value of between the ranges 0.2 and 0.5 yields good results. Setting higher values of leads to oscillatory behaviour in the route selection, as the decision becomes too sensitive to the traffic.
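The correction can be sketched in Python as below. The sketch assumes the form used in the AntNet paper [1], in which the correction factor of a link is one minus its share of the total queued load and the corrected value is normalized so that the row still sums to one; the names `corrected_probabilities`, `raw`, `queue_len`, and `alpha` are hypothetical, and the filtering of already visited neighbours is omitted for brevity.

```python
def corrected_probabilities(raw, queue_len, alpha):
    """Blend routing-table probabilities with a queue-based correction factor.

    raw:       neighbour -> raw probability from the routing table (sums to 1)
    queue_len: neighbour -> current queue length of the outgoing link
    alpha:     weight of the correction factor
    """
    n = len(raw)
    total_queue = sum(queue_len.values())
    corrected = {}
    for neighbour, p in raw.items():
        # Assumption: with all queues empty, every link gets the same share.
        share = queue_len[neighbour] / total_queue if total_queue > 0 else 1.0 / n
        correction = 1.0 - share  # small when the link is relatively congested
        corrected[neighbour] = (p + alpha * correction) / (1.0 + alpha * (n - 1))
    return corrected


# Two equally good links, one of which is heavily queued.
raw = {"B": 0.5, "C": 0.5}
queues = {"B": 9, "C": 1}
adjusted = corrected_probabilities(raw, queues, alpha=0.3)
```

In this example the congested link "B" ends up with a lower probability than the idle link "C", even though the routing table rates them equally.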

If a forward ant at node has already visited all the neighbours of , it has run into a circular path. In this case, records of the nodes in the cycle are popped from the ant's stack . If the duration of the cycle is longer than the time taken to reach the cycle (elapsed time before the ant entered the circular path), the ant is destroyed.
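The cycle handling can be sketched in Python as follows. The representation of the ant's memory as a list of `(node, elapsed_time)` pairs and the function name `remove_cycle` are assumptions made for illustration; the function must be called with a node that is already on the stack.

```python
def remove_cycle(stack, node, now):
    """Drop a detected cycle from a forward ant's memory.

    stack: list of (node_id, elapsed_time) pairs in visiting order
    node:  the node the ant has just returned to (must be on the stack)
    now:   elapsed time since departure at the moment of the revisit
    Returns (trimmed_stack, alive).
    """
    first_visit = next(i for i, (visited, _) in enumerate(stack) if visited == node)
    time_before_cycle = stack[first_visit][1]
    cycle_duration = now - time_before_cycle
    trimmed = stack[: first_visit + 1]  # forget every node inside the cycle
    # The ant is destroyed if the cycle lasted longer than the trip before it.
    alive = cycle_duration <= time_before_cycle
    return trimmed, alive


path = [("A", 0.0), ("B", 2.0), ("C", 3.0), ("D", 4.0)]
short_cycle = remove_cycle(path, "B", now=3.5)
long_cycle = remove_cycle(path, "B", now=5.0)
```

Here the ant that returns to "B" after 1.5 time units survives with its memory trimmed back to "B", while the one that needed 3.0 time units is discarded.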

When a forward ant reaches the destination, it is converted to a backward ant , retaining the memory stack (in the original paper, it is said that the forward ant transfers its memory to a new backward ant and dies[1]). The backward ant travels along the same path it (forward ant) came from, towards the source node . It traces the path by popping the stack at each node , and travels through a priority queue at the outgoing link. The backward ants reserve this privilege so that fresh information about the route can be propagated quickly.

Backward ants implement the concept of stigmergy, an indirect way of communicating with other ants by modifying the environment. In AntNet, at each node along the path the backward ants update the values in the table with new observations about the network status and modify the routing table accordingly; this is analogous to ants leaving pheromone traces. The new information given by the backward ants acts as a reinforcement signal that pushes the routing policy towards a local optimum.

In the tables and , all the entries corresponding to the destination node are updated. Additionally, the entries belonging to nodes in between node and destination - i.e. node - are updated, provided that the trip times for these "sub-paths" are statistically "good". Statistically "good" means that the elapsed time is less than , the sum of the mean trip time and the confidence interval . The nodes between node and destination are potential destinations for other ants, and therefore updating these "sub-paths" provides information for other source-destination pairs at zero cost.

The mean trip time , variance , and best trip time during observation window are updated in table as described above, using information stored in the ant's memory stack . Given that the backward ant just arrived from node , the routing table is updated by incrementing the probability - i.e. the probability of choosing node as the next node when destination is - by an amount proportional to a "goodness" measure of the trip time . The other probabilities are decremented proportionally as well.

Observed trip time is the only explicit feedback signal used to evaluate the "goodness" measure , since it implicitly captures information about the physical properties of the path such as transmission capacity of the links, processing speed of the nodes, number of hops; as well as dynamic information about the system like the congestion level. Evaluating the "goodness" of trip time is not a trivial task, since there is no objective "optimal" trip time to compare against. An apparently poor trip time under low traffic could actually be a good one under heavy traffic, so we cannot compute some kind of an error signal like we do in back-propagation (in neural nets). The "goodness" measure can only be used as a reinforcement signal like a "reward" in the context of Reinforcement Learning. is a dimensionless value and is critical to the AntNet algorithm and will be discussed in more detail below.

The first challenge in determining the reinforcement signal for the path from node to node is the credit assignment problem typically seen in RL. The only available metric is the elapsed time from node to the destination node and we cannot know how much the sub-path between node and contributed to the elapsed time. The second challenge is finding the right balance between adaptivity and stability. As mentioned in the previous paragraph, the "goodness" is relative to the current traffic conditions, so the reinforcement signal should be able to account for the network status. At the same time, it should not be overly sensitive to the fluctuations in traffic, as this can lead to oscillating routes. In addition, an important insight is gained by considering a simple, constant reinforcement signal. In this case, all paths traversed by the ants are rewarded the same amount, independent of each ant's observed statistics. There is an implicit reinforcement mechanism at work, as faster links are traversed more often and accumulate rewards at a higher rate. The obvious drawback in this case is that bad paths are rewarded too. The authors' experiments show that even with a constant reinforcement signal the network performs moderately well, suggesting that this implicit reinforcement is an important component in the AntNet algorithm. To take into account the information collected by the ants, the feedback signal is computed as a function of the entries in the table and is given by the following:

First we compute a quantity using the equation (obvious indices are omitted for brevity)

- where .

is the best trip time observed in the moving window as previously mentioned, and is an approximation of the upper bound of the confidence interval of the average trip time with specified confidence level . is the cardinality of the observation window . Using only the upper bound of the confidence interval introduces some kind of asymmetry and inaccuracy in this equation, but the authors claim that it is practical enough considering the extra CPU consumption that would be spent on computing an exact quantity. The first term in the above equation is the ratio between the best trip time and the currently observed trip time. It represents how "good" the current record is relative to the best record seen recently and it is more important than the latter term. The second term is a correction term, evaluating how different the observed trip time is from the average time, taking into account the stability of the value of the average time. In the presented implementation of AntNet, and . Experiments show that setting yields good results.
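The two-term structure described above can be sketched in Python as below. The sketch assumes the form given in the AntNet paper [1]: a first term equal to the ratio of the best trip time to the observed trip time, and a second term built from an approximate confidence interval whose lower bound is the best trip time. The names `raw_reinforcement`, `c1`, `c2`, and `gamma` are hypothetical, and the weights are left as required arguments rather than given default values.

```python
import math


def raw_reinforcement(trip_time, best, mean, var, window_len, c1, c2, gamma):
    """Raw "goodness" of an observed trip time, before squashing (illustrative).

    best, mean, var: window best, running mean and running variance of trip times
    window_len:      number of samples in the observation window
    c1, c2:          weights of the two terms
    gamma:           confidence level, 0 <= gamma < 1
    """
    z = 1.0 / math.sqrt(1.0 - gamma)
    i_inf = best                                           # lower bound
    i_sup = mean + z * math.sqrt(var / window_len)         # approximate upper bound
    first = best / trip_time
    denominator = (i_sup - i_inf) + (trip_time - i_inf)
    # Assumption: a degenerate interval contributes nothing to the signal.
    second = (i_sup - i_inf) / denominator if denominator > 0 else 0.0
    return c1 * first + c2 * second


# Hypothetical statistics: best 8, mean 10, variance 4, window of 4 samples.
as_good_as_best = raw_reinforcement(8.0, 8.0, 10.0, 4.0, 4, c1=0.7, c2=0.3, gamma=0.75)
twice_as_slow = raw_reinforcement(16.0, 8.0, 10.0, 4.0, 4, c1=0.7, c2=0.3, gamma=0.75)
```

A trip that matches the best recent time receives the maximum value, and the value drops as the observed trip time moves away from the best time and from the stable range of the mean.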

The quantity is then transformed into by using a squash function :

Using the squashing function has an effect of amplifying the high values of while saturating low values of , so that "rewards" play a bigger role than "punishments". Using the final value , the routing table is updated as previously mentioned:
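The squashing and the routing table update can be sketched in Python as follows. The sketch assumes the sigmoid-like squash function of the AntNet paper [1], normalized so that an input of 1 maps to 1, and an update that moves probability mass from the other neighbours to the neighbour the backward ant arrived from. The names `squash`, `reinforce`, `a`, and `n_neighbours` are hypothetical.

```python
import math


def _s(x, a, n_neighbours):
    """Unnormalized squash value for x in (0, 1]."""
    exponent = a / (x * n_neighbours)
    if exponent > 700:  # math.exp would overflow; the result is effectively 0
        return 0.0
    return 1.0 / (1.0 + math.exp(exponent))


def squash(r, a, n_neighbours):
    """Squash a raw reinforcement r, scaled so that squash(1) == 1."""
    if r <= 0:
        return 0.0
    return _s(r, a, n_neighbours) / _s(1.0, a, n_neighbours)


def reinforce(row, came_from, r):
    """Return a new routing-table row after a backward ant arrives via came_from."""
    updated = {}
    for neighbour, p in row.items():
        if neighbour == came_from:
            updated[neighbour] = p + r * (1.0 - p)  # reward the used link
        else:
            updated[neighbour] = p - r * p          # decay the alternatives
    return updated


row = {"B": 0.2, "C": 0.5, "D": 0.3}
new_row = reinforce(row, "B", r=0.5)
```

Because the increment on the rewarded link equals the total amount removed from the other links, the row remains a probability distribution after every update.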

The authors' experiments indicate that the algorithm is robust to its internal parameter settings and that AntNet performs well as long as the parameters are set to "reasonable" values.

Analysis

Dhillon and Van Mieghem have produced a comprehensive analysis of the AntNet algorithm[2], empirically comparing its performance against existing state-of-the-art routing protocols such as OSPF and against Dijkstra's shortest path algorithm, a theoretical optimum. The study shows that AntNet's performance is comparable to Dijkstra's algorithm and is robust under varying traffic loads.

Computational Complexity

To calculate the complexity of the AntNet algorithm, the complexity of a single ant is first considered. At every node along its path, it needs to compare its memory of the path traversed so far with the neighbours of the node to determine if it has visited some of the nodes before; this is done in . After this comparison, it needs to look through the link values in the routing table to select the best neighbour to move to. Assuming a fully connected network where each node has neighbours, this takes . Then the ant updates its memory with the identifier of the node, taking and then is pushed to the outgoing link queue in . With being the maximum hop count of a forward ant, the ant has to perform the described computation at each of the nodes. On its way back, at each node the backward ant pops its stack, taking , and is pushed to the queue in . Updating the routing table requires computing a value for every neighbour of the node, which takes . This operation is also done for every hop, and thus the backward ant also has complexity of . Therefore the complexity of a single ant is . For the entire system, given that there are ants generated, the worst-case complexity of the AntNet algorithm is . Given that and are relatively small, this is comparable to the worst-case complexity of Dijkstra's shortest path algorithm using a Fibonacci heap, which is .

Experiments

The authors evaluate the AntNet algorithm under static and dynamic network conditions by comparing its performance against the solution found by Dijkstra's shortest path algorithm. The term static here does not have the same connotation as in static analysis; it means that the traffic status and varying size of the packets are not taken into account. More precisely, in the static evaluation it is assumed that the forward ants are only affected by the propagation delays between the links - i.e. time taken for a single bit to travel from one node to another - while queueing delays, transmission delays, and link capacity are ignored. This reduces the node selection probability to the raw values in the routing table . In the dynamic evaluation, all the factors used in the original implementation of AntNet are taken into account. It is important to note that while Dijkstra's algorithm can easily be compared with the static version of AntNet, it is trickier to compare against the dynamic AntNet. The solution given by Dijkstra's algorithm is the sum of all the link weights (propagation delays) along the path, whereas the solution given by dynamic AntNet includes the transmission and queueing delays. To make a fair comparison between the two, transmission and queueing delays are excluded from the solution found by AntNet and only link weights are considered.

The experiments are carried out in a simulated environment, on random graphs with nodes and link density . In each simulation, to graphs are assessed. The upper bound for the average number of paths between a pair of nodes in graph is given by: The propagation delays for the links are set uniformly randomly between and link capacity is set to 8.192 Mbit/s. Each simulation runs for and consists of a training period (TP) of during which only ant packets are generated, and a test period (TEP) of during which both ant and data packets are generated. Data packets are generated from nodes selected by a Poisson process with a mean interarrival time of 12.5ms, headed towards a randomly selected destination. The sizes of the data packets are also randomly set, following a negative exponential distribution with a mean value of 4096 bits. The size of a forward ant is initialized at 192 bits and grows by 64 bits per hop, while backward ants are assumed to be 500 bits. The default value of in the squash function is set to . For the comparison between AntNet and Dijkstra's algorithm, source node and destination are considered. While there is just one shortest path given by Dijkstra's algorithm, in AntNet the data packets travel probabilistically via different routes between a given source node and a destination node. Accordingly, the average end-to-end delay and hop count of the paths taken by the data packets over the whole simulation are used for the evaluation. The effects of changing other configuration parameters such as , are studied in various simulations in the experiment.
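The traffic model of this setup can be sketched in Python as below. The function names are hypothetical, and the sketch only covers the parts with stated values: exponentially distributed interarrival times with a mean of 12.5ms (as produced by a Poisson process), exponentially distributed packet sizes with a mean of 4096 bits, and the forward ant size of 192 bits plus 64 bits per hop. Rounding sizes to at least one bit is an assumption of the sketch.

```python
import random


def generate_packets(duration_ms, mean_interarrival_ms=12.5, mean_size_bits=4096, seed=0):
    """Return a list of (arrival_time_ms, size_bits) for one traffic source."""
    rng = random.Random(seed)
    now = 0.0
    packets = []
    while True:
        # expovariate takes the rate, i.e. one over the mean.
        now += rng.expovariate(1.0 / mean_interarrival_ms)
        if now >= duration_ms:
            break
        size = max(1, round(rng.expovariate(1.0 / mean_size_bits)))
        packets.append((now, size))
    return packets


def forward_ant_size_bits(hops):
    """Size of a forward ant after a given number of hops."""
    return 192 + 64 * hops


packets = generate_packets(duration_ms=1000.0)
```

Fixing the seed makes a simulated run repeatable, which helps when comparing parameter settings on the same traffic trace.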

Static AntNet

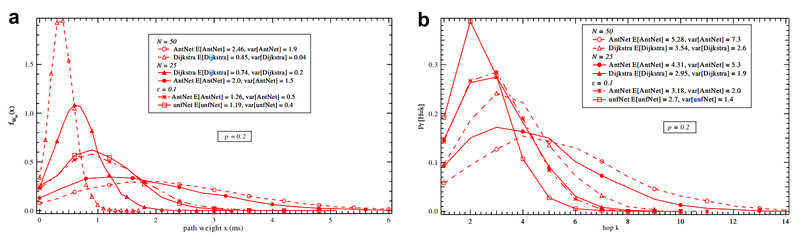

For evaluating AntNet under static conditions - i.e. without considering traffic status - the algorithm is modified to use policy for a forward ant choosing the next node. This is because the correction term in vanishes, preventing the ants from exploring the network. In this simulation the ant generation interval is set to 4ms during TP (Training Period) and 40ms during TEP (Test Period), , , and link density . The simulation is carried out for network sizes of and , each simulation consisting of iterations of random graphs of type . The effect of is also observed by setting it to and . A special case of uniform AntNet, referred to as unfNet for the rest of the discussion, is also considered, where (only explore).

Static implementation of AntNet is shown to converge to a good solution, performing better when . In fact, unfNet is shown to perform better than regular AntNet as all paths are explored with equal probabilities.

Dynamic AntNet

In the evaluation of dynamic implementation of AntNet, effects of different parameters are studied, such as ant generation rate, squash function, observation window, and the power function used in the forwarding policy of data packets.

Effect of ant generation interval and link density

In the first analysis, three different values of ant generation interval are used: 40ms, 4ms, and 0.4ms. These values are applied only during TP, and the generation interval is fixed at 40ms during TEP. Two different values of link density are used: 0.1 and 0.2.

The results indicate that AntNet yields a near-optimal solution at low values of , but performance degrades as is increased. Performance is slightly worse in the larger network with compared to the network with . In regards to the ant generation rate, AntNet converges to a good solution even at low ant generation rates - i.e. high generation interval . When , although only 25 forward ants are sent out per node during TP, the algorithm finds a good solution. Performance improves as the ant generation rate is increased, but no difference is observed between and . This can be attributed to the correlation between the size of the observation window and the ant generation rate. With , the observation window is not large enough to capture the effect of changing to . Additional simulations with and confirm this idea. At low ant generation rates, unfNet performs worse than AntNet since there are not enough ants to discover the shortest path in the given time. At higher ant generation rates, AntNet and unfNet perform comparably.

Effect of moving observation window and

It is worth reiterating that the parameter used in computing the running average of the trip time and the size of the moving observation window are conceptually coupled. The original AntNet uses the relation . It is hard to determine the optimal size of the moving observation window since its effect is correlated with the ant generation interval. Intuitively, it would make sense to keep an observation window large enough to store diverse samples of the paths, but it cannot be guaranteed that different ants would be exploring distinct and unique paths within that window. Results show that increasing the size of the observation window with a fixed improves the performance slightly. At light traffic loads, does not influence the performance much, as the mean converges quickly. would play a more significant role when there are fluctuations in the traffic loads. In order to observe the combined effect of , , and , an additional simulation is performed with extreme values of the parameters. Ant generation interval is set to 0.4ms during TP and 4ms during TEP, , and . The size of the observation window is large enough to store the trip times of all the forward ants generated by the node as well as other incoming ants.

Effect of confidence interval, squash function, and power function

The confidence interval of the mean trip time and the squash function are used in the calculation of the reinforcement signal . The confidence interval term is mainly used to determine the reliability of the mean trip time when comparing it with the newly observed trip time. The simulations show that this term has very little effect on the performance of AntNet and can even be removed to simplify the AntNet algorithm. Using larger values of in the squash function is shown to improve performance, demonstrating the effect of amplifying the reinforcement signals. Finally, the power function is used to determine the routes for the data packets. Setting implies that the routing decision is dictated by the values in the routing table, leading to data packets taking different sub-optimal paths with a non-zero probability. Setting a very large value for causes the data packets to always travel through a single best path as seen locally by the node. Results show that AntNet performs better with indicating that the shortest path was correctly discovered.

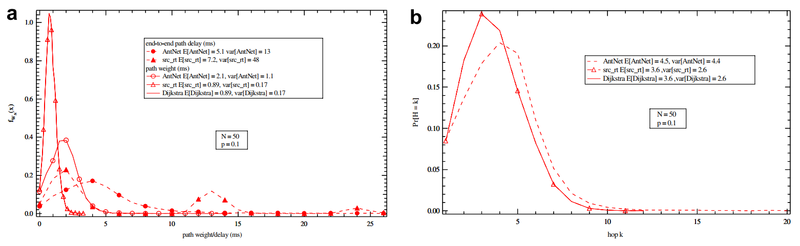
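The effect of the squash function and the power function discussed in this section can be illustrated with a short sketch. The squash form s(x) = 1 / (1 + exp(a / (x·n))), rescaled by s(1), is written here from the original AntNet description as best recalled and should be treated as an assumption; the function names, the parameter name `a`, and the example routing-table row are hypothetical.

```python
import math


def squash(x, a, num_neighbours):
    """Assumed squash form: s(x) = 1 / (1 + exp(a / (x * n))), x in (0, 1]."""
    z = a / (x * num_neighbours)
    if z > 700.0:  # exp would overflow; the result is effectively zero
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


def squashed_reinforcement(r, a, num_neighbours):
    """Rescale the squashed value so that r = 1 still maps to 1."""
    return squash(r, a, num_neighbours) / squash(1.0, a, num_neighbours)


def data_routing_probs(table_row, exponent):
    """Raise routing-table probabilities to a power and renormalise."""
    powered = [p ** exponent for p in table_row]
    total = sum(powered)
    return [p / total for p in powered]


row = [0.5, 0.3, 0.2]  # hypothetical routing-table row for one destination
print(data_routing_probs(row, 1))  # unchanged: packets spread over all next hops
print(data_routing_probs(row, 8))  # almost all packets follow the best next hop
print(squashed_reinforcement(0.5, 1.0, 2), squashed_reinforcement(0.5, 5.0, 2))
```

Under this form, a larger `a` pushes mediocre reinforcement values towards zero while leaving the best ones near 1, which is one way to read the "amplifying" effect. Likewise, a larger exponent concentrates data traffic on the locally best next hop.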

Behaviour under varying traffic

To observe the behaviour of AntNet under varying traffic, randomly chosen nodes are congested during each iteration of the simulation by adding a queueing delay of 10ms per packet. Since Dijkstra's algorithm cannot account for traffic, one version of AntNet, "src_rt", is configured to send data packets along the shortest path found by Dijkstra's algorithm. The end-to-end delays measured for "src_rt" and regular AntNet are then compared to see whether the theoretical shortest path is actually the shortest when there are congested nodes along it. Results show that AntNet outperforms "src_rt" in terms of end-to-end delay, indicating that AntNet was able to find alternative routes that avoid the congested nodes. When there are no congested nodes, "src_rt" and AntNet perform similarly.

This load balancing capability of AntNet is demonstrated further by a slightly different experiment. Five randomly chosen nodes are congested 5000ms after the start of the simulation period, and the average packet delay and hop count are computed over a moving window of 250ms and plotted. AntNet's delay is constant before the 5000ms mark and increases suddenly at the point of congestion. The delay then drops quickly in AntNet, showing that it has found an alternative route, whereas "src_rt" suffers from the congestion.
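A toy example shows why a statically computed shortest path can lose once a node is congested. The 4-node topology and its link delays are hypothetical, and the "adaptive" route is found here by rerunning Dijkstra's algorithm with the queueing delay included. That is only a stand-in for the route AntNet would learn through its ants, not the AntNet algorithm itself. The sketch also assumes the target is reachable from the source.

```python
import heapq


def shortest_path(links, source, target, node_delay=None):
    """Dijkstra over link delays (ms); node_delay adds queueing delay on entering a node."""
    node_delay = node_delay or {}
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in links.get(u, {}).items():
            nd = d + w + node_delay.get(v, 0.0)
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]


def path_delay(links, path, node_delay):
    """End-to-end delay of a fixed path under the given queueing delays."""
    return sum(links[u][v] + node_delay.get(v, 0.0) for u, v in zip(path, path[1:]))


# Hypothetical topology; link delays in ms.
links = {
    "A": {"B": 2.0, "C": 3.0},
    "B": {"A": 2.0, "D": 2.0},
    "C": {"A": 3.0, "D": 3.0},
    "D": {"B": 2.0, "C": 3.0},
}
congestion = {"B": 10.0}  # 10ms queueing delay per packet at node B

static_path, _ = shortest_path(links, "A", "D")  # "src_rt"-style route, traffic ignored
static_delay = path_delay(links, static_path, congestion)
adaptive_path, adaptive_delay = shortest_path(links, "A", "D", congestion)
print(static_path, static_delay)
print(adaptive_path, adaptive_delay)
```

In this toy case the static route through B pays the queueing delay, while the congestion-aware route goes through C.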

Discussion

Various simulations were performed to examine the different features of AntNet, and results show that AntNet is able to discover near-optimal solutions quickly even with a limited number of ants. Several configuration parameters are correlated, so changing a single parameter has little influence on the performance unless the parameters coupled to it are adjusted together. More importantly, it was shown that the theoretical shortest path found by Dijkstra's algorithm may be sub-optimal in the case of congestion, and that AntNet was able to quickly find alternative routes for the data packets. Thus AntNet provides inherent load balancing capabilities and is robust to changing traffic conditions, making it an attractive routing protocol. However, with larger and denser networks, AntNet's performance degraded, suggesting it may require a longer training period. Assessing the scalability of the AntNet algorithm and its applicability to ad hoc networks remains future work.

Annotated Bibliography

- [1] Di Caro, G. and Dorigo, M., 1998. AntNet: Distributed stigmergetic control for communications networks. Journal of Artificial Intelligence Research, 9, pp.317-365.

- [2] Dhillon, S.S. and Van Mieghem, P., 2007. Performance analysis of the AntNet algorithm. Computer Networks, 51(8), pp.2104-2125.

- [3] Bianchi, L., Dorigo, M., Gambardella, L.M. and Gutjahr, W.J., 2009. A survey on metaheuristics for stochastic combinatorial optimization. Natural Computing, 8(2), pp.239-287.

- [4] Dorigo, M., 2007. Ant Colony Optimization. Scholarpedia.

- [5] Schrijver, A., 2006. A Course in Combinatorial Optimization.

- [6] Bertsekas, D.P., Gallager, R.G. and Humblet, P., 1992. Data networks (Vol. 2). New Jersey: Prentice-Hall International.

- [7] Di Caro, G. and Dorigo, M., 1997. AntNet: A mobile agents approach to adaptive routing. Technical Report IRIDIA/97-12, IRIDIA, Université Libre de Bruxelles, Belgium.
