CNNs in Image Segmentation

Principal Author: Borna Ghotbi

This article is about image segmentation and how Convolutional Neural Networks (CNNs) are used to solve this problem. The two papers discussed on this page are:

- Paper 1: Mask R-CNN, Kaiming He, Georgia Gkioxari, Piotr Dollár, Ross Girshick.[1]

- Paper 2: Fully Convolutional Instance-aware Semantic Segmentation, Yi Li, Haozhi Qi, Jifeng Dai, Xiangyang Ji, Yichen Wei.[2]

Abstract

In computer vision, image segmentation is the process of partitioning a digital image into multiple segments. Current image segmentation techniques include region-based segmentation, edge-detection segmentation, clustering-based segmentation, and segmentation based on weakly-supervised learning in CNNs. We discuss two different approaches to image segmentation. The first paper is the more recent one; it proposes the Mask R-CNN architecture rather than a Fully Convolutional Network. This article gives an introduction to image segmentation, briefly describes the two papers, and discusses the contributions of one over the other.

Builds on

This work builds on Convolutional Neural Networks, which are one type of artificial neural network.

Related Pages

The article on "Image Classification With Convolutional Neural Networks" describes a related task on images, object detection. Both image segmentation and object detection recognize all the objects in an image, but in image segmentation the output marks each object by classifying the pixels of the image. You may also find the article "Image Colourization using Deep Learning" interesting.

Content

Introduction

For the last three decades, image segmentation has been one of the most difficult problems in computer vision. Image segmentation differs from image classification or object recognition in that it is not necessary to know beforehand what the visual concepts or objects are. To be specific, an object classifier will only classify objects for which it has specific labels, such as horse, auto, house, or dog. An ideal image segmentation algorithm will also segment objects that are new or unknown.

There are numerous applications where image segmentation could improve existing algorithms, from cultural heritage preservation to image copy detection, satellite imagery analysis, on-the-fly visual search, and human–computer interaction. In all of these applications, having access to segmentations would allow the problem to be approached at a semantic level. For example, in content-based image retrieval, each image could be segmented as it is added to the database. When a query is processed, it could be segmented as well, allowing the user to query for similar segments in the database (e.g., find all of the motorcycles in the database). In human–computer interaction, every part of each video frame would be segmented so that the user could interact at a finer level with other humans and objects in the environment. In the context of an airport, for example, the security team is typically interested in any unattended baggage, some of which could hold dangerous materials. It would be beneficial to be able to query for all objects that were left behind by a human. Nowadays, the most important application of image segmentation is in medical analysis.

Given a new image, an image segmentation algorithm should output which pixels of the image belong together semantically. Instance segmentation is challenging because it requires the correct detection of all objects in an image while also precisely segmenting each instance. For example, in Fig. 1, the input image consists of an audience watching two motorcyclists in a race. In Fig. 2, we see the ideal segmentation, which clusters the pixels by semantic object: all of the pixels belonging to a motorcycle are colored green to show they belong together, and similarly the riders and the audience are colored pink.

Furthermore, image segmentation has been broadly used in medical image computing, where it became more popular with the rise of deep learning methods for image segmentation.

Fig. 3 shows an example of tumour detection and the use of image segmentation.

The resultant segmentation accuracy was assessed by comparing the automatically obtained lung areas with the manual version using Dice’s coefficient, which is calculated as:

$$D = \frac{2\,|T \cap S|}{|T| + |S|}$$

where:

- T is the lung area resulting from manual segmentation, considered as ground truth.

- S is the area obtained through automatic segmentation using the neural network. [3]
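As a minimal sketch, the Dice coefficient can be computed on two binary masks using its standard definition, 2|T ∩ S| / (|T| + |S|). The function name, the nested-list mask format, and the toy 3×3 masks are illustrative assumptions and do not come from the cited experiment.

```python
def dice_coefficient(truth, pred):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    t = [bool(v) for row in truth for v in row]
    s = [bool(v) for row in pred for v in row]
    if len(t) != len(s):
        raise ValueError("masks must have the same number of pixels")
    # |T ∩ S|: pixels marked as foreground in both masks.
    intersection = sum(1 for a, b in zip(t, s) if a and b)
    total = sum(t) + sum(s)  # |T| + |S|
    # Convention chosen here (an assumption): two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical toy masks: T is the manual mask, S the automatic one.
manual = [[0, 1, 1],
          [0, 1, 1],
          [0, 0, 0]]
automatic = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
score = dice_coefficient(manual, automatic)  # 2 * 3 / (4 + 3)
print(round(score, 3))
```

A value of 1 means the two masks coincide exactly, and a value of 0 means they do not overlap at all.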

Region Based Segmentation

Region-based methods generally follow the “segmentation using recognition” pipeline, which first extracts free-form regions from an image and describes them, and then classifies each region. At test time, the region-based predictions are transformed into pixel predictions, usually by labeling a pixel according to the highest-scoring region that contains it.
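The last step, turning region predictions into pixel predictions, can be sketched as follows. This is only an illustration under assumed data structures: each region is a hypothetical `(mask, label, score)` tuple whose mask is a set of `(row, col)` pixels, and pixels covered by no region fall back to a `"background"` label.

```python
def regions_to_pixel_labels(height, width, regions, background="background"):
    """Label each pixel with the class of the highest-scoring region containing it."""
    labels = [[background] * width for _ in range(height)]
    best = [[float("-inf")] * width for _ in range(height)]
    for mask, label, score in regions:
        for r, c in mask:
            # Keep the label of the best-scoring region seen so far for this pixel.
            if score > best[r][c]:
                best[r][c] = score
                labels[r][c] = label
    return labels


# Two hypothetical overlapping regions on a 2x3 image.
regions = [
    ({(0, 0), (0, 1), (1, 0), (1, 1)}, "horse", 0.6),
    ({(1, 1), (1, 2)}, "dog", 0.9),
]
labels = regions_to_pixel_labels(2, 3, regions)
for row in labels:
    print(row)
```

Pixel (1, 1) lies in both regions and takes the label of the higher-scoring one.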

Regions with CNN features (R-CNN) is one representative work among the region-based methods. It performs semantic segmentation based on object detection results. To be specific, R-CNN first uses selective search to extract a large number of object proposals and then computes CNN features for each of them. Finally, it classifies each region using class-specific linear SVMs. Compared with traditional CNN structures, which are mainly intended for image classification, R-CNN can address more complicated tasks such as object detection and image segmentation, and it has become an important basis for both fields. Moreover, R-CNN can be built on top of any CNN structure, such as AlexNet, VGG, GoogLeNet, and ResNet. [4]

We first discuss the main architecture, which is also used in Faster R-CNN, then explain how the segmentation part is added to it, and finally discuss how the architecture of Paper 1 works. Faster R-CNN has two networks: a region proposal network (RPN) that generates region proposals, and a network that uses these proposals to detect objects.

Region Proposal Network

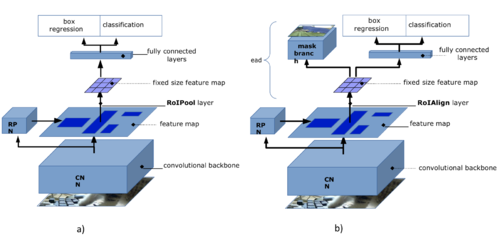

The most important component in Faster R-CNN is the region proposal network (RPN). The output of an RPN is a set of boxes, or proposals, that are examined by a classifier and a regressor to check for the occurrence of objects. More precisely, the RPN predicts the probability of an anchor being background or foreground and refines the anchor. Anchors, the reference boxes, play an important role in Faster R-CNN. In the default configuration of Faster R-CNN, there are 9 anchors of different sizes and shapes at each position of an image, which helps to detect regions better. The architectures of Faster R-CNN and Mask R-CNN are shown in Fig. 4.a.
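To make the 9 anchors per position concrete, the sketch below generates them as 3 scales × 3 aspect ratios centred on one location. The scales (128, 256, 512 pixels) and ratios (1:2, 1:1, 2:1) follow the defaults reported in the Faster R-CNN paper; the function name and the `(x_min, y_min, x_max, y_max)` box format are assumptions made for illustration.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return anchor boxes (x_min, y_min, x_max, y_max) centred at (cx, cy)."""
    anchors = []
    for scale in scales:
        area = float(scale * scale)  # every anchor of this scale has this area
        for ratio in ratios:         # ratio is height / width
            w = math.sqrt(area / ratio)
            h = w * ratio
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(300, 200)
print(len(anchors))
for box in anchors[:3]:
    print([round(v, 1) for v in box])
```

In the full network this set is repeated at every position of the feature map, and the RPN predicts an objectness score and a box refinement for each anchor.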

An overview on Paper1

As explained in the previous sections, R-CNNs are mainly used for object detection. To perform image segmentation in addition to object detection, the authors of Paper 1 propose a new architecture called Mask R-CNN. Mask R-CNN uses region-based segmentation and builds on the earlier Faster R-CNN [5]. It adds a branch for predicting an object mask, in parallel with the existing branch for bounding box recognition, to perform the image segmentation. The architecture is shown in Fig. 4.b. As the figure shows, the two main contributions of this architecture are RoI Align and the parallel mask branch just described. Unlike object detection, where the accuracy of the bounding box is not critical, image segmentation requires each pixel to be classified accurately. The motivation for using Region of Interest (RoI) Align instead of RoI Pooling is to obtain a more accurate classification of each pixel. RoI Pool can cause misalignment between the RoI and the extracted features because it quantizes the RoI coordinates, which causes a small shift between the generated mask and the original instance. RoI Align, in contrast, uses bilinear interpolation on the feature map and avoids quantization.
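The difference between the two lookups can be illustrated on a single sampling point. This is a minimal sketch, not the Mask R-CNN implementation: the function names and the toy 2×2 feature map are assumptions, and the real RoI Align layer samples several points per output bin and aggregates them rather than reading one point.

```python
import math


def bilinear_sample(feature, y, x):
    """RoI Align style lookup: interpolate a 2-D feature map at real-valued (y, x)."""
    h, w = len(feature), len(feature[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def quantized_sample(feature, y, x):
    """RoI Pool style lookup: round the coordinates to the grid before reading."""
    h, w = len(feature), len(feature[0])
    yi = min(max(int(math.floor(y + 0.5)), 0), h - 1)
    xi = min(max(int(math.floor(x + 0.5)), 0), w - 1)
    return feature[yi][xi]


feature = [[0.0, 10.0],
           [20.0, 30.0]]
print(bilinear_sample(feature, 0.25, 0.75))   # blends all four neighbours
print(quantized_sample(feature, 0.25, 0.75))  # snaps to the nearest cell
```

The quantized lookup discards the fractional part of the position, which is the source of the small shift described above, while the interpolated value changes smoothly as the RoI moves.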

An overview on Paper2

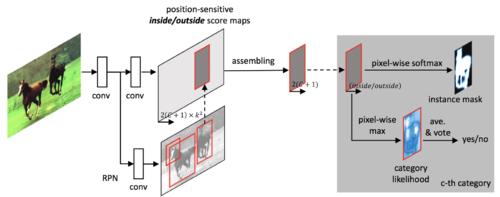

Fully Convolutional Networks (FCNs) [6] train a classifier to predict, for each pixel, a likelihood score of how much the pixel belongs to some object category. They learn a mapping from pixels to pixels without extracting region proposals. The first proposed FCN was translation invariant and unaware of individual object instances, and the tasks of object detection and image segmentation were done separately, so their parameters could not be learned at the same time. Since FCNs are composed of convolutional, pooling and upsampling layers, they can be trained end to end, depending on the definition of the loss function. The authors of Paper 2 propose a translation-variant model, FCIS, which detects and segments object instances jointly and simultaneously. Figure 5 shows their architecture. For detection, they use max to differentiate cases, and for segmentation, they use softmax to differentiate cases. A region proposal network (RPN) shares the convolutional feature maps with FCIS. The proposed regions of interest (RoIs) are applied to the score maps for joint object segmentation and detection. The learnable weight layers are fully convolutional and computed on the whole image, so the per-RoI computation cost is negligible.
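The use of max for detection and softmax for segmentation can be sketched on a pair of per-pixel score maps for one RoI and one category. This is a rough illustration under assumptions: the names `inside` and `outside` for the two score maps, the averaging of the per-pixel maxima into a single RoI score, and the toy numbers are not taken from this article and should be checked against the FCIS paper.

```python
import math


def fuse_inside_outside(inside, outside):
    """Return (foreground probability per pixel, detection score) for one RoI."""
    mask_prob = []
    maxima = []
    for row_in, row_out in zip(inside, outside):
        prob_row = []
        for a, b in zip(row_in, row_out):
            m = max(a, b)
            maxima.append(m)  # detection: a pixel supports the RoI if either score is high
            # Segmentation: two-way softmax over (inside, outside), numerically stabilised.
            ea, eb = math.exp(a - m), math.exp(b - m)
            prob_row.append(ea / (ea + eb))
        mask_prob.append(prob_row)
    # Assumption: the RoI-level detection score is the mean of the per-pixel maxima.
    roi_score = sum(maxima) / len(maxima)
    return mask_prob, roi_score


# Hypothetical 2x2 score maps for one RoI.
inside = [[2.0, -1.0], [0.0, 3.0]]
outside = [[-2.0, 1.0], [0.0, -3.0]]
mask_prob, roi_score = fuse_inside_outside(inside, outside)
print(roi_score)
print([[round(p, 3) for p in row] for row in mask_prob])
```

Both outputs are derived from the same two score maps, which is how a single set of shared maps can serve detection and segmentation at once.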

Incremental Contribution of Paper 1 over Paper 2

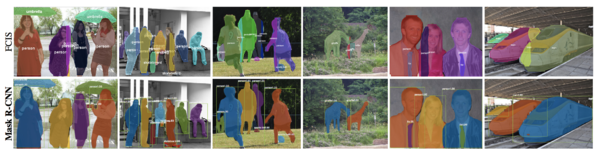

Although both papers solve the same problem, i.e. image segmentation, they do so using different techniques, as described above. Paper 1 uses a segmentation branch in parallel with the object detection branch, which adds only a small overhead compared with performing object detection alone. Paper 2, in contrast, uses an end-to-end model that includes object detection as its first phase, which increases the training time and affects the performance. The authors of Paper 1 mention that the model proposed in Paper 2 (FCIS) exhibits systematic errors on overlapping instances and creates spurious edges, showing that it is challenged by the fundamental difficulties of segmenting instances. The results of applying both models to the same images are shown in Fig. 6.

My thoughts and Future Directions

The interesting thing about this area of study is the pace of improvement. It took only three years to get from the first R-CNN to the Mask R-CNN paper. This shows how fast computer vision, and specifically the use of neural networks, is improving, and it suggests directing more investment and funding to these areas. There is a variety of architectures for addressing problems in computer vision, which gives us the freedom to select the best one for the required efficiency or simplicity. As shown in this article, although the Mask R-CNN architecture does not have a complex implementation, it outperforms the other architecture. There are several ways to extend these architectures. One is to add an attention mechanism to help detect objects more precisely. Furthermore, the Mask R-CNN approach can be used in real-life applications. As mentioned, medical analysis is one of the most critical applications that can make use of this approach and support doctors in their decisions. Autonomous cars are another hot topic; they also use image segmentation to detect pedestrians and to improve the planning stage.

Annotated Bibliography

- [1] Kaiming He, Georgia Gkioxari, Piotr Dollár, Ross Girshick. "Mask R-CNN." ICCV, 2017.

- [2] Yi Li, Haozhi Qi, Jifeng Dai, Xiangyang Ji, Yichen Wei. "Fully Convolutional Instance-aware Semantic Segmentation." CVPR, 2017.

- [3] https://blog.altoros.com/experimenting-with-deep-neural-networks-for-x-ray-image-segmentation.html

- [4] https://link.springer.com/article/10.1007/s13735-017-0141-z

- [5] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. "Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks." NIPS, 2015.

- [6] Jonathan Long, Evan Shelhamer, Trevor Darrell. "Fully Convolutional Networks for Semantic Segmentation." CVPR, 2015.
