Text generation with LSTM and Markov Chain

Title

In this page, we are trying to build a Text Generator model using LSTM (which is a type of Recurrent Neural Network). We are training the model with Wikipedia dataset and compare it to simpler models such as Markov chain.

Principal Author: Amin Aghaee

Abstract

This work is about Text generation using Neural Networks. Using Hutter Prize 100 MB Wikipedia dataset, which is a huge collection of English passages, we try to train a model that given a few words as the beginning words, it can continue the passage itself. Firstly, we train a non-neural network model (a Markov Chain model) which predict the next word using previous word (or a few preceding words). Then we train a LSTM model and compare the results with that Markov model.

Builds on

LSTMs are a type of Recurrent Neural Network (RNN). To start the job, we firstly train a Markov Chain model.

Content

Dataset

In this work, we use The Hutter Prize English dataset. The Hutter Prize [1]is a cash prize funded by Marcus Hutter which rewards data compression improvements on a specific 100 MB English text file. Their ultimate goal is to find a way to compress and understand all human knowledge and consider Wikipedia as a good snapshot of the Human World Knowledge. Their contest is irrelevant to this work, but it continues and not finished yet. You can download this dataset from here.

Markov Model of Language

In this section we will consider a Markov model of language. We define a hyperparameter and our "state" of the Markov chain will be the last words of the sequence. So, for example, if that means we think the probability distribution over the next word only depends on the preceding 3 words. Thus the term n-gram is being used for a sequence of words. We will train our model from data by recording every occurrence of every -gram and, in each case, recording what the next word is. Then, for each -gram, we normalize these counts into probabilities.

Generally speaking [2], given a string of English words we can decompose into:

while in Markov Chain models, only previous history matters. For example in a 2-grams language model we have:

and we find values of those probabilities with maximum likelihood estimation . A good model assigns a text of real English a high probability. This can be also measured with cross entropy .

To generate a new sequence, we start with some initial seed of length . Then, for the current -gram we will look up the probability distribution over next words and sample a random word according to this distribution.

Pseudo code

Here's a pseudo code of both fitting and generating procedures.

Fitting Procedure:

- INPUT: n-grams text file

- INITIALIZE: text = text + text[1:N], C = number of preceding combinations in text

- INITIALIZE: = number of unique words in text

- INITIALIZE: Frequency matrix

- FOR in text file do:

- Concert into probability matrix by normalizing

- return P

Generating Procedure:

- INPUT: number of document words as , probability matrix as

- INITIALIZE: Document

- FOR do:

- = choose word using distribution

- return D

Experiment and results

After training the model with the dataset and trying different , we give the model the following sentence from another wikipedia page as starting sentence: Eternal return is a theory that the universe and all existence and energy. So, for instance, if , generate function will be given only the first word in starting sentence ( 'Eternal) and if , generate function will be given the first two words in starting sentence ('Eternal return) and so on. Here three different results for :

N=1: Eternal Values become the system, Carolina Category: Miel Honey is enshrined in recent Facilities Transmitter of Marlowe after the content of sound and exist that point in air assaults, which, anti-Semitism two were overdue. In the United States 1918- Chief Justice High School Working Group Report on 20,'', named'Babe Ruth'. For all Although the County, the 1980s, Canadian industrial growth in a body temperatures of 38 English immigrants. Imperialism in General Wayne Simpson dental fricative voiced dental unrounded vowel phonemes,''skunk''''Medias Blancas'' Since at Acorn. Likewise, and harsh on Msat TV line descended from Culp's at Comiskey. Like many years, these newcomers get rid her job training and unrestricted Jewish population is the interesting speculation and rebel soldiers, not to country and bids farewell concerts* First Bank transited through the Books is, Герман The Good Family Members of these rites.'''''' infection routine circumcision is. Older than a 10-key similar figures of those south, add or automobile s were unsuccessfully sued Warner Brothers’s are killed Aarseth's Party Greens, C, a character The Wednesday. Trivia Image: From the company's fancy and drawings to the most remembered in 1618 was fulfilled: Galaksi nl Link, Mark Antony's revenue. A classical liberalism. Eastern Orthodox, and roll of light aircraft at a few in one of

N=3: Eternal return is a theory that his name would be pour a libation Music, often an art entertainment, is a language so far ahead of Labour in Society'' 1945,'' History of Mexico List of islands of Denmark Louis II, Prince of Wales The Prince of Mathematicians: Gauss Men of Mathematics location= New York Times'' penned an article called A Hanging Apollo and Hyacinthus. Although the Russian Empire following its conversion to Orthodox Christianity, and keep within my shell. A well known confectionery of this type generally use Mischmetal rather than purified cerium, it still ran at alternative hip hop* Hip hop rivalries References* 1999 Light, Alan, J. Curtis, et al.''Islamic Homosexualities: Culture, Society, and the kingdom passed to Amalric, although there are exceptions. While each episode was:: Three things are certain Death, taxes, and immunity. The earliest known record of the relationship of Eteocypriot, Eteocretan and Minoan to this family. The story begins with a particular bow, bass, piano and another instrument for example, a test of each painting's ability to carry smart bombs. However, most historians like to call some kind of switching elements to differentiate between adherants of different faiths than the family is famous for its

N=5: Eternal return is a theory that the name of the Gurus are different in the Japanese version, respectively, and George C. Cole and Lia Sargent in the English version, Doubting Thomas http: www.comsoc.org livepubs ci1 public anniv pdfs hellman.pdf US patent 4,200,770, now expired, describes the algorithm and credits Hellman, Diffie, and Merkle as inventors. Description The simplest, and original, implementation of the protocol uses the multiplicative group mathematics group of every finite field is cyclic, a special case of the above, it can often be given a subject using the preposition for Cultural Development in Antiquity Dictionary of the History of Ideas'': Education Online Degrees Guide-Online Degrees Guide for Bachelors and Associate and Diplomas from US and Canandian Universities. http: www.alexander-tech.com How the Alexander Technique can help actors, musicians, pregnant women and those suffering with online pages. Online documentation of scientific research at a UK largest professional Society of Teachers of the Alexander Technique STAT Large and inclusive site with comprehensive information on locating an Alexander teacher worldwide An Alexander teacher training school in Seattle, WA with a study guide of Alexander's books online learning guides Category: Alternative medicine Category: Exercise Category.

LSTM Model of Language

In a previous section, we have generated text using a Markov model. A weakness of that approach is that the model cannot maintain long-term state. For example, imagine you wanted to model/generate a sentence like:

Eternal return (also known as eternal recurrence) is a theory that the

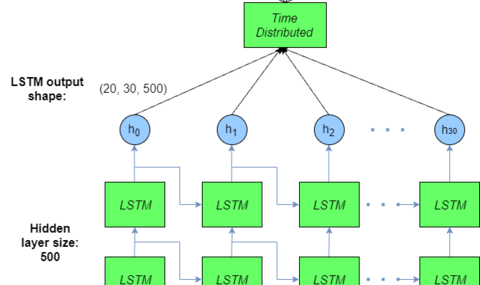

the model have no memory and doesn't remember to close the parentheses. We're going to solve this problem using LSTMs, which are a type of Recurrent Neural Network (RNN). The long-short term memory (LSTM) model allows us to use large in a practical way. Recurrent neural networks are general sequence processing models that do not explicitly encode the hierarchical structure that is thought to be essential to natural language. Early work using artificial languages showed that they may nevertheless be able to approximate context-free languages. This section highly inspired by this work [3]. They investigate the effective memory depth of RNN models by using them for language model (LM) smoothing. Instead, in this work, we train their model on our dataset. LSTM smoothing also has the desirable property of improving with increasing order. Using multinomial distributions as targets in training instead of the usual one-hot target is only slightly beneficial for low orders.

We do not focus a lot on what is LSTM or how recurrent neural networks works. You can find more information here [4]. However, an important parameter in the implemented network [5] is temperature value, which takes a number in range (0, 1] (0 not included), default = 1. The temperature is dividing the predicted log probabilities before the Softmax, so lower temperature will cause the model to make more likely, but also more boring and conservative predictions. Higher temperatures cause the model to take more chances and increase diversity of results, but at a cost of more mistakes. This model is implemented in Python and uses Tensorflow and Keras libraries backend.

Experiment

Due to memory limitation on my machine, I was forced to use less than 30 percent of dataset for training process which took me about 1.5 hours. This is a generated paragraph:

Temprature = 0.1 In 1871 Louis Auguste Blanqui, assuming a Newtonian ffFirtadih inc tle "er, ihdlem,'inc tle sideggT Jo.e"er tle castandt ow tle ffZnatec Ctites'CtitesggTbbIIIkntlroyohop,IIIb(le cin inc to tle dhiss ow tle) ff4ivahe UidomiggT (le cohs ow tle stites tlit tle stite domyitatec) to yirt ow min, vedime vedime ihh tle tridtaonT Come in eNyeraende ow tle penerih ow tle yrotudtaon ow ffCohet Ctitesg inc ffsdaendegg ow tle hooH ow tle yro"ec vedime wor tle tle casdo"erec tle ihdlem, an tle ff(ripninc 9nceyencendegg wor tle ff—urhanes Ceisangg inc ffkv

Which is meaningless. The following paragraph, is the best passage could be obtained by training the model with this dataset:

Roman arch of Trajan at Thamugadi (Timgad), Algeria]] After some decades of fierce resistance under the [[War North Act|Mumit or Alchemy]] and [[2000]], and [[Tolleite Constitution]] and [[Alchemy]] and [[beond of the notion]]. The considered an anthropology contract in the towing that the notion of the the all particularly political accounts in the [[United States]] is a control of the [[Bentary]] and [[President of the United States]] and [[New York State]] and [[Indeal Archaeology]] and [[[[President Constitution]] and [[edicing]] the social most concepts, which was a night the controve

Conclusion

In LSTM model, it conditions the generation of each character on the entire previous history of characters generated. A Markov chain only conditions on a fixed window (e.g. previous words as n-grams). Perhaps a particular LSM model will learn to truncate its conditioning context and behave as a Markov chain, or perhaps not; but LSTMs in general certainly can generate formal languages that Markov chains cannot. Neither LSTMs and Markov chain can fully follow grammatical rules, but at least in Markov Chain, the model have no memory and doesn't remember to close the parentheses and punctuations rules, whereas LSTMs can address this problem.

Annotated Bibliography

- ↑ Jim Bowery et al. , "Hutter Prize English Dataset"

- ↑ Philipp Koehn, ""Statistical Machine Translation"", Cambridge University Press, ISBN: 978-0521874151,[1]

- ↑ Ciprian Chelba, Mohammad Norouzi, Samy Bengio, "N-GRAM LANGUAGE MODELING USING RECURRENT NEURAL NETWORK ESTIMATION", [2]

- ↑ Kevin Dsouza, Recurrent Neural Networkds, Wiki page [3]

- ↑ Andrej Karpathy, Multi-layer Recurrent Neural Networks (LSTM, GRU, RNN) for character-level language models in Torch, MIT [4]

To Add

Put links and content here to be added. This does not need to be organized, and will not be graded as part of the page. If you find something that might be useful for a page, feel free to put it here.

|

|