Course:CPSC522/Knowledge-Aware Graph Networks for Commonsense Reasoning

Exploring the Power of Graph Convolutions for Commonsense Reasoning in NLP

This page explores the power of graph convolutions for commonsense reasoning in NLP through the following two papers:

- KagNet: Knowledge-Aware Graph Networks for Commonsense Reasoning (EMNLP-IJCNLP 2019)

- Semi-Supervised Classification with Graph Convolutional Networks (ICLR 2017)

Principal Author: Mehar Bhatia

Collaborators:

Abstract

Empowering machines with the ability to perform commonsense reasoning (the ability to make presumptions about ordinary situations in daily life) has been seen as a bottleneck for artificial general intelligence. In this study, we explore a novel knowledge-aware graph network module named KagNet. To encode schema graphs grounded in external knowledge and use them to answer commonsense questions, the paper uses Graph Convolutional Networks (GCNs) as the core component of its reasoning framework. Drawing on concepts from the second paper, this page also builds intuition for why GCNs are useful for commonsense reasoning in NLP.

Builds on

The key idea behind KagNet is to incorporate external knowledge into the reasoning process through the use of a knowledge graph.

A knowledge graph is a graph-based representation of external knowledge that links entities and their relationships. The KagNet module is designed to process information by passing messages along the edges of the knowledge graph, guiding the flow of information through the network and informing the reasoning process.

The following related pages cover the fundamentals used in this page.

- Natural Language Processing: Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data.

- Knowledge Graphs: In knowledge representation and reasoning, a knowledge graph is a knowledge base that uses a graph-structured data model or topology to integrate data.

- Commonsense Reasoning: In artificial intelligence (AI), commonsense reasoning is a human-like ability to make presumptions about the type and essence of ordinary situations humans encounter every day.

- Graph Neural Networks: This page by my classmate Nikhil Shenoy provides a foundation on Graph Neural Networks.

Background

Can Commonsense inferences help question-answering?

Recently, several large-scale datasets have emerged for testing machine commonsense, each with a different focus[1][2][3]. In a typical dataset, CommonsenseQA[4], given a question like “Where on a river can you hold a cup upright to catch water on a sunny day?” with the five answer choices {waterfall(✓), bridge(✗), valley(✗), pebble(✗), mountain(✗)}, a commonsense reasoner is expected to choose the most plausible answer from the candidates. The false choices are usually highly related to the question context but are less plausible in real-world scenarios, which makes the task even more challenging. The dataset consists of 12,102 natural language questions in total that require human commonsense reasoning ability to answer. CommonsenseQA is gathered directly from human annotators and covers a broad range of commonsense types, including spatial, social, causal, physical, and temporal knowledge.

Pre-trained Large Language Models

Simply fine-tuning large pre-trained language models such as GPT[5] and BERT[6] can be a very strong baseline. However, a significant gap to human performance remains. Moreover, current neural reasoning approaches lack transparency and interpretability: it is unclear how these models manage to answer commonsense questions, which makes their inferences dubious.

Commonsense Knowledge Bases

The authors are specifically interested in proposing reasoners that can exploit commonsense knowledge bases [7][8][9]. Knowledge-aware models can explicitly incorporate external knowledge as relational inductive biases [10] to enhance their reasoning capacity, as well as to increase the transparency of model behaviours for more interpretable results. Furthermore, a knowledge-centric approach is extensible through commonsense knowledge acquisition techniques [11][12].

Problem Statement

Given a commonsense-required natural language question $q$ and a set of candidate answers $\{a_i\}$, the task is to choose one answer from the set. No additional context is given along with the question. From a knowledge-aware perspective, the question $q$ and the choices $\{a_i\}$ can be grounded as a schema graph (denoted as $g$) extracted from a large external knowledge graph $G$, which helps measure the plausibility of the answer candidates. The knowledge graph $G = (V, E)$ is described as a fixed set of concepts $V$ and typed edges $E$ describing semantic relations between concepts. The goal is to effectively ground and model schema graphs (in the symbolic space) to improve reasoning over question-answer candidates (in the semantic space).

Overview of Reasoning Workflow in KagNet

The KagNet module consists of two main components: (i) the graph network module and (ii) the knowledge graph, each described in detail in the following sections. In brief, the graph network module processes the input data and produces intermediate representations, while the knowledge graph supplies information that helps the graph network module make decisions and refine those representations.

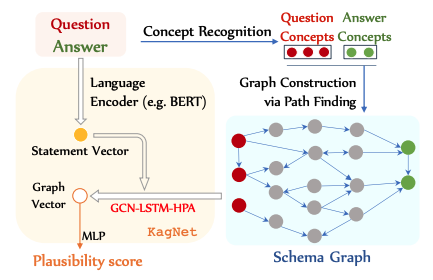

Walking through the proposed framework in Figure 1, the framework accepts a pair consisting of a question $q$ and a candidate answer $a$. The next section explains the schema graph grounding stage, and the section after it covers the core component, the knowledge-aware graph network.

Schema Graph Grounding

Grounding the knowledge graph involves linking the entities in the graph to specific instances in the input data. This stage has two parts: (i) recognizing the concepts mentioned in the text, and (ii) constructing schema graphs by retrieving paths in the knowledge graph and then pruning noisy paths.

Concept Recognition

The authors choose ConceptNet[13] as the commonsense knowledge base because of its generality. As the first step, the n-grams of the question and the answer are matched to concepts, giving the sets of mentioned concepts $C_q$ and $C_a$, respectively. Pre-processing such as lemmatization, stop-word removal, and morphological restoration is applied to these tokens to find the best match in the concept set $V$.
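The matching step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: `CONCEPTS` is a hypothetical toy vocabulary standing in for ConceptNet's concept set $V$, `STOP_WORDS` is a tiny hand-picked list, and `normalize` is a crude plural-stripping stand-in for real lemmatization.

```python
import re

# Hypothetical toy vocabulary standing in for ConceptNet's concept set V.
# Multi-word concepts are joined with underscores, as ConceptNet does.
CONCEPTS = {"river", "cup", "hold_cup", "catch_water", "water", "sunny_day", "waterfall", "bridge"}
# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"a", "an", "the", "on", "to", "can", "you", "where", "what", "is", "of", "in"}


def normalize(token: str) -> str:
    """Crude stand-in for lemmatization: strip a trailing plural 's'."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def match_concepts(text: str, max_n: int = 3) -> set[str]:
    """Return every concept whose name equals some n-gram (n <= max_n) of the text."""
    tokens = [normalize(t) for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]
    found = set()
    for n in range(1, max_n + 1):
        for start in range(len(tokens) - n + 1):
            name = "_".join(tokens[start:start + n])
            if name in CONCEPTS:
                found.add(name)
    return found


question = "Where on a river can you hold a cup upright to catch water on a sunny day?"
c_q = match_concepts(question)     # question concepts C_q
c_a = match_concepts("waterfall")  # answer concepts C_a
```

Here `c_q` contains both `hold_cup` and `cup`; keeping overlapping matches is a simplification, and a real pipeline needs a policy for choosing among them.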

Schema Graph Construction

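For each question concept $c_i^{(q)} \in C_q$ and answer concept $c_j^{(a)} \in C_a$, a path-finding algorithm efficiently retrieves short paths between them in $G$, and the union of these paths forms a compact, relevant sub-graph $g$ (in the spirit of a minimal spanning sub-graph, i.e., a least-weight tree connecting the mentioned concepts).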
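A sketch of this step, assuming the knowledge graph is a small hypothetical adjacency dict of `(relation, neighbour)` pairs and using breadth-first enumeration of simple paths up to a hypothetical `max_hops` bound. The paper's exact search procedure and length limit may differ.

```python
from collections import deque

# Hypothetical toy knowledge graph: concept -> list of (relation, neighbour).
KG = {
    "river": [("HasA", "waterfall"), ("RelatedTo", "water")],
    "water": [("AtLocation", "waterfall"), ("RelatedTo", "river")],
    "waterfall": [("RelatedTo", "water")],
    "cup": [("UsedFor", "water")],
}


def find_paths(kg, source, target, max_hops=2):
    """Enumerate simple paths from source to target with at most max_hops edges.

    Each path is a list of (head, relation, tail) triples.
    """
    paths = []
    queue = deque([(source, [])])
    while queue:
        node, triples = queue.popleft()
        if node == target and triples:
            paths.append(triples)
            continue
        if len(triples) == max_hops:
            continue
        visited = {source} | {tail for _, _, tail in triples}  # keep paths simple
        for relation, neighbour in kg.get(node, []):
            if neighbour not in visited:
                queue.append((neighbour, triples + [(node, relation, neighbour)]))
    return paths


def build_schema_graph(kg, question_concepts, answer_concepts, max_hops=2):
    """Schema graph g as the set of edges on all retrieved question-answer paths."""
    edges = set()
    for q in question_concepts:
        for a in answer_concepts:
            for path in find_paths(kg, q, a, max_hops):
                edges.update(path)
    return edges


river_paths = find_paths(KG, "river", "waterfall")
schema_edges = build_schema_graph(KG, {"river", "cup"}, {"waterfall"})
```

Bounding the path length keeps the search cheap on a graph the size of ConceptNet, at the cost of missing longer but possibly relevant connections.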

To prune irrelevant paths from potentially noisy schema graphs, the authors use knowledge graph embedding (KGE) techniques such as TransE[14] to pre-train concept embeddings $V$ and relation type embeddings $R$. Each path is scored by decomposing it into a set of triples, whose confidence can be measured directly by the scoring function of the KGE method (i.e., the confidence of triple classification). The pre-trained embeddings are also used to initialize KagNet.
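A minimal pruning sketch under stated assumptions: TransE treats a triple $(h, r, t)$ as plausible when $\|h + r - t\|$ is small; mapping that distance to a confidence with $e^{-d}$, scoring a path as the product of its triple confidences, and the threshold of 0.5 are all hypothetical choices here, as are the toy embeddings.

```python
import math


def transe_distance(h, r, t):
    """TransE distance ||h + r - t||_2; a small distance means a plausible triple."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def triple_confidence(h, r, t):
    """Map the distance to a confidence in (0, 1]; exp(-d) is an assumed choice."""
    return math.exp(-transe_distance(h, r, t))


def path_score(path, concept_emb, relation_emb):
    """Assumed path score: product of the confidences of its (head, relation, tail) triples."""
    score = 1.0
    for head, rel, tail in path:
        score *= triple_confidence(concept_emb[head], relation_emb[rel], concept_emb[tail])
    return score


def prune_paths(paths, concept_emb, relation_emb, threshold):
    """Keep only the paths whose score reaches the (hypothetical) threshold."""
    return [p for p in paths if path_score(p, concept_emb, relation_emb) >= threshold]


# Hypothetical 2-d embeddings, chosen so that the first path fits TransE exactly.
concept_emb = {"river": [0.0, 0.0], "water": [1.0, 0.0], "waterfall": [1.0, 1.0], "pebble": [5.0, 5.0]}
relation_emb = {"RelatedTo": [1.0, 0.0], "AtLocation": [0.0, 1.0]}
good = [("river", "RelatedTo", "water"), ("water", "AtLocation", "waterfall")]
noisy = [("river", "RelatedTo", "pebble")]
kept = prune_paths([good, noisy], concept_emb, relation_emb, threshold=0.5)
```

Because the score is a product, one implausible triple is enough to sink an otherwise good path, which is the behaviour a noise filter wants.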

Knowledge-Aware Graph Network module - KagNet

Here comes the star of the show!

The main idea behind this module is to pass messages along the edges of the schema graph to form a graph-based representation of external knowledge. The architecture has three main components: (i) a Graph Convolutional Network (GCN) that encodes the structure of schema graphs, placing the pre-trained concept embeddings in their particular context within the schema graph; (ii) a Long Short-Term Memory (LSTM) network that encodes the paths between $C_q$ and $C_a$, capturing multi-hop relational information; and (iii) a Hierarchical Path Attention (HPA) mechanism that selectively aggregates important path vectors.

Graph Convolutional Networks

The Graph Convolutional Network (GCN) component processes the structure of the schema graph and generates intermediate, low-dimensional contextual representations of its concepts. It uses graph convolution operations to propagate information along the edges of the graph, so that the model can use the relationships represented in the graph to inform its predictions.

As an overview of GCNs: the ‘convolution’ in Graph Convolutional Networks resembles the one in Convolutional Neural Networks in that the same weights are shared across positions (here, across nodes). The main difference lies in the data structure: GCNs generalize CNNs to data with irregular, non-grid structure. Including the adjacency matrix in the GCN forward pass lets each node aggregate the features of its neighbouring nodes, which can be seen as message passing between the nodes of the graph.
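The forward pass described above can be written as $H^{(l+1)} = \sigma(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}H^{(l)}W^{(l)})$ with $\tilde{A} = A + I$ and $\tilde{D}$ its degree matrix, the renormalized propagation rule of the Kipf and Welling paper. The pure-Python sketch below implements one such layer for illustration only (a real implementation would use a tensor library and sparse matrices); the 3-node path graph and weights are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj):
    """D~^{-1/2} (A + I) D~^{-1/2}: add self-loops, then normalize symmetrically by degree."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj, h, w, activation=lambda x: max(0.0, x)):
    """One GCN layer: activation(A_norm @ H @ W), with ReLU by default."""
    z = matmul(matmul(normalized_adjacency(adj), h), w)
    return [[activation(x) for x in row] for row in z]


# Hypothetical path graph 0 - 1 - 2 with one input feature that is nonzero only on node 0.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
out = gcn_layer(adj, h=[[1.0], [0.0], [0.0]], w=[[1.0]])
```

After one layer, node 1 receives part of node 0's feature while node 2, two hops away, still receives nothing; each additional layer extends a node's receptive field by one hop.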

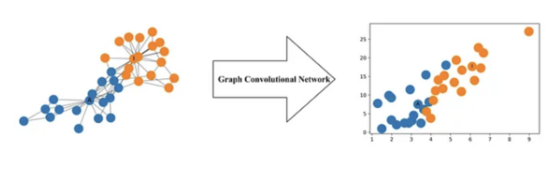

GCNs are a very powerful neural network architecture for machine learning on graphs. They are so powerful that even a randomly initialized 2-layer GCN can produce useful feature representations of the nodes in a network. Figure 3 illustrates a 2-dimensional representation of each node in a network produced by such a GCN. Notice that the relative nearness of nodes in the network is preserved in the 2-dimensional representation even without any training. That is the ‘magic’ of GCNs: the graph structure alone, propagated through random weights, already yields meaningful node features before any training.
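The effect of an untrained GCN can be reproduced on a toy scale. This sketch assumes a hypothetical network of two disconnected triangles, one-hot input features, tanh activations, and Gaussian random weights that are never trained; it is an illustration of the idea, not the setup behind Figure 3.

```python
import math
import random


def gcn_propagate(neighbours, features, weight):
    """One untrained GCN layer: symmetric-normalized neighbourhood sum (with self-loop), then tanh.

    neighbours: list of neighbour-index lists (undirected, no self-loops).
    features: one feature vector per node; weight: in_dim x out_dim matrix (list of rows).
    """
    deg = [len(nbrs) + 1 for nbrs in neighbours]  # +1 for the self-loop
    out = []
    for i, nbrs in enumerate(neighbours):
        agg = [0.0] * len(features[0])
        for j in nbrs + [i]:
            coef = 1.0 / math.sqrt(deg[i] * deg[j])
            agg = [a + coef * x for a, x in zip(agg, features[j])]
        out.append([math.tanh(sum(agg[k] * weight[k][c] for k in range(len(agg))))
                    for c in range(len(weight[0]))])
    return out


def random_matrix(rows, cols, rng):
    """Gaussian random weights; the layers are never trained."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Hypothetical toy network: two disconnected triangles, nodes 0-2 and nodes 3-5.
neighbours = [[1, 2], [0, 2], [0, 1], [4, 5], [3, 5], [3, 4]]
features = [[1.0 if i == j else 0.0 for j in range(6)] for i in range(6)]  # one-hot features
rng = random.Random(0)
hidden = gcn_propagate(neighbours, features, random_matrix(6, 4, rng))
embedding = gcn_propagate(neighbours, hidden, random_matrix(4, 2, rng))  # 2-d vector per node

same_triangle = math.dist(embedding[0], embedding[1])
other_triangle = math.dist(embedding[0], embedding[3])
```

Nodes in the same triangle end up with identical 2-d embeddings, while nodes in different triangles do not, although no weight was trained. On real graphs the effect is softer: nearby nodes get similar rather than identical embeddings.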

Returning to the KagNet module, the intuition for applying GCNs is to update each concept vector with information from its neighbouring nodes, which resolves ambiguity and yields context-dependent concept embeddings. Another advantage of GCNs is that they capture structural characteristics of the schema graph that help in reasoning. For example, a shorter and tighter connection between the question concept and the answer concept may indicate that the answer is more plausible in that context.

The authors of KagNet apply GCN to the plain version (unlabeled, non-directional) of the schema graph, ignoring the type of relationship on the edges.

Specifically, the vector for each concept $c_i$ in the schema graph $g$ is first initialized by its pre-trained embedding ($\vec{h}_i^{(0)} = V_{c_i}$). Then, at layer $l+1$, the concept vector $\vec{h}_i^{(l+1)}$ is iteratively updated by pooling the features of its neighbouring nodes $N_i$ and its own features at layer $l$, followed by a non-linear activation function $\sigma$.
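As we read the KagNet paper, this update takes the form $\vec{h}_i^{(l+1)} = \sigma\big(W_{self}^{(l)}\vec{h}_i^{(l)} + \sum_{j \in N_i}\frac{1}{|N_i|}W^{(l)}\vec{h}_j^{(l)}\big)$; treat the exact form as an assumption to check against the paper. The sketch below implements it in pure Python, with `tanh` as a placeholder for $\sigma$ and a hypothetical 3-concept schema graph.

```python
import math


def matvec(w, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def kagnet_gcn_layer(neighbours, h, w_self, w_neigh, activation=math.tanh):
    """h_i <- activation(W_self h_i + sum_{j in N_i} (1/|N_i|) W h_j) for every concept i.

    neighbours: neighbour-index lists of the plain (unlabeled, undirected) schema graph.
    """
    out = []
    for i, nbrs in enumerate(neighbours):
        total = matvec(w_self, h[i])
        for j in nbrs:
            msg = matvec(w_neigh, h[j])
            total = [t + m / len(nbrs) for t, m in zip(total, msg)]
        out.append([activation(x) for x in total])
    return out


# Hypothetical schema graph 0 - 1 - 2 with 2-d "pre-trained" embeddings h^(0).
neighbours = [[1], [0, 2], [1]]
h0 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
h1 = kagnet_gcn_layer(neighbours, h0, identity, identity, activation=lambda x: x)
```

Identity weights and no activation are used only to make the arithmetic visible: node 1's new vector is its own embedding plus the mean of its two neighbours' embeddings. In KagNet the weights are learned and $\sigma$ is a nonlinearity. Note that relation types are ignored, matching the plain schema graph described above.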

Relational Path Encoding

The next step in the KagNet architecture is the LSTM-based path encoder, which models sequential information. Because the graph representation should measure the plausibility of a candidate answer to a given question, this component takes the intermediate representations generated by the GCN and produces a representation that summarizes the schema graph in terms of the paths between the question concepts $C_q$ and the answer concepts $C_a$.

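The $k$-th path between the question concept $c_i^{(q)}$ and the answer concept $c_j^{(a)}$ can be formulated as a sequence of triples, denoted by $P_{i,j}[k]$, where each $r$ is a relation and each $t$ is an intermediate concept on the path:

$$P_{i,j}[k] = [(c_i^{(q)}, r_0, t_0), \ldots, (t_{n-1}, r_n, c_j^{(a)})]$$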

Note that the relations are represented with trainable relation vectors (initialized with the pre-trained relation embeddings $R$), and the concept vectors are the GCN outputs ($\vec{h}^{(l)}$). Thus, each triple can be represented by the concatenation of its three corresponding vectors.

An LSTM then encodes each such sequence of triple vectors into a path vector, and the path vectors of a concept pair are averaged:

$$R_{i,j} = \frac{1}{|P_{i,j}|} \sum_k \mathrm{LSTM}(P_{i,j}[k])$$

$R_{i,j}$ can be viewed as the latent relation between the question concept $c_i^{(q)}$ and the answer concept $c_j^{(a)}$, obtained by aggregating the representations of all paths between them in the schema graph.
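A compact sketch of the path encoder, assuming a minimal single-layer LSTM with random, untrained weights and no biases (omitted for brevity), and hypothetical 2-d concept and relation vectors. It concatenates $[h; r; t]$ for every triple, encodes each path, and averages the path encodings into $R_{i,j}$.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """Minimal single-layer LSTM with random (untrained) weights; returns the final hidden state."""

    GATES = ("input", "forget", "output", "cell")

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # One weight matrix per gate, applied to the concatenation [x_t ; h_{t-1}].
        self.weights = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
                   for _ in range(hidden_dim)]
            for gate in self.GATES
        }

    def __call__(self, sequence):
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        for x in sequence:
            xh = list(x) + h
            pre = {gate: [sum(w * v for w, v in zip(row, xh)) for row in rows]
                   for gate, rows in self.weights.items()}
            i = [sigmoid(v) for v in pre["input"]]
            f = [sigmoid(v) for v in pre["forget"]]
            o = [sigmoid(v) for v in pre["output"]]
            g = [math.tanh(v) for v in pre["cell"]]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h


def triple_vector(triple, concept_vec, relation_vec):
    """Concatenate [head ; relation ; tail] vectors for one (head, relation, tail) triple."""
    head, rel, tail = triple
    return concept_vec[head] + relation_vec[rel] + concept_vec[tail]


def latent_relation(paths, lstm, concept_vec, relation_vec):
    """R_ij: mean of the LSTM encodings of all paths between one concept pair."""
    encodings = [lstm([triple_vector(t, concept_vec, relation_vec) for t in path]) for path in paths]
    return [sum(dim) / len(encodings) for dim in zip(*encodings)]


# Hypothetical vectors: concept_vec would be GCN outputs, relation_vec trainable relation vectors.
concept_vec = {"river": [0.1, 0.2], "water": [0.3, -0.1], "waterfall": [0.5, 0.4]}
relation_vec = {"HasA": [0.2, 0.0], "RelatedTo": [0.0, 0.3], "AtLocation": [-0.1, 0.1]}
paths = [
    [("river", "HasA", "waterfall")],
    [("river", "RelatedTo", "water"), ("water", "AtLocation", "waterfall")],
]
lstm = TinyLSTM(input_dim=6, hidden_dim=3)
r_ij = latent_relation(paths, lstm, concept_vec, relation_vec)
```

Because the LSTM reads the triples in order, two paths with the same concepts but different relation sequences get different encodings, which a bag-of-edges summary would not distinguish.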

Next, a statement vector $s$ is extracted from a language encoder, which can be either a trainable sequence encoder such as an LSTM or a pre-trained universal language encoder such as GPT or BERT. This lets the model also use a representation of the whole sentence.

$R_{i,j}$ (a representation from the graph, i.e., symbolic space) is then concatenated with an additional vector $T_{i,j}$ (a representation from the language, i.e., semantic space). The role of $T_{i,j}$ is to encode latent relational information, but derived from the statement vector $s$ instead of the schema graph $g$. Simply put, the motivation is to combine the relational representations of a pair of question/answer concepts from both the schema graph side (symbolic space) and the language side (semantic space). Concretely, $T_{i,j}$ is computed by passing the statement vector together with the question and answer concept vectors through a multilayer perceptron:

$$T_{i,j} = \mathrm{MLP}([s;\, c_i^{(q)};\, c_j^{(a)}])$$

To obtain the final representation of the schema graph $g$, the authors average-pool the concatenated vectors $[R_{i,j};\, T_{i,j}]$ over all possible pairs of question and answer concepts:

$$g = \frac{\sum_{i,j} [R_{i,j};\, T_{i,j}]}{|C_q| \times |C_a|}$$

Finally, an MLP classifier head followed by a sigmoid activation function maps $g$ to a plausibility score, $\mathrm{score}(q, a) = \mathrm{sigmoid}(\mathrm{MLP}(g))$, which determines whether the candidate answer to the question is plausible.
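The steps from $T_{i,j}$ to the final score can be sketched as follows. This is an illustration under assumptions: `relation(i, j)` is a hypothetical stub standing in for the path encoder's $R_{i,j}$, and the 1-d vectors and hand-set weights are chosen only so the arithmetic is easy to follow.

```python
import math


def linear(w, b, x):
    """Affine map W x + b, with W stored as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]


def mlp(layers, x):
    """Apply a list of (W, b) layers with ReLU between them (no activation after the last)."""
    for idx, (w, b) in enumerate(layers):
        x = linear(w, b, x)
        if idx < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def graph_vector(s, q_vecs, a_vecs, relation, t_layers):
    """g: average of [R_ij ; T_ij] over all |C_q| x |C_a| concept pairs.

    relation(i, j) returns R_ij from the path encoder; T_ij = MLP([s ; c_i ; c_j]).
    """
    pooled, pairs = None, 0
    for i, c_q in enumerate(q_vecs):
        for j, c_a in enumerate(a_vecs):
            t_ij = mlp(t_layers, s + c_q + c_a)
            vec = relation(i, j) + t_ij
            pooled = vec if pooled is None else [p + v for p, v in zip(pooled, vec)]
            pairs += 1
    return [p / pairs for p in pooled]


def plausibility(g, head_layers):
    """score(q, a) = sigmoid(MLP(g)) for a one-output MLP head."""
    logit = mlp(head_layers, g)[0]
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical 1-d vectors and hand-set weights.
s = [1.0]
q_vecs = [[1.0], [0.0]]                  # two question concepts
a_vecs = [[2.0]]                         # one answer concept
t_layers = [([[1.0, 1.0, 1.0]], [0.0])]  # T_ij = s + c_i + c_j
g = graph_vector(s, q_vecs, a_vecs, relation=lambda i, j: [float(i)], t_layers=t_layers)
score = plausibility(g, head_layers=[([[1.0, -1.0]], [3.0])])
```

Here the two pair vectors are $[0, 4]$ and $[1, 3]$, so $g = [0.5, 3.5]$, and the hand-set head gives a logit of 0 and a score of 0.5.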

Hierarchical Attention Mechanism

So far, this approach has two drawbacks, both stemming from its two average-pooling operations.

The first, which constructs the latent relation $R_{i,j}$ for a question-answer concept pair, presumes that all paths are equally relevant. The second, which forms the final representation of the schema graph $g$, assumes that all question/answer concept pairs are equally important.

The hierarchical attention mechanism of the KagNet architecture addresses both issues by selectively aggregating the more important path vectors and question-answer concept pairs.

This core idea is similar to the work of Yang et al. (2016)[15], which proposes a document encoder that has two levels of attention mechanisms applied at the word-level and sentence-level. However, in this case, it is vital to extract the path-level and concept-pair-level attention for learning to contextually model graph representations. This is done by modelling the two-level importance as latent weights.

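For the path-level attention score (attending with $T_{i,j}$ from the semantic space), the network learns a parameter matrix $W_1$, and the importance of the $k$-th path is denoted as $\alpha_{(i,j,k)}$. These scores are normalized with a softmax over all paths of the pair to form $\hat{\alpha}_{(i,j,k)}$, which then weight the path representations during aggregation instead of simple average pooling. Mathematically:

$$\begin{aligned}
\alpha_{(i,j,k)} &= T_{i,j}\, W_1\, \mathrm{LSTM}(P_{i,j}[k]),\\
\hat{\alpha}_{(i,j,\cdot)} &= \mathrm{SoftMax}(\alpha_{(i,j,\cdot)}),\\
R(wAttn)_{i,j} &= \sum_k \hat{\alpha}_{(i,j,k)} \cdot \mathrm{LSTM}(P_{i,j}[k])
\end{aligned}$$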

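Similarly, the attention over concept pairs, $\beta_{(i,j)}$, is obtained by learning a parameter matrix $W_2$ and attending with the statement vector $s$. As above, the scores are normalized with a softmax over all concept pairs to form $\hat{\beta}_{(i,j)}$. The new final representation of the schema graph, $g(wAttn)$, applies these weights to the concatenated vectors $[R(wAttn)_{i,j};\, T_{i,j}]$ instead of simply average-pooling them:

$$\begin{aligned}
\beta_{(i,j)} &= s\, W_2\, T_{i,j},\\
\hat{\beta}_{(\cdot,\cdot)} &= \mathrm{SoftMax}(\beta_{(\cdot,\cdot)}),\\
g(wAttn) &= \sum_{i,j} \hat{\beta}_{(i,j)}\, [R(wAttn)_{i,j};\, T_{i,j}]
\end{aligned}$$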
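Both attention levels can be sketched in a few lines. This follows the bilinear scores $T_{i,j} W_1 \mathrm{LSTM}(P_{i,j}[k])$ and $s W_2 T_{i,j}$ given above; the 1-d vectors and weight values are hypothetical and chosen to show the two extremes.

```python
import math


def bilinear(u, w, v):
    """u^T W v for vectors u, v and a matrix W stored as a list of rows."""
    return sum(u[a] * w[a][b] * v[b] for a in range(len(u)) for b in range(len(v)))


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, vectors):
    """Sum of vectors scaled by their weights."""
    return [sum(w * vec[d] for w, vec in zip(weights, vectors)) for d in range(len(vectors[0]))]


def path_attention(t_ij, path_encodings, w1):
    """R(wAttn)_ij: alpha_k = T_ij W1 LSTM(P_ij[k]), softmax over k, weighted sum of encodings."""
    alpha = softmax([bilinear(t_ij, w1, p) for p in path_encodings])
    return weighted_sum(alpha, path_encodings), alpha


def pair_attention(s, pairs, w2):
    """g(wAttn): beta_ij = s W2 T_ij, softmax over all pairs, weighted sum of [R_ij ; T_ij].

    pairs maps (i, j) -> (R(wAttn)_ij, T_ij).
    """
    keys = list(pairs)
    beta = softmax([bilinear(s, w2, pairs[k][1]) for k in keys])
    return weighted_sum(beta, [pairs[k][0] + pairs[k][1] for k in keys]), dict(zip(keys, beta))


# Hypothetical 1-d vectors. A zero W1 gives uniform weights, i.e. plain average pooling.
r_uniform, _ = path_attention([1.0], [[0.0], [1.0]], w1=[[0.0]])
r_sharp, alpha = path_attention([1.0], [[0.0], [1.0]], w1=[[10.0]])
g, beta = pair_attention([1.0], {(0, 0): ([0.0], [1.0]), (1, 0): ([1.0], [0.0])}, w2=[[0.0]])
```

With zero weights the attention reduces to the average pooling it replaces, while a larger learned weight lets a single path dominate $R(wAttn)_{i,j}$.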

To sum up, KagNet is a graph neural network module with the GCN-LSTM-HPA architecture that models relational graphs for relational reasoning under the context of both knowledge symbolic space and language semantic space.

Experimental Details

The KagNet architecture is evaluated on the CommonsenseQA[4] dataset to measure how well the approach can encode schema graphs grounded in external knowledge. For comparison with the results reported on the dataset's leaderboard, the paper uses the official split (9,741/1,221/1,140), named (OFtrain/OFdev/OFtest). Note that performance on OFtest can only be measured by submitting predictions to the organizers. To efficiently test other baseline methods and run ablation studies, the authors randomly select 1,241 examples from the training data as in-house test data, forming an (8,500/1,221/1,241) split denoted as (IHtrain/IHdev/IHtest). All experiments use the random-split setting suggested by the CommonsenseQA authors, and three or more random states are tested on the development sets to pick the best-performing one.
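The in-house split arithmetic (9,741 - 1,241 = 8,500) can be checked with a small sketch. The sampling procedure, the seed, and the assumption that IHdev is simply the official dev set (both have 1,221 examples) are ours, not details confirmed by the paper.

```python
import random


def in_house_split(official_train, official_dev, n_test=1241, seed=0):
    """Carve an in-house test set (IHtest) out of the official training data.

    Returns (IHtrain, IHdev, IHtest); IHdev is assumed to be the official dev set.
    """
    rng = random.Random(seed)
    test_idx = set(rng.sample(range(len(official_train)), n_test))
    ih_train = [ex for i, ex in enumerate(official_train) if i not in test_idx]
    ih_test = [ex for i, ex in enumerate(official_train) if i in test_idx]
    return ih_train, list(official_dev), ih_test


# Stand-ins for the 9,741 OFtrain and 1,221 OFdev examples.
ih_train, ih_dev, ih_test = in_house_split(list(range(9741)), list(range(1221)))
```

Fixing the seed keeps the in-house split reproducible across the baseline and ablation runs.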

For a fair comparison, two different kinds of baseline methods are considered.

Baseline Methods

Knowledge-agnostic Methods

These methods either use no external resources or use only unstructured textual corpora as additional information, such as textual snippets gathered from a search engine or large pre-trained language models like BERT-Large. QABilinear, QACompare, and ESIM are three supervised learning models for natural language inference that can be equipped with different word embeddings, including GloVe and ELMo. BIDAF++ uses Google web snippets as context and is further augmented with a self-attention layer, using ELMo as input features. GPT/BERT-Large are fine-tuned with an additional linear layer for classification, as their original authors suggested. Both add a special token ‘[SEP]’ to the input and use the hidden state of the ‘[CLS]’ token as the input to the linear layer.

Knowledge-aware Methods

The KagNet paper also compares against other recently proposed methods that incorporate knowledge graphs for question answering. KV-Mem[16] incorporates triples retrieved from ConceptNet at the word level, using a key-value memory module to improve the representation of each token individually by learning an attentive aggregation of related triple vectors. CBPT[17] is a plug-in method that assembles the predictions of any model with a straightforward method that uses pre-trained concept embeddings from ConceptNet. TextGraphCat[18] concatenates the graph-based and text-based representations of the statement and then feeds them into a classifier. Finally, Rajani et al. (2019)[19] collect human explanations for commonsense reasoning from annotators as additional knowledge (CoS-E), and then train a language model on these annotations to improve model performance.

Performance Comparisons and Analysis

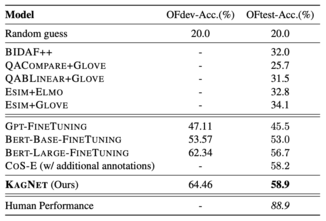

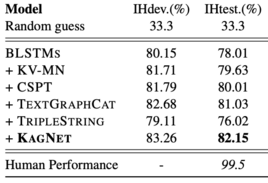

Comparison with standard Knowledge-agnostic baselines

As shown in Table 1, BERT- and GPT-based pre-training methods achieve much higher accuracy than the other baseline methods, demonstrating that language models can store commonsense knowledge implicitly. This presumption is also investigated by Trinh and Le (2019)[20]. The KagNet framework achieves an absolute improvement of 2.2% in accuracy on the test data, a state-of-the-art result at the time of publication.

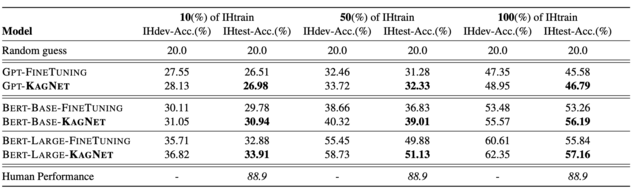

Another set of experiments compares universal language encoders (GPT and BERT-Base/Large) when trained on different fractions of the dataset (e.g., 10%, 50%, and 100% of the training data). Table 2 shows that KagNet-based methods using fixed pre-trained language encoders outperform fine-tuning the encoders themselves in all settings. In the low-data setting (10%), the improvement is relatively limited, which points to few-shot learning as an important direction for future research.

Comparison with knowledge-aware baselines

To compare KagNet with other baseline methods that also incorporate ConceptNet, the authors set up a model based on bidirectional LSTM networks. Table 3 shows that KagNet outperforms all knowledge-aware baseline methods by a large margin in terms of accuracy. Table 2 also compares KagNet with CoS-E (which uses additional annotations). Although CoS-E also achieves a better result than fine-tuning BERT alone by training with human-generated explanations, KagNet does not use any additional human effort to provide more supervision.

Ablation study on model components

To better understand the effectiveness of each component of the KagNet architecture, an ablation study is performed, as shown in Table 4. Replacing the GCN-LSTM-HPA architecture with traditional relational GCNs, which use separate weight matrices for different relation types, results in worse performance, which the authors attribute to over-parameterization. The two levels of attention matter almost equally, and pruning effectively filters out noisy paths.

Can KagNet aid in Interpretability?

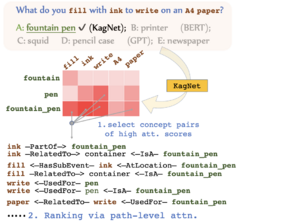

Another advantage of KagNet is that its inference process is interpretable. Analyzing the hierarchical attention scores on the question-answer concept pairs and on the paths between them helps explain the model's behaviour. Figure 4 shows an example analysis using both pair-level and path-level attention scores. The analysis first selects the concept pairs with the highest attention scores and then looks at the one or two top-ranked paths for each selected pair. Paths located this way are highly related to the inference process, and the example also shows that noisy concepts like ‘fountain’ are down-weighted during modelling.
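The two-step inspection described above can be sketched as a small ranking routine. The attention values, concept pairs, and path strings below are hypothetical, not taken from Figure 4; in practice they would come from $\hat{\beta}$ and $\hat{\alpha}$ of a trained model.

```python
def explain(pair_scores, path_scores, paths, top_pairs=2, top_paths=2):
    """Pick the concept pairs with the highest pair-level attention, then their top-ranked paths.

    pair_scores: {(question_concept, answer_concept): beta_hat}
    path_scores: {pair: [alpha_hat for each path of that pair]}
    paths:       {pair: [path description, ...]}, aligned with path_scores
    """
    ranked_pairs = sorted(pair_scores, key=pair_scores.get, reverse=True)[:top_pairs]
    explanation = []
    for pair in ranked_pairs:
        ranked_paths = sorted(zip(path_scores[pair], paths[pair]), key=lambda sp: sp[0], reverse=True)
        explanation.append((pair, [path for _, path in ranked_paths[:top_paths]]))
    return explanation


# Hypothetical attention weights; the noisy pair ("fountain", ...) gets the lowest weight.
pair_scores = {("river", "waterfall"): 0.5, ("cup", "waterfall"): 0.3, ("fountain", "waterfall"): 0.2}
path_scores = {
    ("river", "waterfall"): [0.2, 0.8],
    ("cup", "waterfall"): [1.0],
    ("fountain", "waterfall"): [1.0],
}
paths = {
    ("river", "waterfall"): ["river -HasA-> waterfall", "river -RelatedTo-> water -AtLocation-> waterfall"],
    ("cup", "waterfall"): ["cup -UsedFor-> water -AtLocation-> waterfall"],
    ("fountain", "waterfall"): ["fountain -RelatedTo-> waterfall"],
}
top = explain(pair_scores, path_scores, paths, top_pairs=2, top_paths=1)
```

The low-weighted `fountain` pair never reaches the explanation, which mirrors how noisy concepts drop out of the analysis.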

My thoughts on the contributions and approaches for future work

The "Semi-Supervised Classification with Graph Convolutional Networks" paper presents a novel approach to semi-supervised learning using GCNs. Its evaluation shows that GCNs are a promising method for semi-supervised classification, especially when only a small amount of labeled data is available. This has important implications for real-world applications where labeled data is hard to obtain, such as social network analysis and recommendation systems.

In recent years, graph convolutional networks have become a popular method for solving various graph-based problems, and this paper provides important insights into how GCNs can be used for semi-supervised classification. The authors' contributions have helped advance the state of the art in semi-supervised learning and graph-based analysis. The KagNet paper, on the other hand, chooses a novel application of GCNs: commonsense reasoning. To evaluate KagNet, the authors use a standard accuracy metric, the percentage of questions answered correctly. KagNet outperformed the other models in accuracy on both the official and the in-house splits of CommonsenseQA, demonstrating its effectiveness for commonsense reasoning.

The authors also conducted several ablation studies to understand how the different components of KagNet affect its performance. These studies showed that the knowledge-aware graph network architecture is critical to KagNet's performance and that incorporating external knowledge helps on this commonsense reasoning task.

Overall, the experiments in the "KagNet: Knowledge-Aware Graph Networks for Commonsense Reasoning" paper provide a comprehensive evaluation of KagNet on a commonsense reasoning task. The results show that KagNet is a highly effective model for commonsense reasoning that outperforms several state-of-the-art deep learning models, demonstrating the potential of graph networks for commonsense reasoning.

Through this approach and my experiments with the source code at https://github.com/INK-USC/KagNet, I came across three kinds of hard problems that KagNet does not handle well.

- Negative Reasoning: The grounding stage is not sensitive to negation words (for example, "not" and "but"), so the model can choose exactly the opposite answer.

- Comparative Reasoning: For questions with more than one highly plausible answer that explicitly involve comparisons (such as "largest" or "most"), a commonsense reasoner should benefit from explicitly examining the differences between the answer candidates, which KagNet's training method cannot do. A promising direction could be to use logical forms or executable semantic parsing.

- Subjective Reasoning: Many answers actually depend on the “personality” of the reasoner. For instance, “Traveling from new place to new place is likely to be what?” The dataset gives the answer as “exhilarating” instead of “exhausting”, which is more like a personalized subjective inference instead of common sense.

At the same time, I think it would be exciting to work towards learnable graph construction instead of relying on heuristic algorithms. I also think this approach can be extended to visual reasoning. If you are aware of any work in this direction, please mention it under the "Discussions" tab.

Conclusion

This page explains KagNet, a novel framework for knowledge-aware commonsense question answering. It also describes a novel graph network architecture for relational reasoning that uses graph convolutional networks for contextualized node representations, a path-based LSTM for path representation, and hierarchical path attention for finding relevant reasoning paths. Though the second paper provides a strong foundation for the theoretical concepts behind graph convolutions, it does not address relational reasoning, which is the motivation behind the first paper. In summary, the first paper explores a different application of GCNs, representing graphs effectively for relational reasoning in a transparent, interpretable way, and yields new state-of-the-art results on a large-scale general dataset for testing machine commonsense.

This page also discusses future directions for this work, including better question-parsing methods to handle negation and comparative question answering, and incorporating knowledge into visual reasoning.

References

- [1] Zellers, R., Bisk, Y., Schwartz, R., & Choi, Y. (2018). SWAG: A large-scale adversarial dataset for grounded commonsense inference. arXiv preprint arXiv:1808.05326.

- [2] Sap, M., Rashkin, H., Chen, D., LeBras, R., & Choi, Y. (2019). Social IQa: Commonsense reasoning about social interactions. arXiv preprint arXiv:1904.09728.

- [3] Zellers, R., Bisk, Y., Farhadi, A., & Choi, Y. (2019). From recognition to cognition: Visual commonsense reasoning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 6720-6731).

- [4] Talmor, A., Herzig, J., Lourie, N., & Berant, J. (2018). CommonsenseQA: A question answering challenge targeting commonsense knowledge. arXiv preprint arXiv:1811.00937.

- [5] Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). Improving language understanding by generative pre-training.

- [6] Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- [7] Speer, R., Chin, J., & Havasi, C. (2017, February). ConceptNet 5.5: An open multilingual graph of general knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 31, No. 1).

- [8] Tandon, N., De Melo, G., & Weikum, G. (2017, July). WebChild 2.0: Fine-grained commonsense knowledge distillation. In Proceedings of ACL 2017, System Demonstrations (pp. 115-120).

- [9] Sap, M., Le Bras, R., Allaway, E., Bhagavatula, C., Lourie, N., Rashkin, H., ... & Choi, Y. (2019, July). ATOMIC: An atlas of machine commonsense for if-then reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 33, No. 01, pp. 3027-3035).

- [10] Battaglia, P. W., Hamrick, J. B., Bapst, V., Sanchez-Gonzalez, A., Zambaldi, V., Malinowski, M., ... & Pascanu, R. (2018). Relational inductive biases, deep learning, and graph networks. arXiv preprint arXiv:1806.01261.

- [11] Li, X., Taheri, A., Tu, L., & Gimpel, K. (2016, August). Commonsense knowledge base completion. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 1445-1455).

- [12] Xu, F. F., Lin, B. Y., & Zhu, K. Q. (2017). Automatic extraction of commonsense LocatedNear knowledge. arXiv preprint arXiv:1711.04204.

- [13] Speer, R., Chin, J., & Havasi, C. (2017, February). ConceptNet 5.5: An open multilingual graph of general knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 31, No. 1).

- [14] Wang, Z., Zhang, J., Feng, J., & Chen, Z. (2014, June). Knowledge graph embedding by translating on hyperplanes. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 28, No. 1).

- [15] Yang, Z., Yang, D., Dyer, C., He, X., Smola, A., & Hovy, E. (2016, June). Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (pp. 1480-1489).

- [16] Mihaylov, T., & Frank, A. (2018). Knowledgeable reader: Enhancing cloze-style reading comprehension with external commonsense knowledge. arXiv preprint arXiv:1805.07858.

- [17] Zhong, W., Tang, D., Duan, N., Zhou, M., Wang, J., & Yin, J. (2019). Improving question answering by commonsense-based pre-training. In Natural Language Processing and Chinese Computing: 8th CCF International Conference, NLPCC 2019, Dunhuang, China, October 9-14, 2019, Proceedings, Part I (pp. 16-28). Springer International Publishing.

- [18] Wang, X., Kapanipathi, P., Musa, R., Yu, M., Talamadupula, K., Abdelaziz, I., ... & Witbrock, M. (2019, July). Improving natural language inference using external knowledge in the science questions domain. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 33, No. 01, pp. 7208-7215).

- [19] Rajani, N. F., McCann, B., Xiong, C., & Socher, R. (2019). Explain yourself! Leveraging language models for commonsense reasoning. arXiv preprint arXiv:1906.02361.

- [20] Trinh, T. H., & Le, Q. V. (2019). Do language models have common sense?
