Documentation:EML Electrocardiogram (ECG) Project

Introduction

Background

Electrocardiography is the process of producing an electrocardiogram (ECG), a recording of the heart's electrical signals. It is a painless, non-invasive way to help diagnose many common heart problems in people of all ages. An ECG can be used to:

- detect abnormal heart rhythms (arrhythmias) and coronary artery disease;
- determine whether a patient has had a previous heart attack;
- check how well certain heart disease treatments, such as a pacemaker, are working.

The signals are displayed as a graph of voltage versus time, and one screen shows only about a minute of data. For diagnosis, however, cardiologists need anywhere from 30 minutes to 8 hours of data. They must therefore go through many ECG graphs and piece them together, which is a cumbersome process.

Objective

The ECG project aims to provide a data-driven ECG diagnostic tool that helps cardiologists and their patients review and evaluate ECG data more effectively. The tool displays 30 minutes to 8 hours of ECG data on a single screen in a 3D Virtual Reality (VR) environment. It is built with the Unity engine and can be navigated with VR controllers. The tool also incorporates Artificial Intelligence to reduce the time needed to analyze ECG data.

Format

The tool targets desktop and VR as its primary platforms to maximize ease of use and accessibility. A VR version for the Oculus Quest provides a more immersive experience.

Generative Adversarial Network (GAN)

Because our project will be used for medical purposes, it must achieve high accuracy. Reaching that accuracy requires training our machine learning model on thousands of real-world datasets. We currently have only 31 datasets, which is not enough, so we use a Generative Adversarial Network (GAN) to generate additional training data. A GAN is a machine learning framework that learns to generate realistic synthetic ("fake") data from real-world examples. The framework was introduced by Ian Goodfellow and his colleagues in 2014. It consists of two neural networks, a generator and a discriminator, that compete in a game. The generator tries to convince the discriminator that its generated data is real, and the discriminator tries to tell generated data apart from real data.
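For reference, Goodfellow et al. (2014) formalize this game as the minimax problem below. The symbols are defined as follows:

- $G$ maps random noise $z \sim p_z$ to generated samples.
- $D(x)$ is the discriminator's estimated probability that $x$ is real.
- $p_g$ is the distribution of the generator's outputs.

For a fixed generator, the best possible discriminator is $D^*$. Once the generated data matches the real data ($p_g = p_{\text{data}}$), the discriminator can do no better than output 1/2 for every sample.

```latex
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}, \qquad p_g = p_{\text{data}} \;\Rightarrow\; D^*(x) = \tfrac{1}{2}
```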

Working of GAN model

A GAN has two components: a generator and a discriminator. The generator produces data that resembles the real-world data, whereas the discriminator decides whether a given sample is real-world data or fake (generated) data.

Training alternates between the two networks. The generator takes random noise as input and produces synthetic data, which is passed to the discriminator together with samples from the real-world dataset. The discriminator is trained to label the real samples as real and the generated samples as fake. In a standard GAN the generator never sees the real data directly. Instead, it is updated using the discriminator's feedback so that its next outputs are more likely to be classified as real. Each time the generator improves, the discriminator learns to recognize the new fakes, which in turn pushes the generator to produce more realistic data. Training continues until the discriminator can no longer reliably tell the two apart. At that point it classifies generated data as real about half the time, which is no better than a coin flip.
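The alternating updates above are driven by two binary cross-entropy losses. The plain-Python sketch below computes them from the discriminator's output probabilities. It uses the "non-saturating" generator loss that Goodfellow et al. suggest in practice. The function names are illustrative and not taken from our GAN code. In a TensorFlow implementation, the same quantities would be computed on batches and differentiated automatically to update each network.

```python
import math

EPS = 1e-12  # keeps log() finite when the discriminator outputs exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator outputs D(x) on real ECG samples (probabilities in [0, 1])
    d_fake: discriminator outputs D(G(z)) on generated samples
    The discriminator is rewarded for D(x) -> 1 on real data and D(G(z)) -> 0 on fakes.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator is rewarded for D(G(z)) -> 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Discriminator at chance level (cannot tell real from generated): D = 0.5 everywhere
print(f"{discriminator_loss([0.5, 0.5], [0.5, 0.5]):.3f}")  # 1.386  (= 2 ln 2)
print(f"{generator_loss([0.5, 0.5]):.3f}")                  # 0.693  (= ln 2)
```

At the stopping point described above, the discriminator outputs about 0.5 for every sample, so the losses settle near 2 ln 2 and ln 2. A discriminator loss well below 2 ln 2 means the discriminator can still separate real from generated data.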

Code for GAN model

Code for our GAN-Model can be found here

Amazon Web Services (AWS)

We use Amazon Web Services (AWS) for machine learning training. AWS is a cloud computing platform that provides compute and storage on demand. Compared with a traditional local computer, AWS offers much more compute power and storage capacity.

- AWS SageMaker: provides one-click Jupyter notebooks (AWS SageMaker Studio Notebooks) for writing and training machine learning code. Notebook instance types range from 2 to 64 vCPUs and 4 to 488 GiB of memory, priced from $0.056 to $30.967 per hour. Make sure Canada (Central) is selected as the region. You are charged based on the instance type you choose and how long it runs. Details of the notebook instance types that AWS SageMaker offers are listed at https://aws.amazon.com/sagemaker/pricing/

- AWS S3 bucket: stores our confidential dataset. AWS SageMaker connects to the bucket to access the stored data.
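Below is a minimal sketch of reading a dataset from the S3 bucket inside a SageMaker notebook, using boto3's `get_object`. It makes a few assumptions:

- The bucket name, object key, and CSV layout are hypothetical placeholders.
- The notebook instance's IAM role must be allowed to read the bucket.
- The code avoids newer syntax because the `conda_tensorflow2_p36` kernel runs Python 3.6.

Passing `s3_client` in explicitly makes the function testable without AWS access.

```python
import csv
import io
from typing import List


def load_ecg_csv(bucket: str, key: str, s3_client=None) -> List[List[float]]:
    """Read a CSV object from S3 and return its rows as lists of floats.

    Hypothetical format: each ECG file is a headerless CSV of numeric samples.
    Adjust the parsing to match the real dataset layout.
    """
    if s3_client is None:
        import boto3  # normally preinstalled on SageMaker notebook instances
        s3_client = boto3.client("s3")
    response = s3_client.get_object(Bucket=bucket, Key=key)
    text = response["Body"].read().decode("utf-8")
    # Skip blank lines; convert every remaining cell to float
    return [[float(value) for value in row]
            for row in csv.reader(io.StringIO(text)) if row]


# Example (hypothetical bucket and key):
# rows = load_ecg_csv("my-ecg-bucket", "raw/recording01.csv")
```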

Initial Setup

To set up a Jupyter notebook on AWS SageMaker, follow the steps below and make sure the region is set to Canada (Central).

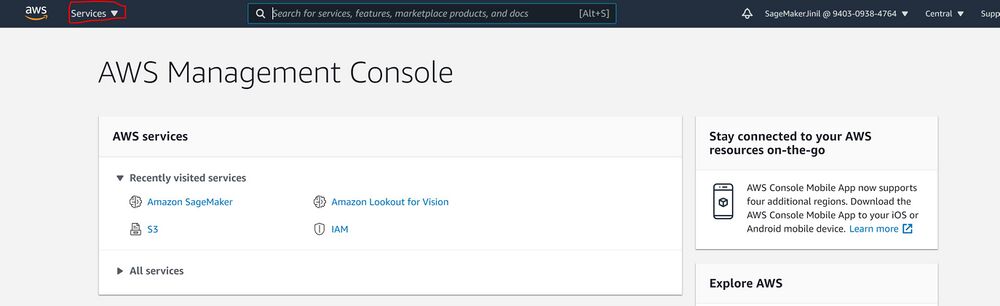

1. Log in to the AWS console using the provided credentials.
2. Once you are logged in, click "Services" in the top-left corner of the screen.

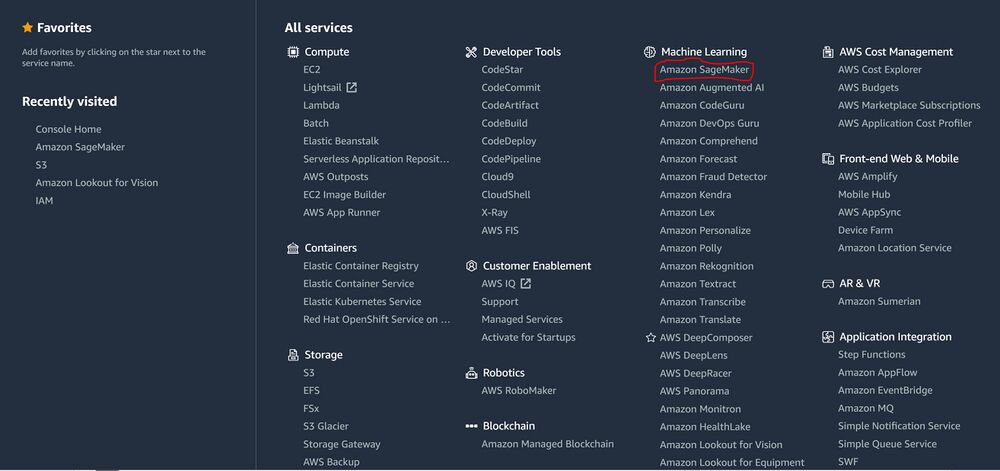

3. Find the "AWS Sagemaker" service in the Machine Learning subsection

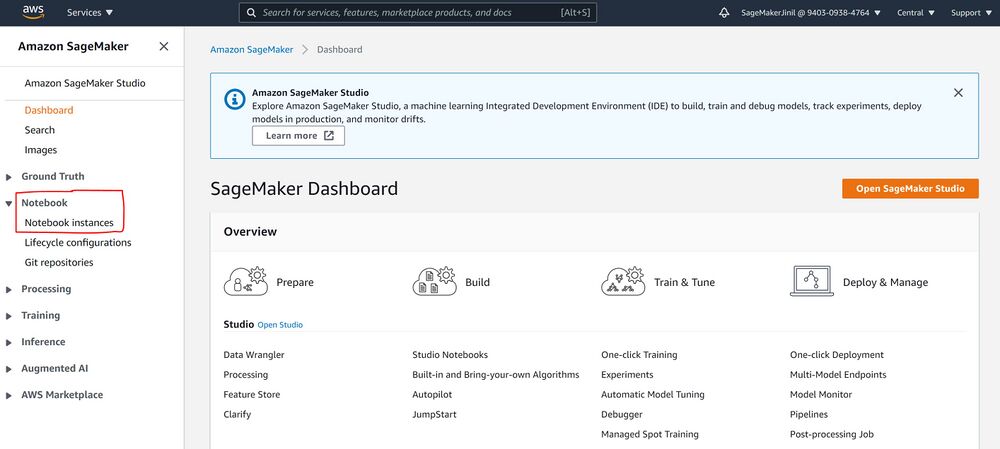

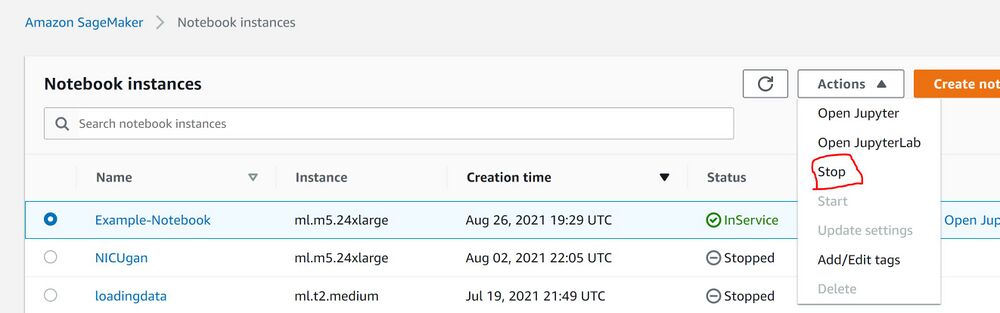

4. Click "Notebook" in the menu on the left side of the screen and select "Notebook instances".

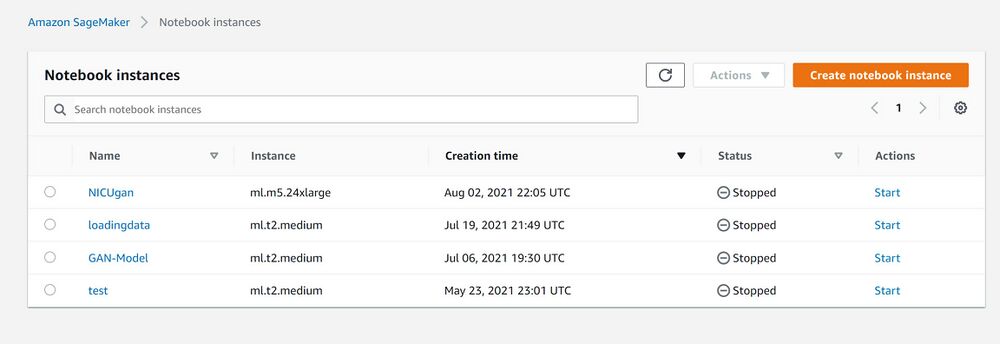

5. Select "Create notebook instance" and make sure the region is Canada (Central).

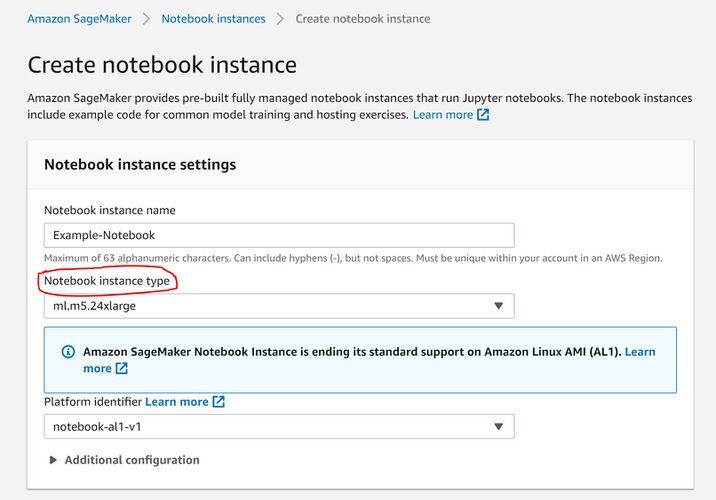

6. Name your notebook and select the notebook instance type you need. Leave all other settings at their defaults and click "Create notebook instance" at the bottom of the page.

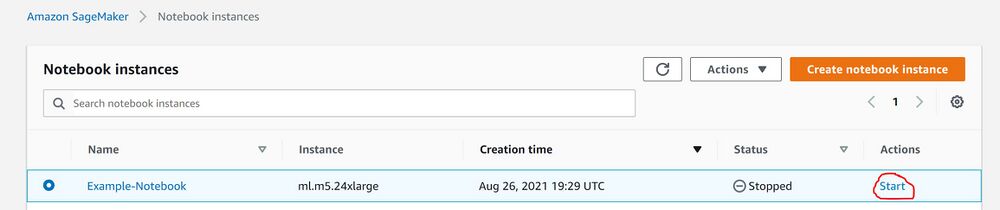

7. Once the notebook instance has been created, select it and click "Start".

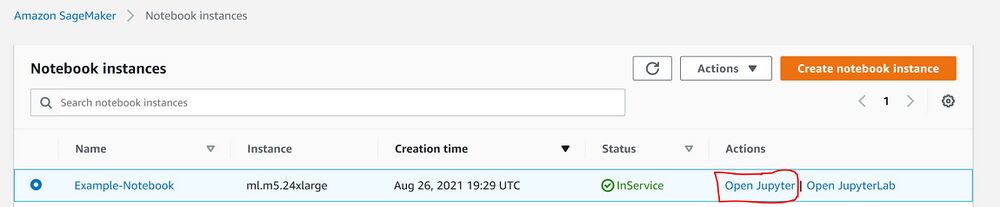

8. Click on "Open Jupyter"

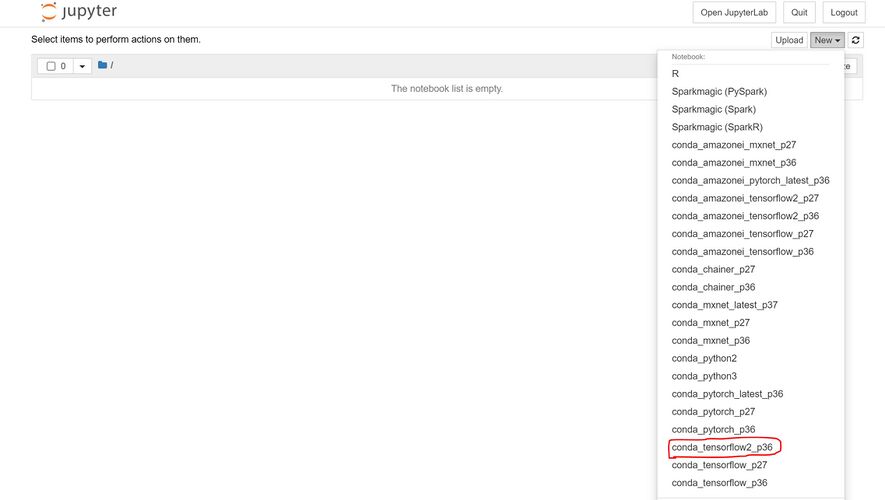

9. Click "New" to open the drop-down list, then select the conda_tensorflow2_p36 kernel and start coding.

10. When you are done coding, return to the notebook instances page, select the running ("InService") notebook, and choose "Stop" from the Actions list.
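Steps 7 and 10 can also be scripted, which makes it harder to forget a running instance. Below is a minimal sketch using the boto3 SageMaker client. It makes the following assumptions:

- The machine running it has AWS access keys (or another configured credential source) with SageMaker permissions; console login credentials alone are not enough.
- The instance name is hypothetical.
- `ca-central-1` is the API name of the Canada (Central) region.

```python
def set_notebook_running(name: str, running: bool, sm_client=None) -> str:
    """Start or stop a SageMaker notebook instance.

    Returns "started", "stopped", or "unchanged" (e.g. the instance is
    already in the requested state or is still Pending/Stopping).
    """
    if sm_client is None:
        import boto3
        sm_client = boto3.client("sagemaker", region_name="ca-central-1")
    status = sm_client.describe_notebook_instance(
        NotebookInstanceName=name)["NotebookInstanceStatus"]
    if running and status == "Stopped":
        sm_client.start_notebook_instance(NotebookInstanceName=name)
        return "started"
    if not running and status == "InService":
        # Stopping ends the per-hour instance charge
        sm_client.stop_notebook_instance(NotebookInstanceName=name)
        return "stopped"
    return "unchanged"


# Example (hypothetical instance name):
# set_notebook_running("ecg-gan-notebook", running=False)
```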

Important Links

Development Team

- George Gu

- Cerron Dante

- Jinil Patel