Course:CPSC532:StaRAI2020:Convolutional2DKnowledgeGraphEmbeddings

Convolutional 2D Knowledge Graph Embeddings

This page summarizes the paper [1], in which the authors introduce a multi-layer convolutional network model for link prediction in knowledge graphs.

Authors: Melika Farahani

Related Pages

The CPSC 532P page on Knowledge Graphs.

Abstract

Most previous work on link prediction has focused on shallow, fast models that can scale to large knowledge graphs. However, these models learn less expressive features than deep, multi-layer models, which potentially limits performance. In this work, the authors introduce ConvE, a multi-layer convolutional network model for link prediction, and report state-of-the-art results on several established datasets.

Content

Introduction

Knowledge graphs are graph-structured knowledge bases in which facts are represented as relationships (edges) between entities (nodes). Real-world knowledge graphs are typically incomplete, and identifying their missing links is referred to as link prediction. Most previous methods for link prediction embed the entities and relationships of multi-relational data in low-dimensional vector spaces. One of the earliest and best-known methods is TransE, introduced in "Translating Embeddings for Modeling Multi-relational Data" [2]. However, previous link prediction models are often composed of simple operations, such as inner products and matrix multiplications over an embedding space, and use a limited number of parameters. The only way to increase the number of features in such shallow models is to increase the embedding size. Doing so does not scale to larger knowledge graphs, since the total number of embedding parameters is proportional to the number of entities and relations in the graph. To address this issue, the authors introduce ConvE, a model that uses 2D convolutions over embeddings to predict missing links in knowledge graphs. They describe ConvE as the simplest multi-layer convolutional architecture for link prediction: it is defined by a single convolution layer, a projection layer to the embedding dimension, and an inner product layer.
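The scaling argument can be made concrete with a little arithmetic. The sketch below assumes a shallow model that stores exactly one vector per entity and one per relation; the function name and the graph sizes are hypothetical and chosen only for illustration.

```python
def embedding_parameter_count(num_entities: int, num_relations: int, dim: int) -> int:
    """Parameters needed to store one dim-sized vector per entity and per relation."""
    return (num_entities + num_relations) * dim


# Hypothetical graph sizes (not taken from the paper).
small = embedding_parameter_count(num_entities=10_000, num_relations=100, dim=200)
large = embedding_parameter_count(num_entities=10_000_000, num_relations=1_000, dim=200)

print(small)  # 2020000
print(large)  # 2000200000
```

Doubling the embedding size doubles both numbers, which is why growing the embedding is an expensive way to add features on a large graph.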

Translating Embeddings for Modeling Multi-relational Data

TransE is an energy-based model for learning low-dimensional embeddings of entities. In TransE, relationships are represented as translations in the embedding space: if (h, l, t) indicates that a relationship with label l holds between the head entity h and the tail entity t, then the embedding of t should be close to the embedding of h plus a vector that depends on l. The approach relies on a reduced set of parameters, as it learns only one low-dimensional vector for each entity and each relationship. This embedding was one of the first powerful approaches to the link prediction problem. Table 3 of the TransE paper [2] shows that it outperforms the earlier methods it is compared against on link prediction. Although TransE has also been applied to other knowledge graph problems, this page focuses on link prediction, since that is the problem ConvE addresses.
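The translation idea can be illustrated with a minimal sketch. It assumes the L2 norm as the dissimilarity measure (the TransE paper allows L1 or L2); the two-dimensional embeddings and entity names are invented for illustration and are not trained values.

```python
import math


def transe_energy(head, label, tail):
    """L2 distance between (head + label) and tail; lower means more plausible."""
    return math.sqrt(sum((h + l - t) ** 2 for h, l, t in zip(head, label, tail)))


# Toy 2-dimensional embeddings, invented for illustration.
entities = {
    "paris": [1.0, 0.0],
    "france": [1.0, 1.0],
    "japan": [4.0, 3.0],
}
capital_of = [0.0, 1.0]


def rank_tails(head_name, label):
    """Rank every entity as a candidate tail, most plausible first."""
    head = entities[head_name]
    return sorted(entities, key=lambda name: transe_energy(head, label, entities[name]))


print(rank_tails("paris", capital_of))  # ['france', 'paris', 'japan']
```

Link prediction then amounts to ranking all candidate tails (or heads) by this energy and checking where the true entity lands.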

Convolutional 2D Knowledge Graph Embeddings

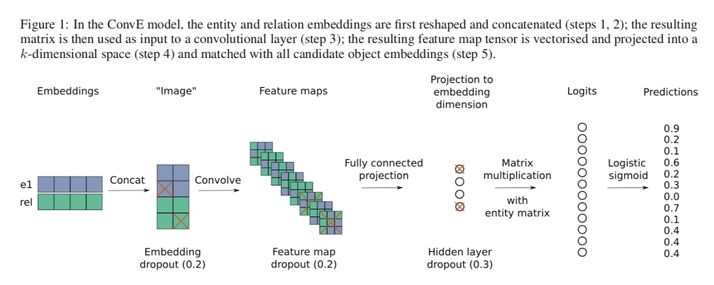

ConvE is a link prediction model in which the interactions between input entities and relationships are modeled by convolutional and fully-connected layers. The main characteristic of the model is that the score is defined by a convolution over 2D-shaped embeddings. The architecture is summarized in the figure above, which is the first figure of the ConvE paper [1].
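As a rough illustration of this pipeline, the sketch below follows the scoring function given in the ConvE paper, f(vec(f([e_s; r_r] * w)) W) e_o followed by a sigmoid, with ReLU as f. It is a simplified sketch, not the authors' implementation: dropout, batch normalisation and bias terms are omitted, the weights are random rather than trained, and the tiny sizes (2x3 embeddings, two 2x2 filters) and all function names are assumptions made for readability.

```python
import math
import random


def relu(x):
    return max(0.0, x)


def reshape_2d(vec, height, width):
    """Reshape a flat vector of length height * width into a list of rows."""
    return [vec[r * width:(r + 1) * width] for r in range(height)]


def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation with no padding and stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def conve_score(e_s, r_r, e_o, filters, W, height, width):
    """Probability-like score for the triple (subject, relation, object)."""
    # 1. Reshape subject and relation embeddings to 2D and stack them row-wise.
    stacked = reshape_2d(e_s, height, width) + reshape_2d(r_r, height, width)
    # 2. Convolve with each filter, apply ReLU, and flatten all feature maps.
    features = []
    for kernel in filters:
        for row in conv2d_valid(stacked, kernel):
            features.extend(relu(v) for v in row)
    # 3. Project the features to the embedding dimension with W, then ReLU.
    k = len(e_o)
    hidden = [relu(sum(f * W[i][j] for i, f in enumerate(features))) for j in range(k)]
    # 4. Inner product with the object embedding, then a logistic sigmoid.
    logit = sum(h * o for h, o in zip(hidden, e_o))
    return 1.0 / (1.0 + math.exp(-logit))


rng = random.Random(0)


def rand_vec(n):
    return [rng.uniform(-0.5, 0.5) for _ in range(n)]


height, width = 2, 3
k = height * width                      # embedding dimension
e_s, r_r, e_o = rand_vec(k), rand_vec(k), rand_vec(k)
filters = [reshape_2d(rand_vec(4), 2, 2) for _ in range(2)]
n_features = len(filters) * (2 * height - 1) * (width - 1)
W = [rand_vec(k) for _ in range(n_features)]

score = conve_score(e_s, r_r, e_o, filters, W, height, width)
print(score)
```

Because the subject and relation embeddings are stacked as 2D grids, each filter position covers entries from both embeddings near the boundary between them, which is where the interaction features come from.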

When evaluating link prediction models such as TransE, the batch size is usually increased to speed up evaluation. However, this is not feasible for convolutional models, since their memory requirements quickly outgrow GPU memory capacity as the batch size increases. ConvE instead relies on 1-N scoring, in which a (subject, relation) pair is scored against all candidate entities at once. The authors report that ConvE achieves state-of-the-art performance on all metrics for most of the evaluated knowledge graphs. For more details, see Tables 3 and 4 of the ConvE paper [1].

The incremental contribution

Compared with previous work such as TransE, ConvE offers the following properties and improvements:

- It uses fewer parameters.

- It is fast through 1-N scoring.

- It is expressive through multiple layers of non-linear features.

- It is robust to overfitting due to batch normalisation and dropout.

- It achieves state-of-the-art results on several datasets, while still scaling to large knowledge graphs.
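The 1-N scoring mentioned in the list above can be sketched as follows. The idea is that the expensive convolution and projection run once per (subject, relation) pair, and the resulting vector is then compared with every entity embedding in a single pass. In this minimal sketch the `hidden` vector is a hand-written placeholder for that output, and the entity matrix is a toy example; both are assumptions for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def score_one_to_n(hidden, entity_matrix):
    """Score one (subject, relation) feature vector against every candidate object."""
    return [sigmoid(sum(h * e for h, e in zip(hidden, row))) for row in entity_matrix]


# Placeholder for the output of the convolution and projection layers.
hidden = [0.5, -1.0, 2.0]

# One row per entity in the graph (toy values).
entity_matrix = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0],
]

scores = score_one_to_n(hidden, entity_matrix)
best = max(range(len(scores)), key=scores.__getitem__)
print(best)  # 2
```

With 1-1 scoring, the same feature vector would have to be recomputed for each of the four candidate triples; here it is computed once and reused.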

Annotated Bibliography

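[1] T. Dettmers, P. Minervini, P. Stenetorp, S. Riedel, "Convolutional 2D Knowledge Graph Embeddings", AAAI 2018.

[2] A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, O. Yakhnenko, "Translating Embeddings for Modeling Multi-relational Data", NIPS 2013.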

