Course:CPSC532:StaRAI2020:BlackboxVariationalInference

Variational Inference

One of the most widely used methods for approximate posterior estimation is variational inference[1]. Variational inference tries to find the member of a family of simple probability distributions (called "proposal" or "proxy" distributions) that is closest (in KL divergence) to the true posterior distribution.

Authors: Mohamad Amin Mohamadi

Abstract

While variational inference is a promising approach to finding posteriors in inference problems, it offers no closed-form solution for generic models and arbitrary variational families: the expectations required by the objective are often intractable. In these settings, practitioners have resorted to deriving model-specific algorithms, which is tedious work.

The two papers presented in this article aim to provide a "black-box", automatic form of variational inference, in which the practitioner does not need to solve the KL objective by hand to derive the approximate posterior.

Introduction

Variational Inference

Variational inference maximizes the evidence lower bound (ELBO):

$$\mathcal{L}(\lambda) = \mathbb{E}_{q(z \mid \lambda)}\left[\log p(x, z) - \log q(z \mid \lambda)\right]$$

Following the equation above, the goal is to find a distribution $q(z \mid \lambda)$ that is as close as possible to the true posterior distribution of the inference problem, $p(z \mid x)$. Solving this problem by working directly with the KL divergence between the two distributions is often intractable. Practitioners have used model-specific algorithms to solve it for particular families of models, but this approach is limited and cumbersome to use on real-world problems. In this article, we investigate two methods that try to solve the variational inference objective using "blackbox"-style approaches.
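To see why maximizing the evidence lower bound brings the proposal closer to the posterior, it can help to write out the standard decomposition of the KL divergence. The notation is an assumption chosen for illustration: $q(z \mid \lambda)$ is the proposal with parameters $\lambda$, $p(z \mid x)$ is the true posterior, and $\mathcal{L}(\lambda) = \mathbb{E}_{q(z \mid \lambda)}[\log p(x, z) - \log q(z \mid \lambda)]$ is the evidence lower bound.

$$
\begin{aligned}
\mathrm{KL}\left(q(z \mid \lambda) \,\|\, p(z \mid x)\right)
  &= \mathbb{E}_{q(z \mid \lambda)}\left[\log q(z \mid \lambda) - \log p(z \mid x)\right] \\
  &= \mathbb{E}_{q(z \mid \lambda)}\left[\log q(z \mid \lambda) - \log p(x, z)\right] + \log p(x) \\
  &= \log p(x) - \mathcal{L}(\lambda)
\end{aligned}
$$

Since $\log p(x)$ does not depend on $\lambda$, maximizing $\mathcal{L}(\lambda)$ is equivalent to minimizing the KL divergence, and because the KL divergence is non-negative, $\mathcal{L}(\lambda) \le \log p(x)$.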

Blackbox Variational Inference

"Blackbox Variational Inference"[1] claims to rewrite the gradient of that objective (minimizing the KL divergence between the proposal distribution and the true posterior) as the expectation of an easy-to-implement function of the latent and observed variables, where the expectation is taken with respect to the variational distribution; and then, they optimize that objective by sampling from the variational distribution, evaluating the function , and forming the corresponding Monte Carlo estimate of the gradient. Using these stochastic gradients in a stochastic optimization algorithm, they optimize the variational parameters and output the final proposal distribution which is supposed to be approximately the true posterior.

From the practitioner’s perspective, this method requires only that he or she write functions to evaluate the model log-likelihood. The remaining calculations (sampling from the variational distribution and evaluating the Monte Carlo estimate) are easily put into a library to be shared across models, which means the method can be quickly applied to new modeling settings.

Naively plugging these gradients into an optimization algorithm can produce very unstable updates, because the Monte Carlo gradient estimates have high variance, especially when the starting parameters of the proposal distribution (or even its family) are far from a good fit. The paper therefore proposes two strategies, Rao-Blackwellization and control variates, to reduce the variance of the gradient estimates and increase the convergence rate of the algorithm.
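One common way to reduce the variance of such gradient estimates is a control variate: subtract a scaled quantity with known zero mean (here the score of the proposal) from each sample. The sketch below is only an illustration under toy assumptions that are not from the paper: a prior z ~ N(0, 1), a single hypothetical observation `x_obs` with a unit-variance Gaussian likelihood, and a proposal fixed at N(0, 1). The function name `control_variate_demo` is made up for this example.

```python
import math
import random


def variance(values):
    """Population variance of a list of floats."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def control_variate_demo(num_samples=5000, seed=0, x_obs=2.0):
    """Estimate d(ELBO)/d(mu) at mu=0, sigma=1 with and without a control variate.

    Toy assumptions: z ~ N(0, 1) prior, x_obs | z ~ N(z, 1), proposal q = N(0, 1).
    Returns (mean_plain, var_plain, mean_cv, var_cv) over per-sample terms.
    """
    rng = random.Random(seed)
    log_2pi = math.log(2.0 * math.pi)
    f_vals, h_vals = [], []
    for _ in range(num_samples):
        z = rng.gauss(0.0, 1.0)  # sample from the proposal q
        log_p = -0.5 * z * z - 0.5 * (x_obs - z) ** 2 - log_2pi  # log p(x, z)
        log_q = -0.5 * z * z - 0.5 * log_2pi                     # log q(z)
        h = z  # score d/d(mu) log q(z) at mu=0, sigma=1; it has zero mean under q
        f_vals.append(h * (log_p - log_q))
        h_vals.append(h)

    # Scaling that minimizes the empirical variance of f - a * h.
    f_bar = sum(f_vals) / num_samples
    h_bar = sum(h_vals) / num_samples
    cov = sum((f - f_bar) * (h - h_bar) for f, h in zip(f_vals, h_vals)) / num_samples
    a = cov / variance(h_vals)

    cv_vals = [f - a * h for f, h in zip(f_vals, h_vals)]
    return f_bar, variance(f_vals), sum(cv_vals) / num_samples, variance(cv_vals)
```

Calling `control_variate_demo()` returns the plain and control-variate estimates together with their per-sample variances. In this toy setting the exact gradient is 2, both estimates should land near it, and the control-variate terms have the smaller variance.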

Auto-Encoding Variational Bayes[2]

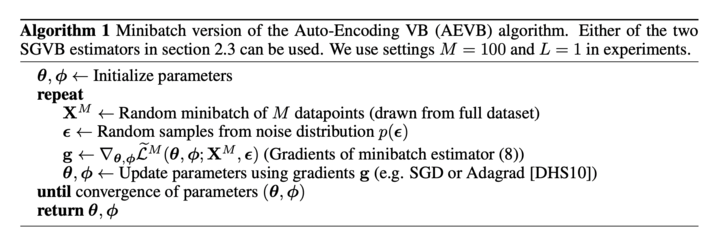

The variational Bayesian (VB) approach involves the optimization of an approximation to the intractable posterior. In this work, the authors use a reparameterization of the variational lower bound that yields a simple differentiable unbiased estimator of the lower bound; and then use this SGVB (Stochastic Gradient Variational Bayes) estimator for efficient approximate posterior inference in almost any model with continuous latent variables and/or parameters.

When the dataset is i.i.d. and there are continuous latent variables per datapoint, they propose the AEVB algorithm[2]. In AEVB, they use the SGVB estimator to optimize a recognition model that allows them to perform very efficient approximate posterior inference using simple ancestral sampling, which in turn lets them learn the model parameters efficiently, without the need for expensive iterative inference schemes (such as MCMC) per datapoint. This greatly improves running speed in comparison to methods that rely on MCMC sampling, such as MH within Gibbs.
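The reparameterization idea behind the SGVB estimator can be sketched on a toy problem: a sample is written as z = mu + sigma * eps with eps ~ N(0, 1), so the gradient with respect to the proposal parameters flows through z. The sketch below is not the paper's implementation. It assumes a hypothetical model z ~ N(0, 1), x_i | z ~ N(z, 1), a Gaussian proposal, and a hand-coded derivative `dlogp_dz` in place of the automatic differentiation and neural-network recognition model that AEVB uses.

```python
import math
import random


def dlogp_dz(z, data):
    """d/dz log p(x, z) for the toy model z ~ N(0, 1), x_i | z ~ N(z, 1)."""
    return -z + sum(x - z for x in data)


def reparam_gradient(mu, log_sigma, data, num_samples=2000, seed=0):
    """Monte Carlo estimate of the ELBO gradient w.r.t. (mu, log_sigma)."""
    rng = random.Random(seed)
    sigma = math.exp(log_sigma)
    grad_mu = 0.0
    grad_log_sigma = 0.0
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)   # noise that does not depend on the parameters
        z = mu + sigma * eps        # reparameterized sample from q
        dz = dlogp_dz(z, data)
        grad_mu += dz / num_samples                        # dz/d(mu) = 1
        grad_log_sigma += dz * sigma * eps / num_samples   # dz/d(log_sigma) = sigma * eps
    # The Gaussian entropy is log_sigma + const, so its gradient is 0 for mu
    # and 1 for log_sigma.
    return grad_mu, grad_log_sigma + 1.0
```

For example, `reparam_gradient(0.0, 0.0, [2.0])` should return values close to the exact gradients for this toy model, which are 2 for `mu` and -1 for `log_sigma`.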

Algorithms

Blackbox Variational Inference

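In Blackbox Variational Inference, our goal is to learn a (factorized) approximation to the posterior by maximizing the evidence lower bound:

$$\mathcal{L}(\lambda) = \mathbb{E}_{q(z \mid \lambda)}\left[\log p(x, z) - \log q(z \mid \lambda)\right]$$

Towards this goal, we calculate the gradient of this objective and estimate it with $S$ samples $z_s \sim q(z \mid \lambda)$, which results in:

$$\nabla_\lambda \mathcal{L} \approx \frac{1}{S} \sum_{s=1}^{S} \left[ f(z_s) - a\, h(z_s) \right]$$

where $f(z) = \nabla_\lambda \log q(z \mid \lambda)\,\left(\log p(x, z) - \log q(z \mid \lambda)\right)$ and $h(z) = \nabla_\lambda \log q(z \mid \lambda)$ and $a$ is the variance reduction term (setting $a = 0$ recovers the basic estimator). Using this gradient, we can optimize the proposal distribution using a stochastic optimization algorithm.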

Now the algorithm for this problem is:
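As a minimal sketch of this procedure, the code below runs the basic score-function estimator (no variance reduction) with AdaGrad step sizes on a toy problem whose exact posterior is known. The model (z ~ N(0, 1), x_i | z ~ N(z, 1)), the Gaussian proposal parameterized by `mu` and `log_sigma`, and all function names and default settings are assumptions made for illustration, not the paper's experimental setup.

```python
import math
import random

LOG_2PI = math.log(2.0 * math.pi)


def log_joint(z, data):
    """log p(x, z) for the toy model z ~ N(0, 1), x_i | z ~ N(z, 1)."""
    log_prior = -0.5 * z * z - 0.5 * LOG_2PI
    log_lik = sum(-0.5 * (x - z) ** 2 - 0.5 * LOG_2PI for x in data)
    return log_prior + log_lik


def log_q(z, mu, log_sigma):
    """Log density of the Gaussian proposal q(z | mu, log_sigma)."""
    eps = (z - mu) / math.exp(log_sigma)
    return -0.5 * eps * eps - log_sigma - 0.5 * LOG_2PI


def score(z, mu, log_sigma):
    """Gradient of log q with respect to (mu, log_sigma)."""
    sigma = math.exp(log_sigma)
    eps = (z - mu) / sigma
    return (eps / sigma, eps * eps - 1.0)


def bbvi(data, num_iters=2000, num_samples=100, step=0.1, seed=0):
    """Fit (mu, sigma) by stochastic gradient ascent on the ELBO."""
    rng = random.Random(seed)
    params = [0.0, 0.0]        # [mu, log_sigma]
    grad_sq_sum = [0.0, 0.0]   # AdaGrad accumulators
    for _ in range(num_iters):
        grad = [0.0, 0.0]
        for _sample in range(num_samples):
            z = rng.gauss(params[0], math.exp(params[1]))  # z ~ q
            weight = log_joint(z, data) - log_q(z, params[0], params[1])
            for i, s in enumerate(score(z, params[0], params[1])):
                grad[i] += s * weight / num_samples
        for i in range(2):
            grad_sq_sum[i] += grad[i] ** 2
            params[i] += step * grad[i] / math.sqrt(grad_sq_sum[i] + 1e-8)
    return params[0], math.exp(params[1])
```

For this conjugate toy model the exact posterior is Gaussian with mean `sum(data) / (len(data) + 1)` and variance `1 / (len(data) + 1)`, so the output of `bbvi([1.2, 0.8, 1.5, 0.9, 1.1])` can be checked against those values. Only `log_joint` is model-specific; the rest could be shared across models, which is the sense in which the method is "black-box".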

Auto-Encoding Variational Bayes

Experiments

BBVI is used to fit and compare several models on longitudinal medical data, with the models evaluated by predictive likelihood. The data consist of longitudinal records from 976 patients (803 train + 173 test) from a clinic at New York Presbyterian hospital who have been diagnosed with chronic kidney disease. The model used here is a Gamma-Normal time series model. They model the data using weights drawn from a Normal distribution and observations drawn from a Normal, allowing each factor to affect each lab both positively and negatively while letting factors represent lab measurements. The results are compared with MH within Gibbs sampling estimates of the model parameters.

For the AEVB method, they trained generative models of images from the MNIST and Frey Face datasets and compared learning algorithms in terms of the variational lower bound and the estimated marginal likelihood.

Conclusion

Overall, both methods use stochastic optimization over a chosen family of proposal distributions to approximate the posterior. Although they are not fully black-box, they are at the frontier of methods for finding the posterior with little prior information provided. AEVB typically converges faster than BBVI because its reparameterization gradients tend to have lower variance than BBVI's score-function Monte Carlo estimates, but in turn it is limited in usage, as it only works when all the latent variables of the model are continuous. Below is a list of limitations of each method that came to my mind.

Limitations of BBVI:

- Although it promises to be blackbox, the method cannot be considered a fully blackbox approach to variational inference, as the choice of the proposal distribution's family and its domain can strongly affect how the algorithm performs, and it might not work in some cases.

- The method only works with smooth and differentiable proposal distribution families, which limits the modeling problems that can be solved using BBVI.

- Although the paper suggests techniques to reduce the variance of the gradients and increase the convergence rate, convergence can still be slow for some problems, especially if the proposal distribution's initial parameters are far from the correct values.

- Relies on score-function gradient estimates computed from Monte Carlo samples of the proposal distribution (not MCMC), whose high variance can mean much slower convergence than reparameterization-based methods like AEVB.

- The only algorithm compared against in the experiments is MH within Gibbs sampling, which BBVI can be expected to outperform.

Limitations of AEVB:

- Latent variables must be continuous; otherwise the method does not apply.

- The final algorithm has no obvious place to plug in a variance reduction technique or a step-size selection method.

- The experiments only compare the algorithm against wake-sleep, although the paper was published after several other stochastic variational inference techniques already existed.

Annotated Bibliography
