Course:CPSC522/Regularization as an Alternative to Negative Sampling in KGs

Title

Unintuitiveness of negative sampling in training of SimplE has led us to try to use regularization on relations as an alternative in link prediction task in knowledge graphs. The tests show that it is possible to achieve good results by only regularizing on relation counts in the train set to train SimplE without any negative samples.

Principal Author: Ali Mohammad Mehr

Collaborators:

Abstract

Due to the fact that most knowledge graphs contain only positive samples, generation of negative samples has been the technique that state-of-the-art algorithms of link prediction in knowledge graphs have been using to make the learning of embeddings possible in this task. In this page, a regularization based on the number of samples of each relation in the training tuples is proposed to be a substitute for the negative sampling approach. In this regard, two different regularization techniques are introduced and tested, which show that regularizing the norm of the embedding vectors will achieve fair results.

Builds on

Basic understanding of knowledge graphs, embeddings, stochastic gradient descent, and regularization.

Related Pages

SimplE algorithm[1]

Knowledge graphs[2]

Link Prediction in Knowledge Graphs

Knowledge Graphs

A knowledge graph consists of (subject, predicate, object) triples, referred to as SPO triples. We will use the (head, relation, tail) notation for the triples in this page. The existence of a particular SPO triple indicates an existing fact, i.e., that the respective entities are in the given relationship. For instance, the information "Leonardo DiCaprio starred in Shutter Island" can be expressed via the triple (Leonardo_DiCaprio, starred_in, Shutter_Island). Under the open world assumption (OWA), a non-existing triple is interpreted as unknown, i.e., the corresponding relationship can be either true or false. Continuing with the above example, the missing edge is not interpreted to mean that Nimoy did not star in Star Wars. This more cautious approach is justified, since KGs are known to be very incomplete. For example, sometimes just the main actors in a movie are listed, not the complete cast.

In schema-based approaches, entities and relations are represented via globally unique identifiers and all possible relations are predefined in a fixed vocabulary. For example, Freebase might represent the fact that Barack Obama was born in Hawaii using the triple (/m/02mjmr, /people/person/born-in, /m/03gh4), where /m/02mjmr is the unique machine ID for Barack Obama.[2]

On this page, we will assume we are using a database with the open world assumption which is schema-based. In particular, the FB15K[3] data set which is a subset of Freebase[4] is used in the experiments described on this page. This data set consists of 14,951 entities and 1,345 relations, defining to be the set of all entities in the data set, . The training set contains of 483,142 triplets, the validation set 50,000 triples and the test set 59,071 triples.

Link Prediction Task

Link prediction task in knowledge graphs is concerned with predicting the existence (or probability of correctness) of edges in the graph (i.e., triples). This is important since existing knowledge graphs are often missing many facts, and some of the edges they contain are incorrect. In the context of knowledge graphs, link prediction is also referred to as knowledge graph completion. For example, by having the two triples (Barack_Obaba, born_in, Hawaii) and (Barack_Obaba, was_president_of, United_States) we might be able to deduct the triple (Hawaii, is_a_state_of, United States).

SimplE for Link Prediction in KGs

SimplE algorithm[1] is a tensor factorization approach which uses embedding vectors to represent entities and relation. SimplE considers two vectors as the embedding of each entity , and two vectors for each relation . The similarity function of SimplE for a triple is defined as , i.e. the average of the scores for and . In the score formula, , where , , and are vectors of size .

SimplE Model Evaluation

After training the model, which is essentially finding embedding vectors that give high scores for triples in train set, the model is evaluated using the mean reciprocal rank (MRR). To do this evaluation, for each test triple we compute the score of triples for all and calculate the ranking of the triple having , and we compute the score of triples for all and calculate the ranking of the triple having . Finally, the MRR is defined as the mean of the inverse of these rankings for all triples in the test set: , where is the set of test triples.

SimplE Training with Negative Sampling

Since in the FB15K data set the training set consists only of positive examples, a model which always predicts "True" will get the minimum training loss for most feasible loss functions. In order to mitigate this issue, state-of-the-art training methods generate negative samples and train the model on a set including both positive and negative samples. To generate negative samples, for each positive triple in data set, negative triples are generated by corrupting the positive triple. For a positive triple , we randomly decide to corrupt the head or tail. If the head is selected, we replace in the triple with an entity randomly selected from and generate the corrupted triple . If the tail is selected, we replace in the triple with an entity randomly selected from and generate the corrupted triple .

Using the union of the training set and the generated negative samples, stochastic gradient descent is used to minimize the following loss function:

,

where is the union of labeled training set and labeled negative samples.

SimplE generates 10 negative samples for each positive sample in FB15K to train on the data set.

Regularization as an Alternative to Negative Sampling

Since generating negative samples is not quite intuitive, in this page, we will evaluate how regularization can be used to mitigate the problem of nonexistent negative samples in training set. Our hypothesis is that some kind of regularization on number of samples of a relation in training set can be used to regularize the SimplE model, so that negative samples are not necessary for training the model. From this point on, the number of samples of a relation in training set will be denoted as . Since we want to regularize using , we will have a regularizer which is a function of and some feature of the model whose point is to regularize relation . Therefore, the general formula for the loss function with this regularization will be as follows:

,

where is the set of all relations in the dataset and is the training set. As you can see in the above formula, there is no label for each sample because all the samples are positive. It is also important to notice the position of in the loss expression. To make this loss function work with stochastic gradient descent, we need summation over all the samples, so the final formula for the loss function, which will be used to train the model, is as follows:

,

which is equal to the previously mentioned loss function.

Test 1 - Regularizing on Predictions of the Model

Usually, the regularization term is based on model structure or parameters, but as a first test, we decided to first try a regularizer based on the model predictions. This is simpler than regularizing based on model structure or parameters because it is hard to devise a regularizer which will incorporate and model parameters. The tested regularization term in this test was , and therefore the loss function was

,

where is the regularization rate for the new regularizer and is a normalizing factor to normalize the values of to the range so that they are comparable with the values of .

The result of a grid search over in range and sensible ⍺ gave very bad results: The MMR score for the trained models were all close to 0.0001, compared to MRR=0.72 for SimplE implementation of Kazemi and Poole[1].

Test 2 - Regularizing on Norm of the Embedding Vectors

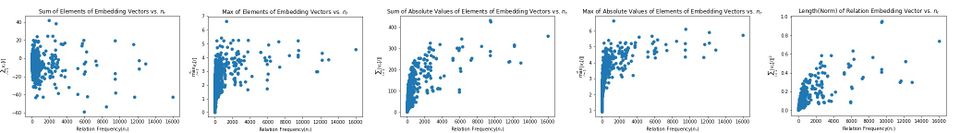

To derive a regularization term based on embedding vector of relation and , a few plots were drawn which can be seen in figure 1. Analyzing the plots in figure 1 shows that there is a correlation between the norm of embedding vectors and .

To make use of the correlation observed in plots in figure 1 to derive a regularization term based on , polynomial regression functions of degrees 1, 2, and 3 were fitted to the plot of vs. . The resulting regression curves can be seen in figure 2.

To derive the regularization term, the degree one polynomial regression curve was used. As a result, the formula for the regularizer was decided to be

. Finally, the loss function will be as follows:

,

where and are derived from the fitted regression line ( and ), and is the regularization rate. MRRs achieved with a grid search over can be seen in figure 3. As it can be seen in figure 3, results as high as MRR=0.18 are achieved, which is comparable to MRR=0.72 that is achieved by SimplE implementation of Kazemi and Poole[1]. It seems like the MRR is increasing as increases and it does not seem to have saturated yet, which means that testing values greater than 0.7 might give even better results.

Devised Regularizer Tested with Negative Sampling

Testing this new regularization term alongside negative sampling resulted in MRR~0.10 for meaning that this regularizer with high values does not increase MRR score of SimplE implementation of Kazemi and Poole. Further tests are needed with smaller values to make a general statement about the regularizers impact on SimplE.

Discussion and Future Work

Results of the second test show that regularization is powerful enough to achieve good results and might some day completely substitute negative sampling in training embedding-based models in link prediction of KGs.

Future work includes multiple tests that can be done to further analyze the potentials of the new devised regularization term. Some of these test include:

- Tests to check if the regression line on the vs. plot stays fixed among multiple data sets, so that this regularizer can potentially be transported to any data set without any change.

- Trying degree two regression to see if that achieves better results than the degree one regression.

Annotated Bibliography

- ↑ 1.0 1.1 1.2 1.3 Seyed Mehran Kazemi and David Poole, SimplE Embedding for Link Prediction in Knowledge Graphs, in Proc. Thirty-second Conference on Neural Information Processing Systems(NeurIPS 2018). Also a previous version in Eighth International Workshop on Statistical Relational AI, July 2018.

- ↑ 2.0 2.1 Nickel, M.; Murphy, K.; Tresp, V.; and Gabrilovich, E. 2016. A review of relational machine learning for knowledge graphs. Proceedings of the IEEE 104(1):11-33.

- ↑ Antoine Bordes and Nicolas Usunier and Alberto Garcia-Dur\'an and Jason Weston and Oksana Yakhnenko, Translating Embeddings for Modeling Multi-relational Data, Advances in Neural Information Processing Systems (NIPS 26), 2013

- ↑ Kurt Bollacker, Colin Evans, Praveen Paritosh, Tim Sturge, and Jamie Taylor. Freebase: a collaboratively created graph database for structuring human knowledge. In ACM SIGMOD, pages 1247–1250. AcM, 2008.

To Add

Put links and content here to be added. This does not need to be organized, and will not be graded as part of the page. If you find something that might be useful for a page, feel free to put it here.

|

|