Course:CPSC522/Improve recommendation system by integration

MovieSpark: An Integrated Movie Recommendation System Based On Spark

Author: Arthur Sun

Referenced Papers

1. Matrix Factorization Techniques for Recommender Systems [1]

2. A hybrid approach to item recommendation in folksonomies [2]

3. Hybrid recommender systems: Survey and experiments [3]

4. A hybrid online-product recommendation system: Combining implicit rating-based collaborative filtering and sequential pattern analysis [4]

5. Expertise recommender: a flexible recommendation system and architecture [5]

6. A hybrid collaborative filtering method for multiple-interests and multiple-content recommendation in E-Commerce [6]

7. A context-aware music recommendation system using fuzzy bayesian networks with utility theory [7]

Introduction

Nowadays, as more people use the Internet as a daily source of information, they rely on a variety of tools to find information that interests them or to locate exactly what they need. Search engines such as Google[1] and Bing[2] have made it much easier for users to find relevant information in a short time by returning results for the keywords they enter. Beyond this kind of active search, however, many companies offer an alternative: they proactively provide personalized recommendations for each registered account, predicting what the user is likely to want next from their previous searching, browsing, or purchasing history.

For example, Amazon provides personalized recommendations for online shopping, and Netflix provides personalized movie recommendations. Recommendation systems are built on different principles, but broadly they can be divided into content-based filtering and collaborative filtering. In real-world systems, however, relying purely on content-based filtering or purely on collaborative filtering is rarely optimal, because users provide too little input. The resulting data is sparse, which leads to poor recommendations and a high prediction error rate.

To address this, this paper presents an integrated model that combines content-based filtering with collaborative filtering by adding content features to user-based features, with the goal of improving recommendation accuracy. It also aims to support a broader range of applications that are closer to real-world recommendation scenarios. In summary, this paper makes the following contributions:

- An integration of content-based features with collaborative user features, which incorporates IMDB[3] movie features into user ratings from MovieLens[4] to strengthen a collaborative recommendation system.

- An evaluation of the MovieSpark system, trained on the MovieLens dataset, with performance measured by RMSE.

- An implementation on Spark to speed up data processing and to scale to large datasets.

Background

In this section, we provide an overview of the basic concepts behind our MovieSpark system: collaborative filtering, content-based filtering, data normalization, and integrated recommendation systems.

Collaborative Filtering

Collaborative filtering uses the history of user behavior, specifically each user's past watching, listening, or purchasing records, and compares it with the records of other users who made similar decisions. The underlying assumption is that if person A has the same opinion as person B on one issue, A is more likely to share B's opinion on a different issue x than the opinion on x of a randomly chosen person. For instance, a recommendation system can predict which television shows a user will like or dislike; each prediction targets one user but is computed from preferences gathered from many other users, which is different from simply giving each item an average score.

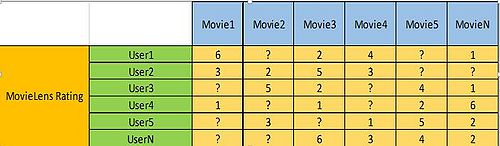

The figure above illustrates collaborative filtering.

We want to predict user1's rating for the entry marked "?" from the other users' preferences. To do so, we find users whose ratings are similar to the current user's and use their preferences to make a recommendation for the current user. To measure similarity, we can use cosine similarity[5].
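The neighbour search described above can be sketched in plain Scala (no Spark). This is a minimal sketch under assumptions not stated in the original: each user's ratings are a map from movie ID to rating, missing ratings count as 0 in the cosine similarity, and the prediction is the similarity-weighted average of the k most similar users who rated the movie. The object and method names are hypothetical.

```scala
object UserBasedCF {
  type Ratings = Map[Int, Double] // movieID -> rating for one user

  // Cosine similarity of two sparse rating vectors (missing ratings count as 0)
  def cosine(a: Ratings, b: Ratings): Double = {
    val dot = a.keySet.intersect(b.keySet).toSeq.map(m => a(m) * b(m)).sum
    val normA = math.sqrt(a.values.map(x => x * x).sum)
    val normB = math.sqrt(b.values.map(x => x * x).sum)
    if (normA == 0.0 || normB == 0.0) 0.0 else dot / (normA * normB)
  }

  // Predicts the target user's rating of `movie` as the similarity-weighted
  // average over the k most similar users who rated that movie
  def predict(target: Ratings, others: Seq[Ratings], movie: Int, k: Int): Option[Double] = {
    val neighbours = others
      .filter(_.contains(movie))
      .map(u => (cosine(target, u), u(movie))) // (similarity, neighbour's rating)
      .sortBy(-_._1)                           // most similar first
      .take(k)
    val weight = neighbours.map(_._1).sum
    if (weight <= 0.0) None
    else Some(neighbours.map { case (s, r) => s * r }.sum / weight)
  }
}
```

Weighting by similarity lets closer neighbours count more; a plain average, as used in the Recommendation Stage code later, is the special case where every weight is 1.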


Content-based Filtering

Content-based filtering, also called cognitive filtering, is a recommendation algorithm that recommends items by comparing the content of each item with a user's profile. For the algorithm to work, a personalized profile must first be built from explicit user feedback that captures the key attributes the user likes and dislikes.
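A minimal plain-Scala sketch of this comparison, under assumptions not in the original: items are described by named feature weights (for example 1.0 for a Boolean attribute such as a country), the user profile is the rating-weighted sum of the features of the items the user rated, and a candidate item is scored by cosine similarity with that profile. The object and method names are hypothetical.

```scala
object ContentBasedScoring {
  type Features = Map[String, Double] // feature name -> weight (1.0 for a Boolean attribute)

  // User profile: rating-weighted sum of the feature vectors of the items the user rated
  def profile(rated: Seq[(Features, Double)]): Features =
    rated
      .flatMap { case (f, r) => f.toSeq.map { case (k, v) => (k, v * r) } }
      .groupBy(_._1)
      .map { case (k, kvs) => k -> kvs.map(_._2).sum }

  // Cosine similarity between the profile and a candidate item's features
  def score(p: Features, item: Features): Double = {
    val dot = p.keySet.intersect(item.keySet).toSeq.map(k => p(k) * item(k)).sum
    val np = math.sqrt(p.values.map(v => v * v).sum)
    val ni = math.sqrt(item.values.map(v => v * v).sum)
    if (np == 0.0 || ni == 0.0) 0.0 else dot / (np * ni)
  }
}
```

Because raw ratings are used as weights, even a poorly rated item adds some positive weight to its features; mean-centering the ratings first is a common refinement.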

Typically, the user preferences are stored in a two-dimensional table in which each row describes one item and each column represents a specific item feature. Boolean values or descriptive text can be used to describe the item properties, and to keep the item values balanced, numeric weights may be applied to each row so that the columns carry comparable weight. Term weights such as term frequency and inverse document frequency (TF-IDF)[6] are often used to tune the user preferences and improve their accuracy.
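The TF-IDF weighting mentioned above can be sketched as follows. This assumes each item is described by a bag of terms (for example plot keywords) and uses the common variant tf × log(N / df); the object name and data layout are illustrative assumptions.

```scala
object TfIdf {
  // items: each item is a bag of terms; returns one term -> tf-idf weight map per item
  def weights(items: Seq[Seq[String]]): Seq[Map[String, Double]] = {
    val n = items.size.toDouble
    // document frequency: in how many items each term appears
    val df: Map[String, Int] =
      items.flatMap(_.distinct).groupBy(identity).map { case (t, ts) => t -> ts.size }
    items.map { terms =>
      // term frequency: share of this item's terms that equal t
      val tf = terms.groupBy(identity).map { case (t, ts) => t -> ts.size.toDouble / terms.size }
      tf.map { case (t, f) => t -> f * math.log(n / df(t)) }
    }
  }
}
```

A term that appears in every item gets weight 0, so features shared by all movies do not help distinguish them.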

In a real-world environment, acquiring a new user's preferences is not easy, because the system may face a "cold start" problem: it does not have enough information from the user to determine their preferences. In that case, the system can interact with the user to collect basic information and use a machine learning algorithm to build a user model from the stored histories of similar users.

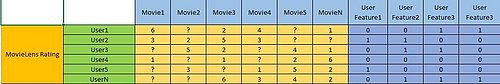

As a concrete example of content-based filtering, shown in the figure above: to predict how much user1 likes movie5, we look at user1's feature columns, find a user who shares a similar pattern of features, and make a recommendation based on the similarity between the two users' corresponding ratings.


Normalization

Different users may rate the same film differently. Some users regard 15/20 as a high mark, while others only regard 19/20 as excellent. Left uncorrected, this leads to inaccurate predictions caused by over-fitting: the model reproduces each user's past rating habits rather than generalizing to predict the current user's preference. The problem often arises in collaborative filtering input, where each person's ratings reflect only their individual scale. A common way to clean the data is to map these inputs mathematically onto a common baseline.
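One simple way to put ratings on a common baseline is per-user mean-centering: subtract each user's average rating so that 0 means "average for this user". The sketch below assumes ratings are held in nested maps (user to movie to rating) and uses hypothetical names; it corresponds to using only the row (user) mean as the baseline, a special case of the subtractive normalization described later.

```scala
object MeanCentering {
  // ratings: userID -> (movieID -> rating)
  // Subtracts each user's own mean rating from all of that user's ratings
  def center(ratings: Map[Int, Map[Int, Double]]): Map[Int, Map[Int, Double]] =
    ratings.map { case (user, rs) =>
      val mean = if (rs.isEmpty) 0.0 else rs.values.sum / rs.size
      user -> rs.map { case (movie, r) => movie -> (r - mean) }
    }
}
```

For the example above, a user who usually gives around 15/20 and a user who usually gives around 19/20 both end up with 0 for their typical rating, so their ratings become comparable.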

Improved Recommendation System

Researchers have long tried to improve the prediction accuracy and reduce the computational complexity of recommendation systems, mainly collaborative filtering and content-based filtering. As a result, several theoretical models have been proposed that improve performance by integrating the two approaches into a single system.

Integrating different systems produces different results and prediction types depending on the prediction domain and the data types. Some common integration types are listed below:

- Mixed Recommendation: Because both collaborative filtering and content-based filtering lack input data, which makes the matrix sparse, mixed recommendation generates recommendations from each system independently and presents both to the user once they have been generated. A limitation of this approach is that it is difficult to evaluate how much each individual system contributes.

- Mutual Switching: For datasets that are difficult to handle with one method, the system can switch between content-based filtering and collaborative filtering: it processes part of the data with one algorithm, evaluates the result, and then decides which algorithm to run next. However, without clear criteria it can be difficult to decide which method to use.

- Trait Combination: If it is hard to make predictions from the current traits alone, combining them with other traits so that they suit either collaborative filtering or content-based filtering can improve processing capacity and accuracy.
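The first two integration types can be sketched as simple combinators over two ranked lists of movie IDs. This is an illustrative sketch: the switching criterion (a minimum number of ratings by the user) is a hypothetical example of the "certain criteria" mentioned above, and the names are not from the original system.

```scala
object HybridStrategies {
  // Mixed: both systems produce their lists independently; present them together,
  // collaborative results first, duplicates removed, truncated to n items
  def mixed(cf: Seq[Int], content: Seq[Int], n: Int): Seq[Int] =
    (cf ++ content).distinct.take(n)

  // Switching: hypothetical criterion, use collaborative filtering only when the user
  // has rated at least minRatings items, otherwise fall back to content-based filtering.
  // By-name parameters mean only the chosen recommender is actually evaluated.
  def switching(numRatedByUser: Int, minRatings: Int,
                cf: => Seq[Int], content: => Seq[Int]): Seq[Int] =
    if (numRatedByUser >= minRatings) cf else content
}
```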

Methodology

The goal of this study is to improve the prediction accuracy of a single collaborative recommendation system by integrating content-based filtering as a subsidiary method that correlates content information with the existing rating information. For collaborative filtering, we use the nearest-neighbour algorithm[7] to find correlations between movie viewers. For content-based filtering, we extract movie features and append them to the corresponding user-movie rating entries to improve recommendation accuracy.

We use the MovieLens and IMDB datasets: MovieLens provides user ratings of specific movies, while IMDB provides detailed information about each movie.

Datasets

MovieLens Dataset

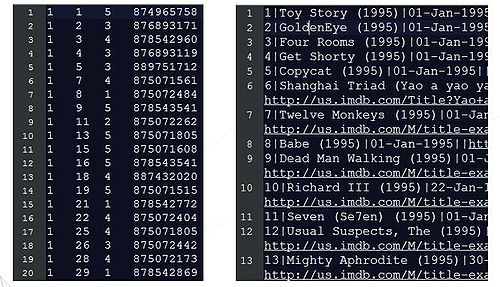

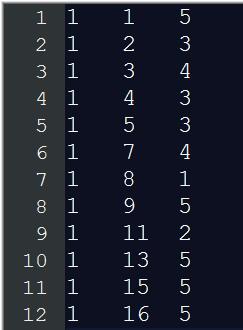

The MovieLens datasets were developed by the GroupLens[8] research group and contain movie ratings collected anonymously from the public. They were collected over various periods of time and come in four sizes: 100K, 1M, 10M, and 20M. We use the 100K dataset, which contains 100,000 ratings from about 900 users on about 1,600 movies. The data is stored as plain text with four fields per rating: userID, itemID, rating, and timestamp.

For MovieSpark, we extract userID, movieID, and rating to form the main collaborative filtering dataset. For the experiment, we use 80% of the data for training and 20% for testing.
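A plain-Scala sketch of loading the ratings and making the 80/20 split. It assumes the MovieLens 100K `u.data` layout of user ID, item ID, rating, and timestamp separated by tabs (the splitting regex also accepts spaces), and a random shuffle with a fixed seed; the object and method names are hypothetical, and the split the author actually used may differ.

```scala
object MovieLensSplit {
  final case class Rating(user: Int, movie: Int, rating: Double)

  // Parses "userID itemID rating timestamp"; the timestamp (field 3) is dropped
  def parse(line: String): Rating = {
    val f = line.trim.split("\\s+")
    Rating(f(0).toInt, f(1).toInt, f(2).toDouble)
  }

  // Shuffles with a fixed seed (reproducible), then splits into (training, testing)
  def split(rs: Seq[Rating], trainFraction: Double, seed: Long): (Seq[Rating], Seq[Rating]) = {
    val shuffled = new scala.util.Random(seed).shuffle(rs)
    shuffled.splitAt(math.round(rs.size * trainFraction).toInt)
  }
}
```

With 100,000 ratings and `trainFraction = 0.8`, this yields the 80,000 training and 20,000 testing lines used later.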

Here is a snapshot of the MovieLens dataset format:


IMDB Dataset

IMDB (Internet Movie Database) is a comprehensive dataset that contains many properties of each movie, including actors, actresses, country, director, keywords, producers, and production company. To distinguish between movies and avoid overfitting, which can produce recommendations that give the user no new information, the attributes included in the content attribute list must be chosen carefully. We include the following attributes because they do not form a sparse matrix with a utilization rate below 1%:

- Country

- Date

All the IMDB attributes are stored in separate files with different formats, so we need to integrate them into a single file. Here is an overview of the actor name dataset:
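Once each IMDB attribute file has been parsed, merging them into one table keyed by movie name amounts to a full outer join on the name. The sketch below assumes each file has already been reduced to a map from movie name to value (parsing the raw IMDB list formats is not shown) and uses `Option` for attributes a movie lacks; the names are hypothetical.

```scala
object ImdbMerge {
  final case class MovieFeatures(name: String, country: Option[String], date: Option[String])

  // countries, dates: movie name -> attribute value, one map per parsed IMDB file.
  // Every movie that appears in either map gets exactly one row.
  def merge(countries: Map[String, String], dates: Map[String, String]): Map[String, MovieFeatures] =
    (countries.keySet ++ dates.keySet).iterator.map { name =>
      name -> MovieFeatures(name, countries.get(name), dates.get(name))
    }.toMap
}
```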


Architecture Overview

Since the goal of this paper is to improve the accuracy of nearest-neighbour collaborative filtering with content-based filtering, the architecture of the system focuses on combining content-based filtering and collaborative filtering.

Here is the architecture overview of the system:


System Overview

The system is composed of three main parts:

- MovieLens and IMDB dataset integration

- Normalization and combination of rating and movie content features

- Collaborative filtering for predicting based on above dataset


Technology Used

Each of the three parts above requires the following technologies:

- MovieLens and IMDB dataset integration: Scala text parsing, concatenation, and sparse matrix generation

- Normalization and combination of rating and movie content features: Scala and Spark

- Collaborative filtering for predicting based on above dataset: Spark and Scala

Dataset Integration

Relationship

MovieLens and IMDB are connected by the movie name, since both datasets contain this field. However, IMDB stores each feature in a separate dataset, so we first combine the IMDB files into a unified two-dimensional table with the movie name as the primary key. We then join MovieLens and IMDB using the movie name as the key. The final relationship is shown below:

| UserID | Movie Name ID | Movie Rating | Movie Country | Movie Date |
|---|---|---|---|---|
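Producing rows of this shape from the two sources is an inner join on the movie name. This sketch assumes MovieLens movie IDs have already been mapped to titles and that those titles match the IMDB keys exactly; in practice, titles usually need some cleaning before they match. The names are illustrative.

```scala
object RatingFeatureJoin {
  final case class Row(user: Int, movie: Int, rating: Double, country: String, date: String)

  // ratings: (userID, movieID, rating); movieNames: MovieLens movieID -> title;
  // features: title -> (country, date). Ratings whose movie has no IMDB match are dropped.
  def join(ratings: Seq[(Int, Int, Double)],
           movieNames: Map[Int, String],
           features: Map[String, (String, String)]): Seq[Row] =
    for {
      (user, movie, rating) <- ratings
      name <- movieNames.get(movie)
      (country, date) <- features.get(name)
    } yield Row(user, movie, rating, country, date)
}
```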

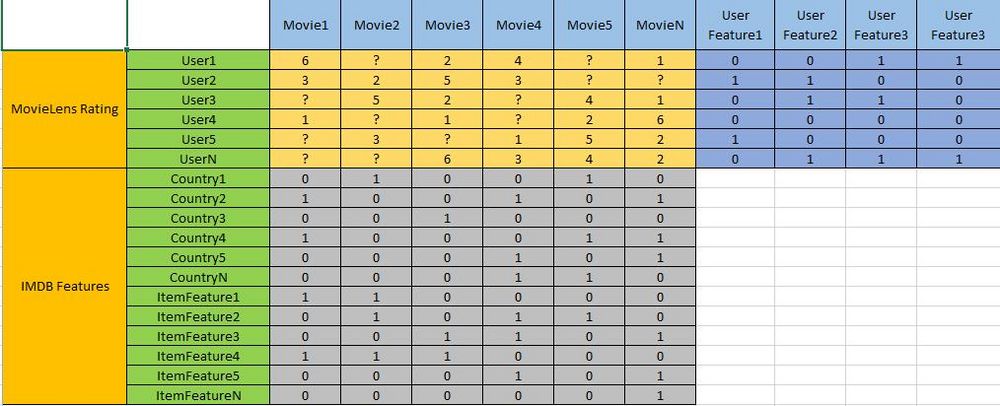

Final Matrix

This relationship is finally mapped into a single matrix, which content filtering uses to produce the result. The matrix looks like this:


Dataset Normalization

Looking at the MovieLens and IMDB data, we can see that different people rate the same movie differently, so normalizing their ratings to a common baseline is important. From the previous chart, ratings vary from 0 to 6. Here I use subtractive normalization to bring all values to a common baseline.

Subtractive Normalization

Subtractive normalization [9] normalizes each matrix cell using the overall mean of the matrix, the mean of the cell's row, and the mean of the cell's column:

$$\tilde{r}_{ij} = r_{ij} - \left(\alpha\,\bar{r} + \beta\,\bar{r}_{i\cdot} + \gamma\,\bar{r}_{\cdot j}\right), \qquad \alpha + \beta + \gamma = 1$$

where $\bar{r}$ is the overall mean, $\bar{r}_{i\cdot}$ is the mean of row $i$, and $\bar{r}_{\cdot j}$ is the mean of column $j$.

For example, suppose we want to calculate the normalized value of cell (2,2) (row 2, column 2) of the user rating matrix, and suppose $\alpha$, $\beta$, and $\gamma$ all equal 1/3.

So the process is like this:

The result, -0.193, means that the value 2 lies below the normalization midpoint 0 (the resulting range is from -1 to 1), i.e. it is a below-baseline rating relative to the overall dataset.

Another important issue is tuning the parameters $\alpha$, $\beta$, and $\gamma$.

For convenience, I set all three to the same value, 1/3. In a real environment, however, they should be adjusted to the actual circumstances. A popular way to determine the three values is linear regression[]
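The weighted baseline can be sketched in plain Scala (no Spark) as follows. This is a minimal sketch under stated assumptions: the matrix is stored sparsely as a map from (row, column) to rating, the overall, row, and column means are taken over observed cells only, and `alpha`, `beta`, and `gamma` are hypothetical parameter names for the overall, row, and column weights, which must sum to one. It is not the author's `NormalizerForViewerRating` class, whose code is not shown.

```scala
object SubtractiveNormalization {
  type Cell = (Int, Int) // (row, column); only observed cells are stored

  def normalize(r: Map[Cell, Double], alpha: Double, beta: Double, gamma: Double): Map[Cell, Double] = {
    require(math.abs(alpha + beta + gamma - 1.0) < 1e-9, "weights must sum to one")
    def mean(xs: Iterable[Double]): Double = xs.sum / xs.size
    val overall = mean(r.values)
    val rowMean = r.groupBy(_._1._1).map { case (i, cells) => i -> mean(cells.values) }
    val colMean = r.groupBy(_._1._2).map { case (j, cells) => j -> mean(cells.values) }
    // Subtract the weighted baseline from every observed rating
    r.map { case ((i, j), v) =>
      (i, j) -> (v - (alpha * overall + beta * rowMean(i) + gamma * colMean(j)))
    }
  }
}
```

With all three weights set to 1/3, each rating is compared against the average of the three means; setting the row weight to 1 and the others to 0 reduces this to plain per-user mean-centering.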

Multiplicative Normalization

Multiplicative normalization is used for the IMDB user-feature matrix, whose values are all 1 or 0. If we applied the previous method, only the stored (non-zero) entries would be averaged, so the overall, column, and row averages would always be 1 and every normalized value would become 0, which defeats our purpose.

As can be seen from the calculation below, the original matrix (top left of the picture) is composed of users and features. First, we transpose it into the bottom-right matrix, with the features on the left and the users at the bottom. Then we generate the diagonal values of the upper-right matrix, which are calculated by . The diagonal values of the lower-left matrix are calculated in a similar way by .

The final normalized value is the product of the corresponding cell values of the upper-right and lower-left matrices.
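The figure's exact formulas are not reproduced here, so the following is only one common form of multiplicative normalization for a 0/1 matrix, offered as an assumption: each entry is divided by the square root of its row sum times its column sum, which is the same as multiplying the matrix on the left and right by diagonal matrices with entries 1/sqrt(row sum) and 1/sqrt(column sum). Unlike the subtractive method, it does not collapse every stored 1 to the same value. The object name is hypothetical.

```scala
object MultiplicativeNormalization {
  // a: dense 0/1 user-feature matrix (rows = users, columns = features)
  // Result: a(i)(j) / sqrt(rowSum(i) * colSum(j)), i.e. D_row^(-1/2) * A * D_col^(-1/2)
  def normalize(a: Array[Array[Double]]): Array[Array[Double]] = {
    require(a.nonEmpty, "matrix must have at least one row")
    val rowSums = a.map(_.sum)
    val colSums = a.head.indices.map(j => a.map(_(j)).sum)
    a.indices.map { i =>
      a(i).indices.map { j =>
        val d = rowSums(i) * colSums(j)
        // d is 0 only for an all-zero row or column, where the entry is 0 anyway
        if (d == 0.0) 0.0 else a(i)(j) / math.sqrt(d)
      }.toArray
    }.toArray
  }
}
```

Users with many features and very common features are down-weighted, which keeps popular attributes from dominating the similarity calculation.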

Implementation

Overview

For the implementation, we set up two separate working environments: one for collaborative filtering and one for the integrated recommendation system. For collaborative filtering, we first transform the data into a two-dimensional sparse matrix, apply subtractive normalization to regularize it, and then apply the top-K nearest-neighbour algorithm to predict ratings on the training and test datasets. The KNN collaborative filtering training dataset contains 80,000 lines of user ratings and the test dataset contains 20,000 lines, in the following format:

UserID ----- MovieID ----- Rating


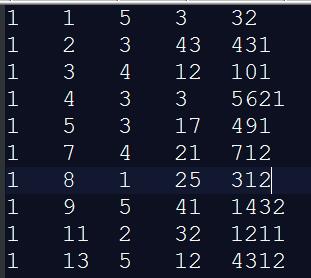

For the integrated recommendation system, we append the country and date features from IMDB to the previous viewer-rating matrix. Country and date are each represented by a sequential ID starting from 0, based on the collection of countries and dates in the IMDB files country.list.tar.gz and release-date.list.tar.gz from the IMDB website[10]. We then transform the dataset into a two-dimensional sparse matrix, apply multiplicative normalization, and run the KNN algorithm on both the training and testing datasets.
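Encoding each distinct country or date as a sequential ID starting from 0 can be sketched like this. The first-appearance ordering and the helper that formats a five-field line are assumptions for illustration, chosen to match the five space-separated fields read in the Experiment section; the names are hypothetical.

```scala
object FeatureEncoding {
  // Assigns IDs 0, 1, 2, ... to distinct values in order of first appearance
  def encode(values: Seq[String]): Map[String, Int] =
    values.distinct.zipWithIndex.toMap

  // Formats one integrated record as "userID movieID rating countryID dateID"
  def toLine(user: Int, movie: Int, rating: Int, countryId: Int, dateId: Int): String =
    s"$user $movie $rating $countryId $dateId"
}
```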


For both algorithms, we use RMSE, a popular indicator of prediction error. RMSE (root-mean-square error) is a frequently used measure of the differences between the values predicted by a model or estimator and the values actually observed[11]. It is the square root of the arithmetic mean of the squared differences: $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{k=1}^{n}(\hat{r}_k - r_k)^2}$, where $\hat{r}_k$ is a predicted rating and $r_k$ is the corresponding observed rating.
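The RMSE definition translates directly into a few lines of plain Scala; the object name is hypothetical, and the input pairs are (observed, predicted) ratings.

```scala
object Rmse {
  // Square root of the mean squared difference between observed and predicted ratings
  def rmse(pairs: Seq[(Double, Double)]): Double = {
    require(pairs.nonEmpty, "need at least one (observed, predicted) pair")
    val mse = pairs.map { case (obs, pred) => (obs - pred) * (obs - pred) }.sum / pairs.size
    math.sqrt(mse)
  }
}
```

For example, errors of 1, 0, and 2 give a mean squared error of 5/3 and an RMSE of about 1.29.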

Because of the heavy iterative matrix calculation, we implement the whole system on the Spark platform with the help of the KNN plugin from Saurfang[12]. His plugin implements various normalization methods and the KNN algorithm, which greatly reduced the workload of this project.

Spark

Apache Spark is an open-source cluster computing framework originally developed at the University of California, Berkeley's AMPLab[13]. It is efficient for iterative computation because of its RDD (Resilient Distributed Dataset) abstraction, which keeps large datasets in memory and processes them in a pipelined, distributed way. Spark is written in Scala, a language with functional programming features that greatly reduce the programmer's effort in writing code.

We are going to write Scala code to deal with the dataset.

Experiment

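We first write a main Scala program that creates a SparkConf object. Because Scala runs on the JVM, the program is compiled into a .jar file, which is then submitted to the Spark engine with spark-submit.

First, we set up the Spark environment for the task:

```scala
import org.apache.spark.{SparkConf, SparkContext}

// master, sparkHome and JarFile are assumed to be defined earlier in the program
val conf = new SparkConf()
  .setMaster(master)
  .setSparkHome(sparkHome)
  .setAppName("CPSC522AI")
  .set("spark.executor.memory", "4g") // my computer only has 8 GB of memory, so half goes to the computation
  .setJars(Seq(JarFile))              // the application .jar shipped to the executors
val sc = new SparkContext(conf)
```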

Next, we use Spark to read the ratings for both datasets, each of which has a training file and a testing file.

```scala
import org.apache.spark.mllib.recommendation.Rating

// We use only one computer in standalone mode, so the files are read from the local disk
// rather than from a distributed file system
val homeDir = "D:/cpsc522/"

// MLlib's Rating has only three fields, so the integrated records
// (userID movieID rating countryID dateID) use a helper case class
case class ContentRating(user: Int, movie: Int, rating: Double, country: Int, date: Int)

// Split each line on blanks and convert the fields to numbers
def parseViewer(line: String): Rating = {
  val f = line.split(" ")
  Rating(f(0).toInt, f(1).toInt, f(2).toDouble)
}
def parseContent(line: String): ContentRating = {
  val f = line.split(" ")
  ContentRating(f(0).toInt, f(1).toInt, f(2).toDouble, f(3).toInt, f(4).toInt)
}

val viewerRatingTraining = sc.textFile(homeDir + "viewerRatingTraining.txt").map(parseViewer)
val viewerRatingTesting = sc.textFile(homeDir + "viewerRatingTesting.txt").map(parseViewer)
val ContentRatingTraining = sc.textFile(homeDir + "ContentRatingTraining.txt").map(parseContent)
val ContentRatingTesting = sc.textFile(homeDir + "ContentRatingTesting.txt").map(parseContent)
```

Next, we print some statistics as an intermediate check:

```scala
val numRatingsOfviewerRatingTraining = viewerRatingTraining.count()
val numRatingsOfviewerRatingTesting = viewerRatingTesting.count()
val numRatingsOfContentRatingTraining = ContentRatingTraining.count()
val numRatingsOfContentRatingTesting = ContentRatingTesting.count()
println(s"ViewerRating $numRatingsOfviewerRatingTraining and $numRatingsOfviewerRatingTesting")
println(s"ContentRating $numRatingsOfContentRatingTraining and $numRatingsOfContentRatingTesting")
```

The output is:

ViewerRating 80000 and 20000

ContentRating 80000 and 20000

OK. The data is now well organized, so we transform the datasets into sparse matrices, because the following steps take matrices as input.

For the sparse matrices, we use Spark's built-in distributed matrix types: Spark performs iterative computation on RDDs, and because our matrices are large, it is better to spread them across distributed RDDs. We use CoordinateMatrix, in which each entry is a MatrixEntry(i: Long, j: Long, value: Double), where i is the row index, j is the column index, and value is the matrix entry.

```scala
import org.apache.spark.mllib.linalg.distributed.{CoordinateMatrix, MatrixEntry}
import org.apache.spark.mllib.recommendation.Rating
import org.apache.spark.rdd.RDD

// Viewer-rating matrices: row = user, column = movie, value = rating
def ratingEntries(rdd: RDD[Rating]): RDD[MatrixEntry] =
  rdd.map(r => MatrixEntry(r.user, r.product, r.rating))
val matViewerRatingTraining = new CoordinateMatrix(ratingEntries(viewerRatingTraining))
val matviewerRatingTesting = new CoordinateMatrix(ratingEntries(viewerRatingTesting))

// Integrated matrices: movie columns followed by country and date columns (1 = present).
// numMovies and numCountries are assumed column offsets, not given in the original.
def contentEntries(rdd: RDD[ContentRating]): RDD[MatrixEntry] = rdd.flatMap { r =>
  Seq(MatrixEntry(r.user, r.movie, r.rating),
      MatrixEntry(r.user, numMovies + r.country, 1.0),
      MatrixEntry(r.user, numMovies + numCountries + r.date, 1.0))
}.distinct() // a user may watch several movies with the same country or date
val matContentRatingTraining = new CoordinateMatrix(contentEntries(ContentRatingTraining))
val matContentRatingTesting = new CoordinateMatrix(contentEntries(ContentRatingTesting))
```

For normalization, we perform subtractive normalization on the viewerRating matrices and multiplicative normalization on the contentRating matrices.

```scala
// NormalizerForViewerRating (subtractive normalization) and NormalizerForContentRatingRating
// (multiplicative normalization) are the author's own classes, whose code is not shown here.
// They are assumed to expose transform(matrix: CoordinateMatrix): CoordinateMatrix.
val normalizer1 = new NormalizerForViewerRating()
val normalizer2 = new NormalizerForContentRatingRating()

val normalizedDataOfViewerRatingTraining = normalizer1.transform(matViewerRatingTraining)
val normalizedDataOfviewerRatingTesting = normalizer1.transform(matviewerRatingTesting)
val normalizedDataOfContentRatingTraining = normalizer2.transform(matContentRatingTraining)
val normalizedDataOfContentRatingTesting = normalizer2.transform(matContentRatingTesting)
```

OK. We are almost there. Since normalization is done, we just need to feed the normalized matrices into the KNN algorithm.

At the time of writing, Spark did not provide a built-in KNN algorithm, so we adopt the KNN algorithm from Ondra Fiedler[14] and calculate the RMSE for each run. The number of nearest neighbours must be defined for every run, because KNN predicts missing values by looking at the N nearest neighbours, and N has to be fixed from the start.

I used most of the code from Ondra Fiedler's KNN algorithm[15] and adapted it to my needs.

Get Nearest Neighborhood Stage

```scala
import breeze.linalg.SparseVector // Breeze, a Scala numerical library needed in addition to the standard Spark libraries
import org.apache.spark.rdd.RDD

val numberOfNeighbours = 10 // number of neighbours used in this experimental run

// matrixRDD: one Breeze sparse rating vector per user, built from normalizedDataOfViewerRatingTraining;
// targetVector: the user whose missing ratings we predict; distanceMetric comes from Fiedler's code
val matrixWithDistances: RDD[(Double, SparseVector[Double])] =
  matrixRDD.map(v => (distanceMetric.getDistance(targetVector, v), v))

// Order by distance so that takeOrdered returns the closest users first
val byDistance = Ordering.by[(Double, SparseVector[Double]), Double](_._1)
val kNearestMatrix: Seq[SparseVector[Double]] =
  matrixWithDistances.takeOrdered(numberOfNeighbours)(byDistance).map(_._2).toSeq
```

The output of this stage is the set of numberOfNeighbours rating vectors (users) closest to the target user, which is passed on to the recommendation stage.

Recommendation Stage

The essence of KNN for recommendation is to use the average value of the closest neighbours to predict the missing value.

```scala
import breeze.linalg.SparseVector
import org.apache.spark.mllib.recommendation.Rating

val nearestNeighbours: Seq[SparseVector[Double]] = kNearestMatrix // output of the previous stage

// Element-wise sum of the neighbours' ratings
val addedRatings = nearestNeighbours.reduce(_ + _)
// For each movie, how many neighbours actually rated it (normalized ratings can be negative, so test != 0)
val numberOfRatings = nearestNeighbours.map(v => v.map(x => if (x != 0.0) 1.0 else 0.0)).reduce(_ + _)

// (average rating, movie index) for every movie at least one neighbour rated
val averageRatings = addedRatings.activeIterator.map { case (i, sum) => (sum / numberOfRatings(i), i) }
val averageRatingsSorted = averageRatings.toList.sortBy(p => -p._1) // highest average first

// Drop movies the target user has already rated; userId is the (assumed) ID of the target user
val productsAlreadyRatedByUser = targetVector.activeKeysIterator.toSet
val recommendation = averageRatingsSorted
  .filter { case (_, movie) => !productsAlreadyRatedByUser.contains(movie) }
  .map { case (avg, movie) => Rating(userId, movie, avg) }
```

RMSE Calculation

After this step, we have the predicted ratings that are missing from the original training dataset. We now compare them with the testing dataset by calculating the RMSE.

I used part of the code from the RMSE calculation in Spark MLlib's ALS example [16] and adapted it to my needs.

```scala
// Observed (normalized) test ratings keyed by (user, movie)
val originalRating = normalizedDataOfviewerRatingTesting.entries.map(e => ((e.i.toInt, e.j.toInt), e.value))
// Predicted ratings from the recommendation stage, keyed the same way
val presentRating = sc.parallelize(recommendation).map(r => ((r.user, r.product), r.rating))

// Join on (user, movie), keeping only pairs present in both, then take the RMSE
val ratingPairs = originalRating.join(presentRating).values
val RMSEResult = math.sqrt(ratingPairs.map { case (obs, pred) => (obs - pred) * (obs - pred) }.mean())
```

Evaluation

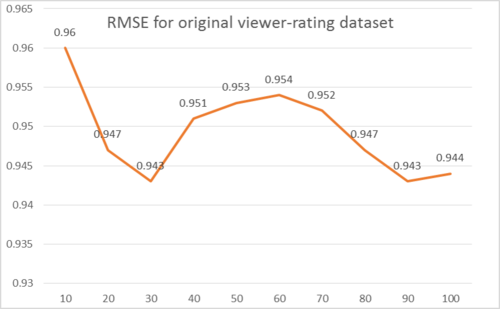

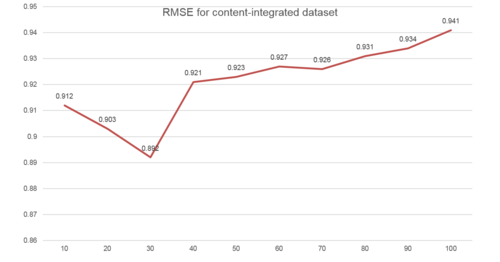

We then repeat the whole process with numberOfNeighbours ranging from 10 to 100 in steps of 10 on both datasets. The results for both datasets are shown in the figures. For the original dataset, the RMSE fluctuates, while for the integrated dataset the RMSE increases steadily as the number of neighbours grows.
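The neighbour sweep can be expressed as a small loop over k. In this sketch, the `predict` function is a hypothetical stand-in for the KNN stage (it returns `None` when no prediction is possible), and test triples without a prediction are skipped; if no pair remains for some k, its RMSE is NaN.

```scala
object NeighbourSweep {
  // RMSE over (observed, predicted) pairs
  def rmse(pairs: Seq[(Double, Double)]): Double =
    math.sqrt(pairs.map { case (o, p) => (o - p) * (o - p) }.sum / pairs.size)

  // test: (userID, movieID, observed rating); predict(user, movie, k) stands in for KNN.
  // Returns (k, RMSE) for k = 10, 20, ..., 100 by default.
  def sweep(test: Seq[(Int, Int, Double)],
            predict: (Int, Int, Int) => Option[Double],
            ks: Seq[Int] = 10 to 100 by 10): Seq[(Int, Double)] =
    ks.map { k =>
      val pairs = test.flatMap { case (u, m, r) => predict(u, m, k).map(p => (r, p)) }
      k -> rmse(pairs)
    }
}
```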


Result

As we can see, integrating collaborative and content-based filtering improves the RMSE by approximately 0.1% compared with the original collaborative filtering. Content features help the nearest-neighbour algorithm because they improve the cosine similarity calculation. However, the collaborative rating matrix is already sparse when generated from the raw dataset, and adding content features for existing users makes the sparsity worse. I think this is the main reason the RMSE is not better than the current result. I have not yet found a better solution to the sparse matrix problem. One alternative is to introduce implicit ratings to improve the situation, but this is beyond the scope of this paper.

Future Work

The sparse matrix problem has a big influence on the accuracy of recommendation systems, and it is closely related to the cold-start problem. In future work, I will mainly introduce implicit features, from both users and content, to reduce the sparsity. Furthermore, I will try to use matrix factorization to simplify the matrix and improve running time on large input datasets.

Annotated Bibliography

- Koren, Yehuda, Robert Bell, and Chris Volinsky. "Matrix Factorization Techniques for Recommender Systems." IEEE Computer, 2009.

- Wetzker, Robert, Winfried Umbrath, and Alan Said. "A hybrid approach to item recommendation in folksonomies." Proceedings of the WSDM'09 Workshop on Exploiting Semantic Annotations in Information Retrieval. ACM, 2009.

- Burke, Robin. "Hybrid recommender systems: Survey and experiments." User Modeling and User-Adapted Interaction 12.4 (2002): 331-370.

- Choi, Keunho, et al. "A hybrid online-product recommendation system: Combining implicit rating-based collaborative filtering and sequential pattern analysis." Electronic Commerce Research and Applications 11.4 (2012): 309-317.

- McDonald, David W., and Mark S. Ackerman. "Expertise recommender: a flexible recommendation system and architecture." Proceedings of the 2000 ACM Conference on Computer Supported Cooperative Work. ACM, 2000.

- Li, Yu, Liu Lu, and Li Xuefeng. "A hybrid collaborative filtering method for multiple-interests and multiple-content recommendation in E-Commerce." Expert Systems with Applications 28.1 (2005): 67-77.

- Park, Han-Saem, Ji-Oh Yoo, and Sung-Bae Cho. "A context-aware music recommendation system using fuzzy bayesian networks with utility theory." Fuzzy Systems and Knowledge Discovery. Springer Berlin Heidelberg, 2006. 970-979.