Course:CPSC522/Differential Privacy

Introduction to Differential Privacy

Principal Author: Yilin Yang

Collaborators:

Abstract

As we rely more and more on technology, data safety issues nowadays have become more and more prevalent. In light of this unprecedented amount of data used to train models, how do we protect users/data from malicious actors who want to infer this information? An obvious choice will be to choose to omit sensitive features in the dataset, or only report averaged results, however as Dwork et al. [1] has pointed out, these "protection" methods are too naive. Unsurprisingly, even simple attacks are very effective: For two datasets that record voter information and healthcare information while omitting the name, using natural joins is enough to connect two datasets and learn more about an individual, as addresses (or a combination of information) are unique to a very small set of people. Therefore, it's not difficult for adversarial users to obtain critical information. In fact, one of the most famous machine learning competitions, the Netflix recommendation system competition, was subject to privacy risks using the natural join as shown here. Such attacks are so simple in fact that even curious users can pull them off accidentally, and they facilitate the need for a gold standard privacy notion. In this page, we introduce the gold standard privacy notion of Differential Privacy (DP). DP is mathematically guaranteed to preserve privacy and is widely deployed in the industry by tech companies such as Apple, Google, and Facebook. It is even used in the 2020 US census to protect individual privacy. We will follow the notations by Dwork et al.[1] who first introduced DP. We will begin with a formal definition of privacy and DP. We will then introduce a mechanism that can be used to achieve DP, we will then talk about DP properties and finally introduce approximate DP and its mechanisms. We will also beifly go over Rényi DP as a more recent development in DP.

Builds on

As DP is a mathematical guarantee, Probability is the main foundation. However, understanding Rényi Divergence is recommended for the last section.

Related Pages

The Algorithmic Foundations of Differential Privacy[1] by Dwork et al. should be your go-to for most theory DP related.

Programming Differential Privacy is a much simpler overview that goes into implementation more than theory.

CS 860 - Algorithms for Private Data Analysis by Gautam Kamath has very good insight into the more dense proofs of DP, I highly recommend!

Hopefully, all of these along with this Wiki page can provide some well-needed insight into my previous page:

MDP for Differentially Private Reinforcement Learning

For those interested in the 2020 US Census, the blog post by Simson Garfinkel offers excellent insight.

Definition of Privacy and Differential Privacy

Before we introduce Differential Privacy, it is important to formally define privacy as it greatly changes our method of preserving privacy: if the goal is to not leak any information about the population, then every statistical/machine learning method that aims to preserve this notion of privacy cannot be of any use to analysts. However, if we define privacy as protection for members that participated in the training dataset so that there is no incurred risk for participating in the training process, then as ML researchers we can still obtain statistically significant knowledge of the population. We will use this definition of privacy, more formally:

Definition of Privacy:

Data Privacy aims to preserve the sensitive information of participants from malicious individuals while allowing data analysts to conduct statistically significant inferences of the data.

Definition of Differential Privacy

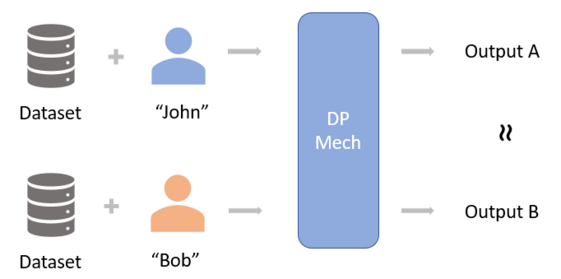

Following the definition by Dwork et al.[1], for some , A mechanism is called -DP if and only if it satisfies the equation:for all neighboring datasets and and for all possible sets of the mechanism's output .

Here, datasets are considered neighboring if they differ by only 1 entry, and a mechanism is any function that satisfies DP.

The DP definition essentially implies that the mechanism cannot be deterministic and must have multiple outputs given some input, and the output for and should be very similar to each other. This ensures that the adversary cannot infer whether or not a specific individual has partaken in the dataset, thereby protecting the user and its sensitive information. To make this clearer:

here is also known as the privacy budget or privacy parameter, the larger is the less private becomes as there is more difference in the output for and . However it should be noted that the exact nature of is not fully understood, in other words, how do we choose in practice? Even companies like Google, Apple, and Facebook who actively deploy DP models to preserve their users' privacy will typically choose an empirical value (or so they claim). In most cases, should be 1 or smaller, and greater than 10 offers little to no privacy.

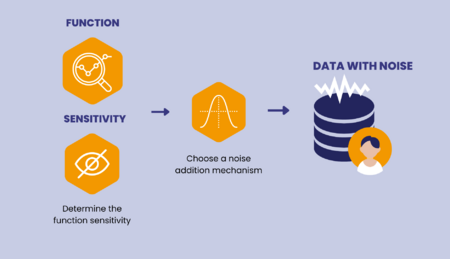

With the definition in hand, how does a function become a DP mechanism ? At a high level, DP can be achieved by injecting noise into a sensitive portion of the training procedure. This noise acts as a privacy barrier that obscures the 1-to-1 relationship of input and output, making any information past the privacy wall "sanitized". This barrier does not need setup such as in a database denying costly access restrictions like in databases as this added noise will make the results of such a query useless for malicious actors. Naturally, injecting noise will degrade the performance of an ML model, and researchers need to balance the tradeoff between protection and performance. In the next section, we will look at the Laplace Mechanism, a classic -DP mechanism achieved by adding controlled Laplace noise.

Laplace Mechanism

Before we introduce the Laplace mechanism, we must define the sensitivity considered in the Laplace Mechanism:

Definition 1: Following the definition by [2], for any given function , the sensitivity of the function is:

Where once again and are neighboring datasets.

This sensitivity is called the -sensitivity. There are multiple definitions of sensitivity, and they are used to scale the DP noise to the magnitude of change when 1 data point is changed in the dataset. Thus ensuring our DP noise is on the correct scale. Now, we can introduce the Laplace Mechanism:

Theorem 1: For a function , the mechanism satisfies -DP:

Where Lap is the laplace distribution with paramters 0 and . For the proof of such mechanism satisfies -DP, please see Theorem 4 of Gautam's Lecture notes[2].

Laplace Mechanism Application: Counting Queries

One of the most classic examples of using the Laplace Mechanism to privatize the counting query result. The counting query in a database are queries that ask the question: "How many data points in the dataset have property/feature A?" In this case, the system will go through each data point and generate an indicator value and sum it up with a function say . As each data point can change this sum by at most 1, the sensitivity of should be 1. Thus an -DP algorithmn for the counting queries is . The returned mechanism will have an error of and is independent of the dataset size.

There are many other use cases for the Laplace Mechanism, including privatizing histogram queries. In the next section, we will showcase the useful properties of DP.

Important Properties of Differential Privacy

DP is a popular choice of privatization not only because of its intuitive mechanisms, but also the convenient properties that follow from the DP definition. Here we will introduce the three most commonly used DP properties in research: post-processing, (basic) composition, and group privacy. We will include the proof as they are simple, but the proofs are largely inspired by Gautam's Lecture notes[2].

Post-Processing

Post-Processing is one of the most important qualities of DP, as it essentially guarantees any downstream task will not "un-DP" sensitive data that has been privatized.

Theorem 2: Let a mechanism be -DP, and let be a randomized mapping algorthim, then is -DP.

Proof. As is randomized, it can be considered as some distribution of deterministic functions, say . Thus for any neighbouring datasets and and output :

(Basic) Composition

Another important property of DP is composition, in the case where we run k DP algorithms on the same dataset, how do we aggregate/compose the privacy budget of these k mechanisms?

Theorem 3: Let be a scequence of k -DP mechanisms, then is -DP.

Proof. For any neighbouring datasets and and output sequence :

This is called basic composition because this budget bound is not the tightest, more recently there is the advanced composition which improves this bound to -DP rather than the current -DP. We will introduce the advanced composition later when we discuss approximate DP.

Group Privacy

Last but not least, we can extend the neighboring dataset definition to datasets that differ by entries, this is called group privacy. Similar to the DP definition:

Theorem 4: Let a mechanism be -DP, thus for all datasets and that differ by data points, and for all possible sets of the mechanism's output .Proof. Defining , , and the sequence from as a sequence of neighboring datasets, for all possible sets of the mechanism's output , we have:

Thus we have learned the three most important properties that help DP to be an intuitive way of adding privacy protection, in practice however -DP is a rather strong guarantee, in the next section, we will talk about the more widely used Approximate DP as it is known to give a better utility-privacy tradeoff.

Approximate Differential Privacy

Similar to -DP, we introduce a relaxation of this guarantee called approximate DP:

Definition 2: Following the definition by Dwork et al. [3], for some , A mechanism is called -DP if and only if it satisfies the equation:for all neighboring datasets and and for all possible sets of the mechanism's output .

Here, the only difference from -DP is the addition of . is called the failure probability, we can summarize its behaviour into two cases:

- With a probability of , we have -DP: .

- With a probability of , we get no privacy guarantee!

At first glance, this seems like a very scary definition, as there's a possibility that this algorithm will fail and leak sensitive data! Therefore in practice, this failure probability will be very small. Although it is still not enough to satisfy some DP purists (nothing ever does anyways), In the following sections we will see -DP mechanism that fail gracefully, and instead of releasing a sensitive dataset when it fails, instead they release the sensitive data with a weaker DP guarantee.

Gaussian Mechanism

Before we introduce the Gaussian mechanism, similar to the Laplace Mechanism we must define the sensitivity considered:

Definition 3: For a function , the sensitivity of is:

Where once again and are neighboring datasets.

This sensitivity is called the -sensitivity. Now, we can introduce the Gaussian Mechanism:

Theorem 5: For a function , the mechanism satisfies -DP:

The (privacy) parameter for a single privacy release satisfies the following inequality: hold for .

For the proof of such mechanism satisfies -DP, please see Theorem 6 of Gautam's Lecture notes[4]. In the following section we will outline the properties that Approximate DP has.

Important Properties of Approximate Differential Privacy

Similar to the properties in -DP, Approximate DP also offers such useful properties. Here, as some proofs are dense we will only state the results, those who are interested in the details can refer to the works by Dwork et al. [1] and Vadhan [5].

Post-Processing

The post-processing property still holds, and the proof is pretty straightforward as you replace the DP definition with the approximate DP definition:

Theorem 6: Let a mechanism be -DP, and let be a randomized mapping algorthimn, then is -DP.

Basic Composition

Theorem 7: Let be a sequence of k -DP mechanisms, where each Mechanism is -DP then is -DP.

Advanced Composition

As stated previously, if we used Advanced Composition, we can improve the aggregated/composed privacy budget of DP algorithms.

Theorem 8: Let be a sequence of k -DP mechanisms, where each Mechanism has the same privacy parameters and . then 's is -DP.

Here , and it's a value that can be manually changed and provides a relationship between the composed and . The exact proof of this bound is detailed in the paper by Dwork et al. [6] But the intuition can be found in Gautam's DP Lecture 6 [7]. Notice that Approximate DP is equivalent to DP when , this bound holds for both DP definitions. This which improves the privacy parameter to rather than the in basic composition.

Group Privacy

Last but not least, we also have group privacy in Approximate DP:

Theorem 9: Let a mechanism be -DP, thus for all datasets and that differ by data points, and for all possible sets of the mechanism's output .Here, the has an additional factor of .

Failure Probability in the Gaussian Mechanism

We mentioned in the previous section how the Gaussian Mechanism is not -DP, instead with failure probability , it is a graceful failure with a weaker DP guarantee.

In other words, with probability , the Gaussian Mechanism satisfies -DP instead, for some value .

As for what this value is, the proof Theorm 6 of Gautam's Lecture notes[4] provides a more detailed explaination.

Finally, we have talked about the most common properties in DP and approximate DP, and why it is widely recognized in both academics and industry as the gold standard privacy notion. In the next section, we will look at a more up-to-date Approximate DP method, Rényi Differential Privacy.

Rényi Differential Privacy[8]

In statistics, there are many divergence metrics used to compare probability distributions. As the DP definition is essentially a probability distribution comparison, researchers have thought of applying these metrics to the DP definition in hopes of improving the bounds of the DP properties stated previously. These metrics, such as the max divergence, KL divergence, and Rényi divergence have sparked variants of DP as they can be directly transformed back to the original DP definition. Here, we will look at the most widely used Rényi Differential Privacy (RDP), we will begin by introducing the definition of the Rényi Divergence:

Definition 4: For two probability distributions and , the Rényi Divergence is defined as:When , this is the KL-divergence and when we can recover the -DP definition (This is just pure mathematics). We can use Rényi Divergence at various other to have a better relaxation of DP while avoiding the failure probability in Approximate DP. We now formally introduce RDP which is first proposed by Mironov [8]:

Definition 5: A mechanism is called -RDP if and only if it satisfies the equation:for all neighboring datasets and . Here should be differentiated from the in the DP definitions.

The relationship between -RDP and -DP are as follows:

Theorem 10: Let a mechanism be -RDP, then for some , is -DP where .

Here, the can once again be manually changed by the data scientists but a small value might be more sensible. One of the most mechanism that achieves -RDP is, once again, the Gaussian Mechanism:

Theorem 11: For a function , the mechanism satisfies -DP:

One of the biggest advantage in using this RDP Gaussian Mechanism is that the composition can be tight, without the need for advanced composition:

RDP Composition

Theorem 12: Let be a sequence of k -RDP mechanisms, where each Mechanism is -RDP then is -RDP.

This bound is particularly powerful as even transforming it back to the original definition of -DP we get a much better privacy cost than advanced composition. Consequently, RDP has been used in Goggle's TensorFlow Privacy package for privacy accounting.

Conclusion

In this Wiki page, we provide a comprehensive overview and introduction to DP, a gold standard notion of privacy. We carefully defined privacy and showcased how DP is a powerful mathematical guarantee of this notion of privacy. There are many DP variants and mechanisms not introduced as it is far beyond the scope of this course, however, I highly recommend checking out Zero-Concentrated Differential Privacy by Bun and Steinke [9] as well as many DP papers in machine learning conferences as there are active researchers from all over the world who are in active pursuit of closing the gap between DP and non-DP settings. Ultimately, if DP doesn't degrade the performance, it can be trivial to deploy and privacy in ML will be achieved.

Annotated Bibliography

Put your annotated bibliography here. Add links where appropriate.

- ↑ 1.0 1.1 1.2 1.3 1.4 Dwork, Cynthia; Roth, Aaron (2014). The Algorithmic Foundations of Differential Privacy. Foundations and Trends in Theoretical Computer Science.

- ↑ 2.0 2.1 2.2 Kamath, Gautam (Fall 2020). "Lecture 4 —Intro to Differential Privacy, Part 2" (PDF).

- ↑ Dwork, Cynthia; Kenthapad, Krishnaram; Frank, McSherry; Ilya, Mironov; Moni, Naor (2006). [10.1007/978-3-540-34547-3_36 "Our data, ourselves: privacy via distributed noise generation"] Check

|url=value (help). Advances in Cryptology - EUROCRYPT 2006: 486–503 – via Springer Berlin Heidelberg. - ↑ 4.0 4.1 Kamath, Gautam (Fall 2020). "Lecture 5 — Approximate Differential Privacy" (PDF).

- ↑ Vadhan, Salil (2017). "The complexity of differential privacy". Tutorials on the Foundations of Cryptography: Dedicated to Oded Goldreich: 347–450 – via Springer International Publishing AG.

|chapter=ignored (help) - ↑ Dwork, Cynthia; N. Rothblum, Guy; Vadhan, Salil (2010). "Boosting and differential privacy". the 51st Annual IEEE Symposium on Foundations of Computer Science: 51–60 – via FOCS ’10.

- ↑ Kamath, Gautam (Fall 2020). "Lecture 6 — Advanced Composition" (PDF).

- ↑ 8.0 8.1 Mironov, Ilya (2017). "Renyi differential privacy" (PDF). IEEE: 263–275 – via arXiv.

- ↑ Bun, Mark; Steinke, Thomas (2016). "Concentrated differential privacy: simplifications, extensions, and lower bounds". Theory of Cryptography Conference: 635–658 – via Springer.

|

|