Course:CPSC522/Artificial Intelligence and Economic Theory

Artificial Intelligence and Economic Theory

Principal Author: Gudbrand Tandberg

Abstract

This article investigates the effects "the rise of AI" is having, and will have, on economic theory.

Builds on

This article can be seen as the third in a series investigating the interaction between Artificial Intelligence (AI) and Economics. In the first part, we gave a general introduction to the AI subfield of multi-agent systems. In the second part we introduced the application of AI techniques to the problem of financial forecasting. In this third and final article in the series we complete the journey by zooming out and considering how Economic Theory as a whole is and might be impacted by current research efforts in AI.


Introduction

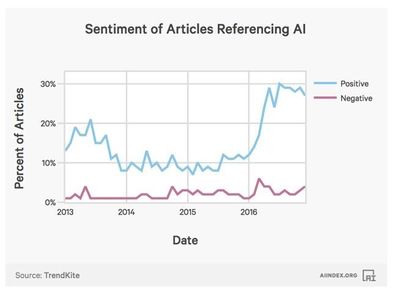

In recent years a renewed surge of interest, expectation and excitement has engulfed AI. Thanks to significant and impressive advances in several important application domains, as well as audacious promises from industry, academia and the media, it seems humanity is once again standing on the cusp of a new era. Dubbed "the rise of AI", the impending revolution is hypothesized to impact just about every aspect of life on earth, ranging from health care and transportation to politics, media, consumerism, law and order, entertainment and more. The impact on economic activity brought about by the onset of the Information Age, as the current period of human history is sometimes called, is staggering. Global financial markets, multi-national organizations, and personalized advertisements are only some examples. History has shown that radical and disruptive societal change, such as that exemplified by the Digital Revolution (the shift from mechanical and analogue electronic technology to digital electronics that took place roughly from the early 1950s to the early 2000s) and its continuation, "the rise of AI", is more often than not accompanied by a radical shift in economic thought and practice. The underlying hypothesis guiding the material selection in this article is that the rise of AI will engender large changes in the way economic theory is viewed, studied and practiced.

Besides compiling evidence supporting the above hypothesis, the article has four further objectives:

- Highlight and describe some of the areas of economic theory that are affected by the rise of AI.

- Point out specific techniques from AI that are currently being applied in economic theory research.

- Trace some strands of research dealing with questions of AI and Economics, serving as a non-comprehensive but representative review of the literature.

- Speculate on some of the trends moving forward based on the evidence collected in the article.

It must be noted that the current author has no formal training in economics and is not himself involved in any of the research discussed in the article. Nor does he enjoy any special position of privilege in any global economic institution or economic think-tank. Therefore, what follows is to be understood as a curiosity-driven exploration of an interesting and relevant application domain undertaken by an aspiring computer scientist. Hopefully, he will be able to look beyond the mountains of "AI-hype" and paint a critical and fact-based picture of the current state of affairs.

Background

The roots of modern economic theory can be traced back to Adam Smith (1776)[1]. Through his detailed description of the "market mechanism", Smith set the agenda for coming generations of theorists. At the center of his theory was the systematic analysis of the behavior of individual agents pursuing their self-interest under conditions of competition. Smith explicated the way in which the "market mechanism" was to direct this self-interested behavior towards a desirable aggregate outcome for society as a whole.

Fast-forward a century, and the Industrial Revolution had taken the world by storm. Smith's "market economy" model had been refined, formalized, and to a large extent implemented on a global scale. Economic theory had by now grown into a rigorous calculus of consumers and producers, supply and demand, marginal utility, costs of production and rational agents. The emergent approach to economics, dubbed the "neo-classical model", was brought into a canonical form in the works of Pareto (1896)[2], Walras (1896)[3] and Marshall (1898)[4], among others.

Example: A private ownership economy

To get things started, we present an example of a simple yet powerful economic model. This is the model of Gerard Debreu (later known as the Arrow-Debreu model), as presented in the seminal economics book "Theory of Value: An Axiomatic Analysis of Economic Equilibrium"[5].

A private ownership economy is defined by the following ingredients:

- A set of producers $j = 1, \dots, n$

- A set of consumers $i = 1, \dots, m$

- A set of commodities, or goods, $h = 1, \dots, l$

- A commodity space, identified with the positive orthant, $\mathbb{R}^l_+$

- For each consumer $i$, a consumption set $X_i$ describing feasible consumptions of each good

- For each consumer $i$, a preference relation $\preceq_i$ which defines an ordering on the set $X_i$ of all feasible consumptions

- For each producer $j$, a production set $Y_j$ describing the feasible productions of each good for each producer

- The total resources $\omega$

- For each consumer $i$, their resources $\omega_i$

- For each producer $j$ and each consumer $i$, shares $\theta_{ij}$

The constraints on the variables are $\theta_{ij} \geq 0$ (a consumer can only own a non-negative share in a company), $\sum_{i=1}^{m} \theta_{ij} = 1$ for each $j$ (each producer is wholly owned), and $\sum_{i=1}^{m} \omega_i = \omega$ (the sum of individual resources equals the total available resources). This model provides a concise description of some important features of an economy, yet brushes other important features neatly under the rug.

An equilibrium of a private ownership economy is an $(m+n+1)$-tuple $((x_i^*), (y_j^*), p^*)$ of points in the commodity space such that

- $y_j^*$ maximizes profit relative to $p^*$ on $Y_j$ for every $j$

The point $p^*$ is called the price system. One of the major tenets of classical economics was that the price system reflects all available information about the market goods. We will revisit this statement in a later section. The fundamental question is: given a private ownership economy, does it have an equilibrium? This was one of the main questions guiding economists through the first half of the last century. General equilibrium theory, as the theory became known, sought to provide necessary and sufficient conditions for economic models to have efficient equilibria. Efficiency in economics typically means Pareto efficiency, which describes an outcome where it is impossible for any agent to increase its utility without at least one other agent having their utility decreased.
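To make the notion of an equilibrium price system concrete, the following is a minimal sketch for a much simpler setting than the full model: a pure exchange economy (no producers) with two goods, in which every consumer has Cobb-Douglas preferences. The Cobb-Douglas form, the numbers, and the names `excess_demand_good1` and `equilibrium_price` are assumptions made for illustration only and do not come from Debreu's text. Good 2 serves as the unit of account, and the price of good 1 is found by bisection on aggregate excess demand.

```python
def excess_demand_good1(p1, alphas, endowments):
    """Aggregate excess demand for good 1 when good 2 has price 1.

    Assumption: consumer i has Cobb-Douglas utility x1**alpha_i * x2**(1 - alpha_i),
    so they spend the fraction alpha_i of their wealth on good 1.
    """
    total = 0.0
    for alpha, (e1, e2) in zip(alphas, endowments):
        wealth = p1 * e1 + e2            # market value of the endowment
        total += alpha * wealth / p1 - e1
    return total


def equilibrium_price(alphas, endowments, lo=1e-6, hi=1e6, iterations=200):
    """Bisection on the price of good 1 (excess demand falls as the price rises)."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if excess_demand_good1(mid, alphas, endowments) > 0:
            lo = mid                     # good 1 is over-demanded: raise its price
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical two-consumer economy: (endowment of good 1, endowment of good 2).
alphas = [0.6, 0.2]
endowments = [(3.0, 1.0), (1.0, 5.0)]
p_star = equilibrium_price(alphas, endowments)
```

At the price returned by the search, the two consumers' demands for good 1 add up to the total endowment of good 1, and by Walras' law the market for good 2 clears as well.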

Computers entered the economy almost as soon as they had been invented. As it turns out, finding efficient resource allocations, equilibrium price systems and optimal actions for producers and consumers all give rise to interesting computational problems. At the end of the day, these problems usually reduce to some sort of constrained optimization. However, the idealized economic calculus of general equilibrium theory did not always fit into the picture of the real world, so new tools were needed. In the 70s and 80s, game theory entered mainstream economics as a means of overcoming these problems by modeling rational agents in uncertain "game environments". The game theoretic approach proved useful for modeling concepts such as uncertainty, asymmetric information, beliefs, strategic behavior, and more[6]. Later, the introduction of mechanism design (also sometimes referred to as "reverse game theory") set the question of designing economic game environments at the centre of study. The 90s saw the rise of the Internet, global digital marketplaces, distributed super-computers and increasingly "intelligent" software. Moving ahead to "modern times", a central feature of the current state of the world is the availability of data. Given this state of affairs, it seems a natural next step to see what happens when AI is added to the mix. In the next section we briefly review some of the strands of research emerging in this interdisciplinary environment of economics, AI, distributed systems and game theory.

Literature Review

The first papers to take seriously the applications of AI to economic theory came about in the early 90s. Some early examples include "Artificial adaptive agents in economic theory" (1991)[7] and "Artificial Intelligence and Economic Theory" (1994)[8]. Both papers discuss the question of modeling the economic behavior of idealized humans: economic agents, or homo oeconomicus, as they are sometimes called. In the latter paper, it is established that the actions an economic agent chooses depend on preferences and perceived opportunities, and that certain characteristics of aggregate behavior might be derivable from these individual preferences. It is argued that in a formal model, actions will be a function of perceived opportunities, and perceived opportunities a function of earlier actions. "The crucial issue, then, is the specification of such functions, mapping the agents' past actions and outcomes into current actions". The analogy between this basic economic question and the basic questions of agent-based artificial intelligence is clear; indeed, interdisciplinary efforts in this domain seem natural and inevitable.

Related questions are studied in the field of market design. An interesting early paper in algorithmic market design with artificial agents is "Self-organized markets in a decentralized economy" (1996)[9]. In the paper, "a model of decentralized trade is simulated, with firms that produce a given commodity, and consumers who repeatedly wish to purchase one unit of that commodity". The authors use a combination of genetic algorithms and classifier systems to model each individual agent separately as a "machine". The questions examined are: "in how far do these individual agents create opportunities to trade?", "how does the information concerning these opportunities spread through the economy?", and "to what extent are these opportunities traded away?" In other words: "how do self-organized markets emerge in the economy, and what are their characteristics".

Historically, AI has been interested mainly in the architecture of single agents. Work on natural language processing, planning, knowledge representation, reasoning and learning all focuses on how single agents carry out sophisticated tasks. In the book "Rules of encounter: designing conventions for automated negotiation among computers" (1994)[10] the authors point out that when multiple agents interact, inherently different questions arise: "how can these agents come to agreements, when should they share information, what role do promises and threats have", etc. Answering these and similar questions quickly came into vogue in the emergent subfield of distributed artificial intelligence, and later, multi-agent systems. An early paper marking the beginnings of these efforts was the Contract Net Protocol for distributed communication and problem solving[11].

Throughout the 90s, the study of the questions introduced in the preceding paragraphs gathered momentum and began to be collected under the headings agent-based computational economics and artificial economics. At the heart of these new approaches was the modeling of economic processes as dynamic systems of interacting agents. An early review of this literature can be found in "Agent-based computational finance: Suggested readings and early research" (1999)[12], and more recently, "Agent-based computational economics: A constructive approach to economic theory" (2006)[13].

Although the "artificial" simulation- and agent-based approach has still not reached mainstream adoption, some believe this is about to change. Two of the common criticisms of the approach (as pointed out in the paper "Why are economists skeptical about agent-based simulations?" (2005)[14]) concern the interpretability and generalizability of simulated dynamics. Recently, more positive opinions have started to emerge. Examples include the papers "Tipping points in macroeconomic agent-based models" (2015)[15], and "Agent-Based Macroeconomic Modeling and Policy Analysis: The Eurace@Unibi Model" (2013)[16]. To quote from the latter paper: "our assessment is that agent-based models in economics have passed the proof-of-concept phase and it is now time to move beyond that stage. It has been shown that new kinds of insights can be obtained that complement established modeling approaches".

Over the years, several journals and conferences have appeared to address the broad range of research questions falling under the category of computational economics. The Journal of Economic Dynamics and Control[17], which has been around since 1979, provides an outlet for "publication of research concerning all theoretical and empirical aspects of economic dynamics and control as well as the development and use of computational methods in economics and finance". The Artificial Economics conference series[18] is a series of symposia on "agent-based approaches of Economics and Finance" that has been held every year since 2005. Topics covered include artificial market modeling, complexity of artificial markets, progress in artificial economics, self-organization and more. The Journal of Economic Interaction and Coordination[19], which has been around since 2006, is the official journal of the Association of Economic Science with Heterogeneous Interacting Agents. It is dedicated to "the vibrant and interdisciplinary field of agent-based approaches to economics and social sciences". Finally, the ACM Transactions on Economics and Computation[20], published since 2013, is a journal focusing on the intersection of computer science and economics. Topics include agents in networks, algorithmic game theory, computation of equilibria, computational social choice, cost of strategic behavior, cost of decentralization, design and analysis of electronic markets, learning in games and markets, mechanism design, and more.

In conclusion, it is clear that research efforts in the intersection of computer science and economics are thriving, and are increasing every year.

Methods

Agent-based Computational Economics

For a well-written and general introduction to the field, as well as a wealth of references and suggested readings, we refer the reader to the website [21]. The author formulates five essential properties of economies that motivate the approach of using artificial agents to study them: "first, they consist of heterogeneous interacting participants characterized by distinct local states (data, attributes, methods) at each given time. Second, they are open-ended dynamic systems whose dynamics are driven by the successive interactions of their participants. Third, human participants are strategic decision-makers whose decision processes take into account past actions and potential future actions of other participants. Fourth, all participants are locally constructive, i.e., constrained to act on the basis of their own local states at each given time. Fifth, the actions taken by participants at any given time affect future local states and hence induce system reflexivity".

One modeling approach, as presented in [22], proceeds as follows:

- Starting point: a population of heterogeneous agents

- Theory: write behavioral rules

- Codification: translate the rules into lines of code

- Validation: calibrate the parameters, run simulations, analyze the emerging properties of the model, compare the properties with real world phenomena
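The four steps above can be sketched in a few lines of code. The following toy model is not taken from [22]; the agent types, the price-adjustment rule and all parameter values are hypothetical and chosen only to show the shape of such a simulation. Consumers differ in their willingness to pay, firms follow a simple adaptive pricing rule, and the recorded history is what a validation step would compare with real-world data.

```python
import random
from dataclasses import dataclass


@dataclass
class Consumer:
    reservation_price: float      # heterogeneous willingness to pay


@dataclass
class Firm:
    price: float
    stock_per_period: int = 5     # hypothetical capacity
    step: float = 0.05            # hypothetical price-adjustment size

    def adapt(self, units_sold: int) -> None:
        # Behavioral rule: raise the price after selling out, cut it otherwise.
        if units_sold == self.stock_per_period:
            self.price *= 1 + self.step
        else:
            self.price *= 1 - self.step


def simulate(n_consumers=50, n_firms=5, periods=200, seed=0):
    rng = random.Random(seed)     # fixed seed so that runs are reproducible
    consumers = [Consumer(rng.uniform(0.5, 1.5)) for _ in range(n_consumers)]
    firms = [Firm(price=rng.uniform(0.5, 1.5)) for _ in range(n_firms)]
    history = []
    for _ in range(periods):
        sold = [0] * n_firms
        # Consumers arrive in random order and each visits one random firm,
        # so every agent acts on local information only.
        for consumer in rng.sample(consumers, len(consumers)):
            j = rng.randrange(n_firms)
            firm = firms[j]
            if sold[j] < firm.stock_per_period and firm.price <= consumer.reservation_price:
                sold[j] += 1
        for firm, units in zip(firms, sold):
            firm.adapt(units)
        average_price = sum(f.price for f in firms) / n_firms
        history.append((average_price, sum(sold)))
    return history


history = simulate()
average_price, units_traded = history[-1]
```

Each entry of `history` holds the average posted price and the number of units traded in one period; plotting these series is one way to look for emergent properties such as price convergence.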

Each of the steps above can be considered an interesting research direction in its own right. For example, how should one model an economic agent, and what different sorts of agents exist in the economy? The classical approach is to model economic agents as "Von Neumann-Morgenstern expected utility maximizers". This approach is still useful when considering truly artificial agents, i.e. when not attempting to model human behavior, but is considered unrealistic when the goal is to understand human agents. In this case, ideas from behavioral economics are applied to model boundedly rational, human-like agents. In a recent National Bureau of Economic Research paper "Artificial intelligence and behavioral economics" (2017)[23], the author provides some speculative ideas about "how artificial intelligence (AI) and behavioral economics may interact, particular in future developments in the economy and in research frontiers." The ideas are "that ML can be used in the search for new “behavioral”-type variables that affect choice. [...] that some common limits on human prediction might be understood as the kinds of errors made by poor implementations of machine learning [...] that it is important to study how AI technology used in firms and other institutions can both overcome and exploit human limits". The author goes on: "the fullest understanding of this tech-human interaction will require new knowledge from behavioral economics about attention, the nature of assembled preferences, and perceived fairness".

As an example of what can be learned in the last step (validation), "Artificial intelligence and asymmetric information theory" (2015)[24] finds that in simulations of markets consisting of truly artificial agents, markets exhibit less informational asymmetry as well as higher efficiency. Because markets contain more and more artificial agents, results such as these are important for guiding future research efforts in economic theory. An interesting theoretical idea is the adaptive market hypothesis, proposed in 2004 by Dr. Andrew Lo, which attempts to "reconcile economic theories based on the efficient market hypothesis [...] with behavioral economics, by applying the principles of evolution to financial interactions: competition, adaptation and natural selection". We might speculate that theories such as these will need to be taken more seriously in future work.

Artificial Economics

Artificial economics is a "bottom-up and generative approach of agent-based modeling developed to get a deeper insight into the complexity of economics", or more simply, it is "a research field that aims at improving our understanding of socioeconomic processes with the help of computer simulation". While challenges facing the artificial approach abound, the potential advantages are also huge. The following table, taken from Wikipedia, summarizes the potential benefits of using an artificial approach to computational economics, compared with the classical economic approach.

| Traditional restrictions imposed to ensure mathematical tractability | Features that can be explored with Computer Simulation (Artificial Economics approach) |
|---|---|
| Representative agent or a continuum of agents | Explicit and individual representation of agents (agent-based modelling) |
| Rationality (and sometimes common knowledge of rationality) | Adaptation at the individual level (learning) or at the population level (evolution). Satisficing |
| Perfect information | Local and asymmetric information |
| Focus on static equilibria | Focus on out-of-equilibrium dynamics |
| Determinism | Stochasticity |
| Top-down analysis | Bottom-up synthesis |
| Random or complete networks of interaction | Arbitrary (and potentially endogenous) networks of interaction |
| Minor role of physical space | Explicit representation of physical space |
| Infinite populations | Finite populations |
| Preference for uniqueness of solutions | Path dependency and historical contingency |

The higher level goals of artificial economics are uncovering causality, i.e. inferring causal relations between observables, and prediction. To achieve these goals, artificial economists employ a wide range of tools and techniques, machine learning algorithms making up some of these. As we have seen in countless other fields, endowing agents with the ability to learn from data can give huge benefits. One of the main use-cases of machine learning in economics is prediction. Economic forecasting is the process of making predictions about the economy. Forecasts are hugely important for almost all economic decision-making, and play an important role in determining such things as strategies, policies and budgets for all kinds of economic agents. Economic forecasting can be applied at many scales; a rough distinction is the classical delineation between macro-modeling, dealing with aggregate and large-scale quantities such as GDP, inflation and unemployment, and micro-modeling, dealing with companies and individuals, supply, demand, production, and consumption. Large-scale macro-modeling is an important task for such organizations as the International Monetary Fund, the World Bank, the OECD, national governments and central banks.

At the same time, prediction is one of the fundamental challenges addressed by current AI research. Some researchers posit that future advances in AI will depend on developing a more general theory of prediction learning. The idea is to move beyond "simple" one-step prediction techniques such as regression and temporal-difference (TD) learning, in order to build truly scalable multi-step prediction methods. As reinforcement learning guru Dr. Richard Sutton says in a 2017 lecture to future AI researchers, "you really want to be able to learn from raw data, you want to be scalable. So if these things that we love [TD and supervised learning] are not scalable, what is scalable? [...] Well my answer is simple, it's what I call prediction learning. Prediction learning means learning to predict what will happen"[25]. Sutton goes on to describe this as a form of "unsupervised supervised learning". What he means by this is that there are targets, but that these targets need not be gathered or processed by humans, "we just wait and see what happens". We can imagine that one approach to prediction learning might look like some hierarchical combination of techniques from reinforcement learning, online learning, structured prediction, causal reasoning, and more. However, ML prediction methods introduce a number of difficulties involving the design and choice of learning algorithms. Problematic forecasts are especially dangerous in economics. As the authors of "Artificial Economics: What, Why and How" (2015)[26] point out, black-box predictions are to be treated with extra caution and skepticism. "[Black-box predictions]–whilst potentially useful– cannot be a final stage or ultimate goal in Science. [...] if a perfect-predicting device were suddenly discovered or given to us by some superior form of intelligence, Science would not silently disappear. Instead, much scientific effort would be devoted to finding out how the device worked and why it predicted so well". For an interesting philosophical discussion of the ideas discussed in this section, see "Rationality and Prediction in the Sciences of the Artificial: Economics as a Design Science" (2008)[27].

Prediction Markets

Prediction markets (also known as information markets, decision markets, event derivatives, or virtual markets) are markets created for the purpose of trading on the outcome of events. By allowing individual agents to form beliefs and place bets, prediction markets are an interesting approach to aggregating distributed, privately held information to make predictions about the future. The market prices resulting from the market process are then supposed to indicate what the crowd believes the probability of a given event to be.

Interestingly, besides being a useful tool for belief aggregation and prediction, the concept of a prediction market might also provide a useful tool for advancing AI itself. In a recent paper, "Machine Learning Markets" (2013)[28], prediction markets are used as a method of implementing machine learning models in a distributed way. It is shown that the markets studied "can implement model combination methods used in machine learning, such as product of expert and mixture of expert approaches as equilibrium pricing models by varying agent utility functions. They can also implement models composed of local potentials, and message passing methods. Prediction markets also allow for more flexible combinations, by combining multiple different utility functions. Conversely, the market mechanisms implement inference in the relevant probabilistic models. This means that market mechanism can be utilized for implementing parallelized model building and inference for probabilistic modeling." The paper goes on: "extending machine learning methods to more and more complicated scenarios will require increasing the flexibility of the modeling approaches. It may well be desirable to build models from inhomogeneous units as standard, and experiment with more flexible compositional methods".

Example: Machine Learning Market

To give an idea of the approach, we provide a light introduction. Suppose we have a space $\Omega$ of possible outcomes of the set of relevant future occurrences. The elements $\omega$ of $\Omega$ are called events, and one, and only one, of those events will be the actual outcome. Suppose we also have a $\sigma$-algebra $\mathcal{F}$ of subsets of $\Omega$. A set of market goods is enumerated by $k = 1, \dots, N_G$, each associated with a set $A_k \in \mathcal{F}$ and taken to be a bet that pays out 1 Grubnick (the agreed upon currency in the market) if the outcome is in $A_k$. A set of agents is enumerated by $i = 1, \dots, N_A$. Each agent can buy or sell any of the market goods. Hence each agent has a position vector (or stock holding) $s_i$ in all the goods available. $s_{ik}$ is the total number of items agent $i$ has of good $k$. $s_{ik} < 0$ indicates a short position in that good, i.e. a pessimistic bet. Each agent also has an associated utility function $U_i(W)$ defined in terms of the currency, denoting the utility to the agent of a wealth of $W$. Each agent will also have a belief, that is a probability measure $P_i$ defined on $(\Omega, \mathcal{F})$. We can also consider agents who have beliefs defined only on a sub-$\sigma$-algebra $\mathcal{F}_i \subseteq \mathcal{F}$, that is, on a subspace of the probability space $(\Omega, \mathcal{F})$. These are called local beliefs.

Let $W_i$ denote the current wealth of agent $i$ and let the cost of goods be denoted by the cost vector $c$. Then the rational agent will choose a utility maximizing position $s_i$ in each of the goods he or she has an opinion about. However, because the outcome is uncertain, the actual utility of holding the goods is a weighted sum of the utility associated with each possible outcome, weighted by the agent’s belief about the probability of that outcome. This is written as

$$s_i^* = \arg\max_{s_i} \sum_{\omega \in \Omega} P_i(\omega)\, U_i\Big(W_i + \sum_{k} s_{ik}\,\big(r_k(\omega) - c_k\big)\Big)$$

subject to $s_{ik} = 0$ if $A_k \notin \mathcal{F}_i$. Here, $r_k(\omega)$ is the return of a bet on good $k$ in case of outcome $\omega$ and is 1 if $\omega \in A_k$ and zero otherwise. For more details on how ensemble machine learning models can be implemented in this framework we refer to the original paper. The main idea is that when agents act rationally, the competitive equilibrium assumption (borrowed from classical General Equilibrium Theory) ensures that the predicted outcome corresponds to an optimal (in a Bayesian sense) output of some machine learning model.
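As an illustration of how an equilibrium price can combine individual beliefs, the following sketch treats the simplest possible case: a single good that pays 1 unit of currency if one binary event occurs, traded by agents who all have logarithmic utility. The choice of log utility, the example numbers and the function names are assumptions made here for illustration and are not a reproduction of the paper's code. Under these assumptions an agent with belief $b$ and wealth $W$ facing price $c$ maximizes $b \log(W + s(1 - c)) + (1 - b)\log(W - sc)$, and the first-order condition gives the holding $s = W(b - c) / (c(1 - c))$.

```python
def demand(belief, wealth, price):
    """Utility-maximizing holding of a log-utility agent (negative = short)."""
    return wealth * (belief - price) / (price * (1.0 - price))


def clearing_price(beliefs, wealths, iterations=100):
    """Bisection for the price at which the agents' net demand is zero."""
    lo, hi = 1e-9, 1.0 - 1e-9
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        net = sum(demand(b, w, mid) for b, w in zip(beliefs, wealths))
        if net > 0:
            lo = mid      # more buyers than sellers: the price must rise
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical agents: probabilities assigned to the event, and wealth.
beliefs = [0.9, 0.6, 0.2]
wealths = [1.0, 2.0, 1.0]
price = clearing_price(beliefs, wealths)
weighted_mean = sum(b * w for b, w in zip(beliefs, wealths)) / sum(wealths)
```

Setting the sum of the holdings to zero shows that in this special case the clearing price equals the wealth-weighted average of the agents' beliefs, which is the kind of mixture-style combination of individual predictors that the quoted passage refers to.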

Market-based control

Another interesting and interdisciplinary approach is the field of "market-based control" (MBC). In a similar fashion to prediction markets, MBC applies ideas from economic theory to problems of computer science. According to "Market-based control: A paradigm for distributed resource allocation" (1996)[29], MBC is a paradigm for "controlling complex systems that would otherwise be very difficult to control, maintain, or expand, using an abstract definition of a market as a system with locally interacting components that achieve some overall coherent global behavior." The paradigm has been successfully applied to such problems as operating system memory allocation, saving energy, and machining task allocation in manufacturing.
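A minimal sketch of the idea, under assumptions of our own: a fixed amount of a resource (say, memory) is shared among processes that each hold a budget of artificial currency and a maximum need, and an auctioneer searches for the lowest price at which total demand fits the capacity. The demand rule, the names and the numbers are hypothetical and are not taken from [29].

```python
def allocate(capacity, budgets, needs, iterations=100):
    """Find a price at which total demand fits the capacity, then allocate."""

    def demands(price):
        # Each process buys as much as its budget allows, up to its need.
        return [min(need, budget / price) for budget, need in zip(budgets, needs)]

    lo = 1e-9
    hi = sum(budgets) / capacity + 1.0    # at this price demand is below capacity
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if sum(demands(mid)) > capacity:
            lo = mid                      # over-subscribed: raise the price
        else:
            hi = mid                      # feasible: try a lower price
    return hi, demands(hi)


# Hypothetical example: 100 units of memory shared by three processes.
price, shares = allocate(capacity=100.0,
                         budgets=[10.0, 30.0, 60.0],
                         needs=[80.0, 80.0, 80.0])
```

No process needs to know anything about the others: the price is the only signal passed around, and the resulting allocation is proportional to the budgets whenever no process hits its maximum need.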

Speculation

Ontologies

An interesting approach that seems to not yet have received much attention is to deal with economic questions using probabilistic models, knowledge representation and probabilistic reasoning. This is a general approach to acting under uncertainty that works by endowing artificial agents with a representation of the world in terms of objects and relations between these objects. This seems like an obvious approach, but it should be noted that in most "typical" machine learning approaches such as regression, representations of objects are completely absent. An example of a system based on knowledge representation and probabilistic reasoning is IBM's Watson system. While initially trained to reach mastery in the TV game show "Jeopardy", Watson later moved on to the field of medicine. Over 10000 publications appear weekly in the PubMed database, and there also exists a huge amount of health-records of patients, symptoms, treatments and outcomes available to researchers. Inferring general truths from this data seems an impossible task for humans, and perhaps even more so for computers. Real-world data is noisy, heterogeneous and machine-unreadable, for a variety of reasons. To overcome this problem, computer scientists use "ontologies" to standardize terminology. While philosophers define ontology as "the study of being", in computer science, an ontology is simply a model of (part of) the world consisting of formal namings and definitions of types, properties, and interrelationships. Thus by enforcing an ontology on a scientific domain, one provides a way for artificial agents to "read" and "understand" papers from different journals, disciplines and countries in a unified way. The agents can then be told to build up a knowledge-base of facts about the world, and based on these facts to come up with hypotheses about the world and to make predictions about the world based on these hypotheses. Thus the process can be seen as a generalization of the scientific method, a "meta-science" whereby individual theories and observations are mapped together onto a larger whole. In Watson's case, users can for example query the system by providing inputs in the form of health-records, and then have the system compute the most likely cause of the patient's symptoms, and also the treatment most likely to cure the patient. The existence of an underlying ontology of medical terms, treatments and processes is essential for the success of such a system. The extension of this approach to economic domains seems natural and promising. The fact that Watson's lead scientist Dr. David Ferrucci recently moved to Bridgewater Associates, the world's largest hedge fund, seems to indicate that this work might already be on the way.

A scan through the internet archives reveals that most co-occurrences of "ontology" and "economics" happen in the "Philosophy of Economics" literature, although some work has been published using the computer science definition. In "An ontology of economic objects"[30], the author aims to lay the groundwork for an ontological description of economic reality consisting of economic objects such as goods, commodities, money, value, price, and exchange. The paper "Towards a reference ontology for business models"[31] proposes an ontology of business models using concepts from three previously established business model ontologies. The basic concepts in the ontology are actors, resources, and the transfer of resources between actors. Only time will tell whether this kind of modeling approach will be useful in practice and if it will ever see mainstream adoption. Critics of the approach point to intrinsic limits on the expressiveness of language as a representation of the world, limits that extend from natural language to computer languages in an obvious way. The Sapir-Whorf hypothesis from linguistics states that the structure of a language "determines or greatly influences the modes of thought and behavior characteristic of the culture in which it is spoken". Or, as Wittgenstein put it: "the limits of my language are the limits of my world". These objections will always exist, and are perhaps in a way intrinsic to scientific progress. The use of flexible or adaptive ontologies partly overcomes this, but at the end of the day, humans and computers alike must take care when expressing knowledge in language and when interpreting language itself.
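To give a feel for what the computer science notion of an ontology amounts to in code, here is a small sketch built around the three basic concepts mentioned above: actors, resources, and transfers of resources between actors. The class and field names, the helper `net_positions` and the example data are our own illustrative choices and are not the schema proposed in [31].

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    name: str


@dataclass(frozen=True)
class Resource:
    name: str
    unit: str


@dataclass(frozen=True)
class Transfer:
    sender: Actor
    receiver: Actor
    resource: Resource
    quantity: float


def net_positions(transfers):
    """Net amount of each resource gained or lost by each actor."""
    positions = defaultdict(float)
    for t in transfers:
        positions[(t.sender, t.resource)] -= t.quantity
        positions[(t.receiver, t.resource)] += t.quantity
    return dict(positions)


# Hypothetical facts expressed in the shared vocabulary.
bakery, customer = Actor("bakery"), Actor("customer")
bread, money = Resource("bread", "loaf"), Resource("money", "currency unit")
facts = [
    Transfer(bakery, customer, bread, 2.0),
    Transfer(customer, bakery, money, 6.0),
]
positions = net_positions(facts)
```

Because every fact is expressed with the same types, a program can answer simple queries (here, who ended up with what) without knowing where the individual facts came from, which is the practical benefit the paragraph above attributes to a shared ontology.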

Economics in the Data Age

Just as the Digital Revolution marked the onset of the Information Age, we can imagine "the rise of AI" as marking a transition to a new period, the "Data Age". "Data", according to Wikidata, is defined as "a precursor to information, meaning that information can be inferred or derived from data"[32]. While data is that which just is (e.g. sensory stimuli or measurements), information and knowledge are the result of transforming data into something utilizable. "Big Data" has recently become an important buzzword in the discourse on emerging technology. The expectations of and the faith in data are not so surprising when one remembers the old saying "knowledge is power". Governments need data to govern, companies need data to make business decisions and individuals need data to share with their friends online. The theory of the knowledge economy dictates that "rules and practices that determined success in the industrial economy need rewriting in an interconnected, globalized economy where knowledge resources such as trade secrets and expertise are as critical as other economic resources"[33].

Another term used to describe the changing state of affairs is the social data revolution. According to Wikipedia, "social data refers to data individuals create that is knowingly and voluntarily shared by them"[34]. The negative media attention that has recently been directed towards privacy issues involving personal data and private companies is perhaps not entirely balanced when it comes to the potential value of this underlying data. In a globally connected world, each individual leaves a trail of data in the form of clicks, views, likes, cookies, purchases, searches, pokes and uploads. Naturally, the question of who can see, own and use this data is of vital concern, and policies and agreements need to be put in place. At the same time it is equally important to acknowledge the potential social utility of this data, and mechanisms for safely extracting and utilizing it need to be a chief concern of future welfare economists. The transformation of data into knowledge is a key component in economic decision making for companies, nations and individuals alike, and the social data revolution "enables not only new business models like the ones on Amazon.com but also provides large opportunities to improve decision-making for public policy and international development"[35]. As we saw in earlier sections, AI provides a number of tools for this transformation.

AI, Information and the Role of the State

The first study to take the question of information in economics seriously was by Friedrich von Hayek, in his 1945 essay "The use of knowledge in society". The essay opens:

What is the problem we wish to solve when we try to construct a rational economic order?

On certain familiar assumptions the answer is simple enough. If we possess all the relevant information, if we can start out from a given system of preferences and if we command complete knowledge of available means, the problem which remains is purely one of logic.

Hayek goes on to argue that the antecedents in the preceding assumptions are decidedly false, and that "the 'data' from which the economic calculus starts are never for the whole society 'given' to a single mind which could work out the implications, and can never be so given". Therefore, he argues, the task of centralized economic planning is an impossible one. During the second half of the last century, the question of the role of information in economics gradually became one of the most widely studied in economic theory, and its rise has been regarded as a "paradigm shift" in economic thought[36]. The emergent school of thought supported Hayek's presentiments, and the distributed nature of economic information became one of the central arguments in "the socialist calculation debate" for a free-market economy over communist or socialist central planning. In this school, it was widely believed that the market model provided a fair and efficient model of aggregate economic behavior and that the sum of available information was indeed reflected through the price system. As the workings of market failures eventually came to be understood, it was agreed that the role of government in economic planning was to be measured, limited mainly to counteracting the well-known market failures. One could argue, however, that the time might be ripe for revisiting the question of the role of the state.

The following exercise, given by economics professor Bryan Caplan to his students, illustrates another example of why the interaction between AI and economics is of importance when considering the role of the state: "Suppose artificial intelligence researchers produce and patent a perfect substitute for human labor at zero [marginal cost]. Use general equilibrium theory to predict the overall economic effects on human welfare before AND after the Artificial Intelligence software patent expires". The professor's answer: "while the patent lasts, [...] Humans who only own labor are worse off, but anyone who owns a home, stocks, etc. experiences offsetting gains. [...] When the patent expires, this effect becomes even more extreme. With 0 fixed costs, wages fall to MC=0, but total output—and GDP per human—skyrockets. Human owners of land, capital, and other non-labor assets capture 100% of all output. Humans who only have labor to sell, however, will starve without charity or tax-funded redistribution"[37]. This is a rather unrealistic and extreme example, but it exemplifies the kinds of questions governments will be faced with after the rise of AI.
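The direction of the effects described in the quoted answer can be illustrated with a toy calculation. The sketch below is not Caplan's general equilibrium derivation; it assumes a one-sector Cobb-Douglas economy with output Y = K^α (H + A)^(1-α), where H is human labor, A is AI labor that substitutes perfectly for it, and the competitive wage equals the marginal product of labor. The functional form, the value α = 0.3, and all quantities are assumptions chosen purely for illustration.

```python
def toy_economy(ai_labor, human_labor=100.0, capital=100.0, alpha=0.3):
    """Toy Cobb-Douglas economy where AI labor perfectly substitutes for human labor.

    All parameter values are illustrative assumptions, not estimates.
    """
    total_labor = human_labor + ai_labor
    # Output: Y = K^alpha * L^(1 - alpha), with L = human + AI labor.
    output = capital ** alpha * total_labor ** (1 - alpha)
    # Competitive wage = marginal product of labor = (1 - alpha) * K^alpha * L^(-alpha).
    wage = (1 - alpha) * capital ** alpha * total_labor ** (-alpha)
    return {
        "output": output,
        "wage": wage,
        "human_labor_income": wage * human_labor,
        "capital_income": alpha * output,
    }


if __name__ == "__main__":
    # Increase the supply of AI labor and watch wages fall while output rises.
    for ai in (0.0, 1e3, 1e6, 1e9):
        result = toy_economy(ai)
        print(
            f"AI labor {ai:>12.0f}: "
            f"output {result['output']:.1f}, "
            f"wage {result['wage']:.4f}, "
            f"human labor income {result['human_labor_income']:.2f}, "
            f"capital income {result['capital_income']:.1f}"
        )
```

In this toy setting, as the supply of AI labor grows the wage approaches zero and human labor income shrinks, while total output and the income accruing to capital owners grow without bound, which mirrors the qualitative pattern in the quoted answer.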

In the recent paper "Digital Economics" (2017) from the National Bureau of Economic Research, Goldfarb and Tucker recognize "reduced search costs" as one of five main trends shaping the future of digital economics[38]. The consequence of this, that the potential for information acquisition has increased dramatically, has implications for AI. If the potential for aggregation of distributed data surpasses a critical threshold, it might be possible for a centralized government to perform economic planning in an efficient way using AI, or at least to rely partly on AI-based decision support. When taken to the extreme this idea will certainly meet with public outcry, although this might be more a problem for the next century. With skepticism towards self-driving cars at the levels they are today, it is not too difficult to imagine the public reaction to the idea of self-governing states. This is understandable of course; humanity has learned that belief in benevolent dictatorship is nothing short of quixotic, and the prospect of Skynet governing the markets is downright frightening. Furthermore, the theory of instrumental convergence suggests that an intelligent agent with apparently harmless goals can act in unexpectedly harmful ways. For example, a computer with the sole goal of computing efficient resource allocations could attempt to turn the entire Earth into a computer so that it can succeed in its calculations.

However, it is quite plain that doomsday theories such as instrumental convergence, AI-takeover and "the singularity" occupy the minds of the public much more than they do those of AI researchers, and that there is still room for plenty of useful improvements and applications before these concerns need to be taken too seriously (for some more concrete concerns in AI safety, see [39]). At the same time, professional opinions on the matter diverge widely, as is evident from inspecting this year's "AI Index"[40] report, a meta-survey illustrating "just how broadly AI is being investigated, studied, and applied", which notes that "the field of AI is still evolving rapidly and even experts have a hard time understanding and tracking progress across the field". It is not difficult to find concerned experts; for example, Sir Tim Berners-Lee describes his "nightmare scenario where AI runs the financial world" as when "AI starts to make decisions such as who gets a mortgage, that's a big one. Or which companies to acquire and when AI starts creating its own companies, creating holding companies, generating new versions of itself to run these companies. [...] So you have survival of the fittest going on between these AI companies until you reach the point where you wonder if it becomes possible to understand how to ensure they are being fair, and how do you describe to a computer what that means anyway?"[41]. In this author's view at least, it is more reasonable to fear and regulate human activity, whether it is research, design, entrepreneurial, political or otherwise, than to lay blame and liability on AIs, and this is the main plight of today's AI researchers and policy makers. As the above article closes: "A vicious dog may not be able to explain why it attacked a person, but that doesn't mean we let the dog's owner off the hook".

Conclusion

In this article, we have attempted to give a broad introduction to the exciting field at the intersection of artificial intelligence and economics. We argued that many features unique to our time, such as high-performance computing, AI improvements, big data and global markets, make the interdisciplinary approach especially relevant to future applications. We pointed to several different but related approaches to the general area of "artificial economics" and attempted to explain the main features of these approaches. We also gave a brief review of some of the literature that has emerged since the early 90s. Hopefully, we have convinced the reader of both the unbounded possibilities and the inherent challenges facing the field. Given more time, we would have liked to convey a more accurate picture of what is really going on in the real world. Most of the academic research we have surveyed remains high-level, conceptual, optimistic or vague, although it is clear that what we have presented is indeed both relevant and widely used in practice. But as is the case in many areas, the gap between published research and current practice is wide, and it is hard for the outsider to discover, at least until the speculative branches of science either wither or reach mainstream adoption. To this date 11 Nobel prizes in Economics have been awarded to game theorists. We end this article with a prediction: within two decades we will witness Nobel prizes (in Economics in particular, but perhaps also in other fields) being awarded to AI researchers "for having laid the foundations of artificial economics".

References

- ↑ Smith A. An Inquiry into the Nature and Causes of the Wealth of Nations (1776). Methuen; 1950.

- ↑ Pareto V. Cours d'économie politique. Librairie Droz; 1964.

- ↑ Walras L. Éléments d'économie politique pure, ou, Théorie de la richesse sociale. F. Rouge; 1896.

- ↑ Marshall A. Principles of economics. Vol. 1. Macmillan And Co., Limited; London; 1898.

- ↑ Debreu G. Theory of value: An axiomatic analysis of economic equilibrium. Yale University Press; 1987.

- ↑ Samuelson L. Game theory in economics and beyond. VOPROSY ECONOMIKI. 2017;5.

- ↑ Holland JH, Miller JH. Artificial adaptive agents in economic theory. The American economic review. 1991 May 1;81(2):365-70.

- ↑ Vriend NJ. Artificial intelligence and economic theory. Many-agent simulation and artificial life. 1994;1994:31-47.

- ↑ Vriend NJ. Self-organized markets in a decentralized economy. Santa Fe Institute

- ↑ Rosenschein JS, Zlotkin G. Rules of encounter: designing conventions for automated negotiation among computers. MIT press; 1994.

- ↑ Smith RG. The contract net protocol: High-level communication and control in a distributed problem solver. IEEE Transactions on computers. 1980 Dec 1(12):1104-13.

- ↑ LeBaron B. Agent-based computational finance: Suggested readings and early research. Journal of Economic Dynamics and Control. 2000 Jun 1;24(5-7):679-702.

- ↑ Tesfatsion, Leigh. "Agent-based computational economics: A constructive approach to economic theory." Handbook of computational economics 2 (2006): 831-880.

- ↑ Leombruni R, Richiardi M. Why are economists sceptical about agent-based simulations?. Physica A: Statistical Mechanics and its Applications. 2005 Sep 1;355(1):103-9.

- ↑ Gualdi S, Tarzia M, Zamponi F, Bouchaud JP. Tipping points in macroeconomic agent-based models. Journal of Economic Dynamics and Control. 2015 Jan 1;50:29-61.

- ↑ Dawid H, Gemkow S, Harting P, van der Hoog S, Neugart M. Agent-based macroeconomic modeling and policy analysis: the Eurace@ Unibi model.

- ↑ http://jedc.com/

- ↑ http://www.artificial-economics.org/

- ↑ https://www.springer.com/economics/economic+theory/journal/11403

- ↑ https://teac.acm.org/

- ↑ http://www2.econ.iastate.edu/tesfatsi/ace.htm

- ↑ http://corsi.unibo.it/emp/Documents/Seminars/Introduction%20to%20Agent%20Based%20Modeling/ABM_L1.pdf

- ↑ Camerer CF. Artificial intelligence and behavioral economics. In Economics of Artificial Intelligence 2017 Oct 5. University of Chicago Press.

- ↑ Marwala T, Hurwitz E. Artificial intelligence and asymmetric information theory. arXiv preprint arXiv:1510.02867. 2015 Oct 10.

- ↑ https://www.youtube.com/watch?v=EeMCEQa85tw&t=1676s

- ↑ Izquierdo, L. R., & Izquierdo, S. S. (2015). Artificial Economics: What, Why and How.

- ↑ González WJ. Rationality and Prediction in the Sciences of the Artificial: Economics as a Design Science. Reasoning, rationality, and probability. 2008 Feb 15(183):165.

- ↑ Storkey A. Machine learning markets. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics 2011 Jun 14 (pp. 716-724).

- ↑ Clearwater SH. Market-based control: A paradigm for distributed resource allocation. World Scientific; 1996.

- ↑ Zuniga GL. An ontology of economic objects. American Journal of Economics and Sociology. 1998 Apr 1;58(2):299-312.

- ↑ Andersson B, Bergholtz M, Edirisuriya A, Ilayperuma T, Johannesson P, Gordijn J, Grégoire B, Schmitt M, Dubois E, Abels S, Hahn A. Towards a reference ontology for business models. In International Conference on Conceptual Modeling 2006 Nov 6 (pp. 482-496). Springer, Berlin, Heidelberg.

- ↑ https://www.wikidata.org/wiki/Help:About_data#Defining_data

- ↑ https://en.wikipedia.org/wiki/Knowledge_economy

- ↑ https://en.wikipedia.org/wiki/Social_data_revolution

- ↑ https://en.wikipedia.org/wiki/Social_data_revolution

- ↑ Stiglitz JE. Information and the Change in the Paradigm in Economics. American Economic Review. 2002 Jun;92(3):460-501.

- ↑ http://econlog.econlib.org/archives/2013/04/ai_and_ge_answe.html

- ↑ Goldfarb A, Tucker C. Digital economics. National Bureau of Economic Research; 2017 Aug 18.

- ↑ Amodei D, Olah C, Steinhardt J, Christiano P, Schulman J, Mané D. Concrete problems in AI safety. arXiv preprint arXiv:1606.06565. 2016 Jun 21.

- ↑ http://cdn.aiindex.org/2017-report.pdf

- ↑ Financial Times, April 11 2017: "The inventor of the web is worried about algorithms running the world", url: https://ftalphaville.ft.com/2017/04/11/2187283/the-inventor-of-the-web-is-worried-about-algorithms-running-the-world/
