Course:CPSC522/Affect Prediction using Eye Gaze

Affect Prediction from Eye Gaze Data

Affect can be defined as emotion (e.g. sad, happy, angry) or as continuous values of affective dimensions (e.g. valence, arousal). Affect prediction applies machine learning algorithms to classify emotions or to estimate continuous values of affective dimensions. Gaze has been shown to be one of the most effective modalities for predicting affect.

This page is based on two papers:

- J. O'Dwyer, R. Flynn, and N. Murray, Continuous affect prediction using eye gaze and speech, in 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 2017, pp. 2001-2007.

- Prasov Z, Chai JY, Jeong H. Eye Gaze for Attention Prediction in Multimodal Human-Machine Conversation. AAAI Spring Symposium: Interaction Challenges for Intelligent Assistants 2007 Mar (pp. 102-110).

Principal Author: Vanessa Putnam

Collaborators:

Abstract

There has been increasing interest in machine recognition of human emotions, and data collection techniques are becoming more extensive in capturing human affect. Modalities used for this purpose include speech, facial expression, and text. This page specifically addresses eye gaze as a favorable modality for predicting human affect. The prediction mechanisms discussed here draw on ideas from computer science and psychology to design models that describe users' affective states.

Builds on

Affect prediction builds on common feature selection techniques and classification algorithms in computer science. It relies on existing machine learning classifiers, such as Support Vector Machines, Linear Classifiers, and Artificial Neural Networks. To understand affect prediction from a psychological lens, see affective forecasting.

Related Pages

Predicting Human Behavior in Normal-Form Games aims to predict human behavior specific to normal-form games. Predicting Affect of User's Interaction with an Intelligent Tutoring System uses gaze data to predict user interaction specific to intelligent tutoring systems.

Content

Affective computing: What is it?

Affective computing aims to recognize emotion (e.g. sad, happy, angry) or continuous values of affective dimensions (e.g. valence, arousal). Valence is the emotional value associated with a stimulus and can be characterized as positive or negative. Arousal is the degree to which a stimulus stirs a person to action or provokes a strong response. Affective computing research investigates how to predict these values, as well as emotion, using modalities such as speech, facial expression, text, and gaze. The affective computing literature has explored these modalities and others; this page investigates how gaze data can be a valuable information source for predicting user affect.

Eye-tracking

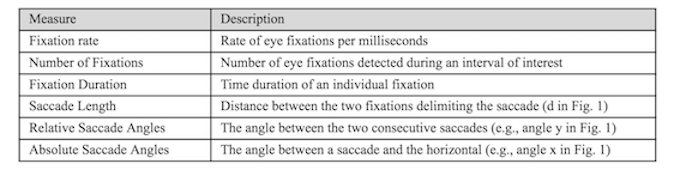

In the eye-tracking domain, eye-tracking hardware collects data in terms of fixations. A fixation is defined as maintaining the visual gaze on a single location. Several features can be derived from fixations. The first is fixation count, the number of fixations a user makes in a fixed amount of time. A high fixation count per unit time suggests that the user was frequently looking from one place to the next. A low fixation count per unit time suggests that the user was looking around infrequently and fixating on certain areas for longer periods. This leads to the next feature, fixation duration, which is the length of time spent on an individual fixation. Longer fixation durations could imply less user activity (zoning out) compared to shorter spurts of fixation across a task. However, longer fixations could also be interpreted as greater focus during an interaction. Nuances such as these make eye-tracking data an interesting information source to investigate.
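As a minimal sketch of the two fixation features described above, the snippet below computes a fixation count per unit time and a mean fixation duration. The `(start_s, duration_s)` tuple format, the function name, and the sample values are assumptions made for illustration; real eye-tracker exports differ by vendor.

```python
from statistics import mean


def fixation_features(fixations, window_seconds):
    """Summarise the fixations recorded within one time window.

    fixations: list of (start_s, duration_s) tuples (hypothetical format).
    """
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    durations = [duration for _, duration in fixations]
    return {
        # Fixation count per unit time: high values suggest frequent gaze shifts.
        "fixation_rate": len(fixations) / window_seconds,
        # Average length of an individual fixation.
        "mean_fixation_duration": mean(durations) if durations else 0.0,
    }


# Four made-up fixations observed during a 2-second window.
example_fixations = [(0.0, 0.25), (0.4, 0.30), (0.9, 0.20), (1.3, 0.45)]
fixation_summary = fixation_features(example_fixations, window_seconds=2.0)
```

Whether a long mean duration reflects focus or zoning out cannot be read from these numbers alone, which is why they are treated as inputs to a classifier rather than as conclusions.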

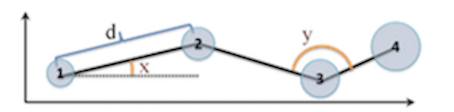

Another feature specific to the eye-tracking domain is the saccade, the movement from one fixation to the next. Saccades are derived from two consecutive fixations and connect them into a gaze pattern (Figure 1). A gaze pattern is used to infer a user's intention or goal within a particular context from where they are looking. Like fixations, saccades have a duration component, which measures how long it takes to get from one fixation to another. Saccades are also measured by their length. Longer saccades imply that a user fixates on areas that are far apart, whereas shorter saccades imply that a user is fixating on locations that are closer together. Eye-tracking data also includes saccade angles, the angle between the saccade and the horizontal (Figure 1). For example, when reading a document, one would expect most saccades to go to the right and down, since all text read by participants is read from left to right. If many saccades instead go left or upwards, this could indicate that the user has been re-reading the same sentence during a reading task. In addition to the features described above, many eye trackers can also gather features on pupil dilation and head pose. These features are also insightful information sources for predicting human affect.
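The saccade length and angle described above can be sketched from consecutive fixation positions as follows. The coordinate convention (y growing upward), the function names, and the sample points are assumptions for illustration only.

```python
import math


def saccade_features(fixation_points):
    """Derive one saccade from each pair of consecutive fixations.

    fixation_points: ordered list of (x, y) positions; y is assumed to grow upward.
    Returns a list of (length, angle_degrees) tuples, with the angle measured
    against the horizontal.
    """
    saccades = []
    for (x0, y0), (x1, y1) in zip(fixation_points, fixation_points[1:]):
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        angle = math.degrees(math.atan2(dy, dx))
        saccades.append((length, angle))
    return saccades


def leftward_fraction(saccades):
    """Share of saccades that point left (absolute angle above 90 degrees)."""
    if not saccades:
        return 0.0
    return sum(1 for _, angle in saccades if abs(angle) > 90) / len(saccades)


# Made-up gaze path: two rightward saccades followed by one leftward saccade.
example_points = [(0, 0), (3, 4), (6, 0), (2, 0)]
example_saccades = saccade_features(example_points)
```

In a left-to-right reading task, a high `leftward_fraction` would be one rough indicator of re-reading.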

Feature Selection

Gaze data supplies a plethora of features related to user interaction that can later be used to predict user affect. However, an interesting question to address is what features should be used, and which features are the most useful in making these predictions.

Feature selection techniques can be particularly useful for eye tracking prediction. This is especially true when the number of eye-tracking features is large in comparison to a smaller number of training examples. In this situation feature selection is useful to avoid overfitting. [1] The list below gives a brief description of several potential feature selection techniques with respect to eye-tracking data:

- Principal Component Analysis (PCA) – reduces the dimension of a feature set by creating components based on highly correlated subsets of features.

- Wrapper Feature Selection (WFS) – finds useful subsets of features by testing them with a specific classifier.

Feature selection for eye tracking is not restricted to these methods. For more detailed information about feature selection methods, see feature selection.
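To make the wrapper idea concrete, here is a minimal sketch of greedy forward selection that scores each candidate feature subset with one specific classifier. The nearest-centroid classifier, the leave-one-out scoring, and the toy data are all assumptions chosen to keep the example self-contained; they are not taken from the cited papers.

```python
def nearest_centroid_predict(train_X, train_y, x):
    """Predict the label whose class mean is closest to x."""
    centroids = {}
    for label in set(train_y):
        rows = [row for row, lab in zip(train_X, train_y) if lab == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    return min(sorted(centroids), key=lambda lab: squared_distance(centroids[lab], x))


def loo_accuracy(X, y, feature_idx):
    """Leave-one-out accuracy of the classifier using only the given feature columns."""
    correct = 0
    for i in range(len(X)):
        train_X = [[row[j] for j in feature_idx] for k, row in enumerate(X) if k != i]
        train_y = [lab for k, lab in enumerate(y) if k != i]
        test_x = [X[i][j] for j in feature_idx]
        if nearest_centroid_predict(train_X, train_y, test_x) == y[i]:
            correct += 1
    return correct / len(X)


def forward_select(X, y, max_features):
    """Greedily add the feature that most improves accuracy; stop when none does."""
    selected, best_score = [], 0.0
    remaining = list(range(len(X[0])))
    while remaining and len(selected) < max_features:
        scored = [(loo_accuracy(X, y, selected + [j]), j) for j in remaining]
        score, best_j = max(scored, key=lambda s: (s[0], -s[1]))
        if score <= best_score:
            break
        selected.append(best_j)
        remaining.remove(best_j)
        best_score = score
    return selected, best_score


# Toy data: column 0 separates the classes, column 1 is noise.
X = [[0.20, 5.0], [0.25, 1.0], [0.22, 3.0], [0.60, 4.0], [0.65, 2.0], [0.58, 5.5]]
y = [0, 0, 0, 1, 1, 1]
selected_features, selection_score = forward_select(X, y, max_features=2)
```

Because every candidate subset is evaluated by retraining the classifier, wrapper methods become expensive as the number of features grows.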

It is also important to note that feature selection algorithms are not required in order to extract the most useful features. Often, if there is domain knowledge about which features are most useful, an analyst can hand-select the features to be used in prediction. An example of this is discussed later in the Eye Gaze for Attention Prediction section.

Algorithms

The list below describes several algorithms used as classifiers in affect prediction.

- Linear Classifier – classifies based on the value of a linear combination of the feature values, which are usually provided as a feature vector.

- Support Vector Machines – a type of (usually binary) linear classifier that decides which of two (or more) possible classes each input falls into.

- Random Forest – an ensemble of decision trees; in each tree, leaves represent the classification outcome and branches represent the conjunction of feature tests that lead to it.

- Artificial Neural Network – a mathematical model, inspired by biological neural networks, that can better capture possible non-linearities in the feature space.
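As an illustration of the simplest entry in the list above, the sketch below shows the decision rule of a linear classifier: a weighted sum of the feature values compared against a threshold. The weights, bias, and feature meanings are hypothetical; in practice they would be learned from labeled gaze data.

```python
def linear_classify(features, weights, bias=0.0):
    """Return 1 if the weighted sum of the features exceeds zero, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# Hypothetical weights over [fixation_rate, mean_fixation_duration].
example_weights = [0.5, -2.0]
label_a = linear_classify([2.0, 0.3], example_weights, bias=-0.1)
label_b = linear_classify([0.5, 0.5], example_weights, bias=-0.1)
```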

As with feature selection, this is not a complete list of the methods that can be used to make predictions from gaze data. The algorithm should be chosen to suit the specific classification task at hand. For example, Fast and Accurate Algorithm for Eye Localization for Gaze Tracking in Low Resolution Images proposes an algorithm suited to localizing pupils in low-resolution images. There, the algorithm was chosen and designed to handle noise, shadows, occlusions, pose variations, and eye blinks in pupil classification.

Eye Gaze for Attention Prediction

An interesting question to explore with eye gaze data is whether or not a user is paying attention during a task. Prasov, Chai, and Jeong [2] examine how eye gaze contributes to the automated identification of user attention during human-machine conversation. One may ask why predicting attention is relevant to a page about affect. Interestingly, results in [2] demonstrate the effects of arousal on attention. Thus, we describe an in-context example of predicting attention using gaze data.

Data Collection

The data for this prediction task was collected in the form of a user study. Users would interact with a graphical display to describe a scene and answer questions about the scene in a conversational manner.

Thus, the collected eye gaze data consists of a list of fixations, each of which is time-stamped and labeled with a set of interest regions. Speech data is manually transcribed and time-stamped. Additionally, a window size is defined to delimit the list of data instances that occur within the same period of time. This means that each input has an associated list of eye gaze and action data.
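One way to picture the windowing step is the sketch below, which buckets time-stamped fixations into consecutive windows. The fixed-length windows, the dictionary field names, and the sample log are assumptions; the study may define its windows differently (for example, relative to speech events).

```python
from collections import defaultdict


def group_by_window(fixations, window_ms):
    """Bucket time-stamped fixations into consecutive fixed-length windows."""
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")
    windows = defaultdict(list)
    for fixation in fixations:
        # Integer division maps each start time to the index of its window.
        windows[fixation["start_ms"] // window_ms].append(fixation)
    return dict(windows)


# Made-up fixation log; each fixation is labeled with its interest regions.
example_log = [
    {"start_ms": 120, "duration_ms": 200, "objects": ["lamp"]},
    {"start_ms": 480, "duration_ms": 350, "objects": ["lamp", "table"]},
    {"start_ms": 1100, "duration_ms": 150, "objects": ["chair"]},
]
windows = group_by_window(example_log, window_ms=1000)
```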

Feature Selection

Rather than using a feature selection algorithm, the authors opt for hand selecting features to predict attention.

- Fixation Intensity: the length of a fixation upon an object in a visual scene. Generally, long fixations signify that a user is paying attention to the object.

- Visual Occlusion: how much of an object is obstructed (by other objects) from the user’s viewpoint. When a user's eye gaze simultaneously fixates on two overlapping objects, the user is likely to be more interested in the object appearing in front.

- Fixation Frequency: the number of times an object is fixated within the time window W.
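A rough sketch of how two of these hand-selected features could be computed per object within a window W is shown below. Treating fixation intensity as the summed fixation duration on an object is an assumption made here for simplicity, and visual occlusion is left out because it depends on scene geometry; the field names and data are hypothetical.

```python
from collections import Counter


def object_gaze_features(window_fixations):
    """Compute per-object fixation intensity and frequency for one window W."""
    intensity_ms = Counter()
    frequency = Counter()
    for fixation in window_fixations:
        for obj in fixation["objects"]:
            # Assumed definition: intensity is total time spent fixating the object.
            intensity_ms[obj] += fixation["duration_ms"]
            # Frequency is the number of fixations that land on the object.
            frequency[obj] += 1
    return {
        obj: {"intensity_ms": intensity_ms[obj], "frequency": frequency[obj]}
        for obj in frequency
    }


# Made-up fixations falling inside a single window W.
window_w = [
    {"duration_ms": 200, "objects": ["lamp"]},
    {"duration_ms": 350, "objects": ["lamp", "table"]},
    {"duration_ms": 150, "objects": ["table"]},
]
gaze_features = object_gaze_features(window_w)
```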

Model

The attention prediction task for this study was formulated as an object activation problem: an object is considered activated if it is the focus of attention. This can be a tricky task because many fixations are irrelevant, since a fixation can encompass multiple objects. A binary decision of whether an object was activated or not is too coarse to reflect the usefulness of features. For this reason, a logistic regression model was chosen in order to estimate the likelihood that an object was activated. This likelihood allows objects to be ranked by activation. Below is a description of the algorithm in context.

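$$p(y \mid \vec{x}) = \frac{y\,e^{\vec{x}\cdot\vec{\beta} + c} + (1 - y)}{1 + e^{\vec{x}\cdot\vec{\beta} + c}}$$

Here $y$ refers to the class label ($y = 1$ for activated, $y = 0$ for not activated), $\vec{x}$ refers to the feature vector, and $\vec{\beta}$ and $c$ are parameters to be learned from the data. $\vec{\beta}$ refers to the weights associated with features.

The following constraint holds:

$$\sum_{y} p(y \mid \vec{x}) = 1$$

Thus, the likelihood for a particular object to be activated ($y = 1$) given a set of features $\vec{x}$ can be expressed as follows:

$$p(y = 1 \mid \vec{x}) = \frac{e^{\vec{x}\cdot\vec{\beta} + c}}{1 + e^{\vec{x}\cdot\vec{\beta} + c}}$$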
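The activation likelihood of a logistic regression model, and the ranking it enables, can be sketched as below. The names `beta` (feature weights) and `c` (intercept), the parameter values, and the per-object feature vectors are hypothetical; in the study the parameters would be learned from data.

```python
import math


def activation_likelihood(x, beta, c):
    """Logistic likelihood that an object with feature vector x is activated."""
    z = sum(xi * bi for xi, bi in zip(x, beta)) + c
    # 1 / (1 + e^-z) is algebraically equal to e^z / (1 + e^z).
    return 1.0 / (1.0 + math.exp(-z))


def rank_objects(object_features, beta, c):
    """Sort objects from most to least likely to be activated."""
    scored = {name: activation_likelihood(x, beta, c) for name, x in object_features.items()}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


# Hypothetical per-object features: [fixation intensity in seconds, fixation frequency].
candidates = {"lamp": [0.55, 2], "table": [0.50, 2], "chair": [0.10, 1]}
ranking = rank_objects(candidates, beta=[4.0, 0.5], c=-2.0)
```

Returning a likelihood rather than a hard yes/no decision is what allows the objects to be ordered, which matches the motivation for choosing logistic regression here.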

Results

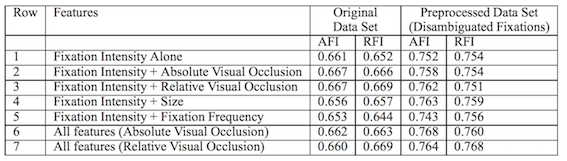

Using the logistic regression likelihood estimator, results show that fixation intensity can be used to predict object activation (attention). Additionally, preprocessing the fixation data to include the fixation-level visual occlusion feature considerably improves the reliability of the fixation intensity feature. This work suggests that the model can be extended to open new directions for using eye gaze in spoken language understanding. See Figure 4.

Fusion Methods

It is important to note that eye-tracking data is not the only useful modality for predicting user affect. Affective computing uses various data sources to make informed predictions about human-computer interaction. Feature fusion is a technique commonly employed in multimodal affective computing to make use of multiple data sources: the features from different modalities are concatenated row-wise into one larger feature set for each segment. To provide an in-context example, we will discuss the feature fusion of AVEC 2014 speech feature data and eye-tracking data. [3]

[4]

Feature fusion can be achieved in different ways in affect prediction. Figure 2 outlines fusion methods that treat arousal and valence as if they occur independently. In other words, each of these implementations predicts either arousal or valence, but not both. In comparison, Figure 3 aims to exploit the correlations and covariances between the arousal and valence emotion dimensions. The following sections discuss each figure in detail.

Speech and eye gaze feature level fusion

This fusion method takes the features from both modalities and simply concatenates them before training a classifier. For example, each segment of ground-truth data in this work has 2,268 AVEC 2014 speech features and 31 eye gaze features, for a total feature vector dimensionality of 2,299 combined speech and eye gaze features. This idea is shown in Figure 2 A.
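A minimal sketch of feature-level fusion for a single segment follows. The zero-filled vectors are placeholders standing in for real AVEC 2014 speech features and eye gaze features; only the dimensionalities come from the text above.

```python
def feature_level_fusion(speech_features, gaze_features):
    """Concatenate the per-segment features of both modalities into one vector."""
    return list(speech_features) + list(gaze_features)


# Placeholder vectors with the dimensionalities quoted above.
speech_segment = [0.0] * 2268
gaze_segment = [0.0] * 31
fused_segment = feature_level_fusion(speech_segment, gaze_segment)
```

A single classifier is then trained on the fused vectors, so the larger modality can dominate unless the features are scaled or selected appropriately.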

Averaged prediction fusion

Rather than combining the feature sets of each modality into one, this fusion method runs a separate classifier on each modality. In this case, there is one classifier for speech and another for gaze. The speech and eye gaze predictions for a given segment are averaged to give the final prediction for the segment, as shown in Figure 2 B.

This method is similar to bagging in ensemble methods.
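Averaged prediction fusion can be sketched as follows, assuming each modality's model has already produced one prediction per segment. The prediction values are made up for illustration.

```python
def averaged_prediction_fusion(speech_predictions, gaze_predictions):
    """Average the per-segment predictions of the speech and gaze models."""
    if len(speech_predictions) != len(gaze_predictions):
        raise ValueError("both modalities must cover the same segments")
    return [(s + g) / 2 for s, g in zip(speech_predictions, gaze_predictions)]


# Made-up per-segment predictions from each modality's model.
averaged = averaged_prediction_fusion([0.2, 0.6, -0.4], [0.4, 0.2, 0.0])
```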

Model based input fusion

This method is similar to averaged prediction fusion. The data is partitioned by modality and a classifier is run on each portion. The final decision is then made by an additional classifier that takes the outputs of the previous classifiers as its inputs, as shown in Figure 2 C.

This method is similar to stacking in ensemble methods.
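The data flow of model-based fusion can be sketched as below. The three models are stand-in functions with made-up behaviour, used only to show how first-stage outputs feed the second-stage model; in practice each would be a trained predictor.

```python
def model_based_fusion(speech_model, gaze_model, fusion_model, speech_x, gaze_x):
    """Feed the per-modality predictions into a second-stage fusion model."""
    first_stage_outputs = [speech_model(speech_x), gaze_model(gaze_x)]
    return fusion_model(first_stage_outputs)


# Stand-in models (hypothetical): real ones would be learned from training data.
def speech_stub(features):
    return sum(features) / len(features)


def gaze_stub(features):
    return max(features)


def fusion_stub(outputs):
    return 0.7 * outputs[0] + 0.3 * outputs[1]


stacked_prediction = model_based_fusion(
    speech_stub, gaze_stub, fusion_stub, speech_x=[0.2, 0.4], gaze_x=[0.1, 0.5]
)
```

Unlike plain averaging, the second-stage model can learn to trust one modality more than the other.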

Output associative based input fusion

Output-associative fusion aims to exploit the correlations and covariances between the arousal and valence emotion dimensions. This method trains a separate classifier not only for each modality, but also for each dimension (valence and arousal). The final prediction uses the same stacked methodology as in the previous section. Output-associative fusion is shown in Figure 3.
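A sketch of the output-associative data flow is given below: one first-stage model per (modality, dimension) pair, and one second-stage model per dimension that sees all first-stage outputs. The stand-in models, weights, and inputs are hypothetical and only illustrate the wiring.

```python
def output_associative_fusion(first_stage, second_stage, inputs):
    """Predict each dimension from the first-stage outputs of all dimensions.

    first_stage: dict mapping (modality, dimension) to a model.
    second_stage: dict mapping dimension to a model over all first-stage outputs.
    inputs: dict mapping modality to its feature vector.
    """
    # Sort the keys so every second-stage model sees the outputs in a fixed order.
    keys = sorted(first_stage)
    outputs = [first_stage[(modality, dim)](inputs[modality]) for modality, dim in keys]
    return {dim: model(outputs) for dim, model in second_stage.items()}


def weighted_sum(weights):
    """Build a stand-in second-stage model from fixed (hypothetical) weights."""
    return lambda outputs: sum(w * o for w, o in zip(weights, outputs))


first_stage_models = {
    ("gaze", "arousal"): lambda x: x[0],
    ("gaze", "valence"): lambda x: x[1],
    ("speech", "arousal"): lambda x: x[0],
    ("speech", "valence"): lambda x: x[1],
}
# Weight order follows the sorted keys: gaze/arousal, gaze/valence, speech/arousal, speech/valence.
second_stage_models = {
    "arousal": weighted_sum([0.4, 0.1, 0.4, 0.1]),
    "valence": weighted_sum([0.1, 0.4, 0.1, 0.4]),
}
fused_dimensions = output_associative_fusion(
    first_stage_models, second_stage_models, {"gaze": [0.2, -0.4], "speech": [0.6, 0.0]}
)
```

The non-zero cross weights are what let the arousal prediction draw on valence outputs and vice versa.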

Eye-tracking Resources

- Eye Movement Data Analysis Toolkit (EMDAT) -- a library for processing eye gaze data.

- Tobii -- Eye-tracking hardware.

Annotated Bibliography

- [1] Jaques, N., Conati, C., Harley, J. and Azevedo, R. Predicting Affect from Gaze Data During Interaction with an Intelligent Tutoring System. Proceedings of ITS 2014, 12th International Conference on Intelligent Tutoring Systems, pp. 29-38.

- [2] Prasov Z, Chai JY, Jeong H. Eye Gaze for Attention Prediction in Multimodal Human-Machine Conversation. AAAI Spring Symposium: Interaction Challenges for Intelligent Assistants 2007 Mar (pp. 102-110).

- [3] O'Dwyer J, Flynn R, Murray N. Continuous affect prediction using eye gaze. In Signals and Systems Conference (ISSC), 2017 28th Irish 2017 Jun 20 (pp. 1-6). IEEE.

- [4] J. O'Dwyer, R. Flynn, and N. Murray, Continuous affect prediction using eye gaze and speech, in 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 2017, pp. 2001-2007.

