Hyperparameter value pruning in Bayesian Optimization

Using SHapley Additive exPlanations for hyperparameter value pruning in Bayesian Optimization

Here we test the hypothesis that using Interpretability of Machine Learning, Bayesian Optimization (BO) can quickly learn the expensive regions in the search-space.

Principal Author: Mayank Tiwary, Anubhav Garg

Abstract

Big data systems like Apache Spark expose hundreds of knobs (like spark.executor.memory, spark.serializer) which need to be tuned for minimizing the execution time. The total search space which needs to be considered for tuning these knobs is extremely huge. In literature AutoTuners have used BO based tuning setup for figuring out optimal values of the knobs. These tuners train their ML models per workload on a production scenario. Training on production becomes costly because the ML based tuners have to explore different regions in the search space. And one bad knob value can slow down the execution time by several factors when compared with the execution time achieved by setting the system with default knob values. For example, one bad knob value while executing KMeans workload on Spark increases the execution time by 17X. The tuning cost incurred on production can get amortized only when the tuner suggests good knob values which improves the execution time compared with the default execution time. The amortization of training cost becomes hard if a bad knob value slows down the total execution time by several minutes or hours. To minimize the training cost, the BO style tuners need to effectively explore the search space and avoid bad or expensive regions. Though a BO style tuner can automatically learn about these regions, in this work, we explore the hypothesis that can we help a BO style tuner to quickly learn these regions using Interpretability of ML.

Builds on

This work uses Bayesian Optimization as the core tuning engine for Apache Spark. It also uses Interpretability of Machine Learning (Explainable AI) to figure out and prune bad knob values which would lead to a very slow execution of the workload.

Related Pages

In this work, we use SHapley Additive exPlanations (Shapley values) for quantifying the ML-interpretability. This work is closely related to Tuneful (a Spark ML-tuner which uses BO) [1]. The use of Shapley values was also been evaluated in an evaluation paper [2] where the authors used SHapley Additive exPlanations (SHAP) to prune the entire knob instead of pruning the specific knob values while tuning knobs for databases instead of Apache Spark.

Content

Motivation and Background

Optimizing the behavior of Apache Spark applications is possible through a wide range of configuration options available, such as memory management, parallelism, and serialization. Commonly-tuned Spark configurations include spark.executor.memory, which allocates memory per executor process, increasing performance for memory-intensive applications, but also leading to out-of-memory errors if set too high. The spark.serializer configuration determines the serialization format for data transmission between Spark processes, with Java serialization as the default option, and other formats, such as Kryo, providing better performance for specific data types. Adjusting spark.sql.shuffle.partitions controls the number of partitions used for shuffling data in Spark SQL applications, improving performance for applications with high data shuffling requirements. spark.default.parallelism sets the default number of partitions for RDDs and dataframes, optimizing performance for applications with large datasets, albeit increasing the overhead of task coordination and scheduling. Lastly, spark.streaming.backpressure.enabled enables automatic rate control for Spark Streaming applications, with Spark dynamically adjusting the input rate based on processing rate to prevent system overloading.

Fine-tuning these and other Spark configurations can significantly impact Spark application performance, depending on the specific use case and workload. To streamline the process, there are available tools for automated configuration tuning, such as Tuneful [1]. Overall, optimizing Apache Spark configurations is critical in enhancing the scalability and efficiency of Spark-based big data processing applications.

ML-based tuners (which use BO) are the widely adopted tuners because they can do a knowledge transfer of tuning experiences from one workload to other. However, the problem with these tuners are that they need huge set of observational data (training data). Generating the observational data involves changing the knob values and observing/measuring the execution time while executing a workload. It is important to note that for each workload the tuner needs to be seperately trained [3]. It becomes challenging to collect training data specifically on production scenarios because playing around with knobs in production is often not permitted. This is mainly because if changing a knob configuration leads to a extreme slowdown of execution time when compared with the default execution time.

Shapley Values and SHapley Additive exPlanations

Given any ML model these tools interpret the models and find out insights which can explain the learning of the model. The most recent work I found is the SHapely Additive ExPlanations (SHAP) method [4]. This work interprets the variance in outcome variables caused by the individual features. It computes the shapley values for every feature using a cooperative game-theoretic approach. For example, the below figure shows the SHAP’s output on Boston’s regression dataset (Shapley values for every feature from the dataset), which clearly shows the features which are positively/negatively affecting the outcome i.e. .

SHAP just takes input as the trained model and interprets its learning. In the example shown above, the plot presents the interpretability of just one data point on the trained model for . In this example, the features like LSTAT contribute towards a higher value of and rather RM contributes towards a lower value of . Here the length of the bars represents the Shapley values computed where a positive Shapley value increases the value of and vice versa. However, it is important to note that this plot only shows Shapley values for one data point. In the evaluation below, we use bee swarm plots to visualize the Shapley values for hundreds of data points.

Limitations of tuning Spark knobs with BO based tuners

The biggest limitation of this class of tuners is that it needs to be trainied seperately for different workloads. This becomes more challenging on production scenario because its highly possible that changing a knobs value might degrade the performance. Authors in Tuneful [1] propose an amortization model where the tuners training cost (cost incurred while gathering the training data on production) gets amortized after the training is completed only when the tuner starts recommending better knob values which leads to a better performance. However, this amortization gets more and more difficult if gathering training data takes a lot of time (this time delay happens because the tuner might have picked bad knob values which slowed down the execution by several factors).

Hypothesis

In this work we test the hypothesis that BO based tuners are a bit slow in learning bad regions in the search space compared to SHAP. The BO based tuners will also eventually learn about the bad regions in the search space after performing experiments (training data generation phase). However, the total iterations it takes before it can learn about the bad regions is high and this could make the amortization of training cost difficult. On the other hand, we want to test the hypothesis that SHAP can be used to find out these regioins in the search space bit early and the tuner can quickly prune these regions making the amortization process better.

Tuner Architecture

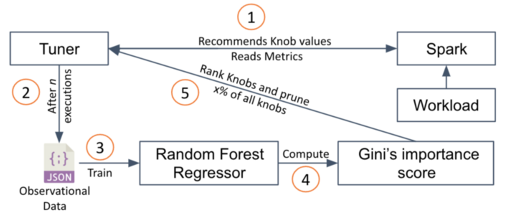

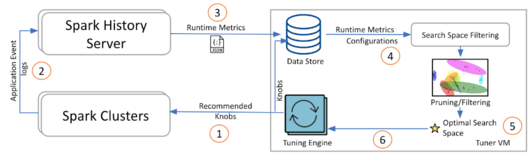

The flow of the architecture presented in the above figure can be summarized as follows:

- The tuning plugin (which executes as a process on the Spark Master node) asks the tuner for optimal knob values, and the tuner replies with a recommendation.

- The tuning plugin configures the Spark with the knob values, and executes the workload. Upon execution, the specific application logs are written to the history server.

- The tuner then reads the runtime metrics from the Spark's history server. It then pre-processes it and stores the metrics in a data store.

- The search space filtering engine starts to read the runtime metrics from the data store and prunes the search space effectively.

- The obtained search space is sent to the tuning engine.

- The tuner restarts from scratch with the new search-space.

Most of the BO style tuners have the search space filtering and tuning engine as the main subcomponent. The main contribution of this work is to improve the search space filtering so that the tuning engine can effectively explore the search space.

Search Space Pruning

The existing tuners in literature focus on pruing the entire knobs that have little or no impact on the execution time. These pruning strategies use importance scores like Gini's importance score to prune the knobs that have low impact on the execution time. Here is a short overview of the knob pruning strategy:

Knob Pruning Approach/Algorithm (from Tuneful: [1] the base tuner we use)

The knob pruning approach aims to find out the non-influential knobs and prune them down from the search space. The idea here is to train a meta model like a Random Forest Regressor from the trained model, compute the Gini’s importance score and rank the knobs/features using the score. The Random Forest Regressor (RFR) works here because the majority of Spark knobs are either categorical or discrete and RFRs work very well with such types of features. The knob pruning process can be summarized as follows:

The knob pruning process takes place in multiple rounds. For each round, the steps are presented in above Figure. The steps are as follows:

- Step 1: Run tuner for n iterations: where in each iteration, the tuner generates random knob values, and using these values Spark executes a workload.

- Step 2: From the execution data after n executions, generate observational data.

- Step 3: From the observational data, train a Random Forest Regressor (RFR).

- Step 4; From the trained model, compute the Gini’s importance score for each knob.

- Step 5: Based on the generated importance score rank the knobs and prune x % of all knobs.

In Tuneful, the authors use a total of 30 knobs and the tuner performs the knob pruning in two rounds each after 10 executions (n). In the first round, the tuner prunes out 40% of the total 30 knobs and then in the second round the tuner again prunes out 40% of the remaining knobs. After a total of 20 executions with 2 rounds of pruning the tuner has a total of 10 knobs to explore further and tune.

Computing Ginis Importance Score

The Gini coefficient is a statistical measure utilized to assess the quality of features used to train a model. Let's try to understand it in the context of the distribution of income or wealth within a population.

It is calculated by taking the ratio of the area between the Lorenz curve, which displays the cumulative percentage of total income or wealth held by the population, and the line of perfect equality, where each individual has equal income or wealth, to the area beneath the line of perfect equality. The formula for the Gini coefficient is , where represents the area between the Lorenz curve and the line of perfect equality, and denotes the area beneath the line of perfect equality. The value of the Gini coefficient ranges from 0 to 1, where 0 represents complete equality (all individuals possess the same income or wealth), and 1 represents perfect inequality (one individual holds all the income or wealth, while others have none). The Gini coefficient is used as a comparative measure of inequality across different populations or time periods. However, it has limitations, such as its sensitivity to changes in the middle of the income or wealth distribution and its inability to account for variations in the sources of income or wealth.

Value Pruning Approach/Algorithm (proposed for testing Hypothesis)

The value pruning approach operates at one level below the knob pruning, where instead of pruning an entire knob, it prunes the specific values of the knobs (bad regions). Unlike the knob pruning approaches which aimed at finding out non-influential knobs, the value pruning approach aims at finding out the bad values in the search space which usually increases the execution time by several factors.

In order to find out the bad values we train a meta model similar to the knob pruning approach, where we train a Random Forest Regressor and use a model interpreter like SHAP [4] to compute the Shapley values for each knob value used in the training data. The classic Shapley values help in decomposing the contribution of individual knobs and its values towards the outcome (execution time). The SHAP considers the features/knobs as game players in a cooperative game. “The Shapley value is the average expected marginal contribution of one player after all possible combinations have been considered” [4]. For example, if an execution time is 10 mins, the Shapley values help in understanding which knob values contributed towards increasing and decreasing execution time. Here a negative Shapley value means the knob is contributing towards the minimization of the execution time and vice-versa. The value pruning process can be summarized as follows:

The value pruning process takes place in a single round and steps are presented in the above Figure. The details are as follows:

- Step 1: Run tuner for n iterations: where in each iteration, the tuner generates knob values, and using these values, Spark executes a workload.

- Step 2: From the execution data after n executions, generate observational data.

- Step 3: From the observational data, train a Random Forest Regressor (RFR).

- Step 4; From the trained model, compute the Shapley value for each knob value in the training data and 500 anchor points randomly created (here one anchor point represent randomly chosen configuration value set) using SHAP’s model interpreter.

- Step 5: Based on the Shapley values, from the entire search space prune all the knob values whose Shapley values are positive.

We use a total of 63 knobs and the tuner performs the value pruning in a single round, after 30 executions (n). The tuner prunes out all the knob values from the search space which have a positive Shapley value. After the value pruning, the leftover search-space is expected to have no region which can adversely affect the outcome.

Experimental Setup

The Spark cluster is a 5 datanode and 1 namenode cluster. This is deployed on Kubernetes and uses HDFS as the storage layer. Here each datanode has a total of 7 vCPUs and 12 GB Memory. The tuner is deployed on a seperate tuner-VM which uses the Bayesian Optimization implementation from HyperOpt [6]. In this evaluation we selected a total of 63 Spark knobs which have been classified in the literature in terms of highly impactful knobs.

We evaluated the proposed value pruning approach for two workloads: Kmeans and WordCount. For KMeans, the dataset loaded on Spark was of size 78 GB and for WordCount the size was 321 GB. Here the dataset was part of the dataset created from HiBench [7]. Here Spark running a workload means that the Spark runs KMeans on a clustering dataset.

One important aspect to note here is that in case of Tuneful after the tuner prunes out the non-influential knobs, say after 20 or 30 iterations, the tuning process has to be restarted as the features have reduced. So as in our proposed case of value pruning, once the tuner prunes out the bad values it has to restart the tuning process as the search space has been altered and the previously used performance prediction model (GPR) cannot be used.

Evaluation with KMeans workload on Apache Spark

As per the proposed value pruning algorithm, we first collected observational data with 30 iterations (30 data points) and then performed the knob value pruning using the above proposed algorithm. The beeswarm plot after with observational data looks like this:

The above bee swarm plot shows the shapley values plotted for each of the knob/feature value when the RFR model is trained with observational data obtained by executing KMeans workload on Spark. The color of the dots in the plot presents the feature value as per the scale shown in the right. Overall this plot represents that high values of knob/feature-58 has high Shapley values. This means higher values of this knob causes higher execution time and hence these knob values needs to be pruned out. Similarly we prune the bad values of feature/knob 47.

The above figure shows the comparision of KMeans execution times with proposed value pruning and a vanilla BO with knob pruning. We used cummulative execution time so as to visualize the training cost amortization i.e. the cost incurred due to BO's exploration must be amortized afterwards. While using the Tuneful, the first round of knob pruning (as explained above Knob Pruning Approach/Algorithm) happens at 15th iteration and the final pruning happens at 30th iteration. After this there were only 15 impactful knobs available for the BO to explore and optimize. On the other hand, for the proposed value pruning, no knob pruning happens rather we prune the specific bad knob values. The value pruning happens at the 30th iteration. It is important to see that even after knob pruning at 30th iteration Tuneful's BO still explores bad regions (as part of its learning process) causing peaks in the cummulative execution time. Here bad regions are small peaks on the cumulative plot after 30th iteration. Instead, in case of the proposed approach, once these values are pruned (at the 30th iteration) BO does not explores these regions any more and shows a better amortization curve. The cumulative execution time curve of the proposed approach shows better amortization after 30th iteration also because of the reason that the low values of feature/knob 58 and 47 (whose high values were pruned) leads to better outcome as per the bee-swarm plots shown above. And now as the high values have been already pruned after 30th iteration, the tuner is left to explore only the good regions which leads to better outcome than the default execution time.

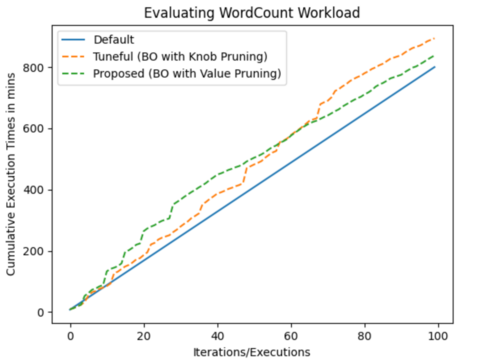

Evaluation with WordCount workload on Apache Spark

As per the proposed value pruning algorithm, we first collected observational data with 30 iterations (30 data points) and then performed the knob value pruning using the above proposed algorithm. The beeswarm plot after with observational data looks like this:

The above bee swarm plot shows the shapley values plotted for each of the knob/feature when the RFR model is trained with observational data obtained by executing WordCount workload. The color of the dots in the plot presents the feature value as per the scale shown in the right. Overall this plot represents that high values of knob/feature-57 and low values for knob/feature-54 has high Shapley values. This means a high value of knob/feature-57 and low value of knob/feature-54 causes higher execution time and hence these knob values needs to be pruned out.

The above figure shows the comparision of WordCount execution times with proposed value pruning and a vanilla BO with knob pruning. While using the Tuneful, the first round of knob pruning (as explained above Knob Pruning Approach/Algorithm) happens at 15th iteration and the final pruning happens at 30th iteration. After this there were only 15 impactful knobs available for the BO to explore and optimize. The value pruning happens at the 30th iteration. It is important to see that even after knob pruning at 30th iteration Tuneful's BO still explores bad regions (as part of its learning process) causing peaks in the cummulative execution time. Here bad regions are small peaks on the cumulative plot after 30th iteration. Instead, in case of the proposed approach, once these values are pruned (at the 30th iteration) BO does not explores these regions any more and shows a better amortization curve. The cumulative execution time curve of the proposed approach shows better amortization after 30th iteration also because of the reason that good values of feature/knob 57 and 54 (whose bad values were pruned) mostly leads a to better outcome as per the bee-swarm plots shown above. And now as the bad values have been already pruned after 30th iteration, the tuner is left to explore only the good regions which leads to better outcome than the default execution time.

Limitations

Though the proposed value pruning approach prunes out the bad knob values or the expensive regions from the search space, still the search space is significantly large enough (with lots of non-influential regions) for the tuner to explore. The knob-pruning algorithm is better in pruning the non-influential regions. On the otherhand, knob-pruning algorithms does not prunes the bad/expensive regions form the search space. Hence, it is worth to use both the approaches with a specific logic to prune both expensive and non-influential regions from the search space.

On the other front, we also noted that there are only a set of workloads which slows down by several factors when executed with bad knob values. This also means that not all workloads suffer from this problems. We conclude this with our experience of using different workloads from HiBench benchmark designed for Apache Spark. Though in production scenario, where we have a very high diversity of workloads, its difficult to make a conclusion like this.

Conclusion

In this article we present our hypothesis that we wanted to test. This hypothesis was that the Interpretable ML can help the BO style tuners to quickly learn the bad/expensive regions in the search-space and avoid them. We proposed a short algorithm similar to the knob pruning algorithm used by Tuneful that helps the tuning framework to figure out the expensive regions. Lastly we experimented and compared the results in terms of tuning cost amortization (using cumulative execution time plot) with Tuneful which is a popular tuner for Apache Spark. As part of the results, we found that the the proposed algorithm helps the BO to quickly locate the bad/expensive regions and avoid them. We tested our hypothesis with two Spark workloads i.e. KMeans and WordCount.

Annotated Bibliography

- ↑ 1.0 1.1 1.2 1.3 Ayat, Fekry (2020). "To tune or not to tune? in search of optimal configurations for data analytics" (PDF). Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining.

- ↑ Xinyi, Zhang (2021). "Facilitating database tuning with hyper-parameter optimization: a comprehensive experimental evaluation" (PDF). arXiv preprint arXiv:2110.12654.

- ↑ Fekry, Ayat (2020). "Accelerating the configuration tuning of big data analytics with similarity-aware multitask bayesian optimization" (PDF). IEEE International Conference on Big Data (Big Data).

- ↑ 4.0 4.1 4.2 Lundberg, Scott M. (2017). "A Unified Approach to Interpreting Model Predictions". Advances in Neural Information Processing Systems 30 (NIPS 2017).

- ↑ Xinyi, Zhang (2021). "Facilitating database tuning with hyper-parameter optimization: a comprehensive experimental evaluation" (PDF). arXiv preprint arXiv:2110.12654.

- ↑ "HyperOpt: Distributed Asynchronous Hyper-parameter Optimization". 2022. Retrieved 2023.

|first=missing|last=(help); Check date values in:|access-date=(help) - ↑ "The bigdata micro benchmark suite". 23-04-2023.

|first=missing|last=(help); Check date values in:|date=(help)