Course:CPSC522/Analysis of hierarchical posterior for Language modeling

Analysis of hierarchical prior for language modeling

Principal Author: Kevin Dsouza

Collaborators:

Hypothesis

Implementing a hierarchical prior improves the performance of the language model.

Builds on

This page builds on

Introduction

To start off, let us first understand why we need to form a hidden representation of our data. Given data (an image or a sentence), we want to express it in the form of hidden (latent) variables through a network that can model the relationship between them. These hidden variables store the salient information of the given data. In the domain of Natural Language Processing, this compact representation can be used for a variety of tasks such as classification, reconstruction, and translation. The relation between the data ($X$) and the hidden variables ($Z$) can be modeled by the posterior distribution $P(Z|X)$. In the case of sequential data, this distribution can be modeled using recurrent neural architectures such as a Long Short-Term Memory (LSTM) network or a Gated Recurrent Unit (GRU).

In a regular Recurrent Neural Network Language Model (RNNLM), the hidden representation is a deterministic vector (usually the hidden state of the LSTM) which forms the basis for future operations. The RNN is very good at mimicking the local statistics of the given data and naturally adapts to represent the statistics of the n-grams present in the data sequence. What is lacking is variability in the representation, which is a fundamental property of generative models and of human language itself. This is where variational models come in. Instead of a deterministic representation of the entire sequence, variational models drive the representation to a region of space around this vector, from which samples can be drawn later. Figure 1 shows such a Gaussian distribution, whose mean ($\mu$) and variance ($\sigma^2$) are parametrized using a neural network.

In order to drive the hidden representation towards a distribution, we need a prior: a distribution that we want the representation to stay close to. This prior distribution of the latent variables, $P(Z)$, is usually chosen to be a standard normal (Gaussian) distribution. We want our posterior distribution to end up as close to the prior as possible, so that samples drawn later from the prior can reconstruct the data distribution satisfactorily (let us assume, for the sake of explanation, that our task is reconstruction of the original data distribution). How is it possible that a sample drawn from a standard normal distribution can regenerate the data distribution? The key is that any distribution in $d$ dimensions can be generated by taking a set of $d$ normally distributed variables and mapping them through a sufficiently complicated function [1]. In our case this function can be a neural network that learns a useful latent representation at some layer and then uses it to generate the data distribution (the decoder).
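As a small illustration of the mapping idea above, the sketch below pushes two-dimensional standard normal samples through a fixed, hand-picked function `g(z) = z/10 + z/||z||`, which moves them onto a ring-shaped distribution. The function `g`, the helper `sample_ring`, and the sample count are illustrative assumptions and are not part of the model analyzed on this page; in a VAE the mapping would instead be learned by the decoder network.

```python
import math
import random

def g(z):
    """Hand-picked mapping (an assumption for illustration):
    shrink z by a factor of 10 and add its unit direction vector."""
    norm = math.hypot(z[0], z[1])
    return [z[0] / 10.0 + z[0] / norm, z[1] / 10.0 + z[1] / norm]

def sample_ring(num_samples, seed=0):
    """Draw 2D standard normal samples and map each one through g."""
    rng = random.Random(seed)
    points = []
    for _ in range(num_samples):
        z = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        points.append(g(z))
    return points

points = sample_ring(1000)
# Distance of every mapped point from the origin.
radii = [math.hypot(p[0], p[1]) for p in points]
mean_radius = sum(radii) / len(radii)
```

Every mapped point lies at distance `1 + ||z||/10` from the origin, so the samples concentrate around a ring even though the inputs were Gaussian.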

Variational Autoencoders

Variational Autoencoders (VAEs) are a class of autoencoders that aim to reconstruct the data distribution by introducing variability in the intermediate representation. The joint distribution of $X$ and $Z$ can be written as $P_{\theta}(X, Z) = P_{\theta}(X|Z)\,P_{\theta}(Z)$, which requires drawing a sample $Z$ from the prior $P_{\theta}(Z)$ and then sampling $X$ from the conditional likelihood $P_{\theta}(X|Z)$. This likelihood can be any distribution, such as a Bernoulli or a Gaussian.

Inference in our model, i.e. obtaining good latent variables conditioned on the data samples, is given by the posterior

$$P_{\theta}(Z|X) = \frac{P_{\theta}(X|Z)\,P_{\theta}(Z)}{P_{\theta}(X)}$$

The issue is that the true posterior is intractable, because the denominator is the marginal

$$P_{\theta}(X) = \int P_{\theta}(X|Z)\,P_{\theta}(Z)\,dZ$$

which requires integrating over all configurations of the latent variables and is, in general, computationally intractable.

Therefore an approximate posterior $Q_{\phi}(Z|X)$ is designed to mimic the true posterior, and a lower bound on the log marginal likelihood is maximized, as shown below.

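We want to maximize the expectation of the log marginal likelihood $\log P_{\theta}(X)$ under the data distribution $P_{data}(X)$. Here $P_{data}(X)$ is the actual distribution of the data, whereas $P_{\theta}(X)$ is the one that we reconstruct, i.e.

$$\max \; E_{X \sim P_{data}(X)}\left[\log P_{\theta}(X)\right]$$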

Now the log marginal can itself be written as an expectation over samples of the latent variables ($Z$) taken from the posterior $P_{\theta}(Z|X)$, because we want to obtain hidden variables that can best explain the data:

$$\log P_{\theta}(X) = E_{Z \sim P_{\theta}(Z|X)}\left[\log P_{\theta}(X)\right]$$

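From Bayes' rule we have

$$P_{\theta}(X) = \frac{P_{\theta}(X, Z)}{P_{\theta}(Z|X)}$$

Putting this back in, we get

$$E_{X \sim P_{data}(X)}\left[\log P_{\theta}(X)\right] = E_{X \sim P_{data}(X)}\left[E_{Z \sim P_{\theta}(Z|X)}\left[\log \frac{P_{\theta}(X, Z)}{P_{\theta}(Z|X)}\right]\right]$$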

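The issue with this is that the true posterior is intractable, as it involves calculating the marginal by integrating out all the latent variables. Therefore the expectation is taken under an approximate posterior $Q_{\phi}(Z|X)$ instead; this is valid because the quantity inside the expectation equals $\log P_{\theta}(X)$ for every value of $Z$. Expanding the joint as $P_{\theta}(X|Z)\,P_{\theta}(Z)$ and multiplying and dividing by $Q_{\phi}(Z|X)$ results in

$$E_{X \sim P_{data}(X)}\left[\log P_{\theta}(X)\right] = E_{X \sim P_{data}(X)}\left[E_{Z \sim Q_{\phi}(Z|X)}\left[\log P_{\theta}(X|Z)\right] - E_{Z \sim Q_{\phi}(Z|X)}\left[\log \frac{Q_{\phi}(Z|X)}{P_{\theta}(Z)}\right] + E_{Z \sim Q_{\phi}(Z|X)}\left[\log \frac{Q_{\phi}(Z|X)}{P_{\theta}(Z|X)}\right]\right]$$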

The second term is the KL divergence between the approximate posterior $Q_{\phi}(Z|X)$ and the prior $P_{\theta}(Z)$, and the final term is the KL divergence between the approximate posterior $Q_{\phi}(Z|X)$ and the true posterior $P_{\theta}(Z|X)$.

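Therefore we get,

$$E_{X \sim P_{data}(X)}\left[\log P_{\theta}(X)\right] = E_{X \sim P_{data}(X)}\left[E_{Z \sim Q_{\phi}(Z|X)}\left[\log P_{\theta}(X|Z)\right] - \mathrm{KL}\left(Q_{\phi}(Z|X)\,\|\,P_{\theta}(Z)\right) + \mathrm{KL}\left(Q_{\phi}(Z|X)\,\|\,P_{\theta}(Z|X)\right)\right]$$

As the true posterior is intractable, the third term cannot be estimated directly. But as the KL divergence is always non-negative, this term can be moved to the left-hand side and dropped, which gives a lower bound. This lower bound is called the variational lower bound and forms the basis for optimizing variational autoencoders.

$$E_{X \sim P_{data}(X)}\left[\log P_{\theta}(X)\right] \geq E_{X \sim P_{data}(X)}\left[E_{Z \sim Q_{\phi}(Z|X)}\left[\log P_{\theta}(X|Z)\right] - \mathrm{KL}\left(Q_{\phi}(Z|X)\,\|\,P_{\theta}(Z)\right)\right]$$

The right-hand side is the variational lower bound, which has to be maximized. When optimizing, we minimize the negative of the lower bound. This can be broken down into two terms: the reconstruction loss (the negative log likelihood of the data) and the KL loss.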
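The bound can be checked numerically on a toy model where everything is available in closed form. The sketch below assumes a one-dimensional model that is not the language model studied here: $Z \sim N(0, 1)$ and $X|Z \sim N(Z, 1)$, so that $X \sim N(0, 2)$ and the true posterior is $N(x/2, 1/2)$. All function names are illustrative.

```python
import math

def log_marginal(x):
    """Exact log P(x) for the toy model: X ~ N(0, 2)."""
    return -0.5 * math.log(2.0 * math.pi * 2.0) - x * x / 4.0

def kl_to_standard_normal(mean, var):
    """Closed-form KL( N(mean, var) || N(0, 1) )."""
    return 0.5 * (mean * mean + var - 1.0 - math.log(var))

def expected_reconstruction(x, mean, var):
    """Closed-form E_{Z ~ N(mean, var)}[ log N(x; Z, 1) ]."""
    return -0.5 * math.log(2.0 * math.pi) - 0.5 * ((x - mean) ** 2 + var)

def elbo(x, mean, var):
    """Variational lower bound: reconstruction term minus KL term."""
    return expected_reconstruction(x, mean, var) - kl_to_standard_normal(mean, var)

x = 1.0
exact = log_marginal(x)
# Approximate posterior equal to the prior: a valid but loose bound.
loose_bound = elbo(x, mean=0.0, var=1.0)
# Approximate posterior equal to the true posterior: the bound is tight.
tight_bound = elbo(x, mean=x / 2.0, var=0.5)
```

With the approximate posterior set to the true posterior, the gap between the bound and the log marginal vanishes; any other choice leaves a gap equal to the KL divergence between the approximate and the true posterior.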

Figure 2 shows the graphical model describing the VAE. The generative function is given by $P_{\theta}(X|Z)$, where $\theta$ are the generative model parameters. The inference function is our approximate posterior, given by $Q_{\phi}(Z|X)$, where $\phi$ are the inference model parameters.

The question remains whether a single posterior distribution is informative enough to capture the nuances of a lengthy sentence. If the underlying data distribution is fairly complex (highly multimodal), then a regular posterior won’t do a good job of modeling the same. Therefore, it would seem natural for us to move to more complex posterior distributions that can be close to the real intractable posterior such that the variational lower bound is maximized. Towards this end, we can explore a hierarchical posterior distribution which can exploit the natural hierarchy that exists in a sentence. By this, I mean that each word is partly dependent on the word that precedes it and influences the word that follows it, and altogether these words become dependent in the context of the entire sentence. A posterior that can model these rich dependencies would allow the exploration of global sentence context.

Hierarchical Posterior

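When the posterior is hierarchical, each latent word depends on the latent word preceding it. In addition, a global latent variable is introduced that depends on all the words in the sentence, in order to capture the sentence context. Writing $x_t$ for the word at position $t$, $z_t$ for its latent variable, and $z_g$ for the global latent (notation chosen here for a sentence of $T$ words), this posterior can be written as

$$Q_{\phi}(z_1, \dots, z_T, z_g \mid X) = Q_{\phi}(z_g \mid z_1, \dots, z_T) \prod_{t=1}^{T} Q_{\phi}(z_t \mid z_{t-1}, x_t)$$

Figure 3 shows this dependence more clearly. It can be seen that every latent word sample ($z_t$) depends on the previous latent word and the current word ($x_t$). The global latent depends on all the latent words.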
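The dependence structure described above can be sketched as ancestral sampling: each latent word is drawn from a Gaussian conditioned on the previous latent word and the current word, and the global latent is drawn last, conditioned on all latent words. This is a minimal sketch only. The function `toy_cell` is a hypothetical stand-in for the recurrent network, and the averaging used for the global latent, the fixed variances, and the word vectors are assumptions made for illustration, not the model used in the experiments.

```python
import math
import random

def sample_gaussian(mean, log_var, rng):
    """Draw z = mean + sigma * eps elementwise, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def toy_cell(z_prev, x_t):
    """Hypothetical stand-in for the recurrent network: maps the previous
    latent word and the current word to a mean and a log-variance."""
    mean = [math.tanh(0.5 * z + 0.5 * x) for z, x in zip(z_prev, x_t)]
    log_var = [-1.0 for _ in z_prev]  # fixed variance, purely illustrative
    return mean, log_var

def sample_hierarchical_posterior(word_vectors, dim, seed=0):
    """Sample one latent per word, then one global latent for the sentence."""
    rng = random.Random(seed)
    z_prev = [0.0] * dim  # initial latent before the first word
    latents = []
    for x_t in word_vectors:
        mean, log_var = toy_cell(z_prev, x_t)
        z_prev = sample_gaussian(mean, log_var, rng)
        latents.append(z_prev)
    # Global latent conditioned on all latent words (here: their average).
    global_mean = [sum(z[i] for z in latents) / len(latents) for i in range(dim)]
    z_global = sample_gaussian(global_mean, [-1.0] * dim, rng)
    return latents, z_global

# Three made-up two-dimensional word vectors.
sentence = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
latents, z_global = sample_hierarchical_posterior(sentence, dim=2)
```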

Hierarchical Prior

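A hierarchical prior looks much like the hierarchical posterior and has similar properties, in the sense that it has richer representational power. This departure from a standard normal distribution might help in modeling data that is highly multimodal in nature. Writing $z_t$ for the latent variable of the word at position $t$ and $z_g$ for the global latent (notation chosen here for a sentence of $T$ words), the hierarchical prior can be written as

$$P_{\theta}(z_1, \dots, z_T, z_g) = P(z_g) \prod_{t=1}^{T} P_{\theta}(z_t \mid z_{<t}, z_g), \qquad P(z_g) = \mathcal{N}(0, I)$$

The prior is modeled in such a way that every latent word depends on the latent words preceding it and on the global latent. It should be noted that the global latent variable is taken to be standard normal.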
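One practical consequence of a learned prior is that the KL loss is no longer measured against a standard normal distribution but between two parametrized Gaussians. Assuming both the approximate posterior and the prior over a latent word are diagonal Gaussians (an assumption made for this sketch; the page does not state the exact parametrization), the KL divergence has the closed form below. The function name is illustrative.

```python
import math

def kl_diag_gaussians(mu_q, var_q, mu_p, var_p):
    """KL( N(mu_q, diag(var_q)) || N(mu_p, diag(var_p)) ), summed over dimensions."""
    total = 0.0
    for mq, vq, mp, vp in zip(mu_q, var_q, mu_p, var_p):
        total += 0.5 * (math.log(vp / vq) + (vq + (mq - mp) ** 2) / vp - 1.0)
    return total

# Identical distributions: the divergence is zero.
kl_same = kl_diag_gaussians([0.3, -0.2], [0.5, 2.0], [0.3, -0.2], [0.5, 2.0])
# Unit-variance Gaussians whose means differ by 1: the divergence is 0.5.
kl_shifted = kl_diag_gaussians([1.0], [1.0], [0.0], [1.0])
```

Setting `mu_p` to zeros and `var_p` to ones recovers the usual KL term against a standard normal prior.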

It would intuitively make sense to argue that the prior we assume should be descriptive enough to represent the data. Would it be acceptable for the prior to be simple (say, a standard normal distribution) and the posterior to be hierarchical and complex? In which case will the posterior perform better? I plan to check this with experiments and draw conclusions from the results.

Evaluation

The evaluation is conducted on the English Penn Treebank dataset, a large annotated corpus consisting of over 4.5 million words of American English [2]. All the models are run using the deep learning framework TensorFlow.

With hierarchical prior and hierarchical posterior

The first experiment I conduct uses a hierarchical prior, with only the global latent being standard normal.

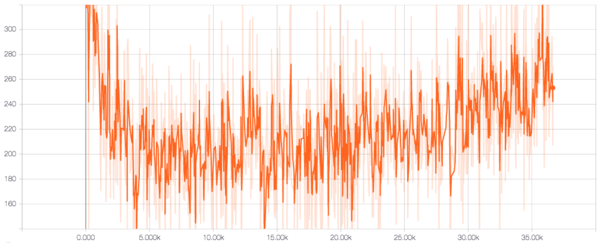

Figure 4 shows the reconstruction loss, which is the negative log likelihood of the data. It can be seen that the reconstruction loss decreases up to 5000 time steps, after which there is a steady increase. This is because KL annealing is used during training: the weight of the KL loss starts at zero and is gradually increased to 1. Until 5000 time steps the network therefore focuses entirely on reconstruction, hence the decrease. Once the KL term kicks in, the reconstruction suffers.
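A KL annealing schedule of this kind can be sketched as follows. The page only states that the KL weight starts at zero, is gradually increased to 1, and that reconstruction dominates until about 5000 steps; the linear shape of the ramp, the `ramp_steps` value, and the function names are assumptions made for illustration.

```python
def kl_weight(step, warmup_steps=5000, ramp_steps=5000):
    """Weight on the KL loss: zero during warm-up, then a linear ramp up to 1.
    ramp_steps is a hypothetical value, not taken from the experiments."""
    if step < warmup_steps:
        return 0.0
    return min(1.0, (step - warmup_steps) / ramp_steps)

def annealed_loss(reconstruction_loss, kl_loss, step):
    """Negative lower bound with the KL term scaled by the annealing weight."""
    return reconstruction_loss + kl_weight(step) * kl_loss

# Weight at a few points of the schedule.
schedule = [kl_weight(s) for s in (0, 4999, 7500, 20000)]
```

While the weight is zero the optimizer sees only the reconstruction loss, which matches the initial decrease described above; as the weight grows, the KL loss starts to compete with reconstruction.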

From Figure 5 we can see that the KL loss starts high and rapidly decreases. This is characteristic of a VAE where it tries to match the posterior with the prior and reduce the KL loss. In our case, we have two RNNs with high representational power mimicking the prior and the posterior, which might be the reason why they match up so soon. The KL loss for the global latent shows a similar behavior as seen in Figure 6.

With simple prior and hierarchical posterior

In this experiment, a standard normal prior is used for all the words and for the global latent representation.

Conclusions

Annotated Bibliography

- [1] A tutorial on Variational Autoencoders

- [2] Marcus, Mitchell P., Mary Ann Marcinkiewicz, and Beatrice Santorini. "Building a large annotated corpus of English: The Penn Treebank." Computational Linguistics 19.2 (1993): 313-330.

Further Reading

|

|

![{\displaystyle \max E_{X\sim P_{data}(X)}[log(P_{\theta }(X))]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/cb841010032bd3e121c565d829f7962c0f99d1c2)

![{\displaystyle log(P_{\theta }(X))=E_{Z\sim P_{\theta }(Z|X)}[log(P_{\theta }(X)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/764a7bd353af49582f43b7aba1fe33e7a6258cf5)

![{\displaystyle E_{X\sim P_{data}(X)}[log(P_{\theta }(X))]=E_{X\sim P_{data}(X)}[E_{Z\sim P_{\theta }(Z|X)}[log({\frac {P_{\theta }(X,Z)}{P_{\theta }(Z|X)}})]]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/52e691bd91f1daece56d2aca3643e28dde948bbe)

![{\displaystyle =E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log({\frac {P_{\theta }(X|Z)P_{\theta }(Z)}{P_{\theta }(Z|X)}})]]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/b302d2fdbc42163dec5494cbde22d0fcc76de420)

![{\displaystyle =E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-E_{Z\sim Q_{\phi }(Z|X)}[log({\frac {P_{\theta }(Z|X)}{P_{\theta }(Z)}})]]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/6b3767a8fc0860a019f373dc53105f857ee37b85)

![{\displaystyle =E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-E_{Z\sim Q_{\phi }(Z|X)}[log({\frac {P_{\theta }(Z|X)}{P_{\theta }(Z)}}*{\frac {Q_{\phi }(Z|X)}{Q_{\phi }(Z|X)}})]]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/757bfe16c21bd387c2c55cbb3d166df9cab8ce5f)

![{\displaystyle =E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-E_{Z\sim Q_{\phi }(Z|X)}[log({\frac {Q_{\phi }(Z|X)}{P_{\theta }(Z)}})]+E_{Z\sim Q_{\phi }(Z|X)}[log({\frac {Q_{\phi }(Z|X)}{P_{\theta }(Z|X)}})]]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/e29c65118e0d4df2a0bfde5cf6205b6c7aa67b27)

![{\displaystyle =E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-KL(ApproxPost||Prior)+KL(ApproxPost||TruePost)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/33baf4de3089079d6558c0c9225be28dfb84b066)

![{\displaystyle E_{X\sim P_{data}(X)}[[log(P_{\theta }(X))]-KL(ApproxPost||TruePost)]=E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-KL(ApproxPost||Prior)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/a4afd99e5abae65d019f926acd404a749a89f99d)

![{\displaystyle E_{X\sim P_{data}(X)}[log(P_{\theta }(X))]\geq E_{X\sim P_{data}(X)}[E_{Z\sim Q_{\phi }(Z|X)}[log(P_{\theta }(X|Z)]-KL(ApproxPost||Prior)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/ebf9d5015d6310e74ae35bfe3299d5c026d24671)