Group19

Introduction

As a society, we often welcome and value the opinions, views and input of others, and such information becomes significant reference material in our own decision-making and opinion formation (Feldman, 2013; Rao et al., 2014; Taboada, 2016). Comprehending emotion, opinion, subjectivity and appraisal as expressed through written language is a fundamentally human capability (Taboada, 2016). While a human can easily and quickly discern whether a famous blogger had a positive impression of the newest Marvel movie, this is no easy feat for a computer that has no concept of natural spoken or written language. Without any context for the meaning of individual words, and their subsequent meaning when woven together, a computer cannot simply deduce whether a piece of text conveys joy, anger, frustration, or otherwise. Sentiment analysis seeks to solve this problem by using natural language processing to recognize keywords within a document and thus classify the emotional status of the piece.

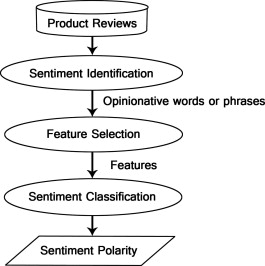

Sentiment Analysis (SA) or Opinion Mining (OM) is the computational study of people’s opinions, attitudes, and emotions toward an entity (Medhat, Hassan, and Korashy, 2014). The entity can represent individuals, events or topics. Often, the terms sentiment analysis and opinion mining are used interchangeably. However, some researchers feel that sentiment analysis and opinion mining represent slightly different notions. Cambria (2016) describes opinion mining as the process of extracting and analyzing one’s opinion about an entity, while sentiment analysis identifies the sentiment expressed in a text and then analyzes it. For our project, we will treat the two as interchangeable, since they overlap heavily and are inextricably linked: ultimately, the goal of both processes is to identify the sentiment (and, by extension, the opinions) nested in the input data. In general, sentiment analysis approaches are carried out as shown in Figure 1 from Medhat, Hassan, and Korashy (2014): the text input is parsed into its constituent words, and any known emotional associations are used to identify the sentiment of the individual constituents, which ultimately contribute to the overall sentiment polarity of the input.

Understanding and awareness of others’ opinions on certain products, stocks, or even political events is of value not only to the general population, but also to marketers, public relations officials, reporters and advertisers alike (Feldman, 2013; Rao et al., 2014). These far-reaching applications of sentiment analysis have propelled it to become one of the fastest growing areas of research at the intersection between computer science and linguistics.

Basic sentiment analysis algorithms use natural language processing (NLP) to classify documents as positive, neutral, or negative. Programmers and data scientists write software that feeds documents into the algorithm and stores the results in a way that is useful for clients to use and understand. Keyword spotting is the simplest technique leveraged by sentiment analysis algorithms. Input data is scanned for obviously positive and negative words like "happy", "sad", "terrible", and "great". Algorithms vary in the way they score documents to decide whether they indicate overall positive or negative sentiment. Different algorithms have different libraries of words and phrases which they score as positive, negative, and neutral (Pang et al., 2002). When exploring these algorithms, you might run into terms for these libraries of words such as "bag of words" or "emotional dictionary".
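To make keyword spotting concrete, the following is a minimal sketch rather than any published algorithm. The two word lists and the function name `keyword_sentiment` are hypothetical and chosen only for illustration; real systems rely on much larger lexicons and more careful scoring.

```python
import re

# Hypothetical mini-lexicons; real systems use far larger word lists.
POSITIVE_WORDS = {"happy", "great", "good", "love"}
NEGATIVE_WORDS = {"sad", "terrible", "bad", "hate"}


def keyword_sentiment(text):
    """Classify text by counting positive and negative keywords."""
    tokens = re.findall(r"[a-z']+", text.lower())
    positives = sum(t in POSITIVE_WORDS for t in tokens)
    negatives = sum(t in NEGATIVE_WORDS for t in tokens)
    score = positives - negatives
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(keyword_sentiment("What a great movie, I am so happy!"))
print(keyword_sentiment("The plot was terrible."))
```

Because every keyword carries the same weight in this sketch, a text containing one positive and one negative word is scored as neutral.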

While this approach was the cornerstone of the beginnings of sentiment analysis, it is not without its shortcomings. Consider the text, "The service was terrible, but the food was great!" It contains both positive and negative words, which would be weighed equally, yet an equal weighting may not accurately convey the sentiment of the overall phrase. Thus, more advanced algorithms split sentences when words like "but" appear; such a case is called a "contrastive conjunction". The sentence would then be parsed as "The service was terrible" AND "But the food was great!", meaning that the sentence generates two or more scores, which must then be consolidated. This is often referred to as binary sentiment analysis (Pang et al., 2002).
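The splitting step described above can be sketched as follows. This is an illustration under stated assumptions: the word lists, the list of contrastive conjunctions, and in particular the `consolidate` rule (which weights the final clause more heavily) are hypothetical choices, not a method taken from the cited literature.

```python
import re

# Hypothetical mini-lexicons and conjunction list, for illustration only.
POSITIVE_WORDS = {"great", "good", "happy"}
NEGATIVE_WORDS = {"terrible", "bad", "sad"}
CONTRASTIVE = r"\b(?:but|however|although)\b"


def clause_score(clause):
    """Score one clause as (#positive keywords - #negative keywords)."""
    tokens = re.findall(r"[a-z']+", clause.lower())
    return (sum(t in POSITIVE_WORDS for t in tokens)
            - sum(t in NEGATIVE_WORDS for t in tokens))


def split_and_score(sentence):
    """Split a sentence at contrastive conjunctions and score each clause."""
    parts = re.split(CONTRASTIVE, sentence, flags=re.IGNORECASE)
    clauses = [p.strip(" ,.!") for p in parts]
    return [(c, clause_score(c)) for c in clauses if c]


def consolidate(scores, last_weight=2.0):
    """Assumed rule: the clause after the conjunction counts more."""
    if not scores:
        return 0.0
    return sum(scores[:-1]) + last_weight * scores[-1]


scored = split_and_score("The service was terrible, but the food was great!")
print(scored)
print(consolidate([score for _, score in scored]))
```

Other consolidation rules (for example, a plain sum or an average) are equally plausible; the weighting here only shows that the per-clause scores need some explicit rule to become one label.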

Traditionally, sentiment analysis algorithms made use of movie reviews, and over the years movie review datasets have grown rapidly with the rise of online platforms where users can post such reviews in an organized manner, such as IMDB [1] and Rotten Tomatoes [2]. SentiWordNet-based approaches have been used to identify the dominant sentiment of movie reviews, using two different linguistic feature selections (comprising adjectives, adverbs and verbs) together with n-gram feature extraction. In the aspect-oriented approach, the textual reviews of a movie are analyzed and a sentiment label is assigned to each aspect. A score is generated from these sentiment labels, and the scores for each aspect are aggregated across the different reviews. Finally, based on all parameters, a net sentiment profile of the movie is generated.
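The aspect-oriented aggregation can be illustrated with a short sketch. The label-to-score mapping and the use of a simple mean are assumptions made for this example; the source does not specify how the per-aspect scores are combined.

```python
from collections import defaultdict

# Assumed numeric encoding of sentiment labels (not from the source).
LABEL_SCORE = {"positive": 1, "neutral": 0, "negative": -1}


def sentiment_profile(reviews):
    """Average per-aspect scores across reviews.

    reviews: list of dicts mapping an aspect name to a sentiment label.
    Returns a dict mapping each aspect to its mean score in [-1, 1].
    """
    scores = defaultdict(list)
    for aspect_labels in reviews:
        for aspect, label in aspect_labels.items():
            scores[aspect].append(LABEL_SCORE[label])
    return {aspect: sum(vals) / len(vals) for aspect, vals in scores.items()}


reviews = [
    {"acting": "positive", "plot": "negative"},
    {"acting": "positive", "plot": "neutral", "music": "positive"},
]
print(sentiment_profile(reviews))
```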

Naturally, much of the work in sentiment analysis has focused on highly subjective texts, such as blogs or product and movie reviews. In pieces such as news articles and other media reports, however, such opinions are much more understated. Although support or criticism is sometimes expressed, the bias or sentiment of the journalist is often conveyed indirectly, for instance by highlighting some facts while possibly omitting others, or through the choice of words (Patil and Yalagi, 2016). The news and media avenue has not been neglected by the sentiment analysis field: systems such as FinSentS, a sentiment analysis and news analytics engine, analyze news items for sentiment using dynamic data sources such as online news stories and streaming data such as blogs. These sources are queried with one or more variables instead of hard-coded values, which allows data to be retrieved dynamically. As a byproduct of the internet boom, the explosion of social media has led to an increasing use of Twitter messages to determine consumer sentiment towards a brand. The existing literature on Twitter sentiment analysis uses various feature sets and methods, many of which are adapted from more traditional text classification problems (Martínez-Cámara et al., 2014). Moreover, a reduced Twitter-specific lexicon, which is significantly smaller (only 187 features) than those used for newspapers or movies, has been reported to reduce modeling complexity, maintain a high degree of coverage over a Twitter corpus, and yield improved sentiment classification accuracy (Ghiassi, Skinner, and Zimbra, 2013). General-purpose tools are also available, such as TextBlob, a Python (2 and 3) library for processing textual data. It provides a simple API for common natural language processing (NLP) tasks such as part-of-speech tagging, noun phrase extraction, sentiment analysis, classification, translation, and more.

While there has been extensive work done in the arena of sentiment analysis, with many algorithms citing 80% accuracy rates of sentiment detection, it is clear from our discussion above that the general direction in the field is the development of algorithms that are targeted at a particular type of text data (e.g. movie reviews, tweets, or news articles). While comparisons are made between algorithms analyzing the same type of text data, we found little discussion of how algorithms compare across text modalities. With such a large variety of algorithms available at present, and such a wide array of methodologies, would an algorithm not originally designed for a particular type of data still be able to analyze that data at the same level of accuracy as its native data?

Thus, we propose a project to compare sentiment analysis algorithms developed around different plain-text lexicons, such as Twitter, newspaper articles, and movie reviews, and to evaluate the accuracy (degree of agreement) with which these algorithms classify emotions compared to humans and compared to each other. There will be two sets of accuracy benchmarks: one being a control, developed by having humans classify the sentiment of the datasets, and the other being the results yielded by an algorithm originally constructed for that data type. We therefore plan to explore whether an algorithm is able to achieve the same level of accuracy when dealing with a data type it was not originally built for, and how these results compare to human classification. These data would inform the merits of combining methods to optimize sentiment analysis, and would offer insight into which types of algorithms (and therefore methods) are best suited to which type of text data.

Hypothesis

We hypothesize that while algorithms are likely to be able to analyze text types in different domains, each algorithm’s interpretation of the same piece of text may vary, as different techniques are used in each algorithm to analyze the text despite aiming for the same goal. Thus, the algorithms may not necessarily agree to a high degree on the overall sentiment of a piece.

Interdisciplinary Nature of Project

The heart of sentiment analysis lies at the intersection between computer science and linguistics. In order to automate a fundamental human ability, computer scientists must develop algorithms that emulate human cognitive processes; such algorithms allow for efficient organization and retrieval of data and provide generic techniques for modeling, understanding, and solving different problems. Algorithmics is a modern and active area of computer science, and continues to develop rapidly. Sentiment analysis algorithms seek the best working solution to identify the sentiment of texts as a human would. Developing such algorithms requires a deep understanding of natural language and of how language is expressed and perceived, which naturally pulls in linguistics. As sentiment algorithms are primarily built to evaluate text, and in particular its emotionality, linguistics provides the lexical resources and the distributional characteristics of terms and features in the language that are required to detect sentiment. Linguistics establishes the context that would otherwise be missing from a computer's interpretation of plain text, and which is essential for accurate sentiment detection. As language evolves, such algorithms must evolve alongside it, and this intertwined nature of the two disciplines characterizes the essence of sentiment analysis.

Methods

To maximize representation of the variety of algorithms currently available, several algorithms were selected for each of the main types of text data being explored in the sentiment analysis field: traditional blogs/newer online social media (considered microblogging), news articles, and movie reviews. In particular, we have selected 8 algorithms to compare: SentiCircles (Saif, Fernandez, He, and Alani, 2014), designed for Twitter (microblogging); SentBuk (Ortigosa, Martín, and Carro, 2014), designed for Facebook messages and statuses (microblogging); Nasukawa and Yi (2003), designed for traditional blog posts; Raina (2013) and Godbole, Srinivasaiah, and Skiena (2007), both designed around news articles; and Pang and Lee (2002), Pouransari and Ghili (2014) and SentiWordNet 3.0 (Baccianella, Esuli, and Sebastiani, 2010), all designed around movie reviews.

Algorithm Descriptions

SentiCircles (2014) is a sentiment analysis approach built around Twitter data (e.g. a tweet). It parses this data into words that are then plotted in a circle with polar coordinates and four quadrants: quadrant I being very positive, quadrant II positive, quadrant III negative and quadrant IV very negative. The output is a final sentiment classification of the tweet as positive, neutral or negative, calculated as the median sentiment over the polar coordinates and corresponding sentiments of the words plotted in the SentiCircle.

SentBuk (2014) is a sentiment analysis approach built specifically for Facebook, which accepts Facebook status posts and wall posts. Words are parsed, tagged with sentiments and assigned a score from -1.0 to 1.0. The final output is a sentiment class: “positive”, “neutral” or “negative”.

Godbole, Srinivasaiah, and Skiena (2007) developed a sentiment analysis approach around news articles. Input is parsed and words are associated with a polarity (positive or negative, as a numerical value). The algorithm queries both synonyms and antonyms of each word: synonyms are assigned the same polarity and antonyms the opposite polarity. The final score, computed as the sum of all the word scores, is either positive or negative.
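The synonym/antonym idea can be sketched in a few lines. This is only an illustration of the principle as summarized above, not the authors' implementation: the seed words, the tiny thesaurus, the single expansion hop, and the tie-breaking rule (a total of zero is reported as positive) are all assumptions made for the example.

```python
import re

# Hypothetical seed polarities and thesaurus entries, for illustration only.
SEEDS = {"good": 1, "bad": -1}
SYNONYMS = {"good": ["fine", "excellent"], "bad": ["poor", "awful"]}
ANTONYMS = {"good": ["bad", "poor"], "bad": ["good"]}


def expand_lexicon(seeds, synonyms, antonyms):
    """Expand seeds by one hop: synonyms inherit, antonyms flip polarity."""
    lexicon = dict(seeds)
    for word, polarity in seeds.items():
        for syn in synonyms.get(word, []):
            lexicon.setdefault(syn, polarity)
        for ant in antonyms.get(word, []):
            lexicon.setdefault(ant, -polarity)
    return lexicon


def article_polarity(text, lexicon):
    """Sum word polarities; return (total, label)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = sum(lexicon.get(t, 0) for t in tokens)
    # Assumed tie-break: a total of zero is reported as positive.
    return total, ("positive" if total >= 0 else "negative")


lexicon = expand_lexicon(SEEDS, SYNONYMS, ANTONYMS)
print(article_polarity("Excellent quarter despite poor weather and awful traffic", lexicon))
```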

Raina's (2013) approach takes news article data as input. Sentences are parsed into concepts, which are then assigned polarities. The final polarity score ranges from -1.0 to 1.0 and the final output is a sentiment class: “positive”, “negative” or “neutral”.
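Both SentBuk and Raina's approach are described as turning a score between -1.0 and 1.0 into one of three classes. A minimal sketch of such a mapping is shown below; the width of the neutral band (`neutral_band`) is a hypothetical parameter, since the thresholds used by the original systems are not given here.

```python
def polarity_class(score, neutral_band=0.1):
    """Map a polarity score in [-1.0, 1.0] to a sentiment class.

    neutral_band is an assumed threshold: scores whose absolute value
    does not exceed it are treated as neutral.
    """
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must lie in [-1.0, 1.0]")
    if score > neutral_band:
        return "positive"
    if score < -neutral_band:
        return "negative"
    return "neutral"


for s in (0.6, 0.05, -0.4):
    print(s, polarity_class(s))
```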

The Pang and Lee (2002) approach takes in movie reviews and, using three standard machine learning techniques (Naive Bayes, maximum entropy classification, and support vector machines), classifies each review as either positive or negative.
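Of the three techniques, Naive Bayes is the simplest to illustrate. The sketch below is a generic multinomial Naive Bayes classifier with add-one smoothing over unigram counts; it is not a reproduction of the original experiments, and the four training reviews are invented for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train_naive_bayes(labelled_reviews):
    """Collect per-class word counts from (text, label) pairs."""
    word_counts = {}        # label -> Counter of word frequencies
    doc_counts = Counter()  # label -> number of training documents
    vocab = set()
    for text, label in labelled_reviews:
        tokens = tokenize(text)
        word_counts.setdefault(label, Counter()).update(tokens)
        doc_counts[label] += 1
        vocab.update(tokens)
    return word_counts, doc_counts, vocab


def classify(text, model):
    """Return the label with the highest log-posterior."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_logp = None, -math.inf
    for label in doc_counts:
        logp = math.log(doc_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in tokenize(text):
            if tok in vocab:  # words never seen in training are ignored
                logp += math.log((word_counts[label][tok] + 1) / denom)
        if logp > best_logp:
            best_label, best_logp = label, logp
    return best_label


# Invented toy training data, for illustration only.
TRAINING = [
    ("a wonderful moving film", "positive"),
    ("great acting and a great story", "positive"),
    ("a dull boring plot", "negative"),
    ("terrible acting and a boring story", "negative"),
]
model = train_naive_bayes(TRAINING)
print(classify("a great and wonderful story", model))
print(classify("boring and terrible", model))
```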

SentiWordNet 3.0 (2010) is also used here as an algorithm for movie reviews. Its process consists of two main steps. In Step 1, words are parsed and annotated automatically as being "positive", "negative" or "objective" (neutral). In Step 2, an overall score, which represents "positive", "negative" or "neutral" sentiment for the whole text, is calculated in the "random-walk step", which is mathematically based and fully described in Esuli and Sebastiani (2007).

Pouransari and Ghili (2014) also took movie reviews as data and used bag-of-words and skip-gram word2vec models followed by various classifiers, including random forest, SVM, and logistic regression, to assign an overall sentiment of positive or negative to the movie review.

Nasukawa and Yi's (2003) approach to sentiment analysis was based on analyzing text from webpages. This algorithm is centered around the fact that adjectives often confer polarity to phrases, and it picks out key words such as “good” and “disappoint”, which are associated with positive and negative sentiment respectively. These keywords contribute to the overall sentiment of the sentence, which can be positive, negative or neutral.

Summary of Algorithms

| Algorithm | Input Data Type | Output |
|---|---|---|
| SentiCircles (2014) | New Social Media (microblogging) | Positive, Neutral or Negative |
| SentBuk (2014) | New Social Media (microblogging) | Positive, Neutral or Negative |
| Nasukawa and Yi (2003) | Traditional Blogging | Positive, Neutral or Negative |
| Godbole, Srinivasaiah, and Skiena (2007) | News Articles | Positive or Negative |
| Raina (2013) | News Articles | Positive, Neutral or Negative |
| Pang and Lee (2002) | Movie Reviews | Positive or Negative |
| SentiWordNet 3.0 (2010) | Movie Reviews | Positive, Neutral or Negative |
| Pouransari and Ghili (2014) | Movie Reviews | Positive or Negative |

Dataset Descriptions

We will utilize data from four publicly available datasets: the Stanford Sentiment Gold Standard Dataset, the Kaggle IMDB Dataset, the Kaggle News Article Dataset, and the ICWSM 2009 Spinn3r dataset.

Stanford Sentiment Gold Standard Dataset: This dataset contains a corpus of tweets, with a total of 2034 tweets.

Kaggle IMDB Dataset: This dataset originally contains 100,000 IMDB movie reviews that were previously selected for use in sentiment analysis. For the purposes of this study, we will utilize only the half of the dataset that has no rating labels, so as not to bias the human subjects during the manual sentiment classification phase.

Kaggle News Article Dataset: The Kaggle News Article dataset was extracted from the https://www.thenews.com.pk website. It contains business and sports related news articles from 2015 to early 2017. Each entry includes the heading of the article, the article itself, and the date and location it was posted. For our purposes, we will simply use the article text itself as our input data.

ICWSM 2009 Spinn3r Dataset: The ICWSM 2009 Spinn3r dataset is a collection of raw (text-extracted) blog posts created at Stanford University.

Accuracy (Agreement Score) Evaluation

Control Group As a control, we aim to recruit a group of human participants to manually classify the sentiment of data extracts of each data type. As a reference, we looked to the development of SentiWordNet 3.0 by Baccianella, Esuli, and Sebastiani (2010), who employed 5 human annotators to construct a smaller, manually annotated subset of the movie review dataset they utilized. Since we are adding two extra types of data, namely news articles and blogs/microblogs, we aim to recruit 20 annotators to manually annotate a subset of each of the 4 aforementioned datasets. As we have a larger volume of data overall, we would welcome more annotators should they be available, as a greater sample size will strengthen the benchmark for the human-identified sentiment of the data. We will provide the data pieces in a randomized order to each participant to minimize any potential order bias. We chose to include this control group because, in the sentiment analysis literature, such manually labelled texts are often used as benchmarks to evaluate the performance of different sentiment analysis methods (Nasukawa and Yi, 2003; Nielsen, 2011). In addition, this will ensure that the algorithms are performing the task they were designed to do: detect emotions as a human would.
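One way the per-participant randomization could be implemented is sketched below. The function name, the item identifiers, and the `base_seed` value are hypothetical; the point is only that each annotator receives a different but reproducible ordering of the same items.

```python
import random


def presentation_order(item_ids, participant_id, base_seed=2024):
    """Return a reproducible, participant-specific shuffle of item_ids.

    base_seed is an arbitrary assumed constant; seeding with the
    participant id makes each annotator's order repeatable.
    """
    rng = random.Random(f"{base_seed}-{participant_id}")
    order = list(item_ids)
    rng.shuffle(order)
    return order


items = ["tweet_001", "tweet_002", "tweet_003", "tweet_004", "tweet_005"]
for participant in ("P01", "P02"):
    print(participant, presentation_order(items, participant))
```

Seeding per participant means the exact order shown to any annotator can be regenerated later if a response needs to be audited.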

Algorithm Comparisons To evaluate the relative accuracy of each algorithm with each type of data, each selected algorithm will be tested with a dataset of its own type and with the other, non-native datasets. The results from the algorithm processing its own native data and the human control results will act as the baselines for comparison. Using these baselines, the degree of agreement between algorithms will be calculated as a proxy for an accuracy score. All input data, regardless of type, will be reduced to plaintext (i.e. excluding any emoticons), as the majority of algorithms cannot interpret anything beyond plaintext.

For example, say we start by evaluating the Twitter algorithm, SentiCircles; this will be our native algorithm. In Phase I, a cohort of human subjects will identify the sentiment (given the choices positive, negative and neutral) of a subset of the Stanford Sentiment Gold Standard Dataset (Twitter dataset), creating the control responses. Then, in Phase II, we would run the Stanford Sentiment Gold Standard Dataset through the SentiCircles algorithm, which was designed for Twitter. This will provide the second baseline for comparison. At this point, we would assign SentiCircles an agreement score relative to the manual classification outputs. For instance, if SentiCircles identified all tweets with the exact same sentiments as the humans, the agreement score would be 100%; if the answers were 80% equivalent, the score would be 80%, and so on. Lastly, the Stanford Sentiment Gold Standard Dataset will be fed through all 7 other algorithms, and agreement scores will then be calculated relative to the human control responses as well as to the native algorithm. Overall, each algorithm will be given all four datasets to evaluate, and each algorithm will have two agreement scores, one relative to the native algorithm and one relative to the human control, for each data type (e.g. Twitter or news articles). By having both scores, we will be able to observe agreement across algorithms, across data modalities, and with human classification.
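The agreement score described above is simple percent agreement, which can be sketched as follows. The label lists are invented for illustration, and the sketch assumes that the human responses have already been reduced to a single control label per item (how multiple annotators are combined is not specified here).

```python
def agreement_score(labels_a, labels_b):
    """Percentage of items on which two label sequences agree."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both label lists must cover the same items")
    if not labels_a:
        raise ValueError("at least one item is required")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return 100.0 * matches / len(labels_a)


# Invented example labels for five tweets.
human = ["positive", "negative", "neutral", "positive", "negative"]
native = ["positive", "negative", "neutral", "positive", "positive"]
other = ["positive", "negative", "positive", "negative", "positive"]

print(agreement_score(native, human))  # native algorithm vs. human control
print(agreement_score(other, human))   # non-native algorithm vs. human control
print(agreement_score(other, native))  # non-native vs. native algorithm
```

Each non-native algorithm thus receives two numbers per dataset, one against the human control and one against the native algorithm.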

Protocol Deviations

When algorithms that only output positive or negative labels are the native algorithm (e.g. Pang and Lee (2002) and Pouransari and Ghili (2014)), any piece of data rated neutral by humans or by the other algorithms will be excluded from the agreement score calculations to ensure that a direct comparison can be made between outputs.
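A sketch of this exclusion rule is given below, under the assumption that agreement is computed as simple percent agreement over the remaining items. The function name and the example labels are hypothetical.

```python
def binary_agreement(native_labels, other_labels):
    """Percent agreement after dropping items the comparison source rated neutral.

    native_labels come from a positive/negative-only algorithm;
    other_labels come from humans or a three-class algorithm.
    Returns (score, number_of_items_kept), or None if nothing remains.
    """
    if len(native_labels) != len(other_labels):
        raise ValueError("both label lists must cover the same items")
    kept = [(n, o) for n, o in zip(native_labels, other_labels) if o != "neutral"]
    if not kept:
        return None
    matches = sum(n == o for n, o in kept)
    return 100.0 * matches / len(kept), len(kept)


# Invented example: six reviews, two of which humans rated neutral.
native = ["positive", "negative", "positive", "negative", "positive", "positive"]
human = ["positive", "neutral", "positive", "negative", "neutral", "negative"]
print(binary_agreement(native, human))
```

Reporting the number of items kept alongside the score makes it visible how much data the exclusion removed.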

Discussion

The body of sentiment analysis literature has traditionally been represented by algorithms that are focused on one type of data, while generalized or ensemble algorithms for all types of texts have been less explored. As such, there is an implicit assumption that an algorithm designed for Twitter would in turn be the most accurate and reliable approach to gauge the sentiment of tweets. While comparisons of algorithms exist, they are made within a single data type; for example, newer Twitter algorithms are evaluated against older Twitter algorithms, as in Gonçalves et al. (2013). To better understand the capacity and capabilities of these algorithms, our project will add to the body of literature by comparing algorithms across different text platforms. We specifically decided on this type of protocol as it allows us to thoroughly compare various algorithms against different parameters in a systematic manner. It provides insight into the accuracy of every algorithm compared to validated baselines, which should yield comprehensive results.

Gonçalves et al. (2013) conducted a relatively analogous study, which compared eight different sentiment analysis algorithms on an existing Twitter dataset. Similarly, these algorithms were also assessed for agreement in sentiment detection of the given text. Gonçalves et al. (2013) found great variance in agreement across algorithms, with scores ranging from 33% agreement to 80% agreement. The implications here are highly relevant to our study, as they indicate that even the same text, when analyzed by different sentiment tools, can receive varied sentiment interpretations. Interestingly, some pairs of algorithms were recorded to have a complete polarity shift with less than 50% agreement on the overall sentiment, such that one algorithm decided the sentiment was positive while the other decided it was negative.

Considering the results of Gonçalves et al.'s (2013) study and the general trend in the literature so far, we predict that each algorithm will have the highest agreement with the human control responses when encountering the data type it was originally designed for. Since these sentiment analysis approaches and algorithms are built around one type of text data rather than being generalized across all text inputs, each algorithm is tailored to the nuances of its specific type of data. As such, these algorithms may not be as robust across different data modalities, and therefore our algorithms, when compared, may display low levels of agreement.

These results would contribute further considerations to the development of new sentiment analysis algorithms. Should the results show, for example, that the Twitter algorithm indeed has the highest agreement with the human baselines on Twitter data, then this may be an indication that the optimal way to continue developing sentiment analysis algorithms is to identify a type of target data and specialize the algorithm for that purpose. Even if low agreement scores between algorithms arise, this would be valuable information, especially when considered alongside the agreement scores with the human control responses, since this could shed light on which types of algorithms perform better against which type of data.

Our study complements the work already done by Gonçalves et al. (2013) and others who have begun to compare algorithms, and adds to the body of literature by including more than one type of text. Gonçalves et al. (2013) have since developed a combinational sentiment analysis system, called iFeel, which has the highest competitive accuracy when pitted against the 8 algorithms compared in their original study. It is important to note that syntactic relations are significant features for sentiment classification, but this brings forth a need for higher computational complexity and a greater capacity to analyze nuance. With combinational techniques, perhaps certain algorithms can fill in the computational gaps of others and better characterize existing datasets. Our study, in combination with works such as Gonçalves et al. (2013), will be a stepping stone and a source of important data when moving towards broader implementations of sentiment analysis methods on different types of text inputs. Depending on the results, it could help decide what type of algorithm, or which of the already existing algorithms, is the best fit (yields the highest accuracy) for a given type of text. Further studies to validate such results would be valuable, and combinational algorithms designed to handle increasingly complex text expression should be tested further to identify the areas in which they excel and the areas that still require improvement.

Limitations of Proposed Study

While our study aims to provide robust empirical data for the comparison of sentiment analysis algorithms, it is limited to the scope of the algorithms that we have chosen to represent in our study. While we tried our best to select representative algorithms for each data type, so many such algorithms exist that, although these results may be informative as a preliminary step, they may not be fully representative of all the algorithms that are currently available. Further, all the algorithms examined in this study are based around an English lexicon, so the study does not speak to whether these results hold across language and cultural differences; this would be an interesting avenue to take in the future to expand the implications of this study.

In addition, these datasets are from different time periods, which may not account for changes in language over time, such as the rise of slang and abbreviations on the web and in online social media. Algorithms that were developed before online social media was widely used and accessible may not recognize these words as part of their analyzable lexicon, and may therefore misinterpret the overall sentiment of newer social media texts because the polarity of slang and abbreviations is not appropriately considered.

Conclusion

Through this project, we gained exposure to the booming field of research that is sentiment analysis and came to realize its wide-reaching applications, and how pervasive these algorithms are in our everyday lives. It was stimulating to learn about the variety of algorithms available and the vast differences in approaches to ultimately achieve the same task—detecting a sentiment or overall opinion of a piece of text. We learned that in order to create a comprehensive study, we must greatly consider the past work that has been done in this field, and design our project in a way that will contribute to the body of existing research. In this way, we found that a project such as this could help fill the gaps in informing the comparison between algorithms and the potential usage of ensemble algorithms as a potentially effective way to analyze different text environments.

The quickly changing landscape of media and the increasingly varied avenues of self-expression through text hasten language change and bring greater complexity to the interpretation of sentiment in text. A greater need for “reading between the lines” is apparent, and algorithms should continue to be developed to do so and be evaluated accordingly in empirical studies. Creating algorithms that can analyze a combination of data from different domains may lead to better overall performance of sentiment analysis algorithms in general. Work thus far seems to indicate that a degree of transferability of lexicons exists between different text platforms, so this may be a direction worth taking in the future of sentiment analysis system development.

While much progress has been made in the field of sentiment analysis, the evolution of language will continue to propel the refinement of these algorithms in parallel. We hope this project will serve as a stepping stone to the development of algorithms that are better attuned to the nuanced expression of emotion through text.

Bibliography

Baccianella, S., Esuli, A., & Sebastiani, F. (2010, May). SentiWordNet 3.0: An Enhanced Lexical Resource for Sentiment Analysis and Opinion Mining. In LREC (Vol. 10, pp. 2200-2204).

Cambria, E. (2016). Affective computing and sentiment analysis. IEEE Intelligent Systems, 31(2), 102-107.

Feldman, R. (2013). Techniques and applications for sentiment analysis. Communications of the ACM, 56(4), 82-89.

Ghiassi, M., Skinner, J., & Zimbra, D. (2013). Twitter brand sentiment analysis: A hybrid system using n-gram analysis and dynamic artificial neural network. Expert Systems with applications, 40(16), 6266-6282.

Gonçalves, P., Araújo, M., Benevenuto, F., & Cha, M. (2013, October). Comparing and combining sentiment analysis methods. In Proceedings of the first ACM conference on Online social networks (pp. 27-38). ACM.

Godbole, N., Srinivasaiah, M., & Skiena, S. (2007). Large-Scale Sentiment Analysis for News and Blogs. ICWSM, 7(21), 219-222.

Kouloumpis, E., Wilson, T., & Moore, J. D. (2011). Twitter sentiment analysis: The good the bad and the omg!. ICWSM, 11(538-541), 164.

Martínez-Cámara, E., Martín-Valdivia, M. T., Urena-López, L. A., & Montejo-Ráez, A. R. (2014). Sentiment analysis in Twitter. Natural Language Engineering, 20(1), 1-28.

Medhat, W., Hassan, A., & Korashy, H. (2014). Sentiment analysis algorithms and applications: A survey. Ain Shams Engineering Journal, 5(4), 1093-1113.

Nasukawa, T., & Yi, J. (2003, October). Sentiment analysis: Capturing favorability using natural language processing. In Proceedings of the 2nd international conference on Knowledge capture (pp. 70-77). ACM.

Nielsen, F. Å. (2011). A new ANEW: Evaluation of a word list for sentiment analysis in microblogs. arXiv preprint arXiv:1103.2903.

Ortigosa, A., Martín, J. M., & Carro, R. M. (2014). Sentiment analysis in Facebook and its application to e-learning. Computers in Human Behavior, 31, 527-541.

Pang, B., Lee, L., & Vaithyanathan, S. (2002, July). Thumbs up?: sentiment classification using machine learning techniques. In Proceedings of the ACL-02 conference on Empirical methods in natural language processing-Volume 10 (pp. 79-86). Association for Computational Linguistics.

Patil, P. R., & Yalagi, P. S. Sentiment Analysis of movie reviews using SentiWordNet Approach.

Pouransari, H., & Ghili, S. (2014). Deep learning for sentiment analysis of movie reviews. Technical report, Stanford University.

Rao, Y., Lei, J., Wenyin, L., Li, Q., & Chen, M. (2014). Building emotional dictionary for sentiment analysis of online news. World Wide Web, 17(4), 723-742.

Raina, P. (2013, December). Sentiment analysis in news articles using sentic computing. In Data Mining Workshops (ICDMW), 2013 IEEE 13th International Conference on (pp. 959-962). IEEE.

Saif, H., Fernandez, M., He, Y., & Alani, H. (2014, May). Senticircles for contextual and conceptual semantic sentiment analysis of twitter. In European Semantic Web Conference (pp. 83-98). Springer, Cham.

Taboada, M. (2016). Sentiment analysis: An overview from linguistics. Annual Review of Linguistics, 2, 325-347.