GROUP26: Ambient Intelligence and Child Cognitive Development

COGS200 project by Group 26:

Michael Doswell (#85580728)

Matt Hangad (#17934143)

Alvin Lee (# )

Wennie Wu (# )

Introduction

Ambient Intelligence

Ambient Intelligence (AmI) refers to information and intelligence embedded within hidden networks of interconnected systems. The advent of AmI was primarily spurred by efforts to enhance the human experience. In the envisioned world, AmI systems support people's daily activities in an effective and unobtrusive way. These systems are characterized by technologies that are adaptive, context-aware, transparent, and ubiquitous:

- Adaptive: ability to anticipate, change, and/or tailor responses to user needs

- Context-aware: ability to recognize users and their surrounding environment

- Transparent: seamlessly and unobtrusively integrated infrastructure

- Ubiquitous: massively distributed infrastructure

Modern examples of Ambient Intelligence include the Gator Tech Smart House and the Aware Home Project. The former provides an assistive environment that supports independent living for the elderly and for individuals with disabilities; the system enhances monitoring and intervention practices by incorporating a self-sensing service. The latter adds assistive functions to an ordinary home, such as sensing and tracking technologies for locating lost belongings.

Previous Research

Circumspection is required when implementing AmI technology. Current research on the impacts of AmI on cognition is largely polarized. Previous work suggests AmI may be detrimental to cognition, since users unconsciously outsource cognitive resources to available devices. For example, reduced hippocampal grey matter was observed among participants who regularly played certain types of video games. The researchers suggest that in-game navigation systems eliminate the need for users to allocate resources to spatial learning and navigation (West et al., 2017). If so, such effects could be more pronounced in humans immersed in AmI environments, especially during critical periods of development.

Conversely, some researchers suggest AmI may improve aspects of child cognition. For instance, researchers found that AmI enhances the development of children's representational skills. They suggest that AmI provides an informal learning environment that actively primes children's representational world, and that interactions with AmI may bridge the gap between representation and physical execution (Greenfield and Calvert, 2004).

However, previous research has largely been piecemeal. That is, research has often fragmented aspects of AmI for targeted purposes, such as diagnostics (Ellingsen, 2016). As a result, comprehensive investigations are required for a better understanding of the impacts of AmI on cognitive development. This need is intensified by the pervasiveness of Ambient Intelligence: these technologies introduce an overload of information through hidden networks, transmission systems, and interfaces. Moreover, the accessibility of networked information, through the expanding quantity, variety, and mobility of access points, is expected to continue growing rapidly in coming decades (Biocca, 2000).

Project Overview

We propose a longitudinal, correlational study to investigate the relationship between AmI and children's cognitive development over a 15-year period. We expect that children reared in environments saturated with Ambient Intelligence will perform better on cognitive tests than children reared in lower-AmI environments. We also expect diminished cognitive performance when children from high-AmI environments are tested in the absence of AmI. Lastly, we expect that the level of AmI in a child's home environment will correlate with the levels of trust in, and positive attributions toward, AmI devices reported by the child. In brief, the level of AmI presence in each household will be evaluated against a derived coding scheme. Cognitive assessments will be conducted at three locations: the participant's home, a lab playroom simulating high AmI presence, and a lab playroom simulating low AmI presence. The tests will measure functions involving memory, visual-spatial processing, quantitative knowledge, and language development. To examine children's perception of the AmI devices in their homes and the role those devices play in their lives, a questionnaire measuring familiarity and trust will be administered.

Relevant Fields to Cognitive Systems

The proposed project aims to measure the cognitive development of children raised in the presence of Ambient Intelligence technologies. In doing so, the project will primarily draw on the disciplines of Psychology and Philosophy. With regard to Psychology, the longitudinal study would constitute a comprehensive investigation of the dynamic processes of developmental change in children's cognition. The experimental design may add to contemporary cognitive testing methods, for instance by incorporating tasks that optionally utilize AmI. The study may also prove useful to clinical psychology by providing insight into individual developmental trajectories. Lastly, the study may elucidate potential considerations or concerns regarding AmI and cognitive development, which may inspire future psychological or experimental work. With regard to Philosophy, a human individual can interact with their environment to create a coupled process that can be seen as a cognitive system in its own right. The investigation would provide insight into the effects of Ambient Intelligence on human cognition, which in turn can aid the development of philosophical frameworks of the mind, such as the Extended Mind Thesis. According to the Extended Mind Thesis, the human mind is not restricted to the boundaries of the skull (Clark and Chalmers, 1998). The mind can extend into aspects of the environment to form a greater cognitive system, which can complete tasks and solve problems more efficiently. In the same way that removing part of the brain will impair one's behavioural capacities, removing aspects of the external environment from this cognitive system will impair the system's behavioural capacities. Consequently, we investigate ideas proposed in the Extended Mind Thesis by observing the contextual effects of AmI on children's cognitive development.

In application, information of this kind could also inform the development of policies surrounding the implementation of intelligent technology, particularly with regard to the welfare of users' minds and the privacy of their data.

Methods

Participant Eligibility

The sampling approach combines cluster and multistage sampling methods, adapted from previous longitudinal studies of childhood development, including the Millennium Cohort Study (MCS) and the National Institute of Child Health and Human Development's Study of Early Child Care and Youth Development (NICHD-SECCYD). These studies were selected for their comprehensiveness, representativeness of populations, and handling of data loss (Grammer et al., 2013). Participants are recruited from hospitals across Canada by applying inclusion and exclusion criteria. Inclusion criteria ensure that: 1) parents are employed full-time or part-time; 2) parents are married or cohabiting; and 3) families are recruited from ethnically and socioeconomically diverse sites. Exclusion criteria screen out: 1) families with temporary residency status; 2) families anticipating relocation; 3) children with apparent disabilities; and 4) families with limited English proficiency. Supplementary recruitment strategies include public email announcements and the distribution of advertisements and brochures to sites such as hospitals, schools, and the communities of confirmed participants. Disadvantaged and underserved sites will be oversampled to address the likely underrepresentation of low socioeconomic groups in the study (Grammer et al., 2013).

Participant Compensation

To encourage recruitment and continued participation in the study, families of participants will be compensated. Financial compensation will take two forms: a minor reward for completing each cognitive assessment set, and a major reward for completing each phase. Research volunteers tend to come from higher socioeconomic and educational backgrounds (Rosnow and Rosenthal, 1976), and this tendency may be amplified in a study involving Ambient Intelligence technologies. Monetary compensation may therefore encourage families of lower socioeconomic status to participate, balancing population representation. Children will also receive rewards such as toys and branded merchandise or souvenirs in appreciation of their contribution. Other forms of compensation include scientific feedback, media footage of participation, and certificates of study involvement and completion.

Administrator Eligibility

Selecting researchers for the longitudinal study is paramount to ensuring the significance, reliability, and validity of cognitive measurements and interpretations. The research team must consist of experts with sufficient background knowledge of child development to inform data analyses and to recognize typical and atypical behavioural patterns in participants. As the study focuses on select cognitive functions, the team can include specialists who lead sub-teams assessing each function; for instance, speech and language pathologists could assess language development. The sub-teams should consist of trained and relatable research technicians (Grammer et al., 2013). These administrators should rehearse the dynamics of cognitive assessments, including verbal and non-verbal instruction, presentation and placement of assessment materials, and transitions between assessments. Moreover, administrators must be committed to the length and requirements of the longitudinal study, which may include relatability, first-aid training, and providing regular feedback to participants and families. Administrators must also engage with community stakeholders, which may lead naturally to the recruitment of other administrators or liaisons. Employing relatable research staff who build and maintain relationships with participants is crucial to ensuring continued participation and engagement with the study (Ellingsen, 2016).

Evaluation of Ambient Intelligence Levels

Evaluation of Ambient Intelligence levels is restricted to devices that contribute work toward accomplishing explicit cognitive tasks. For example, a personal assistant device such as Amazon Alexa would be evaluated, whereas an electronic door lock would not. The AmI level of an environment (AmIQ) is expressed as the sum of the intelligence of the individual devices (D) contributing to it. The intelligence of an individual device is the mean of its scores on five AmI properties: adaptive, anticipatory, context-aware, embedded, and personalized (Cook et al., 2009). Each property is rated qualitatively against a rubric from 1 to 10, representing poor to exceptional satisfaction of that property. Individual device intelligence thus measures the expected contribution of a device to an AmI environment. For n devices, the Ambient Intelligence level is:

$$\mathrm{AmIQ} = \sum_{i=1}^{n} D_i, \qquad D_i = \frac{1}{5} \sum_{j=1}^{5} p_{ij}$$

where $p_{ij}$ is the rubric score of device $i$ on property $j$.

Example Calculation of D for Amazon Alexa

Suppose the Amazon Alexa satisfies the AmI properties adaptive, anticipatory, context-aware, embedded, and personalized with scores of 9, 4, 7, 8, and 2, respectively. Then

$$D_{\mathrm{Alexa}} = \frac{9 + 4 + 7 + 8 + 2}{5} = \frac{30}{5} = 6$$
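To make the AmIQ definition concrete (device intelligence D as the mean of five 1-10 property ratings, and AmIQ as the sum of D over qualifying devices), a minimal Python sketch follows. The function names, the `PROPERTIES` tuple, and the second device and its scores are hypothetical and for illustration only; in the study, the ratings themselves would come from a rater applying the rubric.

```python
# Hypothetical sketch of the AmIQ calculation. Property names follow the text;
# each score is a subjective 1-10 rubric rating assigned by a rater.
PROPERTIES = ("adaptive", "anticipatory", "context_aware", "embedded", "personalized")


def device_intelligence(scores: dict[str, int]) -> float:
    """D_i: the mean of one device's five AmI property scores."""
    for prop in PROPERTIES:
        if prop not in scores:
            raise ValueError(f"missing score for {prop!r}")
        if not 1 <= scores[prop] <= 10:
            raise ValueError(f"{prop!r} must be rated 1-10, got {scores[prop]}")
    return sum(scores[prop] for prop in PROPERTIES) / len(PROPERTIES)


def ami_quotient(devices: list[dict[str, int]]) -> float:
    """AmIQ: the sum of D_i over the n qualifying devices in a household."""
    return sum(device_intelligence(d) for d in devices)


alexa = dict(zip(PROPERTIES, (9, 4, 7, 8, 2)))         # scores from the worked example
other_device = dict(zip(PROPERTIES, (8, 6, 6, 9, 6)))  # hypothetical second qualifying device

print(device_intelligence(alexa))             # 6.0
print(ami_quotient([alexa, other_device]))    # 13.0
```

Because AmIQ is a plain sum, a household's score grows with the number of qualifying devices as well as with how well each device satisfies the properties.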

Cognitive Assessments

Our study employs standardized tests to measure children's cognitive development over time, informed by the methodology of the Differential Ability Scales, Second Edition (DAS-II) and the Kaufman Assessment Battery for Children, Second Edition (KABC-II). Throughout the study, cognitive tests will be performed at three locations: the participant's home, a lab playroom saturated with Ambient Intelligence, and a lab playroom without Ambient Intelligence. Each cognitive test session will take roughly 30-45 minutes, preceded by a 15-minute equilibration period and with short breaks between tasks. The study is divided into four phases: birth through 3 years of age (Phase I), 4 through 6 years (Phase II), 7 through 11 years (Phase III), and 12 through 15 years (Phase IV). Phase I focuses on establishing baselines for targeted cognitive functions, Ambient Intelligence levels, and home environments through data collection every 3 months. Phases II through IV use triannual assessments focusing on the development of memory, language, quantitative knowledge, and visual-spatial processing (see the appendix for the complete cognitive assessments). Cognitive assessments are partitioned into targeted cognitive functions and subtests. Each subtest contains sets of items of equivalent complexity, so that comparable items can be used at different locations. Subtests also contain items of differing complexity, allowing assessments to be tailored to specific ages (Elliott, 2007).
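The phase boundaries and collection intervals above can be sketched as a per-child session schedule. This minimal Python sketch assumes that "triannual" means three sessions per year (one every 4 months) and that data collection ends at the 15th birthday; both readings are our assumptions, as are the names `phase_for_age` and `session_schedule`. Phase I sessions are treated as baseline data collections rather than full cognitive assessments.

```python
from collections import Counter

# Phase boundaries in completed years of age, taken from the text.
PHASES = (
    ("I", 0, 3),     # birth through 3 years: baseline data collection
    ("II", 4, 6),
    ("III", 7, 11),
    ("IV", 12, 15),
)
STUDY_END_MONTHS = 15 * 12   # assumption: final session at the 15th birthday
PHASE_I_INTERVAL = 3         # months between Phase I collections (from the text)
LATER_INTERVAL = 4           # assumption: "triannual" = three sessions per year


def phase_for_age(age_months: int) -> str:
    """Map a child's age in months to the study phase."""
    years = age_months // 12
    for name, start, end in PHASES:
        if start <= years <= end:
            return name
    raise ValueError(f"age {age_months} months is outside the study range")


def session_schedule() -> list[tuple[int, str]]:
    """Return (age in months, phase) for every planned data collection."""
    sessions = []
    age = 0
    while age <= STUDY_END_MONTHS:
        phase = phase_for_age(age)
        sessions.append((age, phase))
        age += PHASE_I_INTERVAL if phase == "I" else LATER_INTERVAL
    return sessions


counts = Counter(phase for _, phase in session_schedule())
print(dict(counts))  # {'I': 16, 'II': 9, 'III': 15, 'IV': 10}
```

Under these assumptions each child completes 50 sessions; changing `LATER_INTERVAL` shows how strongly the total burden depends on how "triannual" is read.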

Memory

Design Recall: A collection of 30 items, borrowing from block-building and drawing tests. To test retention of visual-spatial information, a visual is presented for only five seconds before being removed. The child must then replicate the item from memory. Scores are awarded based on the accuracy of the replication and are not penalized for decorative additions or unrelated scribbles.

Picture Recognition: A collection of 20 items, each comprising a picture of one or more objects. An item is exposed for 5 to 10 seconds. Next, a modified picture, containing original and distractor objects, is provided for the child. The child must identify the original objects. A point is awarded for each correct object, and scores are penalized for each incorrect object.
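The Picture Recognition scoring rule can be sketched as follows. The text does not specify the size of the penalty or whether an item score may fall below zero, so this hypothetical sketch assumes a one-point penalty per incorrect object and a floor of zero per item; the function names are illustrative.

```python
def picture_recognition_score(original: set[str], selected: set[str],
                              penalty: int = 1) -> int:
    """Score one item: +1 per original object identified, minus `penalty`
    per distractor selected, floored at zero (the floor is an assumption)."""
    correct = len(selected & original)
    incorrect = len(selected - original)
    return max(0, correct - penalty * incorrect)


def picture_recognition_total(responses: list[tuple[set[str], set[str]]]) -> int:
    """Sum item scores over (original objects, selected objects) pairs."""
    return sum(picture_recognition_score(orig, sel) for orig, sel in responses)


print(picture_recognition_score({"cup", "ball", "key"}, {"cup", "ball", "shoe"}))  # 1
```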

Visual-Spatial Processing

Building Blocks: A collection of 15 items, of which a subset is presented visually. The child must replicate a two- or three-dimensional visual using Lego blocks. Visuals advance in complexity by introducing ambiguity, orientation, and sequences. Scores are based on the number of blocks used and on whether the replication is correct.

Picture Similarity: A collection of 35 items, whereby each item consists of a set of pictures or designs. In a simple test, the child must select the card image that best corresponds with the stimulus set. In a complex test, the child must identify the relationship shared by elements of a stimulus set.

Quantitative Knowledge

Number Progression: A collection of 40 items partitioned into simple and complex groups. In the simple group, an incomplete sequence prompts the child to draw the missing item in the empty space. In the complex group, two pairs of numbers are related by an arithmetic rule, and the child must identify the rule orally. Scores are awarded for correct responses.
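For the complex Number Progression items, test designers may want to confirm that the two pairs of numbers admit exactly one simple rule. The following hypothetical Python helper checks only two rule families, adding a constant and multiplying by a constant; the function name and the restriction to these two families are our assumptions, not part of the DAS-II or KABC-II.

```python
from fractions import Fraction


def consistent_rules(pairs: list[tuple[int, int]]) -> list[str]:
    """List the simple rules (add k, or multiply by k) that map x to y
    for every (x, y) pair in a hypothetical complex item."""
    rules = []
    differences = {y - x for x, y in pairs}
    if len(differences) == 1:
        rules.append(f"add {next(iter(differences))}")
    if all(x != 0 for x, _ in pairs):
        # Fractions keep ratios exact, e.g. 6/3 and 10/5 are both exactly 2.
        ratios = {Fraction(y, x) for x, y in pairs}
        if len(ratios) == 1:
            rules.append(f"multiply by {next(iter(ratios))}")
    return rules


print(consistent_rules([(3, 6), (5, 10)]))  # ['multiply by 2']
print(consistent_rules([(2, 5), (4, 7)]))   # ['add 3']
```

An empty result flags an item whose intended rule falls outside these two families and would need a broader check.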

Language Development and Lexical Knowledge

Similarity: From a collection of 40 three-word items, a subset is presented visually to the child. To earn a point, the child must understand each word in a set and identify the relation among them. The total score is the number of correct responses. In addition to language development, this test assesses logical reasoning and abstract thinking.

Verbal Comprehension: From a collection of 36 items, a subset of verbal directives is presented to the child to assess understanding of syntax and relational concepts, the ability to follow verbal directives and to test hypotheses, and short-term auditory memory. A correct, non-verbal execution of a directive earns a point. The total score is the number of correct responses.

Statistical Analysis

Data will be analysed using R, open-source statistical software with packages for psychometric analysis based on Item Response Theory (IRT). Using a Rasch model, raw scores from cognitive subtests will be converted to ability scores as a function of the number of items answered correctly and item difficulty (Lalanne et al., 2015). Ability scores represent an individual's raw cognitive performance. Ability scores are then standardized and transformed to T-scores with a mean (μ) of 50 and a standard deviation (σ) of 10. Composite cognitive performance scores are derived by summing the T-scores of subtests grouped by broad cognitive function (Elliott, 2007). Standardization and conversion of scores depend on context, such as Ambient Intelligence level or participant age. Correlations between Ambient Intelligence levels and children's cognitive performance are assessed using multilevel latent growth curve models of composite cognitive performance scores.
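The score pipeline described above (Rasch ability estimation, then T-score standardization, then composites) can be sketched in a few functions. The study plans to use R; this Python sketch is an illustrative stand-in, not the R implementation. It assumes item difficulties have already been calibrated, estimates ability by maximum likelihood using Newton-Raphson iterations, and standardizes against a hypothetical reference group's mean and standard deviation (for example, same-age participants). The multilevel latent growth curve models are not shown.

```python
import math


def rasch_ability(raw_score: int, difficulties: list[float],
                  tol: float = 1e-10, max_iter: int = 100) -> float:
    """Maximum-likelihood ability (theta, in logits) for a raw score under the
    Rasch model P(correct on item i) = 1 / (1 + exp(-(theta - b_i)))."""
    n = len(difficulties)
    if not 0 < raw_score < n:
        # The ML estimate diverges for zero and perfect scores.
        raise ValueError("raw score must be strictly between 0 and the item count")
    theta = sum(difficulties) / n + math.log(raw_score / (n - raw_score))  # start
    for _ in range(max_iter):
        probs = [1.0 / (1.0 + math.exp(-(theta - b))) for b in difficulties]
        expected = sum(probs)                            # expected raw score at theta
        information = sum(p * (1.0 - p) for p in probs)  # test information at theta
        step = (raw_score - expected) / information      # Newton-Raphson step
        step = max(-1.0, min(1.0, step))                 # damp large jumps
        theta += step
        if abs(step) < tol:
            return theta
    raise RuntimeError("ability estimate did not converge")


def t_scores(abilities: list[float], ref_mean: float, ref_sd: float) -> list[float]:
    """Standardize abilities against a reference group, rescaled to mean 50, SD 10."""
    return [50.0 + 10.0 * (a - ref_mean) / ref_sd for a in abilities]


def composite(subtest_t_scores: list[float]) -> float:
    """Composite score: the sum of T-scores within one broad cognitive function."""
    return sum(subtest_t_scores)


difficulties = [-1.5, -0.5, 0.0, 0.5, 1.5]  # hypothetical calibrated item difficulties
thetas = [rasch_ability(r, difficulties) for r in (1, 2, 3, 4)]
print(t_scores(thetas, ref_mean=0.0, ref_sd=1.0))
```

Zero and perfect raw scores have no finite maximum-likelihood estimate, so a real analysis would need a convention for them (for example, a Bayesian or weighted estimator); the sketch simply rejects them.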

Questionnaire

Children will additionally be asked to complete a questionnaire of the kind proposed by Bartneck et al. (2009), which provides standardized measures of people's impressions of robots on dimensions such as Likeability, Perceived Intelligence, and Perceived Safety, adapted to also measure familiarity and trust. The questionnaire will be gamified to encourage participation from younger participants, as in Druga et al. (2017).

Results

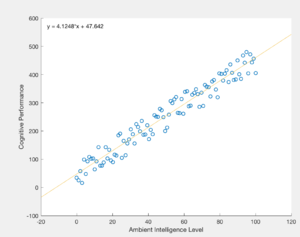

The scatter plots in this section are hypothetical, randomly generated using R, and selected to demonstrate potentially interesting correlations. For instance, the home environment is expected to give the most representative measure of a child's cognitive performance because it is familiar. There, a positive correlation between Ambient Intelligence levels and cognitive performance may suggest that AmI environments improve aspects of cognition (Figure 1). Conversely, a negative correlation may support the notion that AmI environments impair or alter cognitive development.

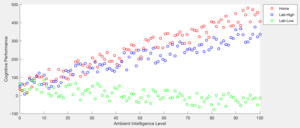

We could also investigate the role of assessment context, since large differences between settings may indicate that changes in cognitive performance are attributable to setting rather than AmI level (Figure 2). If comparable cognitive performance is observed in the home and in the high-AmI lab simulation, this would strengthen correlational claims based on AmI levels in lab settings. These claims would be further supported by a negative correlation in the unfamiliar environment of the low-AmI lab playroom. A negative correlation in low-AmI settings would also suggest that children offload cognitive resources to AmI technologies, such that cognitive abilities are diminished or lost in the absence of AmI (Figure 2). Lastly, higher cognitive performance in home environments may suggest that children adapt to, and rely on, their personal AmI technologies to solve problems. That is, AmI substitutes provided in lab settings would not be tailored to individual participants, limiting the extent to which cognitive abilities can be replicated.

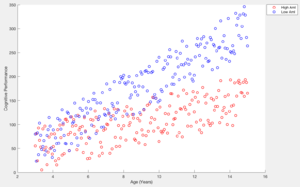

It would also be important to examine children's cognitive development across the span of the longitudinal study (Figure 3). Differences in cognitive performance between children reared in high- and low-AmI environments may change with age. For instance, early childhood cognitive performance may be improved by the presence of AmI. However, as children become heavily reliant on AmI devices, cognitive performance may become higher in individuals reared in minimal-AmI environments (Figure 3). Such results would suggest a potential long-term negative effect of AmI on children's cognitive development.

Discussion

Selection of Methodology

The study employs a longitudinal, correlational design to examine the dynamic processes of developmental change in children's cognition. Our focus on children enables us to capitalize on features such as critical periods and impressionability. Moreover, targeting children allows greater control over AmI novelty by minimizing prior exposure to AmI. A longitudinal design allows us to explore factors that cannot be experimentally manipulated, such as the magnitude of Ambient Intelligence exposure and changes in home environments over time. Such a study would provide insight into individual developmental trajectories and environmental impacts on cognitive change in children (Grammer et al., 2013). Our study may also supplement larger longitudinal efforts to collect and analyse data on children's cognitive development. Lastly, the study may inspire or extend current experimental work.

With regard to experimental design, data collection and cognitive assessments were partitioned into four phases to reflect the cognitive stages proposed by Piaget and important growth milestones (Piaget and Inhelder, 1969). Additionally, contemporary standardized cognitive tests, including the DAS-II and KABC-II, were consulted to inform our experimental design, primarily regarding measures of cognitive development and test durations. These two instruments were selected for their comparable age ranges and testing categories, acclaimed psychometric properties, consideration of demographic diversity, and consistency with contemporary theories of intelligence (Ellingsen, 2016). In Phase I, data collection focuses on establishing an ecological framework through meetings, interviews, and questionnaires to gauge health histories, home environments, relationships with caregivers, cultural and linguistic backgrounds, and levels of Ambient Intelligence. Cognitive assessments are not performed during Phase I, reflecting Piaget's Sensorimotor stage, which is characterized by non-symbolic cognition. As well, previous research has noted difficulty in accurately assessing cognitive abilities in children younger than 3 years of age (Shepard et al., 1998). Cognitive assessments begin in Phase II to reflect Piaget's Preoperational stage and to capture the considerable growth of executive functions that occurs during the transition into elementary school. Phases III and IV reflect Piaget's Concrete and Formal Operational stages of development, respectively (Piaget and Inhelder, 1969). As well, Phase IV is timed to the expected onset of puberty. Because the study targets a prolonged developmental course, data collection occurs triannually, allowing a wider timeframe between assessments. Similarly, short breaks between cognitive tasks combat potential participant fatigue, and an equilibration period allows participants to familiarize themselves with the research staff and space (Ellingsen, 2016).

Limitations to Methodology

Naturally, longitudinal studies are complex, time- and resource-intensive approaches to research (Grammer et al., 2013). In practice, the study would require an extensive labour force, particularly to handle the social aspects of participant screening, communication, and relationship maintenance. Labour demands are compounded by the acquisition and interpretation of large-scale data sets, which require extensive knowledge of child and cognitive development, language development, and analytical techniques relevant to cognitive psychology research. Consequently, the study necessitates an interdisciplinary team of relatable personnel and professional researchers, including neurologists, cognitive psychologists, speech and language pathologists, and statisticians. Lastly, the equipment and instrumentation required throughout the 15-year timeframe add to the already considerable cost of the study.

It is important to note that the collected data depend largely on uncontrollable factors in a participant's behaviour and disposition during assessment, such as comfort, motivation, mood, and responsiveness. The variability of these factors is also contingent on context, since assessments are conducted at home and in lab playrooms simulating high and low Ambient Intelligence presence. Concerns also remain regarding the accuracy of population representation due to the exclusion criteria applied during participant screening. For instance, correlations observed during the study may not generalize to populations with disabilities, impairments, or health conditions. Despite strategies to improve representation, such as oversampling low socioeconomic and ethnically diverse groups (Grammer et al., 2013), the power of correlational inferences may be limited by the English-language requirement. That is, the study does not accommodate cognitive expression in other languages, such as French, Spanish, or sign languages.

The validity of correlational inferences is also questionable given how Ambient Intelligence levels are evaluated. Currently, our study relies on subjective, qualitative rubric ratings to define AmI exposure levels and usage. The experimental design may be strengthened by employing an objective, quantitative measure of AmI levels, such as a Machine Intelligence Quotient (Zarqa et al., 2014). Inferences may also be strengthened by incorporating a quantitative log of AmI usage, since the presence of AmI does not imply that participants actually use it. Lastly, cognitive assessments revolve around standardized, traditional tasks. It may therefore be beneficial to design cognitive tasks in which part of the workload can optionally be delegated to Ambient Intelligence technologies. These tasks would provide better insight into aspects of cognition that are facilitated or changed by Ambient Intelligence.

Development of Philosophical Frameworks

In "The Extended Mind" (1998), Clark and Chalmers present a model in which cognition is not restricted to the confines of the brain and skull, but is distributed throughout the body and environment of a cognitively capable agent. They reason that any affordance used interactively to influence an agent's cognition is a constituent part of a cognitive system formed by the coupling between the affordance and the agent. The thesis is supported by compelling thought experiments, most notably one in which a notebook replaces the memory of a man, Otto, who suffers from mild Alzheimer's disease. Otto's notebook is supposed to be with him nearly as often as the brain structures involved in memory are with a person whose memory is internal. However, much of the evidence in the paper focuses on a very limited set of circumstances.

An important feature of Clark and Chalmers's thesis is that external devices actively coupled with a person are considered analogous to a part of that person's brain. The current study investigates the possibility that AmI technology becomes integrated into a child's mind, such that when access to the technology is barred, the child's cognition is comparable to that of a person with atypical brain structure. By comparing the abilities of children exposed to AmI systems during critical periods of cognitive development with those of children who were not, given either full access or no access to AmI, we hope to shed light on claims of this nature.

It should be noted, however, that Clark and Chalmers hardly address the use of external affordances that assist cognitive functions on a more transient or less reliable basis. To refine models that locate particular examples of cognitive coupling on a multi-dimensional continuum, our study investigates how cases of consistent and transient AmI use compare. Heersmink's (2015) model of the extended mind uses measures along eight dimensions to determine how closely a person and an external "cognitive artifact" are coupled. Findings that children's cognition covaries continuously with the level of AmI in their homes would provide some evidence in support of elaborating models such as Heersmink's, and may offer a more descriptive account of the extended mind.

Support for a model centred on multiple, continuous features would allow for the extended mind theory to be applicable to a greater number of situations, while more accurately representing all such situations in relation to each other. This could address certain challenges to the original extended mind thesis, which often follow from its lack of discrimination in considering most systems to be actively coupled (Menary, 2010). Additionally, such a model could provide a practical framework for identifying the role that an external affordance plays in the life of an individual, hopefully providing empirical measures with which questions of well-being, autonomy, and the inclusion of technology in education can be addressed.

Ethical Considerations

It is to be expected that a person whose mind is closely coupled with an external device will have an intimate connection to that device on the order that one would expect to have with a part of their own mind. The fact that this relationship between the internal and external parts of mind is at least partly outside of the body and contingent on a device created (presumably) with no specific consideration for the person in question prompts some important ethical considerations. It seems that, in most cases, the user’s mind would be far more vulnerable, both to structural damage via decoupling and to external invasion or influence on thought patterns and subsequent behaviour, than any person whose mind is relatively well contained to at least their body.

The objection may be raised that the contained mind is vulnerable in the same ways through damage to brain structures by accidental or intentional injury, or through manipulation or subliminal messaging. However, the person is protected by law from many of these cases, and instances of physical separation of a part of the brain or body are treated with more severity in general and appear to happen less often than those of physical separation from an external device.

Well-being

As actively coupled AmI would be expected to exert a strong influence on cognitive processes, it is important to consider the well-being of its users. If a piece of technology can be a strongly and reliably coupled constituent of a person's cognitive system, several risks arise. First, if an object is coupled closely enough to a person to resemble part of their mind, that part of the mind becomes more vulnerable to removal: the object could be left behind in a moment of negligence, destroyed by environmental factors or intentional action, or stolen. Additionally, technology is inevitably prone to security breaches and malfunctions, which may threaten the coupling between the person and the object, and thus the cognitive system that comprises the user's mind. In cases where another person intentionally disrupts that coupling by damaging or stealing the object, it would be pertinent to reconsider such an action as an attack on the coupled person, rather than merely on their property.

Conversely, having parts of the mind coupled to external implements may allow more reliable access to the mind for diagnosing and ameliorating cognitive deficits. While an externalized component of the mind may be at risk of physical damage, repairing it would not require costly, invasive, and potentially impossible medical intervention. Furthermore, there are opportunities to upgrade the coupled technology, and thus increase the mind's capacity to carry out the associated cognitive functions.

Threats of corporate interests

Another concern for the cognitively coupled individual, especially one coupled to intelligent machines, is where their beliefs originate and whether they alone are responsible for maintaining them. Clark and Chalmers claim that active coupling of the type they envision requires a "high degree of trust, reliance, and accessibility" (1998) of the external component(s) in the coupling. Specifically addressing children's attitudes towards intelligent technology, Druga et al. (2017) found that children believe devices such as Google Home and Amazon Alexa to be friendly and trustworthy. Extending this example, if an AmI system has learned the behaviour patterns and preferences of a user who trusts it to accurately reflect their beliefs, the user may unquestioningly trust that the AmI's actions and contributions to their combined cognitive system represent their own beliefs.

Unquestioning faith in the beliefs embedded in a coupled cognitive system could imply a loss of autonomy for the user of the AmI system, or at least a need to redefine the boundaries of the self to incorporate the system. While possibly uncomfortable, such a redefinition is not necessarily negative. In fact, coupling with technology presents opportunities to recontextualize human behaviour, providing greater self-awareness and potentially even enabling more thoughtful approaches to ethical problems (Verbeek, 2009).

Such beneficial extension of the self, however, assumes that the technology to which a person is coupled serves only the interests of its user. Realistically, there is a strong possibility that users who feel their beliefs are accurately represented by their AmI technology will be vulnerable to having those beliefs shaped by the system, which could exploit their trust to serve external interests. Intentionally or not, developers could program the AmI to steer the user towards buying products from a particular company. Sensitive data may also be acquired by fostering a false sense of comfort, for example through requests for developer feedback that ask users to consent to sharing personal data.

According to the Canadian Charter of Rights and Freedoms, everyone has “the right to life, liberty and security of the person”, but data privacy is not an explicitly guaranteed right. Because they can collect extensive data from a wide population of users, AmI manufacturers hold significant power over those users. Data can be sold for profit or used to produce targeted advertisements. With regard to technology coupled to the user, data can give manufacturers a set of tools with which to influence user behaviour almost directly.

Our study intends to measure the opinions of participating children towards devices they interact with regularly, using methods similar to those of Druga & Williams (2017). If a correlation were found between the level of AmI in a child’s home environment and their level of trust in both their own devices and intelligent devices more generally, it would suggest an increased vulnerability to corporate interests among children exposed to high levels of AmI. Such findings would warrant re-evaluating laws intended to protect the minds of individuals, and possibly extending them to cover devices that partly constitute an individual’s mind.
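One way the proposed correlation could be computed is sketched below. It assumes, since the text does not specify the scales, that each child contributes an ordinal rating of their home's AmI level and a Likert-style trust rating; because both are ordinal, the sketch uses Spearman's rank correlation rather than Pearson's. The function names and the example ratings are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for position in range(start, end + 1):
            ranks[order[position]] = shared_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def spearman(ami_levels: Sequence[float], trust_scores: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(ami_levels) != len(trust_scores) or len(ami_levels) < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    return pearson(average_ranks(ami_levels), average_ranks(trust_scores))


# Hypothetical ratings for illustration only (one pair per child).
ami_levels = [1, 2, 2, 3, 4, 5]
trust_scores = [2, 2, 3, 4, 4, 5]
print(f"Spearman's rho = {spearman(ami_levels, trust_scores):.2f}")
```

A positive rho would mean that children from higher-AmI homes tend to report more trust; on its own, such a correlation would not show that AmI exposure causes the increased trust. In practice, `scipy.stats.spearmanr` computes the same coefficient together with a p-value.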

Conclusions

In this study, we investigated the effects of Ambient Intelligence on children's cognitive development. We identified contemporary experimental work that supports both negative and positive influences. However, without implementing our project, it remains difficult to take a stance on the proposed research question. We also discovered the complexities and frustrations of implementing a longitudinal research project to address philosophical and psychological questions, particularly in deriving appropriate and reliable methodologies to measure abstract concepts such as cognition. This difficulty is especially apparent when establishing controls to validate a longitudinal study that involves numerous uncontrollable factors related to human behaviour, and when producing interpretable data that can contribute to contemporary theories such as the Extended Mind Thesis. We have also realized the importance of hybrid approaches, such as combining longitudinal and experimental analyses, to produce credible claims. Throughout the COGS 200 project, we have developed a sincere appreciation of the dynamics of cognitive research: the requisite knowledge, the demand to stay informed about relevant contemporary methods and research, and the value of interdisciplinary work.

Future Directions

Currently, our longitudinal design is restricted to environmental and contextual factors as potential sources of change in trajectories of cognitive development. However, the design could benefit from supplementary experimental work that would permit causal claims. Moreover, the design could be extended to include neuropsychological and genetic data to address questions about the biological or neurological origins of cognitive changes. Combining these approaches may support a better understanding of the impacts of Ambient Intelligence on children’s cognitive development. Other directions should ideally address the study's limitations, for example by developing objective, quantitative measures of AmI levels and by tracking AmI use. Importantly, investigations should consider designing and implementing cognitive tests that, unlike classical tests, provide a comprehensive picture of a child's abilities when supported by AmI. Future projects could also explore contemporary AmI technologies that improve children's learning and play experiences, as well as cognitive testing processes. For instance, the CareLog system facilitates data collection and is designed to support analyses of children’s decision-making behaviour (Hayes et al., 2008). Another notable technology is the BEAN framework, which aims to monitor, evaluate, and enhance children’s skills and abilities by immersing them in a playful AmI environment (Alessandrini et al., 2017). The BEAN framework can also assist in detecting cognitive impairment in children (Zidianakis et al., 2015).

References

- Barbuscia, A., and Mills, M.C. Cognitive development in children up to age 11 years born after ART—a longitudinal cohort study. Hum Reprod 32, 1482–1488 (2017).

- Biocca, F. New media technology and youth: Trends in the evolution of new media. Journal of Adolescent Health, 27, 22-29 (2000).

- Brey, P. Freedom and privacy in Ambient Intelligence. Ethics and Information Technology, 7(3), 157–166 (2005). DOI: 10.1007/s10676-006-0005-3

- Clark, A., & Chalmers, D. The Extended Mind. Analysis 58(1), 7-19 (1998).

- Cook, D.J., et al. Ambient intelligence: Technologies, applications, and opportunities. Pervasive and Mobile Computing 5, 277-298 (2009).

- de Bruin, L., & Michael, J. Prediction error minimization: Implications for Embodied Cognition and the Extended Mind Hypothesis. Brain and Cognition 112, 58-63 (2017).

- Druga S., and Williams R. “Hey Google, is it OK if I eat you?”: Initial Explorations in Child-Agent Interaction. IDC Stanford (2017).

- Ellingsen, K.M. Standardized Assessment of Cognitive Development: Instruments and Issues. Clinical Child Psychology (2016). DOI: 10.1007/978-1-4939-6349-2_2

- Elliott, C.D. Differential Ability Scales-II (DAS-II). Pearson Ed. (2007).

- Fitzsimons, E. Millennium Cohort Study. Centre for Longitudinal Studies, UCL Institute of Education (2013).

- Grammer, J.K., Coffman, J.L., Ornstein, P.A., & Morrison, F.J. Change over Time: Conducting Longitudinal Studies of Children's Cognitive Development. J. Cogn Dev 14(4): 515-528 (2013)

- Griffin, J.A. Study of Early Child Care and Youth Development. NICHD (1991).

- Griffin P., Care E. The ATC21S Method. In: Griffin P., Care E. (eds) Assessment and Teaching of 21st Century Skills. Educational Assessment in an Information Age. Dordrecht: Springer (2015).

- Hayes, G.R., Gardere, L.M., Abowd, G.D., and Truong, K.N. CareLog: a selective archiving tool for behavior management in schools. In: Conference on Human Factors in Computing Systems (CHI 2008). DOI: 10.1145/1357054.1357164

- Heckman, J.J. Skill Formation and the Economics of Investing in Disadvantaged Children. Science 312, 1900–1902 (2006).

- Heersmink, R. Dimensions of Integration in Embedded and Extended Cognitive Systems. Phenomenology and the Cognitive Sciences 14(3), 577-598 (2015).

- Kable, J.W., Caulfield, M.K., Falcone, M., et al. No Effect of Commercial Cognitive Training on Brain Activity, Choice Behavior, or Cognitive Performance. J. Neurosci. 37, 7390-7402 (2017).

- Kaufman, A.S., and Kaufman, N.L. Kaufman Assessment Battery for Children, Second Edition Normative Update (KABC-II). Pearson Ed. (2004).

- Lalanne, C., Duracinsky, M., Douret, V.L., & Chassany, O. Psychometrics in R: Rasch Model and beyond. Dep Clin Res and Internal Med and Infectious Diseases (2015).

- Ma, W., Adesope, O.O., Nesbit, J.C., & Liu, Q. Intelligent tutoring systems and learning outcomes: A meta-analysis. Journal of Educational Psychology, 106, 901-918 (2014).

- Menary, R. (Ed.) The Extended Mind. Cambridge, MA: MIT Press (2010).

- Perry, M. Distributed cognition. Encyclopedia of the Mind.

- Piaget, J., and Inhelder, B. The psychology of the child. New York, NY: Basic Books (1969).

- Rahwan, I., Krasnoshtan, D., Shariff, A., & Bonnefon, J-F. Analytical reasoning task reveals limits of social learning in networks. J. R. Soc. Interface 11(93), 20131211 (2014). DOI: 10.1098/rsif.2013.1211

- Rosnow, R.L., and Rosenthal, R. The volunteer subject revisited. Aus. J. Psychology 28(2): 97-108 (1976). DOI: 10.1080/00049537608255268

- Shepard, L., Kagan, S.L., & Wurtz, E. Principles and recommendations for early childhood assessments. The National Education Goals Panel (1998).

- Sparrow, B., Liu, J., & Wegner, D.M. Google Effects on Memory: Cognitive Consequences of Having Information at Our Fingertips. Science 333(6043), 776-778 (2011). DOI: 10.1126/science.1207745

- Verbeek, P. Ambient Intelligence and Persuasive Technology: The Blurring Boundaries Between Human and Technology. NanoEthics 3(3), 231–242 (2009). DOI: 10.1007/s11569-009-0077-8

- West, G.L., et al. Impact of video games on plasticity of the hippocampus. Molecular Psychiatry (2017). DOI: 10.1038/mp.2017.155

- Zarqa, A.A., Ozkul, T., & Al-Ali, A.R. Comparative study of different methods for measurement of "smartness" of smart devices. Signal Processing and Computers (2014).

- Zidianakis, E., Ioannidi, D., Antona, M., & Stephanidis, C. Modeling and Assessing Young Children Abilities and Development in Ambient Intelligence. 17-33 (2015). DOI: 10.1007/978-3-319-26005-1_2

Appendix

Complete List of Cognitive Measures

Memory

Design Recall: A collection of 30 items drawing on block-building and drawing tests. To test retention of visual-spatial information, a visual is presented for only five seconds before removal. The child must then reproduce the item from memory. Scores are awarded based on the accuracy of the replication and are not penalized for decorative additions or unrelated scribbles.

Digit Recall: Sets of eight numeric sequences, with sequence lengths ranging from two to nine digits, are randomly generated; each successive set increases in complexity by sequence length. The start point is established by sampling a single sequence per set until a mistake is made, and the set preceding the mistake is used as the start point. The child must repeat a sequence of digits presented auditorily by a computer at a specified rate.
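The start-point rule can be sketched as a single loop over sets ordered by length. This sketch assumes each set holds eight sequences of one length, with one set per length from two to nine digits, and uses a hypothetical `repeats_correctly` callback to stand in for the administrator's judgement of the child's response. What happens when the child errs on the first set, or never errs, is not specified in the text; both cases are marked as assumptions in the comments.

```python
import random
from typing import Callable, List, Optional

DigitSequence = List[int]


def generate_sets(sequences_per_set: int = 8, min_len: int = 2, max_len: int = 9,
                  rng: Optional[random.Random] = None) -> List[List[DigitSequence]]:
    """Build one set of random digit sequences per length, shortest set first."""
    if rng is None:
        rng = random.Random()
    return [
        [[rng.randrange(10) for _ in range(length)] for _ in range(sequences_per_set)]
        for length in range(min_len, max_len + 1)
    ]


def find_start_set(sets: List[List[DigitSequence]],
                   repeats_correctly: Callable[[DigitSequence], bool]) -> int:
    """Sample one sequence per set until the child errs; return the index of
    the set preceding the mistake."""
    for index, sequence_set in enumerate(sets):
        if not repeats_correctly(sequence_set[0]):
            # Assumption: an error on the first set starts the test at set 0.
            return max(index - 1, 0)
    # Assumption: a child who never errs starts at the longest set.
    return len(sets) - 1


# Usage with a simulated child (hypothetical) who can repeat up to five digits.
sets = generate_sets(rng=random.Random(0))
start = find_start_set(sets, lambda seq: len(seq) <= 5)
print(start, len(sets[start][0]))  # prints: 3 5
```

Passing a seeded `random.Random` makes the generated sets reproducible, which would let the same sequences be administered across sessions if that were desired.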

Object Recall: A collection of 20 items, each consisting of 20 related objects. After viewing an item for a specified time, the child must verbally recall the objects on the card from memory. Points are awarded for correctly recalled objects, and scores are not penalized for misnamed objects. Object recall is conducted over three trials with exposure times of 60, 30, and 20 seconds, respectively. Following the tests, children are asked about their recall strategies, such as categorization, replacement, or mnemonics.

Picture Recognition: A collection of 20 items, each comprising a picture of one or more objects. Each item is shown for 5 to 10 seconds. The child is then shown a modified picture containing both original and distractor objects and must identify the original objects. A point is awarded for each correctly identified object, and scores are penalized for each incorrectly selected object.
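The scoring rule can be sketched as set arithmetic over the objects the child selects. The text does not state the size of the penalty, so this sketch assumes a hypothetical default of one point per distractor chosen, exposed as a `penalty` parameter; it also assumes objects can be identified by name.

```python
from typing import Iterable, List, Set, Tuple


def score_item(original_objects: Set[str], selected: Iterable[str],
               penalty: float = 1.0) -> float:
    """+1 per original object identified, minus `penalty` per distractor chosen."""
    chosen = set(selected)  # selecting the same object twice counts once
    correct = len(chosen & original_objects)
    incorrect = len(chosen - original_objects)
    return correct - penalty * incorrect


def total_score(items: List[Tuple[Set[str], Iterable[str]]], penalty: float = 1.0) -> float:
    """Sum the item scores across all administered items."""
    return sum(score_item(original, selected, penalty) for original, selected in items)


# Hypothetical item: three original objects; the child picks two of them plus a distractor.
print(score_item({"cup", "ball", "key"}, ["cup", "key", "shoe"]))  # prints: 1.0
```

Under this rule, selecting every object in the modified picture is not rewarded, because each distractor chosen cancels out a correct identification.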

Visual-Spatial Processing

Building Blocks: A collection of 15 items, of which a subset is presented visually. The child must replicate a two- or three-dimensional visual using Lego blocks. Visuals advance in complexity by introducing ambiguity, orientation, and sequences. Scores are based on the number of blocks used and on whether the replication is correct.

Copying: A collection of 20 items arranged from simple to complex geometric shapes. The child must reproduce each visual by drawing it; the visual remains available throughout the test. Scores are based on the accuracy and orientation of the drawings and are not penalized for decorative additions or unrelated scribbles.

Letter Matching: A collection of 30 items arranged by degree and complexity of letter deformation. The child must correctly identify each deformed letter, which assesses discrimination and spatial orientation of common visuals. A point is awarded for each correctly identified letter. Total scores are based on the cardinality of successes.

Pattern Construction: Items, drawn from a collection of 30, are presented as a model, a picture, or a demonstration. For models, the administrator builds a pattern and leaves the completed model in view of the child. For pictures, the child is given an image of the target pattern. For demonstrations, the administrator builds a pattern in front of the child and then scrambles it. Using foam objects with patterned faces, the child must construct the presented pattern. Patterns range from simple solid faces to striped faces. Scores are based on the speed and accuracy of construction.

Picture Similarity: A collection of 35 items, whereby each item consists of a set of pictures or designs. In a simple test, the child must select the card image that best corresponds with the stimulus set. In a complex test, the child must identify the relationship shared by elements of a stimulus set.

Quantitative Knowledge

Basic Number Skills: A workbook of 50 problems separated into five clusters of 10 problems each. To establish a start point, clusters are sampled by presenting three problems per cluster until the child fails a sample. The child then completes the problems in the cluster preceding the failed sample, which assesses their ability to solve computational problems centered on basic arithmetic reasoning and calculation. Total scores are based on cluster level and intra-cluster successes.
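The cluster-sampling start point and a possible total score can be sketched as follows. Several details are assumptions not stated in the text and are marked in the comments: any error within a sample counts as failing it, and the total gives full credit for every cluster below the start point plus the problems solved within the start cluster, excluding clusters beyond it. The `passes` callback is hypothetical and stands in for marking the child's answer.

```python
from typing import Callable, Sequence, TypeVar

Problem = TypeVar("Problem")


def find_start_cluster(clusters: Sequence[Sequence[Problem]], sample_size: int,
                       passes: Callable[[Problem], bool]) -> int:
    """Sample the first `sample_size` problems of each cluster, easiest first,
    and return the index of the cluster before the first failed sample."""
    for index, cluster in enumerate(clusters):
        sample = cluster[:sample_size]
        # Assumption: any error in the sample counts as failing it.
        if not all(passes(problem) for problem in sample):
            # Assumption: failing the first sample starts the child at cluster 0.
            return max(index - 1, 0)
    return len(clusters) - 1  # Assumption: no failed sample -> top cluster.


def total_score(clusters: Sequence[Sequence[Problem]], start: int,
                passes: Callable[[Problem], bool]) -> int:
    """Hypothetical scoring: full credit for clusters below the start point,
    plus problems solved within the start cluster; later clusters are excluded."""
    credited = sum(len(cluster) for cluster in clusters[:start])
    solved_in_start = sum(1 for problem in clusters[start] if passes(problem))
    return credited + solved_in_start


# Five clusters of ten problems, each labelled (cluster, position).
clusters = [[(c, p) for p in range(10)] for c in range(5)]


def child(problem):
    """Simulated child (hypothetical): solves clusters 0-1 and the first
    seven problems of cluster 2, and nothing beyond."""
    c, p = problem
    return c < 2 or (c == 2 and p < 7)


start = find_start_cluster(clusters, sample_size=3, passes=child)
print(start, total_score(clusters, start, child))  # prints: 2 27
```

The same two functions could be reused for the Spelling measure by passing ten clusters of seven words and `sample_size=2`.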

Early Number Concepts: A collection of 30 items that assess concepts and skills, including comparing, counting, matching, reciting, and recognizing. Points are awarded for each correct response, which can be verbal or non-verbal. Total scores are based on the cardinality of successes.

Number Progression: A collection of 40 items partitioned into simple and complex groupings. In the simple group, an incomplete sequence of items prompts the child to draw the missing item in the blank space. In the complex group, two pairs of numbers are related by an arithmetic rule, which the child must identify orally. Scores are awarded for correct responses.

Language Development and Lexical Knowledge

Naming Vocabulary: From a collection of 30 pictures of objects, a subset is presented for a child to identify and name. The response must be consistent with a reference of acceptable responses to score a point. Total scores are based on the cardinality of successes.

Similarity: From a collection of 40 three-word items, a subset is visually presented to a child. To earn a point, the child must understand the contents of each three-word set, and identify the relation within the set. Total scores are based on the cardinality of successes. In addition to language development, this test assesses logical reasoning and abstract thinking.

Spelling: A collection of 70 words separated into ten clusters of seven words each. The child must spell words dictated by the administrator. To establish a start point, clusters are sampled by presenting two words per cluster until the child fails a sample. Afterwards, the child must spell the words of the cluster preceding the failed sample. Scores are ranked based on cluster level and intra-cluster success, and exclude clusters beyond the established start point.

Verbal Comprehension: From a collection of 36 items, a subset of directives is presented to the child to assess syntax and relational concepts, ability to follow verbal directives, ability to test hypotheses, and short-term auditory memory. A correct, non-verbal execution of the directive earns a point. Total scores are based on the cardinality of successes.

Word Definition: From a set of 50 words organized by complexity, a subset is presented orally for a child to define. The child must provide the meaning of, or demonstrate the key concept of a word to score a point. Total scores are based on the cardinality of successes.

Word Reading: A collection of 100 words separated into ten clusters of ten words each. The child must successfully read words in sequence, advancing from cluster to cluster, until encountering seven failures within a cluster. Scores are ranked from 1-10 based on cluster level and intra-cluster success. Performances are recorded phonetically for further analyses.
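The discontinue rule can be sketched as nested loops over clusters and words. The text says scores are ranked from 1 to 10 by cluster level and intra-cluster success but not how the two combine, so this sketch simply returns both as a pair; scoring a child who completes all ten clusters at level 10 is an assumption. The `reads_correctly` callback is hypothetical and stands in for the administrator's judgement, and phonetic recording of responses is not modelled.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Word = TypeVar("Word")


def administer_word_reading(clusters: Sequence[Sequence[Word]],
                            reads_correctly: Callable[[Word], bool],
                            failure_limit: int = 7) -> Tuple[int, int]:
    """Return (1-based cluster level where testing stopped, words read
    correctly within that cluster)."""
    level, successes = 0, 0
    for level, cluster in enumerate(clusters, start=1):
        successes, failures = 0, 0
        for word in cluster:
            if reads_correctly(word):
                successes += 1
            else:
                failures += 1
                if failures >= failure_limit:
                    return level, successes  # discontinue within this cluster
    # Assumption: a child who finishes every cluster is scored at the top level.
    return level, successes


# Ten clusters of ten words, each labelled (cluster, position).
clusters = [[(c, w) for w in range(10)] for c in range(10)]


def child(word):
    """Simulated reader (hypothetical): reads clusters 0-1 and the first three
    words of cluster 2 correctly, and nothing beyond."""
    c, w = word
    return c < 2 or (c == 2 and w < 3)


print(administer_word_reading(clusters, child))  # prints: (3, 3)
```

With ten words per cluster, reaching seven failures leaves room for at most three successes in the cluster where testing stops, so the intra-cluster count ranges from 0 to 3 unless the child completes every cluster.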