Course:CPSC522/Stochastic Optimization

Stochastic Optimization

This page is about Stochastic Optimization. Optimization algorithms and machine learning methods in which some variables of the objective function are random are called Stochastic Optimization methods.[1] Methods that use randomness in their optimization iterations are also categorized as Stochastic Optimization.

Principal Author: Amin Aghaee

Collaborators:

Abstract

This page is about Stochastic Optimization and covers some of the best-known stochastic methods in machine learning. In general, "stochastic" is an adjective describing something that is randomly determined. Some stochastic optimizers have random variables in the objective function they want to optimize. These types of algorithms are useful in online learning. Unlike offline methods, online learning does not look at the whole dataset at once. Instead, it updates the model at each iteration after seeing a single data point. Such algorithms use random variables in their objective functions in order to minimize the total regret or loss.

There are other types of stochastic optimizers that use randomness in their optimization iterations. Sometimes the dataset is enormous or each sample has many features, and computing the gradient of the whole model becomes too expensive. In such cases, algorithms such as "Stochastic Gradient Descent" randomly sample a subset of samples or features at every step to reduce this cost. Stochastic optimization methods, whether they use random variables in their objective function or random iterations, can be viewed as generalizations of deterministic methods for deterministic problems.


Content

One of the most famous stochastic optimization methods is Stochastic Gradient Descent (SGD). SGD has proven effective on ordinary, relatively well-behaved datasets. However, in other situations, such as online learning or data with sparse, infrequently occurring features, methods such as AdaGrad or ADAM can outperform SGD. This section covers some of these methods.

Stochastic Gradient Descent

In optimization problems we consider minimizing an objective function such as $f(x) = \frac{1}{n}\sum_{i=1}^{n} f_i(x)$. In ordinary (deterministic) problems we apply the gradient method, computing $x^{t+1}$ from $x^t$ at every iteration:

$$x^{t+1} = x^t - \alpha_t \nabla f(x^t) = x^t - \frac{\alpha_t}{n}\sum_{i=1}^{n} \nabla f_i(x^t)$$

Here $x^t$ is our variable at iteration $t$. The iteration cost is linear in $n$, and the method converges with a constant step size $\alpha_t$ or with a line search. The Stochastic Gradient Method [Robbins & Monro, 1951] [2] instead randomly selects an index $i_t$ from $\{1, 2, \dots, n\}$ (or, in the mini-batch variant, a small subset of indices) and updates $x$ based on that selection only:

$$x^{t+1} = x^t - \alpha_t \nabla f_{i_t}(x^t)$$

This time the iteration cost is independent of $n$, and convergence requires $\alpha_t \to 0$ as $t \to \infty$. On enormous datasets, Stochastic Gradient Descent can converge faster than batch training for two reasons: it performs updates more frequently, and each gradient is much cheaper to compute. However, plain SGD does not adapt its learning rate. It uses the same step size $\alpha_t$ for every parameter, which may not be the best choice for sparse datasets or online optimizers.
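To make the cost difference concrete, here is a minimal Python sketch (standard library only). It compares the deterministic gradient method with SGD on a hypothetical one-dimensional least-squares problem with terms $f_i(x) = \frac{1}{2}(a_i x - y_i)^2$. The data generator, the function names, and the decaying step-size schedule $\alpha_t = 1/(t^{0.6} + 10)$ are illustrative assumptions. They are not part of the original method description.

```python
import random


def make_data(n, seed=0):
    """Hypothetical data: y_i = 3 * a_i + small Gaussian noise."""
    rng = random.Random(seed)
    a = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    y = [3.0 * ai + rng.gauss(0.0, 0.1) for ai in a]
    return a, y


def grad_i(x, ai, yi):
    """Gradient of f_i(x) = 0.5 * (a_i * x - y_i)**2 with respect to x."""
    return (ai * x - yi) * ai


def gradient_descent(a, y, alpha=0.5, iters=100):
    """Deterministic gradient method: every iteration touches all n terms."""
    x, n = 0.0, len(a)
    for _ in range(iters):
        full_grad = sum(grad_i(x, ai, yi) for ai, yi in zip(a, y)) / n
        x -= alpha * full_grad
    return x


def sgd(a, y, iters=20000, seed=1):
    """Stochastic gradient method: every iteration touches one random term."""
    rng = random.Random(seed)
    x, n = 0.0, len(a)
    for t in range(1, iters + 1):
        i = rng.randrange(n)               # sample i_t uniformly (0-based index)
        alpha_t = 1.0 / (t ** 0.6 + 10.0)  # decreasing step size, alpha_t -> 0
        x -= alpha_t * grad_i(x, a[i], y[i])
    return x


if __name__ == "__main__":
    a, y = make_data(1000)
    x_star = sum(ai * yi for ai, yi in zip(a, y)) / sum(ai * ai for ai in a)
    print("closed form:", x_star)
    print("gradient descent:", gradient_descent(a, y))
    print("SGD:", sgd(a, y))
```

Both estimates should land close to the closed-form least-squares solution. Gradient descent pays for $n$ gradient evaluations per iteration, whereas each SGD iteration evaluates the gradient of a single randomly chosen term. The assumed schedule satisfies the Robbins-Monro conditions $\sum_t \alpha_t = \infty$ and $\sum_t \alpha_t^2 < \infty$.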

Stochastic Average Gradient

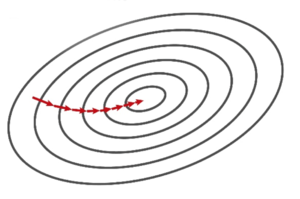

This method was proposed by Mark Schmidt and colleagues [3] for optimizing the sum of a finite number of smooth convex functions. For both Stochastic Average Gradient (SAG) and the Stochastic Gradient method, the per-iteration cost is independent of the number of terms in the sum. However, SAG has a faster convergence rate (linear rather than sublinear when the sum is strongly convex) because it memorizes the most recent gradient of every previously selected term. Think of optimization as a ball moving downhill. SAG does not move only along the gradient of the term it just sampled. It moves along the average of the most recent gradients it has stored for all terms. The pseudocode for this algorithm [4] is:

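- Set $d = 0$ and gradient approximation $y_i = 0$ for $i = 1, 2, \dots, n$

- while (True):

  - sample $i$ from $\{1, 2, \dots, n\}$

  - compute $\nabla f_i(x)$

  - $d \leftarrow d - y_i + \nabla f_i(x)$

  - $y_i \leftarrow \nabla f_i(x)$

  - $x \leftarrow x - \frac{\alpha}{n} d$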
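The SAG loop above can be sketched in Python as follows. This is a minimal illustration that assumes hypothetical one-dimensional least-squares terms $f_i(x) = \frac{1}{2}(a_i x - b_i)^2$. It also assumes a conservative constant step size $\alpha = 1/(16L)$, where $L = \max_i a_i^2$ bounds the curvature of each term. The targets are named `b` so that the list `memory` can play the role of the stored gradients $y_i$. All names and data are illustrative only.

```python
import random


def sag(a, b, iters=100000, seed=0):
    """Stochastic Average Gradient for f(x) = (1/n) * sum_i 0.5 * (a_i * x - b_i)**2."""
    rng = random.Random(seed)
    n = len(a)
    L = max(ai * ai for ai in a)  # Lipschitz constant of every f_i'
    alpha = 1.0 / (16.0 * L)      # conservative constant step size
    x = 0.0
    d = 0.0                       # running sum of the stored gradients
    memory = [0.0] * n            # memory[i] plays the role of y_i
    for _ in range(iters):
        i = rng.randrange(n)              # sample i (0-based)
        g = (a[i] * x - b[i]) * a[i]      # compute f_i'(x)
        d += g - memory[i]                # d = d - y_i + f_i'(x)
        memory[i] = g                     # y_i = f_i'(x)
        x -= alpha / n * d                # x = x - (alpha / n) * d
    return x


if __name__ == "__main__":
    rng = random.Random(42)
    a = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    b = [3.0 * ai + rng.gauss(0.0, 0.1) for ai in a]
    x_star = sum(ai * bi for ai, bi in zip(a, b)) / sum(ai * ai for ai in a)
    print("closed form:", x_star, "SAG:", sag(a, b))
```

Each iteration still computes only one new gradient, just like SGD. The extra cost is the memory of $n$ stored gradients, which is the price SAG pays for its faster convergence with a constant step size.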

Online learning and Stochastic optimization

In typical applications of machine learning, we have the whole dataset together with an objective function, and we want to find the best solution for that function. Such methods are called offline methods. However, if the data arrive as a stream, or if the dataset is too big to fit in memory, we need another category of algorithms, called online methods. These methods update their estimate every time they see a new data point.
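As a small illustration of the online setting, the sketch below keeps a running estimate of the mean of a data stream. It updates the estimate after every new point and never stores the stream. The function name is hypothetical. The update $\hat{\mu}_k = \hat{\mu}_{k-1} + (z_k - \hat{\mu}_{k-1})/k$ is the standard incremental mean.

```python
def running_mean(stream):
    """Online mean estimate: O(1) memory, one update per incoming data point."""
    estimate = 0.0
    for k, z in enumerate(stream, start=1):
        estimate += (z - estimate) / k  # move 1/k of the way toward the new point
    return estimate


if __name__ == "__main__":
    # A generator stands in for a stream that is never held in memory at once.
    print(running_mean(float(i) for i in range(1, 1_000_001)))
```

The same update is a stochastic gradient step with step size $1/k$ on the loss $\frac{1}{2}(\theta - z)^2$. This is one simple way online estimation connects to the stochastic methods on this page.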

Regret Minimization

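In offline methods, we usually refer to our objective as a loss function. In online methods, however, the objective is the regret. The regret is the average loss incurred relative to the best we could have achieved in hindsight using a single fixed parameter value [5]:

$$\text{regret}_k = \frac{1}{k}\sum_{t=1}^{k} f(\theta_t, z_t) - \min_{\theta^* \in \Theta} \frac{1}{k}\sum_{t=1}^{k} f(\theta^*, z_t)$$

Here $k$ is the number of samples seen so far, $z_t$ is the sample presented at step $t$, and $\theta_t$ is the parameter estimate the learner must respond with. One of the simplest algorithms in online learning is Online Gradient Descent http://www.cs.cmu.edu/~maz/publications/techconvex.pdf which, at each step, updates the parameters using:

$$\theta_{k+1} = \operatorname{proj}_{\Theta}\left(\theta_k - \eta_k g_k\right)$$

In this update, $g_k = \nabla f(\theta_k, z_k)$ and $\eta_k$ is the step size. The operator $\operatorname{proj}_{\Theta}(v) = \arg\min_{w \in \Theta} \|w - v\|_2$ projects onto the parameter space $\Theta$. Now suppose that, rather than minimizing regret with respect to the past, we want to minimize the expected loss on future data. Formally, we want to minimize $f(\theta) = \mathbb{E}[f(\theta, z)]$. This is the main difference between regret-based online methods and stochastic optimization methods, especially when we have an effectively infinite stream of data.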
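The following Python sketch runs Online Gradient Descent on a hypothetical stream and evaluates the average regret. It assumes a one-dimensional parameter space $\Theta = [-1, 1]$, the squared loss $f(\theta, z) = \frac{1}{2}(\theta - z)^2$, Gaussian samples, and the step size $\eta_k = 1/\sqrt{k}$. All of these choices are illustrative. For this loss, the best fixed parameter in hindsight is the sample mean clipped to $\Theta$, which makes the regret easy to compute.

```python
import math
import random

RADIUS = 1.0  # hypothetical feasible set Theta = [-RADIUS, RADIUS]


def project(v, radius=RADIUS):
    """proj_Theta: Euclidean projection of v onto the interval [-radius, radius]."""
    return max(-radius, min(radius, v))


def loss(theta, z):
    """Hypothetical per-sample loss f(theta, z) = 0.5 * (theta - z)**2."""
    return 0.5 * (theta - z) ** 2


def online_gradient_descent(stream):
    """Play theta_k, observe z_k, then take a projected gradient step."""
    theta = 0.0
    thetas, zs = [], []
    for k, z in enumerate(stream, start=1):
        thetas.append(theta)              # estimate committed before seeing z_k
        zs.append(z)                      # kept only so regret can be evaluated
        g = theta - z                     # g_k = gradient of f(theta_k, z_k)
        eta = 1.0 / math.sqrt(k)          # step size eta_k = 1 / sqrt(k)
        theta = project(theta - eta * g)  # theta_{k+1} = proj(theta_k - eta_k * g_k)
    return thetas, zs


def average_regret(thetas, zs):
    """Learner's average loss minus that of the best fixed theta* in hindsight."""
    k = len(zs)
    learner = sum(loss(th, z) for th, z in zip(thetas, zs)) / k
    best = project(sum(zs) / k)  # clipped mean minimizes this average loss over Theta
    comparator = sum(loss(best, z) for z in zs) / k
    return learner - comparator


if __name__ == "__main__":
    rng = random.Random(0)
    for k in (100, 10_000):
        thetas, zs = online_gradient_descent(rng.gauss(0.5, 1.0) for _ in range(k))
        print(k, average_regret(thetas, zs))
```

Because these samples are i.i.d., the same update can also be read as a stochastic gradient step on the expected loss $f(\theta) = \mathbb{E}[f(\theta, z)]$. The two views differ in what they measure, not in the update itself. One measures regret against the best fixed $\theta^*$ in hindsight; the other measures expected future loss.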

AdaGrad

AdaGrad, or the Adaptive Gradient algorithm [6], is another stochastic optimization method; it uses a separate learning rate for each parameter. It targets sparse datasets by exploiting the fact that infrequently occurring features are highly informative, and it therefore gives those rare features larger learning rates. Each parameter's step size is divided by the accumulated magnitude of its own past gradients. As a result, the learning rates of frequently occurring features shrink quickly, whereas rare (sparse) features keep relatively large learning rates. This is the pseudocode for the diagonal version of this algorithm:

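- INPUT: step size $\eta > 0$, small constant $\delta > 0$

- INITIALIZE: $x_1 = 0$, $g_{1:0} = [\,]$

- FOR $t = 1$ to $T$ do:

  - Predict $x_t$, observe loss $f_t(x_t)$

  - Let $g_t = \nabla f_t(x_t)$

  - Update the gradient history $g_{1:t} = [g_{1:t-1}, g_t]$ and set $s_{t,i} = \|g_{1:t,i}\|_2$ for $i = 1, \dots, d$

  - Set $H_t = \delta I + \operatorname{diag}(s_t)$

  - Update $x_{t+1} = x_t - \eta H_t^{-1} g_t$ (unconstrained, unregularized case)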
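A minimal Python sketch of the diagonal AdaGrad update is shown next. It assumes unconstrained parameters (so there is no projection step) and a user-supplied gradient function `grad_fn`, which is a hypothetical name. It stores only a running sum of squared gradients per coordinate. That is enough because the per-coordinate norm of the gradient history, $\|g_{1:t,i}\|_2 = \sqrt{\sum_{k \le t} g_{k,i}^2}$, can be computed from that sum without keeping the whole history. The badly scaled quadratic used to exercise it is an illustrative assumption.

```python
import math


def adagrad(grad_fn, x0, eta=0.5, delta=1e-8, steps=1000):
    """Diagonal AdaGrad: x_{t+1,i} = x_{t,i} - eta * g_{t,i} / (delta + s_{t,i})."""
    x = list(x0)
    sq_sum = [0.0] * len(x)             # sum of squared gradients per coordinate
    for _ in range(steps):
        g = grad_fn(x)                  # g_t: gradient of the loss at x_t
        for i, gi in enumerate(g):
            sq_sum[i] += gi * gi
            s_i = math.sqrt(sq_sum[i])  # s_{t,i} = ||g_{1:t,i}||_2
            x[i] -= eta * gi / (delta + s_i)
    return x


def quadratic_grad(x, c=(10.0, 0.1), b=(1.0, -2.0)):
    """Gradient of a hypothetical quadratic 0.5 * sum_i c_i * (x_i - b_i)**2."""
    return [ci * (xi - bi) for ci, xi, bi in zip(c, x, b)]


if __name__ == "__main__":
    print(adagrad(quadratic_grad, [0.0, 0.0]))
```

The two coordinates have curvatures that differ by a factor of 100, yet the per-coordinate scaling brings both close to the minimizer `b`. Each step divides $g_{t,i}$ by the norm of that coordinate's own gradient history. So rescaling one coordinate's gradients by a constant leaves its updates essentially unchanged, and exactly unchanged when $\delta = 0$.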

ADAM

AdaGrad converges well on many applications and datasets compared with other gradient methods, but it has a few problems. In particular, it accumulates squared gradients in its denominator. Because this sum grows at every step, the effective learning rate keeps shrinking and can eventually become too small for learning to continue. ADAM [7], or Adaptive Moment Estimation, instead estimates the mean (first moment) and the uncentered variance (second moment) of the gradients. It keeps exponentially decaying averages of past gradients ($m_t$) and of past squared gradients ($v_t$), so old gradients are gradually forgotten. Here's the pseudocode for ADAM:

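- INPUT: step size $\alpha$, decay rates $\beta_1, \beta_2 \in [0, 1)$, small constant $\epsilon > 0$

- INITIALIZE: $x_1$, first moment $m_0 = 0$ and second moment $v_0 = 0$

- FOR $t = 1$ to $T$ do:

  - Predict $x_t$, observe loss $f_t(x_t)$

  - Let $g_t = \nabla f_t(x_t)$

  - $m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$

  - $v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2$ (element-wise square)

  - $\hat{m}_t = m_t / (1 - \beta_1^t)$, $\hat{v}_t = v_t / (1 - \beta_2^t)$

  - $x_{t+1} = x_t - \alpha\, \hat{m}_t / (\sqrt{\hat{v}_t} + \epsilon)$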
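The ADAM loop above can be sketched in Python as follows. This is a minimal, unconstrained illustration with a user-supplied gradient function `grad_fn`, which is a hypothetical name. The decay rates $\beta_1 = 0.9$, $\beta_2 = 0.999$ and $\epsilon = 10^{-8}$ follow the defaults suggested by Kingma and Ba. The step size $\alpha = 0.01$ and the test quadratic are illustrative assumptions. The bias-correction lines are needed because $m_0 = v_0 = 0$; without them, the early moment estimates would be biased toward zero.

```python
import math


def adam(grad_fn, x0, alpha=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=5000):
    """ADAM with bias-corrected first and second moment estimates."""
    x = list(x0)
    m = [0.0] * len(x)  # first moment: decaying average of gradients
    v = [0.0] * len(x)  # second moment: decaying average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1.0 - beta1) * gi
            v[i] = beta2 * v[i] + (1.0 - beta2) * gi * gi
            m_hat = m[i] / (1.0 - beta1 ** t)  # bias-corrected first moment
            v_hat = v[i] / (1.0 - beta2 ** t)  # bias-corrected second moment
            x[i] -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return x


def quadratic_grad(x, c=(10.0, 0.1), b=(1.0, -2.0)):
    """Gradient of a hypothetical quadratic 0.5 * sum_i c_i * (x_i - b_i)**2."""
    return [ci * (xi - bi) for ci, xi, bi in zip(c, x, b)]


if __name__ == "__main__":
    print(adam(quadratic_grad, [0.0, 0.0]))
```

At $t = 1$ the bias-corrected ratio $\hat{m}_1 / \sqrt{\hat{v}_1}$ is approximately $\operatorname{sign}(g_1)$, so the first step has magnitude close to $\alpha$ in every coordinate regardless of gradient scale. Unlike AdaGrad's accumulator, $v_t$ forgets old gradients, so the effective step size does not shrink monotonically.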

Annotated Bibliography

Kevin P Murphy, Machine learning: a probabilistic perspective [8]

Stochastic Gradient Descent [9]

Mark Schmidt, Stochastic Average Gradient [10]

John Duchi et al., Adaptive Subgradient Methods for Online Learning and Stochastic Optimization [11]

Diederik P. Kingma, Jimmy Ba, Adam: A Method for Stochastic Optimization [12]

Stochastic Gradient Descent [13]

Mark Schmidt, CPSC 540 Course Slides [14]

Martin Zinkevich, Online Gradient Descent [15]
