Course:CPSC522/MCMC

Markov Chain Monte Carlo (MCMC)

MCMC is a class of Markov chain-based algorithms used to generate samples for use in Monte Carlo integration.

Principal Author: Ricky Chen

Collaborators: Jiahong Chen, Yan Zhao

Abstract

Monte Carlo Integration is briefly reviewed, and Markov Chain Monte Carlo is introduced as a method for solving Monte Carlo Integration when the samples are in high dimensions. We briefly review Monte Carlo Integration. Then we describe the theoretical justifications behind MCMC algorithms, and outline the generic Metropolis-Hastings algorithm and the commonly used Gibbs sampler.

Background

Knowledge of probability distributions, expectations, and basic properties of Markov chains are assumed.

Monte Carlo Integration

The goal of Monte Carlo integration is to approximate where is a possibly-multidimensional random variable from some target distribution . Due to the definition of expectation, this means we can approximate any integral of the form

Where satisfies the properties of a density function.

Example

Suppose we want to find the area within the unit circle and denote this area by . This can be written as an integral over the space .

Where is the indicator function

First we must transform this integral to the form that Monte Carlo integration can solve by introducing a random variable that is uniform over the space . Notice that the area of is

Thus the uniform variable over has density function . We can now write

Where we define .

The simplest Monte Carlo simulation involves sampling times from the distribution of , computing the value of for each sample, and then averaging to approximate .

This Monte Carlo simulation written as a Matlab function:

function [estimate] = simPi(N)

f = @(x,y) x^2 + y^2 <= 1;

sum = 0;

for i=1:N

x = rand*2-1;

y = rand*2-1;

sum = sum + f(x,y);

end

estimate = 4*sum/N;

With a high enough , we get a decent estimate of (the constant 3.1415...).

Monte Carlo Integration Based on Markov Chains

The example above only samples from uniform distributions. However, when the distribution of interest is hard to sample from using basic Monte Carlo methods, MCMC is useful if we don't know how to explicitly sample from the target distribution . At its core, MCMC methods make use of the following property of Markov chains.

- Law of Large Numbers for Markov Chains

- Let be a Markov chain in .

- Suppose is homogeneous, irreducible, and the stationary distribution exists, then for any function ,

- Where is the stationary distribution of the Markov chain.

Thus we can perform Monte Carlo integration by designing a Markov chain such that the stationary distribution exists and is the target distribution , then we can simulate the Markov chain to obtain "samples". The law of large numbers assures us that the estimator is consistent, ie reaches the exact value as goes to infinity.

Metropolis-Hastings (MH) Algorithm

We begin by describing the most general algorithm, which does not make any assumptions for , other than that we can evaluate it pointwise. The Metropolis-Hastings algorithm often acts as a base for numerous other MCMC methods.

MH inputs:

- 1. A target distribution that we can evaluate pointwise.

- 2. A proposal distribution/transition from which we can simulate and evaluate pointwise.

MH algorithm:

- 1. Initialize arbitrarily and set

- 2. Loop (until enough samples are generated):

- (a) Propose a new state,

- (b) Compute

- (c) Accept as the new state with probability ; otherwise, accept as the new state.

- (d)

- 3. Return

The MH algorithm traverses the space of where the step direction is proposed by but the decision of whether to take the step is decided based on the acceptance ratio . The acceptance ratio increases if the proposed state is in a region with high density, and decreases if the proposed state is in a region of low density based. This makes sense intuitively since we would want more samples from a high density region.

Proof of Correctness

To prove that the MH algorithm does indeed simulate a Markov chain with stationary distribution , we prove a stronger condition, the detailed balance equation which says for any ,

Where is the probability of transitioning from to . The detailed balance equation implies that is the stationary distribution. To see this, simply take the sum over on both sides.

Detailed balance is trivially true for . First see that for , the transition function is the probability of sampling at step 2(a) times the probability of acceptance

The proof of detailed balance for the MH algorithm is very simple:

Thus applying law of large numbers, we've proven the correctness of the MH algorithm. Similar proofs are constructed for other MCMC algorithms.

Notes

- The density always appears in a ratio, so we may use the MH algorithm even if we only know the density up to a normalizing constant , where is the normalization constant.

- The acceptance step can be implemented by sampling from a Bernoulli distribution with probability , or by sampling from a uniform distribution on (0,1) and checking if

- From a practical perspective, it may be preferable to compute the acceptance ratio in log-space.

Example

Suppose we have a posterior distribution of the form

With the distributions given by and This corresponds to having a binomial distributed likelihood and a non-conjugate prior, resulting in a posterior density that cannot be normalized or sampled from easily. Instead, we can use Metropolis-Hastings to sample from this target distribution. We will use a Normal proposal distribution centered around the current sample, meaning our proposals will be random walks, with length depending on , the standard deviation.

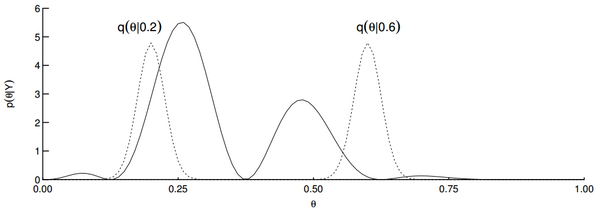

Below is a plot of the posterior distribution and two examples of the proposal distribution if the current samples are 0.2 and 0.6. ()

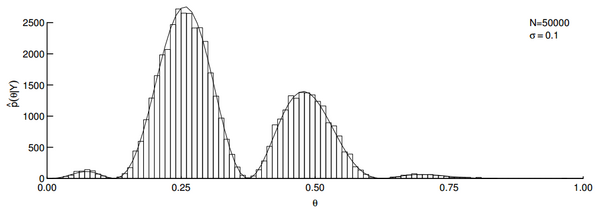

Using the Metropolis-Hastings algorithm with 50,000 samples and , we get the following histogram of samples.

As we can see, the histogram closely matches the target distribution.

Tuning the Proposal Distribution

The tuning problem refers to how the choice of proposal distribution can change the outcome of the MCMC algorithm. The simplest proposal distribution is a random walk in any direction. Imagine a Gaussian distribution centered around the current point with a fixed covariance matrix. Then if the proposal distribution has a high variance, the Markov chain is more likely to jump around. If the proposal distribution has low variance, then the Markov chain may not move very much in the same number of iterations. On the other hand, if the variance is so high that it frequently proposes a point outside the current mode, then the rejection rate shoots up. This behavior is shown in the figure on the right, with sigma being the standard deviation of a Gaussian proposal distribution.

The proposal distribution should in some sense mimic the true distributions, so a slightly more clever proposal may be an approximation of the target . Another property a proposal might have is that it may only update one variable at a time. For all these different choices we get a different transition operator, and any of these transition operators are valid in a MH algorithm.

In fact, we can mix and match transition operators and still have a valid MH algorithm, as long as the concatenation of the transition operators is still irreducible. This leads to what is known as the Gibbs sampler.

Gibbs Sampler

The Gibbs sampler is a special case of the MH algorithm where the transition proposals only change one variable at a time. Suppose the target distribution has dimensions and we knew the conditional probabilities

Then we can alternate between the set of conditionals as the transition distribution. While each does not yield an irreducible Markov chain, the algorithm is valid as long as the concatenation is irreducible.

Gibbs algorithm:

- 1. Initialize arbitrarily,

- 2. Loop (until enough samples are generated):

- (a)

- (b) Sample uniformly from

- (c) Sample

- (d)

- 3. Return

The Gibbs sampler can be more convenient than the basic MH algorithm, due to the avoidance of an accept-reject step. The acceptance ratio can be shown to always be 1. A common variant of the Gibbs sampler proposed here is to deterministically go through each dimension instead of sampling in step 2(b).

Of course, the main caveat of the Gibbs sampler is that knowledge of all the full conditional densities are required to be known, so this is a stronger condition than the basic MH algorithm.

Practical Concerns

Because MCMC algorithms all traverse the space using a Markov chain, the samples obtained are said to be correlated. While the law of large numbers does not assume any sort of low correlation or independence between samples, it is a theorem that only holds true as the number of samples goes to infinity. Since infinity is a concept that cannot be realized in any real application, some common hacks are used to improve the performance of MCMC algorithms.

Thinning

In order to break the dependence between samples, some have suggested to sample only once every transitions. This hack is known as thinning. Thinning is believed to help get more independent samples and also helps save memory as only a fraction of the samples are now stored. However, from a theoretic point of view, this hack is completely unnecessary as long as the chain is irreducible.

Burn-in

Since MCMC algorithms often randomly choose a point to start at, in high dimensions it is very likely this random initialization starts off at an area of low density. Then due to the Markov chain behavior, it may take some time before the MCMC algorithm reaches an area of high density. Oftentimes, the first few iterations are ignored (no samples are taken). This allows the Markov chain to traverse to an area of high density first before taking samples. This is known as having a burn-in interval.

Applications

MCMC can be applied in any context where the density of interest is too complex for simple sampling methods. For example, the Gibbs sampler is widely used in sampling from directed graphical models and the Ising model.

Also, MCMC are often used for calculating numerical approximations of multi-dimensional integrals, for example, Bayesian statistics, computational physics, computational biology and computational linguistics.

Annotated Bibliography

- Robert, Christian, and George Casella. Monte Carlo statistical methods. Springer Science & Business Media, 2013.

- Iain Murray. Monte Carlo Inference Methods. NIPS Tutorial 2015.

- Michael Eichler. Stat24600. University of Chicago.

|

|

![{\displaystyle E[f(X)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/4b2cfe8a8b5f325f50469725481406a167df28fb)

![{\displaystyle E[f(X)]=\int f(x)\pi (x)dx}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/bed9389db35c2750845aa5b015cc0b6190ffa6d5)

![{\displaystyle \Omega =[-1,1]\times [-1,1]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/4f47cf9397840efe7bcacd61371acd2ee41df0f8)

![{\displaystyle E[f(X,Y)]=\int _{\Omega }f(x,y)\pi (x,y)d(x,y)=(1/4)\int _{\Omega }f(x,y)d(x,y)=K/4}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/dbe6c1a68b2d16f0441a0a4d30b66a40d78ac6f9)

![{\displaystyle E[f(X,Y)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/d2f5e32452de5a0c2f6f61fbb4cbcb800c2005a8)

![{\displaystyle {\frac {1}{N}}\sum _{i=1}^{N}f(X_{i},Y_{i})\approx E[f(X,Y)]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/2e2b43fb58e5f61264e680faec53bc132df79356)

![{\displaystyle {\frac {1}{N}}\sum _{i=1}^{N}f(X_{n})\rightarrow E[f(X_{\infty })]}](https://wiki.ubc.ca/api/rest_v1/media/math/render/svg/59e5a3a980c85f359178981b1cb7a615ddcc5505)