Course:CPSC522/Latent Dirichlet Allocation

Primary Author: Prithu Banerjee

Latent Dirichlet Allocation

Author: Prithu Banerjee

Paper 1: Blei, Ng, and Jordan, M, LDA: Latent Dirichlet Allocation, JAIR 3 (2003). [4]

Paper 2:Blei, David, and John Lafferty. "Correlated topic models." Advances in neural information processing systems 18 (2006): 147. [5]

Abstract

In this page, I will talk about Latent Dirichlet Allocation in the context of topic modelling. It is one of the most highly used methods to perform the task of topic modelling. I will first present the seminal work of Blei, Ng, and Jordan. Their paper laid the foundation but at the same time introduces few assumptions that were later relaxed. I will highlight few of such assumptions and how relaxing those led to future extensions. Correlated Topic Model in one such extension that I will be describing in details. I will highlight the incremental achievement made by CTM compared to LDA.

Motivation

Suppose you have the following three documents in your corpus:

- Document 1: I like to eat broccoli and bananas. I ate a banana and spinach smoothie for breakfast.

- Document 2: Chinchillas and kittens are cute. My sister adopted a kitten yesterday.

- Document 3: Look at this cute hamster munching on a piece of broccoli.

Your task is to identify the topics each of the sentence is talking about. Latent Dirichlet Allocation (LDA) is one of the most suited tool to perform this task. More specifically, LDA is a probabilistic generative model that allows a collection of discrete observations, such as sentences, to be explained by unobserved (latent) groups, as in topics of the sentences. In this page I will first introduce the formal concepts of Latent Dirichlet Allocation and how this is used to unearth hidden latent variables. Then I will show the statistical assumptions made in LDA, how they can be relaxed and one such relaxation leads to the second paper which is more realistic than LDA.

Note that although throughout the page I will mostly be using text corpora to illustrate working of LDA, it can be applied to any other form of data such as images, where the concerned task could be to annotate the images with short descriptions.

This low dimensional representation of observation in terms of hidden variables, is very useful for multitudes of higher order tasks such as:

- Text summarization: The entire text of a document can be summarized based on the topics the document they talk about

- Document clustering/classifications: Documents exhibiting similar topic structures, can be clustered together which in turn can be used to recommend documents to a user knowing the topics user is interested about.

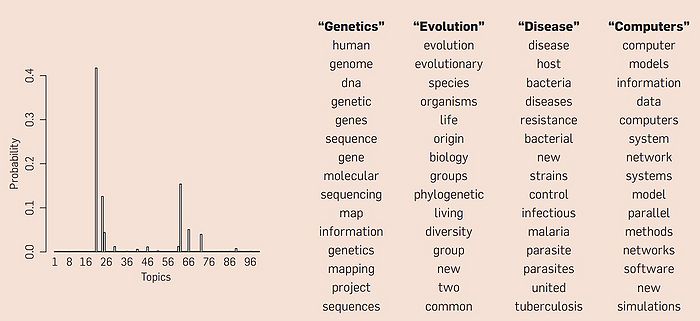

On a side note, as with any unsupervised methods, LDA does not label the classes explicitly. It is left upto the application to understand the class labels. That means, going with our analogy of text documents, LDA will not call a food topic cluster as food. But by looking at high probability words in that topic, one can guess that what the topic might be. To illustrate, in food topic words like broccoli will have high probabilities.

Background Knowledge

In this section, I will briefly present some background required to understand how LDA works.

Generative Models

As stated earlier, LDA is a generative probabilistic model. The three basic steps followed by a generative model are as follows:

% Learning

1 Treat data as observations that arise from a generative probabilistic process that includes hidden variables % document generation process

*For documents, the hidden variables reflect the thematic structure of the collection.

% Updating the variables according to the observations

2 Infer the hidden structure using posterior inference.

*What are the topics that describe this collection?

% Inference using learnt model

3 Situate new data into the estimated model.

*How does this query or new document fit into the estimated topic structure?

Mixed Membership Model

A key contribution that LDA makes as compared to previous generative model [1] is that it assumes a mixed membership model for the documents that are spit out by model. Which means that a document can be constituted by multiple topics, whereas in typical mixture models all words of a document are sampled from a single topic. To illustrate let's assume the same three documents we have seen before. Given these sentences and asked for 2 topics, LDA might produce something like:

- Document 1: 100% Topic A

- Document 2: 100% Topic B

- Document 3: 60% Topic A, 40% Topic B

Topic A: 30% broccoli, 15% bananas, 10% breakfast, 10% munching, … (at which point, you could interpret topic A to be about food) Topic B: 20% chinchillas, 20% kittens, 20% cute, 15% hamster, … (at which point, you could interpret topic B to be about cute animals)

As can be seen, the third document is a mixture of different topics. Thus, a mixed membership model is a more appropriate approach to model such scenarios.

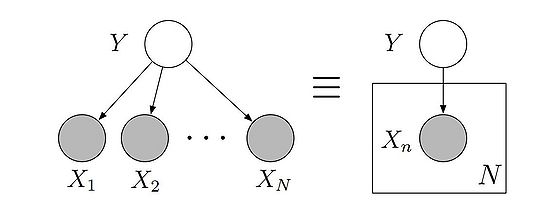

Graphical Model and Plate Notation

In the graphical model, random variables are represented as nodes. Edges between the nodes denote possible dependence. Observed variables are shaded. To illustrate in the figure, the nodes are observed nodes, whereas node is the latent variable and the observed nodes are assumed to be dependent on this node.

Plates are used to denote replicated structure. Thus the plate in the figure denotes all variables where

Structure of the graph defines the pattern of conditional dependence between the ensemble of random variables. For e.g. the graph in the example figure corresponds to

Dirichlet Distribution

Dirichlet distribution , is a family of continuous multivariate probability distributions parameterized by a vector of positive reals. The length of the vector denotes the dimension of the Dirichlet. Dirichlet distributions are very often used as prior distributions in Bayesian statistics, and in fact the Dirichlet distribution is the conjugate prior of the categorical distribution and multinomial distribution. LDA uses dirichlet to put a prior on the topic distribution. Though not essential to follow rest of the content, but here are few properties of Dirichlet worth knowing:

- Dirichlet distribution is an exponential family distribution over the simplex of positive vectors that sum to one.

- Density of dirichlet is given by: .

- This means that when each dimensions of are having similar values, then dirichlet is less peaky, where it becomes more peaky towards higher values of .

- When values of are less than then dirichlet encourages parse distribution.

What is LDA?

Notations

We will be using text data to present the working of LDA. However as already highlighted LDA is not limited to texts, and can be used for other sorts of data such as image also. We assume that:

- A word is the basic unit of discrete data, defined to be an item from a vocabulary indexed by . We represent words using unit-basis vectors that have a single component equal to one and all other components equal to zero. Thus, using superscripts to denote components, the th word in the vocabulary is represented by a -vector such that and for .

- A document is a sequence of words denoted by , where is the th word in the sequence.

- A corpus is a collection of documents denoted by .

Our aim is to find a probabilistic model of a corpus that not only assigns high probability to members of the corpus, but also assigns a high probability to other “similar” documents.

The generative model of LDA

Towards that LDA assumes a generative model for a given corpus. The basic idea is that documents are represented as random mixtures over latent topics, where each topic is characterized by a distribution over words. Matrix is a matrix that denotes the distribution of words over the topics. Thus

LDA assumes the following generative process for each document in a corpus :

1. Choose . 2. Choose . 3. For each of the words : (a) Choose a topic . (b) Choose a word from , a multinomial probability conditioned on the topic .

A -dimensional Dirichlet random variable can take values in the ()-simplex (a -vector lies in the ()-simplex if , )

Given the parameters and , the joint distribution of a topic mixture , a set of topics , and a set of words is given by:

,

where is simply for the unique such that .

Integrating over and summing over , we obtain the marginal distribution of a document:

Finally, taking the product of the marginal probabilities of single documents, we obtain the probability of a corpus:

Graphical Model

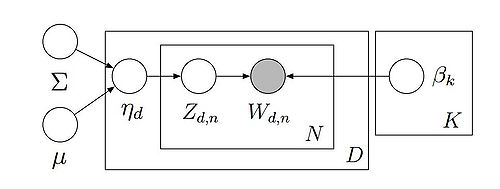

This generative process of LDA can also be viewed as a probabilistic graphical model as shown in figure [2] . As the figure makes clear, there are three levels to the LDA representation. The parameters and are corpuslevel parameters, assumed to be sampled once in the process of generating a corpus. The variables are document-level variables, sampled once per document. Finally, the variables and are word-level variables and are sampled once for each word in each document.

Approximate Posterior Inference

This generative model gives a nice perspective on how to model the corpus generation process. However we don't see all these in our data, rather we are given only a bunch of documents from where we should approximate all the parameters of the models discussed above. In essence, we need to work backward from the observation i.e. a document, and this process is called posterior inference.

So now suppose you have a set of documents. You’ve chosen some fixed number of topics to discover, and want to use LDA to learn the topic representation of each document and the words associated to each topic. How do you do this? One way (known as collapsed Gibbs sampling) is the following:

1. Go through each document, and randomly assign each word in the document to one of the topics . 2. Notice that this random assignment already gives both topic representations of all the documents and word distributions of all the topics (albeit not very good ones). 3. So to improve on them, for each document do the following: 3.1 Go through each word in 3.1.1 And for each topic , compute two things: 1) = the proportion of words in document that are currently assigned to topic , and 2) = the proportion of assignments to topic over all documents that come from this word . Reassign a new topic, where we choose topic with probability . It is worth noting that according to our generative model, this is essentially the probability that topic generated word , so it makes sense that we resample the current word’s topic with this probability. (Also, I’m glossing over a couple of things here, in particular the use of priors/pseudocounts in these probabilities.) 3.1.2 In other words, in this step, we’re assuming that all topic assignments except for the current word in question are correct, and then updating the assignment of the current word using our model of how documents are generated. 4. After repeating the previous step a large number of times, you’ll eventually reach a roughly steady state where your assignments are pretty good. So use these assignments to estimate the topic mixtures of each document (by counting the proportion of words assigned to each topic within that document) and the words associated to each topic (by counting the proportion of words assigned to each topic overall).

Here is an sample implementation of the above described process in R [4]:

lda.collapsed.gibbs.sampler <-

function (documents, K, vocab, num.iterations, alpha, eta, initial = NULL,

burnin = NULL, compute.log.likelihood = FALSE, trace = 0L,

freeze.topics = FALSE)

{

if (class(vocab) == "list") {

lengths <- as.integer(sapply(vocab, length))

all.vocab <- do.call(c, vocab)

}

else {

lengths <- as.integer(length(vocab))

all.vocab <- vocab

}

retval <- structure(.Call("collapsedGibbsSampler", documents,

as.integer(K), lengths, as.integer(num.iterations), as.double(alpha),

as.double(eta), NULL, NULL, NULL, NULL, NULL, NULL, NULL,

initial, as.integer(burnin), as.logical(compute.log.likelihood),

trace, as.logical(freeze.topics)), names = c("assignments",

"topics", "topic_sums", "document_sums", if (is.null(burnin)) NA else "document_expects",

NA, NA, NA, NA, if (compute.log.likelihood) "log.likelihoods" else NA))

colnames(retval$topics) <- all.vocab

retval

}

Layman's Explanation of LDA

In case the discussion above was a little eye-glazing, here’s another illustration of LDA from a different domain[5].

Suppose you’ve just moved to a new city. You’re a hipster and an anime fan, so you want to know where the other hipsters and anime geeks tend to hang out. Of course, as a hipster, you know you can’t just ask, so what do you do?

Here’s the scenario: you scope out a bunch of different establishments (documents) across town, making note of the people (words) hanging out in each of them (e.g., Alice hangs out at the mall and at the park, Bob hangs out at the movie theater and the park, and so on). Crucially, you don’t know the typical interest groups (topics) of each establishment, nor do you know the different interests of each person.

So you pick some number of categories to learn (i.e., you want to learn the most important kinds of categories people fall into), and start by making a guess as to why you see people where you do. For example, you initially guess that Alice is at the mall because people with interests in like to hang out there; when you see her at the park, you guess it’s because her friends with interests in like to hang out there; when you see Bob at the movie theater, you randomly guess it’s because the Z people in this city really like to watch movies; and so on.

Of course, your random guesses are very likely to be incorrect (they’re random guesses, after all!), so you want to improve on them. One way of doing so is to:

*Pick a place and a person (e.g., Alice at the mall). *Why is Alice likely to be at the mall? Probably because other people at the mall with the same interests sent her a message telling her to come. *In other words, the more people with interests in X there are at the mall and the stronger Alice is associated with interest X (at all the other places she goes to), the more likely it is that Alice is at the mall because of interest X. *So make a new guess as to why Alice is at the mall, choosing an interest with some probability according to how likely you think it is.

Go through each place and person over and over again. Your guesses keep getting better and better (after all, if you notice that lots of geeks hang out at the bookstore, and you suspect that Alice is pretty geeky herself, then it’s a good bet that Alice is at the bookstore because her geek friends told her to go there; and now that you have a better idea of why Alice is probably at the bookstore, you can use this knowledge in turn to improve your guesses as to why everyone else is where they are), and eventually you can stop updating. Then take a snapshot (or multiple snapshots) of your guesses, and use it to get all the information you want:

*For each category, you can count the people assigned to that category to figure out what people have this particular interest. By looking at the people themselves, you can interpret the category as well (e.g., if category X contains lots of tall people wearing jerseys and carrying around basketballs, you might interpret X as the “basketball players” group). *For each place P and interest category C, you can compute the proportions of people at P because of C (under the current set of assignments), and these give you a representation of P. For example, you might learn that the people who hang out at Barnes & Noble consist of 10% hipsters, 50% anime fans, 10% jocks, and 30% college students.

Statistical Assumptions

LDA, as described above, is based on few statistical assumptions that it makes. Let us first enumerate a few of them explicitly:

- Number of topics is known. Which is often prohibitive in terms of application context, as it is very hard to assume the number of topics the corpus may contain.

- The occurrences of topics in a document are correlated. LDA assumes no such correlations.

- Bag of words model. It assumes document just as a collection of words, where there is no order between the words.

- Bag of documents model. LDA also neglects the order of documents in a corpus. However in system logs, or scientific journal collections, there are clear reflections of evolving contents.

In next section, we will see how some of these assumptions can be relaxed and will look in detail one model CTM (Correlated Topic Model) which specifically relaxes the second assumption [6] .

Relaxing Assumptions of LDA

NCRP

The Nested Chinese Restaurant Process and Bayesian Nonparametric Inference of Topic Hierarchies[7], relaxes the assumption that number of topics must be known. In this models the topics are assumed to be evolving in a hierarchical manner. A nested Chinese restaurant process governs whether a topic is sampled under a parent node or any of the existing topic is chosen. Note that while estimating the right number of topics, it also infers some relation between topics and its sub-topics.

DTM

The dynamic topic model is proposed to relax the bag of document assumption. As mentioned, LDA assumes that documents are exchangeable within the corpus, and, for many corpora, this assumption is inappropriate. Scholarly journals, email, news articles, and search query logs all reflect evolving content. For example, the Science articles “The Brain of Professor Laborde” and “Reshaping the Cortical Motor Map by Unmasking Latent Intracortical Connections” may both concern aspects of neuroscience, but the field of neuroscience looked much different in 1903 than it did in 1991. The topics of a document collection evolve over time.

The dynamic topic model (DTM) captures the evolution of topics in a sequentially organized corpus of documents. In the DTM, data is divided by time slice, e.g., by year. Then documents of each slice is modeled with a K-component topic model, where the topics associated with slice t evolve from the topics associated with slice t − 1.

CTM

The correlated topic model is developed to explicitly mitigate the inability of LDA to capture topic correlation. Note that while NCRP models hierarchical correlation, it also fails to model generic correlation between the occurrence of topics. In many— indeed most—text corpora, it is natural to expect that the occurrences of the underlying latent topics will be highly correlated. In the Science corpus, for example, an article about genetics may be likely to also be about health and disease, but unlikely to also be about x-ray astronomy.

In the following section we will highlight how the generative process under CTM differs from that of LDA.

CTM

One limitation of LDA is that it fails to directly model correlation between the occurrence of topics. In LDA, this modeling limitation stems from the independence assumptions implicit in the Dirichlet distribution of the topic proportions. Specifically, under a Dirichlet, the components of the proportions vector are nearly independent, which leads to the strong assumption that the presence of one topic is not correlated with the presence of another. (We say “nearly independent” because the components exhibit slight negative correlation because of the constraint that they have to sum to one.) In the correlated topic model (CTM) [8], we model the topic proportions with an alternative, more flexible distribution that allows for covariance structure among the components. This gives a more realistic model of latent topic structure where the presence of one latent topic may be correlated with the presence of another. The CTM better fits the data, and provides a rich way of visualizing and exploring text collections.

The generative model of CTM

The key to the CTM is the logistic normal distribution. The logistic normal is a distribution on the simplex that allows for a general pattern of variability between the components. It achieves this by mapping a multivariate random variable from to the -simplex.

In particular, the logistic normal distribution takes a draw from a multivariate Gaussian, exponentiates it, and maps it to the simplex via normalization. The covariance of the Gaussian leads to correlations between components of the resulting simplicial random variable. The logistic normal was originally studied in the context of analyzing observed data such as the proportions of minerals in geological samples. In the CTM, it is used in a hierarchical model where it describes the hidden composition of topics associated with each document.

Let be a K-dimensional mean and covariance matrix, and let topics be K multinomials over a fixed word vocabulary, as discussed in LDA. The CTM assumes that an N-word document arises from the following generative process:

(1) Draw . (2) For : (a) Draw topic assignment from . (b) Draw word from .

The function that maps the real-vector η to the simplex is

Note that this process is identical to the generative process of LDA, except that the topic proportions are drawn from a logistic normal instead of drawig from a Dirichlet.

The graphical model

The generative described above for CTM, can be seen as the following graphical model.

As can be seen, the logistic normal is a distribution on the simplex that allows for a general pattern of variability between the components by transforming a multivariate normal random variable.

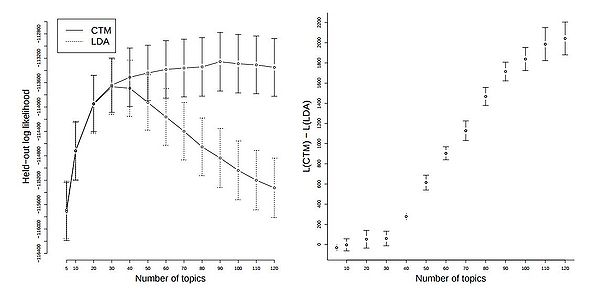

Comparing CTM and LDA

The CTM is more expressive than LDA because the strong independence assumption imposed by the Dirichlet in LDA is not realistic when analyzing real document collections. In this section we shall see how quantitative results confirm how the CTM superior to LDA.

Moreover, this higher order structure given by the covariance can be used as an exploratory tool for better understanding and navigating a large corpus. Figure 7 illustrates the topics and their connections found by analyzing the same Science corpus as for Figure 1. This gives a richer way of visualizing and browsing the latent semantic structure inherent in the corpus. However, the added flexibility of the CTM comes at a computational cost. Mean field variational inference for the CTM is not as fast or straightforward as the algorithm in Figure 5. In particular, the update for the variational distribution of the topic proportions must be fit by gradient-based optimization.

Also, note that in the case when there is no correlation between the topics, the covariance matrix learned will be sparse, as most of the values will be zero. Thus the CTM model will essentially work at least as good as LDA in terms of quality of the topic clusters identified. We shall see that in the floowing experiment section.

Held Out Data

In this set up some document from the training corpus, are held back in the training phase. After the model converges during training, document probabilities of these held out documents are computed using the converged model parameters. Essentially these probabilities suggest how likely are these documents according to the models generative process. Since these documents are part of the training set, then a better model will have a higher generation likelihood for these documents. Hence, they will have a higher probability.

As can be seen in the plots the CTM provides a better fit than LDA and supports more topics; the likelihood for LDA peaks near 30 topics while the likelihood for the CTM peaks close to 90 topics. The means and standard errors of the difference in log-likelihood of the models is shown at right; this indicates that the CTM always gives a better fit.

The left plot also indicates that the performance of CTM is never below LDA even when the number of topics are very small. When number of topics itself is small, it is hard to expect correlation between them. So CTM performs as good as LDA in such scenarios.

Inference Time

However, the added flexibility of the CTM comes at a computational cost. Posterior inference for the CTM is not as fast as that of LDA. In particular, the update for the variational distribution of the topic proportions must be fit by gradient-based optimization. Which makes it significantly slower than LDA

Coanclusion

To summarize, we saw two of the most widely used techniques in topic modeling. While LDA first realised that documents can be seen as mixtures of topics, it failed on many other realistic constraints. CTM mitigated one such constraint where topics can be correlated. It uses a covariance matrix to capture the correlation across the topics. It improved on the LDA model in such a way that even when in the case when there is no (or very little) correlation between topics, the model will work at least as good as LDA. However this came at a cost of higher inference time.

Annotated Bibliography

- ↑ Deerwester, Scott, et al. "Indexing by latent semantic analysis." Journal of the American society for information science 41.6 (1990): 391.

- ↑ Cambridge Machine Learning Summer School [1]

- ↑ Blei, Ng, and Jordan, M, LDA: Latent Dirichlet Allocation, JAIR 3 (2003).

- ↑ R implementations [2]

- ↑ Edwin Chen [3]

- ↑ Blei, David M., and John D. Lafferty. "Topic models." Text mining: classification, clustering, and applications 10.71 (2009): 34.

- ↑ Blei, David M., Thomas L. Griffiths, and Michael I. Jordan. "The nested chinese restaurant process and bayesian nonparametric inference of topic hierarchies." Journal of the ACM (JACM) 57.2 (2010): 7.

- ↑ Blei, David, and John Lafferty. "Correlated topic models." Advances in neural information processing systems 18 (2006): 147.