COGS200 Group21

Introduction

Background Information

There are five factors of decision making: emotional steadiness, oral communication skill, personality, past experience, and self-confidence (Chen, 2000). These five factors are involved in human decision making; however, they do not extend to Artificial Intelligence (AI), so an AI does not draw on them when making a decision. Although an AI such as IBM’s Watson does not use these factors to decide the best course of action, it does follow a set of procedural criteria in its decision-making process. The decision-making process comprises seven “ideal” procedural criteria; abiding by them gives a better chance that the decision maker’s objectives are attained.

“These seven criteria are:

- Thoroughly canvas a wide range of alternative courses of action.

- Survey the full range of objectives to be fulfilled and the values implicated by the choice.

- Carefully weigh whatever he knows about the costs and risks of negative consequences, as well as positive consequences, that could flow from each alternative.

- Search for new information relevant to further evaluation of the alternatives.

- Correctly assimilate and take account of any new information or expert judgement to which he is exposed, even when the information or judgement does not support the course of action he initially prefers.

- Re-examine the positive and negative consequences of all known alternatives, including those originally regarded as unacceptable, before making a final choice.

- Make detailed provisions for implementing or executing the chosen course of action, with special attention to contingency plans that might be required if various known risks were to materialize.”

(Janis & Mann, 1977)

A physician is capable of implementing all seven criteria; however, Watson, a rapid-learning computational system, outperforms the physician on the fifth criterion. Because Watson has access to more information, and to the most current information, it is able to consolidate and integrate that information to make the most educated decision. Usually, the more you know, the better you do, because you are able to use a broader base of knowledge to support your choices. Since Watson can access a wealth of knowledge much more quickly than a lone physician could, Watson would be expected to make a better-informed decision. This difference between what is known and what one individual knows is the knowledge transfer gap. Since Watson would be used as a tool by a physician, helping the physician meet the criteria, the confidence of the physician should increase. Failure to meet any of the seven criteria would result in a defective decision-making process, and the more defective the decision, the more unanticipated setbacks will occur, resulting in the decision maker experiencing post-decision regret.

Knowledge transfer is the conveyance of knowledge from one place, person, ownership, etc. to another (Major & Cordey-Hayes, 2000). The knowledge transfer gap is the growing divergence between the knowledge in the world and the knowledge held by one individual. With advancing technology, research is being published at a faster rate than we can absorb it. In the medical field, the transfer of research findings into practice is often a slow and haphazard process (Agency for Health Research and Quality, 2001). This means that patients are not getting the best care that is supported by scientific evidence. It is estimated that cancer outcomes could be improved by 30% if the treatment received matched what is currently known (Canadian Cancer Control Strategy, 2001). With a rapid-learning AI system, such as IBM’s Watson, the amount of knowledge that the system can access and interpret is substantial compared to the knowledge of one physician. Knowledge transfer can be divided into four subcategories: awareness, association, assimilation, and application. These subcategories mirror those of absorptive capacity, the ability of an organization to recognize the value of new information, to assimilate it and to commercially apply it (Cohen & Levinthal, 1990). In theory, a rapid-learning AI system such as Watson has a higher absorptive capacity than an individual human. The use of Watson would therefore bridge the knowledge translation gap by fulfilling the role of the knowledge receiver: it would translate and interpret external knowledge, which would then be passed on to the physician to contextualize.

There is ongoing debate about whether the use of technology widens or narrows the knowledge gap within a society. However, when directing our focus to a small and exclusive group, such as oncology physicians, we are operating under two assumptions: that information itself contributes to problem resolution, and that higher levels of information input lead to a general equalization of knowledge throughout the system (Donohue et al., 1975). Watson can be used to share knowledge, allowing individuals to access and utilize essential information that they may not otherwise have had access to or the ability to use. When the relationship between AI and an individual is thought of as a system, knowledge would diffuse from the origin (AI), where knowledge is in a higher concentration, to the destination (physician), where knowledge is in a lower concentration, to reach equilibrium. Man and machine will never be equal in computational power or speed; however, machines can aid man in making the most educated decision.

Project Outline

We are interested in whether the use of AI as an aid to oncology physicians would affect their confidence in decision-making when creating a treatment plan. We predict that the use of AI would decrease the length of time between when the physician familiarizes themselves with the case and diagnoses the patient, and when the initial treatment is implemented; this interval is how we will measure the time taken during the initial decision-making process. To test our hypothesis, we would measure the length of time between the physician diagnosing the patient and the implementation of treatment, and administer a survey to the physicians afterwards. The physician's confidence is investigated to evaluate the correlation between the use of an AI aid and confidence. The experiment would also evaluate whether the use of an AI aid changes confidence in the long term, by comparing the confidence of physicians over the ten-year period of study. We will observe their confidence after an initial decision is made, as well as throughout their patient’s treatment. We hypothesize that a physician's confidence will increase when the AI reaches a treatment decision similar to the physician's own, and decrease when the AI produces a decision incongruent with their own. We also expect that as treatment continues, confidence will stay the same or increase if the outcome of the patient improves, and decrease if the outcome of the patient worsens. In the execution of a decision, especially in cancer care, there are always some hoped-for gains that do not materialize and some undesirable losses that do, which can affect confidence. We have therefore chosen to evaluate confidence over a five-year period for each patient to account for changes due to the outcome of execution.

Importance of Our Research

Our research can be extrapolated to any situation that involves complex decision-making where the situation is fuzzy. A fuzzy set is defined as “a "class" with a continuum of grades of membership… Essentially, such a framework provides a natural way of dealing with problems in which the source of imprecision is the absence of sharply defined criteria of class membership rather than the presence of random variables” (Zadeh, 1965). Fuzzy set theory, proposed by Zadeh, has since been applied to finding the optimal decision in a given situation. Fuzziness is present in ill-posed problems, many of which are real-life problems in decision-making. The best decision should have the shortest distance from the ideal solution and the farthest distance from the negative-ideal solution in some geometrical sense. Thus, the best decision of an oncology physician would be a treatment decision in which the patient has the highest chance of entering remission, the lowest chance of the cancer metastasizing, and an even lower chance of death.
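The distance-based idea above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: it uses crisp (non-fuzzy) scores, plain Euclidean distance, and no criterion weights, and the option names and scores (`plan_a`, `plan_b`, `plan_c`) are hypothetical placeholders rather than clinical data.

```python
import math

def closeness(option, ideal, negative_ideal):
    """Relative closeness in [0, 1]; 1 means the option sits on the ideal solution."""
    d_pos = math.dist(option, ideal)           # distance to the ideal solution
    d_neg = math.dist(option, negative_ideal)  # distance to the negative-ideal solution
    if d_pos + d_neg == 0:
        # Ideal and negative-ideal coincide, so the options cannot be told apart.
        return 0.5
    return d_neg / (d_pos + d_neg)

# Hypothetical scores, higher is better on every criterion:
# (chance of remission, 1 - chance of metastasis, 1 - chance of death)
options = {
    "plan_a": (0.70, 0.80, 0.90),
    "plan_b": (0.60, 0.90, 0.95),
    "plan_c": (0.40, 0.60, 0.85),
}

# Ideal takes the best value seen on each criterion; negative-ideal takes the worst.
ideal = tuple(max(column) for column in zip(*options.values()))
negative_ideal = tuple(min(column) for column in zip(*options.values()))

# Rank options from closest-to-ideal to farthest.
ranking = sorted(
    options,
    key=lambda name: closeness(options[name], ideal, negative_ideal),
    reverse=True,
)
```

With these placeholder scores, an option that is worst on every criterion receives a closeness of 0, and the option ranked first is the one that balances a short distance to the ideal with a long distance to the negative-ideal.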

Our research is important because failure to make informed decisions is evident across all groups of decision makers. To make more informed decisions, from health care, to business, to public policy, we must increase our recognition of the knowledge translation gap and better implement action to narrow it. When decision conflicts occur in a situation where the degree of complexity exceeds the limits of cognitive abilities, there is a significant decrease in the adequacy of information processing, which is a direct effect of information overload and ensuing fatigue (Janis & Mann, 1979). The use of AI in oncology has the potential to decrease information overload, thus decreasing fatigue in the physician. The use of Watson may also have a large influence on long-term consequences, as Watson may be able to determine which treatment strategy will have fewer of them. It is no longer just about survival.

Methods

Our research would be conducted using a longitudinal experimental design with the purpose of investigating the efficacy of AI as an aid to a physician’s decision making. The AI we will be investigating is IBM’s Watson. Watson is being used in four hospitals in the United States to aid oncology physicians in determining the next steps of care in a patient’s treatment plan. Medically, Watson has only been used in oncology thus far and has yielded mixed results; however, little research has been conducted on the impact of the use of AI on physicians themselves.

To examine the decision-making process of physicians, we will narrow our scope by focusing on the efficacy of AI as an aid to the physician, instead of the efficacy of AI on treatment of the patient. Of the five beneficial factors of decision making (Chen, 2000), we have decided that the most important is the confidence of the physician. However, we cannot examine a physician’s confidence directly. We assume that physicians have a high degree of confidence in their decisions because the decisions are high stakes, so every available option must be assessed and evaluated regardless of the use of AI. To examine physician confidence, an intangible measure, we will therefore examine an outcome of confidence: the time it takes for a physician to reach a decision on the appropriate treatment plan, measured from when they have consolidated the details of the patient and their case. We will also take a quantitative measure of confidence over time via a survey.
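As a rough sketch of how the time-to-decision measure could be computed, the Python below takes the moment the case details were consolidated and the moment the treatment plan was decided, then averages the elapsed hours per condition. The function names, the condition labels (`ai_aided`, `control`), and the timestamps are our own illustrative assumptions and are not study data.

```python
from datetime import datetime
from statistics import mean

def decision_time_hours(case_consolidated_at, plan_decided_at):
    """Hours between consolidating the case details and deciding on a treatment plan."""
    elapsed = plan_decided_at - case_consolidated_at
    if elapsed.total_seconds() < 0:
        raise ValueError("decision cannot precede case consolidation")
    return elapsed.total_seconds() / 3600

def mean_decision_time_by_condition(cases):
    """cases: iterable of (condition, consolidated_at, decided_at) tuples."""
    hours = {}
    for condition, consolidated_at, decided_at in cases:
        hours.setdefault(condition, []).append(
            decision_time_hours(consolidated_at, decided_at)
        )
    return {condition: mean(values) for condition, values in hours.items()}

# Made-up timestamps purely for illustration.
example_cases = [
    ("ai_aided", datetime(2017, 1, 2, 9, 0), datetime(2017, 1, 3, 9, 0)),
    ("ai_aided", datetime(2017, 1, 5, 9, 0), datetime(2017, 1, 7, 9, 0)),
    ("control", datetime(2017, 1, 2, 9, 0), datetime(2017, 1, 4, 15, 0)),
]
summary = mean_decision_time_by_condition(example_cases)
```

A real analysis would also need to account for each physician contributing cases to both conditions; this sketch only shows how the raw interval could be derived.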

Parameters

Due to the unpredictable nature of oncology in terms of expected results, we must limit the participants selected. These participants would be chosen based on five parameters:

- Patients aged 25 to 40 with lung cancer. Lung cancer is the second most common cancer in both men and women, with an estimated 222,500 new cases in the United States in 2017: 116,990 in men and 105,510 in women. It is also the leading cause of cancer deaths in both women and men (American Cancer Society, 2017).

- All patients will have been diagnosed with large cell carcinoma (LCC), which makes up 15% of the non-small cell lung cancer (NSCLC) cases that account for 80% of lung cancer diagnoses (American Cancer Society, 2017). LCC usually originates in the epithelial cells of the lungs and is known to spread quickly, making it harder to treat (American Society of Clinical Oncology, 2017).

- The patients will have a tumour less than 3 cm in size and spherical in shape, meaning that the patients are in the early stage of LCC, when the number of treatment options is highest.

- The tumour must also be located in the periphery of the lung, next to either the chest wall or the diaphragm, which is typical of a patient with LCC.

- The patients would also be of similar ethnicity, income, and socioeconomic class.

Specificity of our patients is important to limit extraneous factors that could have an effect on health outcomes.

The physicians would not have any parameters that would limit their participation in the study; however, we would observe the following areas of interest:

1. How long the physician has been practicing. Based on the five beneficial factors of decision-making, physicians who have been practicing longer would make more successful decisions because of their additional years of past experience, which would also strengthen their emotional steadiness, oral communication skills, and personality.

2. When and where they attended medical school. A more recent graduate, or a physician who attended a technologically advanced institution, may be more comfortable using technology as a tool than a physician who has already developed a method of practice.

3. The innovative vision of the hospital. Hospitals that pride themselves on being on the ‘cutting edge’ would have physicians who align with their institutional values and who would therefore be more open to change, including change driven by innovative technology.

Because this study is theoretical, we are assuming that there are enough patients fitting our parameters to carry out the study.

Experimental Design

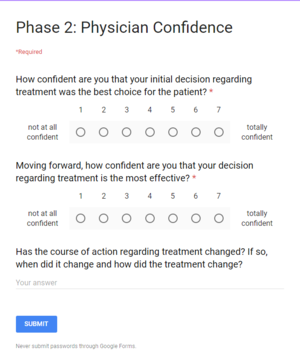

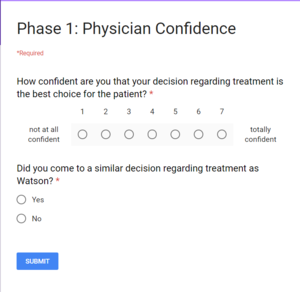

This study would be executed in hospitals selected from communities of 750,000 to 1.5 million people in Canada where a single cancer centre provides the majority of all treatments for the community. We would have two conditions: an experimental condition and a control condition. The experimental condition would be composed of the participants assigned to a physician who would be using Watson as a tool to aid their decision making. The control condition would be traditional patient/doctor care, where the patient is assigned a primary physician for care. An individual physician's success rate could confound the effectiveness of the treatment plan and, by extension, their confidence in it. To prevent this, all of the primary physicians would have multiple cases, with at least one case in the AI experimental condition and one case in the control condition.

This experiment would last a total of ten years. It is divided into two stages: initial confidence in the decision made, and confidence in the decision in hindsight while the patient is undergoing treatment, measured at the intervals described below. During the first stage of the study, initial decision confidence would be researched for five years. Following the five years of study on initial decision confidence, the physician would take part in the second stage, confidence in the decision in hindsight while the patient is undergoing treatment, which would continue for five years after the date the initial decision was made. The physician would therefore take part in both stages of the study, which would last five years for each individual patient.

The physician would be given a survey regarding their level of confidence (see Figure 1) at the time the treatment plan decision is finalized. The physician would be given a similar survey after the patient had undergone treatment for one month, three months, six months, nine months, 12 months, and each additional year after the initial treatment decision. The survey questions regarding confidence would remain unchanged; however, the follow-up survey would also include a section regarding changes to the initial treatment plan that have been or will be made (see Figure 2). Ideally, the patient would take part in the study and be monitored until their diagnosis changes, which will be defined as either when they enter remission or when the cancer metastasizes.
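The survey timing described above can be written out as a small Python helper. This is a sketch under our own assumptions: the function name `survey_schedule_months` is hypothetical, month 0 stands for the survey given when the treatment plan is finalized, and the default of five follow-up years reflects the five-year period per patient.

```python
def survey_schedule_months(follow_up_years=5):
    """Months after the treatment decision at which a confidence survey is due."""
    # Month 0 is the survey at the time of the decision, followed by the
    # one-, three-, six-, nine- and twelve-month follow-ups.
    first_year = [0, 1, 3, 6, 9, 12]
    # After the first year, one survey per additional year of follow-up.
    later_years = [12 * year for year in range(2, follow_up_years + 1)]
    return first_year + later_years

schedule = survey_schedule_months()
```

For a patient followed for the full five years, this yields ten survey points per physician and patient pair; a patient whose diagnosis changes earlier would simply stop partway through the list.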

Additional Experimental Considerations

Unfortunately, this study would need to be performed on actual patients in real time. Otherwise, the study would hold no merit, because the outcome of each patient would already have been inputted into Watson, and the ideal treatment and best decision in terms of care would already be known.

Additionally, because our patients would have to be real, they would all need to be aware that they have the opportunity to be a part of a study and that their physician’s decisions may be influenced by an AI aid. It would be stressed that the AI would not be replacing the physician, merely aiding the physician by reducing the knowledge gap between the entire field of oncology and the individual physician.

Expected Results

We would expect that physicians who are more confident would make a decision regarding the next steps of care faster than physicians who are less confident. We would also expect that confidence would increase, compared to the control, when the AI produces a treatment plan similar to the physician's (AI experimental condition). We would expect the physician’s confidence to decrease, compared to the control, if the decision by the AI is incongruent with the decision of the physician (AI experimental condition).

We would also expect incongruent decisions to increase the time required for the decision-making process. The rationale comes from various factors involved with the personal biases physicians may have. For instance, the level of overconfidence that a physician holds affects the effectiveness of cooperation (Croskerry & Norman, 2008). Within medical education, a consistent confidence bias has been observed, in which decision accuracy and confidence diverge depending on an individual’s cognitive style (Westbrook et al., 2005). With the addition of possibly contradicting treatment proposals, the physician's capacity for self-reflection is challenged and the time taken to reach an effective plan is lengthened. The amount of time required depends on how flexibly each physician can adjust to different input that may change their decision-making procedures.

Decision confidence, described as the degree of certainty with which a subject believes their choice is correct, is fundamental to our subjective conscious experience as well as to cognitive function (Insabato et al., 2010), and it is an important factor for physicians. It is generally considered a weakness and a sign of vulnerability for clinicians to appear unsure, because of the associated detrimental outcomes for the patient-physician relationship (Croskerry & Norman, 2008). Overall trends in decision confidence show that people tend to accept advice when it is offered in a situation where they are unsure of the outcome (Lee & Dry, 2006). This would further support our anticipated result of an increase in confidence in situations where the AI provides advice to the primary physician. In the AI experimental condition, the primary physician would be tested on their confidence in their treatment, allowing for a certain amount of uncertainty in the cases where treatment plans are not aligned. If, for example, their designated patients are not showing the results that the treatment was designed to achieve, we could expect the confidence of the physician to be reduced as time progresses and further steps need to be taken.

Physicians tend to come to decisions through their own knowledge and the medical literature that they have personally been exposed to; however, many have poor self-judgement in this respect (Westbrook et al., 2005). Through vigilant information processing, a decision maker may have learned from past experience that a high degree of selectivity is vital; otherwise, the decision maker will waste resources, create unnecessary delays, and add unproductive confusion (Janis & Mann, 1979).

We would expect to see the highest degree of confidence when the decision regarding treatment is initially made. Once the treatment plan is decided and executed, there are negative consequences that the physician did not foresee and expectations that are not fulfilled. Because the result of treatment would stray from what is expected, the physician would experience cognitive dissonance, which may present as hesitation, vacillation, feelings of uncertainty, or signs of acute stress, resulting in an overall unpleasant feeling of distress (Janis & Mann, 1979). These same symptoms would also be found in physicians in our experimental condition when there is decision conflict between the primary physician and the AI. Because the physician is experiencing cognitive dissonance, their confidence in their initial decision would decrease, as would their confidence in subsequent decisions relating to the treatment of their patient.

Discussion

The experimental design of our study focuses mostly on the impacts of this research with regard to decision-making psychology, as that is more readily quantified. The philosophical impacts are discussed in this section. The knowledge translation gap is a pertinent concept in the field of epistemology, especially with the increasing use of technology (e.g. search engines) that aids in decreasing this gap. The knowledge translation gap is intangible, and as such it cannot be divided into smaller tangible factors. The results of our study will therefore be analyzed in terms of the implications for knowledge translation as a whole, not for a specific, smaller component of knowledge translation.

By using AI as a tool in the decision-making process, the knowledge translation gap is narrowed: data are consolidated and encoded, allowing a decision to be made with the highest amount of available pertinent information. Based on our expected results, we would anticipate that the physician would be more confident when the AI system suggests a similar decision. Thus, the AI would be used in this case to successfully narrow the knowledge translation gap, acting as a mediator between all of the possibly relevant information available and the physician. In the inevitable cases where the AI system and the physician do not agree, the physician may suffer cognitive dissonance. One potential method of combating the cognitive dissonance arising in this situation would be to include a third-party objective physician or panel whose job is to sort through these discrepancies before they are passed along to the primary physician. In terms of knowledge translation, the third-party physician would identify the discrepancy in the results of the research that Watson would have consolidated. The third-party physician would therefore act as a preliminary decider, bridging the gap of knowledge translation. This presents an important opportunity for further research: by adding a third-party physician to the control setup to aid in decision making, the effect of the difference in collaboration (collaborating with AI versus no collaboration, the setup of this study) could be minimized. Furthermore, one could investigate the effect of a physician's confidence on the length of time it takes for the patient and their family to make a decision regarding treatment.

Within our study, there are various other possible factors that could be further investigated. We anticipate that over the five years the AI system will be used within the hospital, the physicians will adapt to its use. Hence, we expect that their trust in Watson would increase over time, and thus their confidence using Watson as a tool in decision-making would also increase over the course of the study. Additionally, younger physicians, who are likely more adept with technology and who grew up in a culture more amenable to the use of computational technology, would trust AI more than a physician who is less familiar with technology.

The trust between a physician and the AI, as well as the trust between a patient and said AI, inherently affects their confidence in the ability of the technology to come to a valid decision. The use of AI as a tool would lead to a paradigm shift in the way we view healthcare not only on an individual level, between AI and a physician, but also on a societal level within a hospital. Further research should be conducted comparing the trust an individual has in AI compared to the trust an individual has in another individual, testing whether or not we trust machines more than other humans, when each is given the same task.

Furthermore, ethical considerations would necessarily be involved in this study, as both the physician and the patient would be entrusting a “learning” machine with vast troves of sensitive, personal medical information. Due to the nature of the technology involved, specifically that inputted information will be stored and referenced potentially indefinitely, care must be taken to anonymize data when possible. Patients would presumably have to consent to the machine storing and interpreting their medical data, just as patients consent to the use of their medical data by physicians and other medical professionals they interact with. As artificial intelligence algorithms are expanded, this consideration grows in importance due to the ever-present risk of data leakage and, more generally, the use of personal data in an unwanted manner.

For our research purposes, Watson would only be used as a tool to aid physicians, not to replace them. Watson is a fairly new computational tool that has yet to be perfected; hence, physicians would be unable to rely on it completely, and it would likely not be a particularly effective ‘crutch’ on which to base their decision-making. Further research on this topic should be conducted in the future, when the Watson AI becomes more advanced, to ascertain whether it could be given more of a decision-making role, taking more of the burden off individual physicians, or even replacing some medical personnel completely.

Conclusion

If our anticipated results are correct and our experiment ultimately yields results indicating an increase in physician confidence due to collaboration with the Watson AI on decision-making, then it is reasonable to expect that similar rapid-learning AI systems would be effective in bridging the knowledge gap in other fields. Generally speaking, translating knowledge from academia to organizations that intend to use it to benefit society would be expected to lead to economic growth and social development. These societal implications stress the importance of making knowledge accessible and indicate how AI can be a beneficial tool for progress. In order to assess how beneficial a tool AI is for societal progress, similar studies should be conducted in other fields where a knowledge gap exists, such as business management and policy making.

Bibliography

Agency for Health Research and Quality (2001). Translating research into practice (TRIP)-II. Washington, DC: Agency for Health Research and Quality. http://www.ahrq.gov/research/trip2fac.htm

Canadian Cancer Control Strategy (2001). Canadian strategy for cancer control. Draft synthesis report. Ottawa, Ontario: Canadian Cancer Control Strategy

Cancer.org. (2017). What Is Non-Small Cell Lung Cancer?. [online] Available at: https://www.cancer.org/cancer/non-small-cell-lung-cancer/about/what-is-non-small-cell-lung-cancer.html [Accessed 27 Nov. 2017].

Chen, C. (2000). Extensions of the TOPSIS for group decision-making under fuzzy environment. Fuzzy Sets and Systems, 114(1), pp.1-9.

Cohen, W. and Levinthal, D. (1990). Absorptive Capacity: A New Perspective on Learning and Innovation. Administrative Science Quarterly, 35(1), p.128.

Croskerry, P. and Norman, G. (2008). Overconfidence in Clinical Decision Making. The American Journal of Medicine, 121(5), pp. S24-S29. Available at: http://www.sciencedirect.com.ezproxy.library.ubc.ca/science/article/pii/S0002934308001526

Donohue, G., Tichenor, P. and Olien, C. (1974). Mass Media and Information Flow: The Knowledge Gap Hypothesis Reconsidered. Public Opinion Quarterly, 38(3), pp.483-483.

Ettema, J., Brown, J. and Luepker, R. (1983). Knowledge Gap Effects in a Health Information Campaign. Public Opinion Quarterly, 47(4), p.516.

Graham, I., Logan, J., Harrison, M., Straus, S., Tetroe, J., Caswell, W. and Robinson, N. (2006). Lost in knowledge translation: Time for a map?. Journal of Continuing Education in the Health Professions, 26(1), pp.13-24.

En.wikipedia.org. (2017). Large-cell lung carcinoma. [online] Available at: https://en.wikipedia.org/wiki/Large-cell_lung_carcinoma [Accessed 27 Nov. 2017].

Janis, I. and Mann, L. (1979). Decision making. New York: Free Press.

Insabato, A., Pannunzzi, M., Rolls, E. and Deco, G. (2010). Confidence-Related Decision Making. Journal of Neurophysiology, 104(1), pp. 539-547. Available at: http://jn.physiology.org.ezproxy.library.ubc.ca/content/104/1/539

Lee, M. and Dry, M. (2006). Decision Making and Confidence Given Uncertain Advice. Cognitive Science, 30(6), pp. 1081-1095. Available at: http://onlinelibrary.wiley.com.ezproxy.library.ubc.ca/doi/10.1207/s15516709cog0000_71/abstract

Lee, Z. and Lee, J. (2000). An ERP implementation case study from a knowledge transfer perspective. Journal of Information Technology, 15(4), pp.281-288.

Major, E. and Cordey‐Hayes, M. (2000). Knowledge translation: a new perspective on knowledge transfer and foresight. Foresight, 2(4), pp.411-423.

Pathologyoutlines.com. (2017). Large cell undifferentiated carcinoma. [online] Available at: http://www.pathologyoutlines.com/topic/lungtumorlargecell.html [Accessed 27 Nov. 2017].

Radiologyinthai.blogspot.ca. (2017). 2009 Non-Small Cell Lung Cancer Staging System (3). [online] Available at: http://radiologyinthai.blogspot.ca/2009/12/2009-non-small-cell-lung-cancer-staging_30.html [Accessed 27 Nov. 2017].

Straus, S., Tetroe, J. and Graham, I. (2009). Defining knowledge translation. Canadian Medical Association Journal, 181(3-4), pp.165-168.

Szulanski, G. (2000). The Process of Knowledge Transfer: A Diachronic Analysis of Stickiness. Organizational Behavior and Human Decision Processes, 82(1), pp.9-27.

Triantaphyllou, E. (2010). Multi-criteria decision making methods. Dordrecht [u.a.]: Kluwer, pp.1-21.

Westbrook, J., Gosling, S. and Coiera, E. (2005). The Impact of an Online Evidence System on Confidence in Decision Making in a Controlled Setting. Medical Decision Making, 25(2), pp. 178-185. Available at: http://journals.sagepub.com.ezproxy.library.ubc.ca/doi/abs/10.1177/0272989X05275155

Zadeh, L. (1965). Fuzzy sets. Information and Control, 8(3), pp.338-353.